vRealize Automation Cluster IP Change

This post goes through the steps I took to change the IP addresses of a vRealize Automation cluster (which will eventually be renamed Aria Automation) in my lab environment.

Environment setup

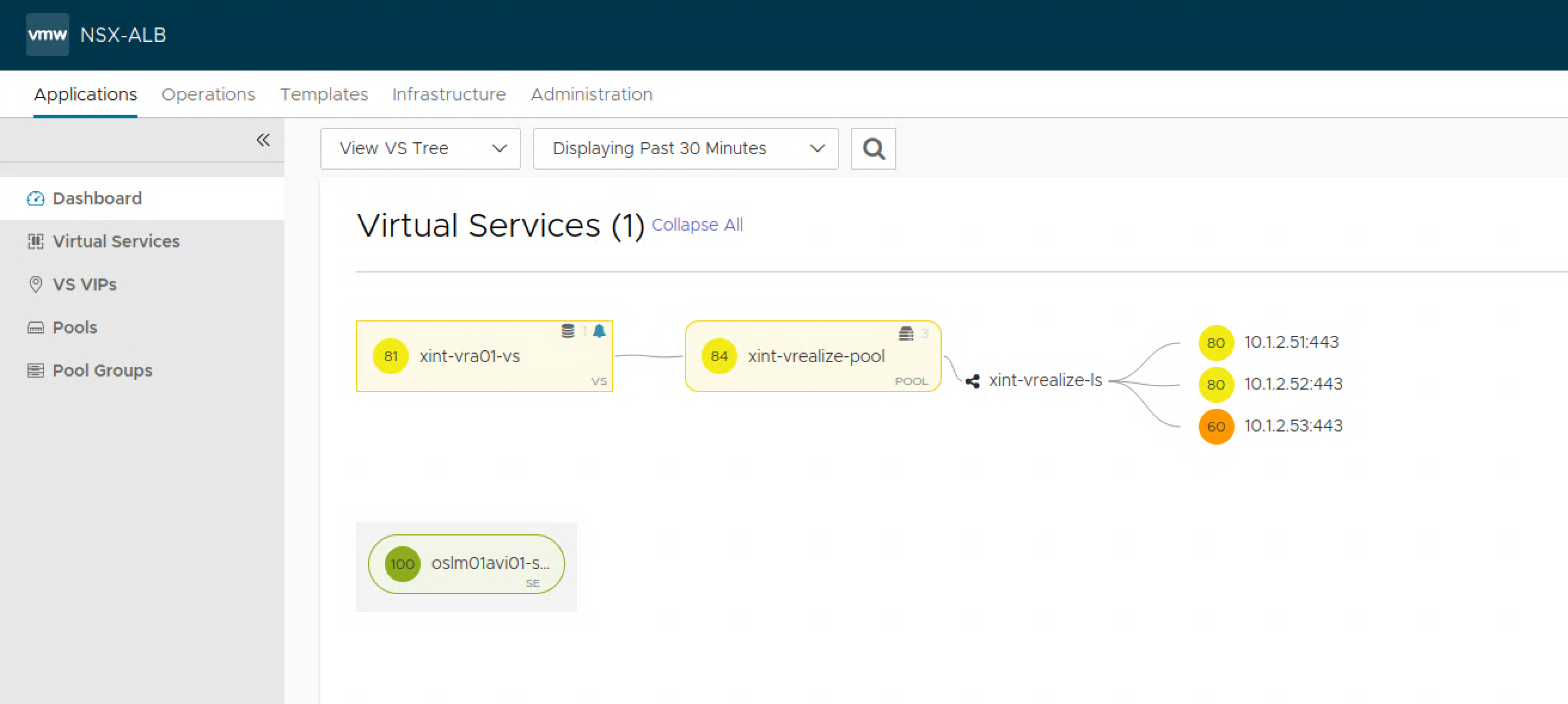

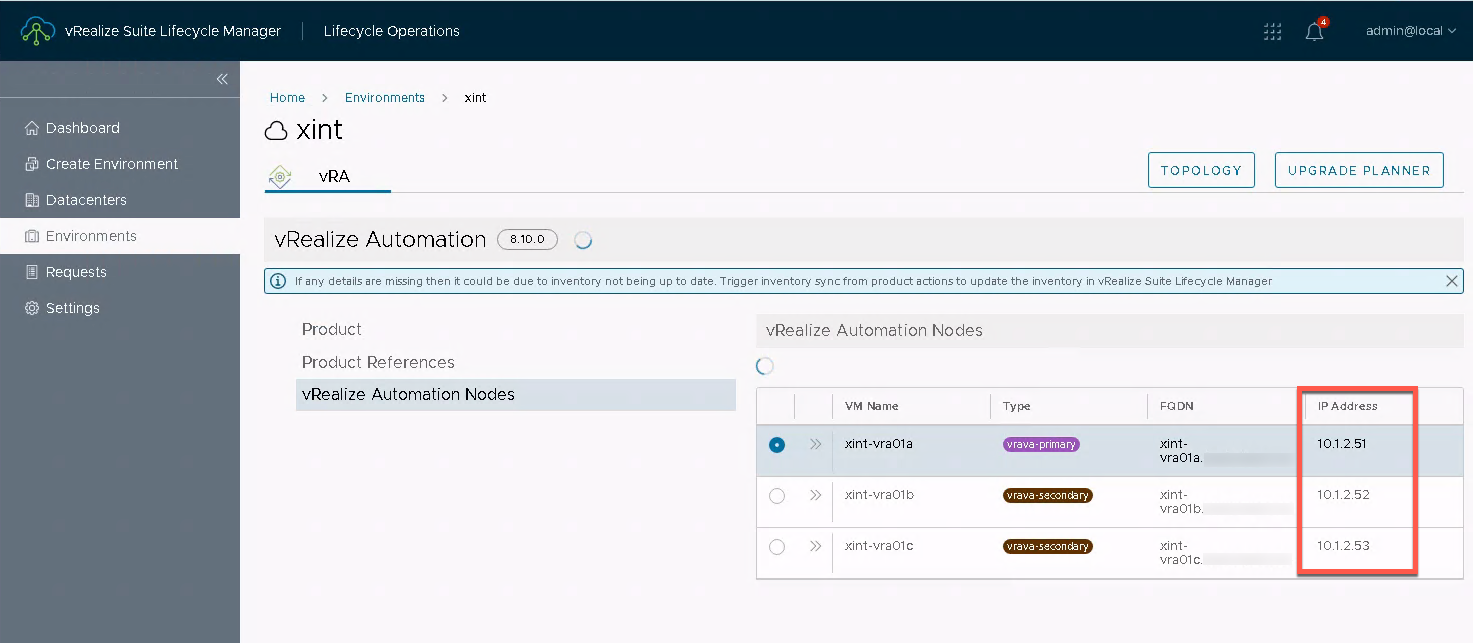

The vRA cluster is running version 8.10.0 and has been deployed through vRealize Suite Lifecycle Manager (vRSLCM). The cluster consists of three nodes, and a Virtual IP (VIP) is set for the Load Balancer in front. The Load Balancer in use is the VMware NSX Advanced Load Balancer (Avi Networks).

In addition, there's a VMware Workspace ONE Access (VMware Identity Manager) node and a vRSLCM node, which also need their IP addresses changed. For brevity, I've covered those two in separate blog posts.

All nodes are connected to the same NSX Overlay network segment.

Step-by-step process for changing IP details

VMware has a documentation article describing the necessary steps, which I followed in this process. Note that it covers the vRA cluster only.

As mentioned, I also changed the IP addresses of vRSLCM and vIDM/WSA in this process, since they were all running on the same network. See the corresponding blog posts for the details specific to those.

For completeness, here is the whole process:

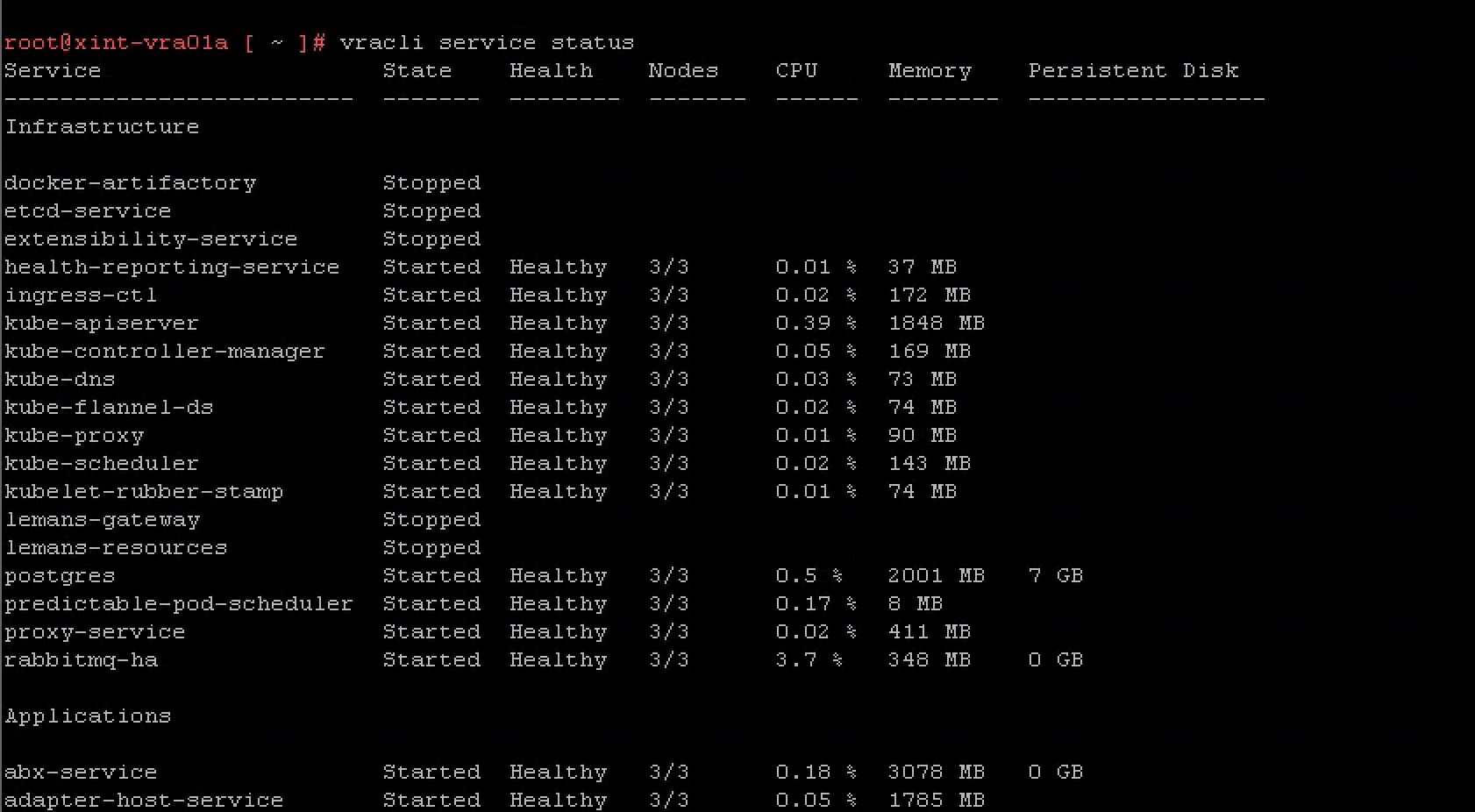

- Verify status on all nodes and apps

- Power off and snapshot all nodes

- Power on and verify status again

- Perform IP change on vRA

- Perform IP change on vIDM

- Perform IP change on vRSLCM

- Change underlying network

- Verify connectivity

- Verify vRSLCM and vIDM

- Change IP on vRA Load Balancer

- Update DNS

- Update certificates (optional)

- Start vRA cluster and verify

- Finished

Note that this process involves considerable downtime for the individual apps and nodes. I could have brought some of them back up immediately after the IP change by migrating them to a different virtual network with the correct IP subnet. However, since I wanted to keep the existing NSX segment (so that its ID wouldn't change), I accepted a longer downtime and kept all nodes on the existing network.

Snapshot

After verifying the status of the environment it's a good idea to take a snapshot of the nodes in question, preferably with the nodes powered off.

Power on and verify cluster status

After taking a snapshot we'll bring the vRA cluster back online to verify that everything is running as it should.

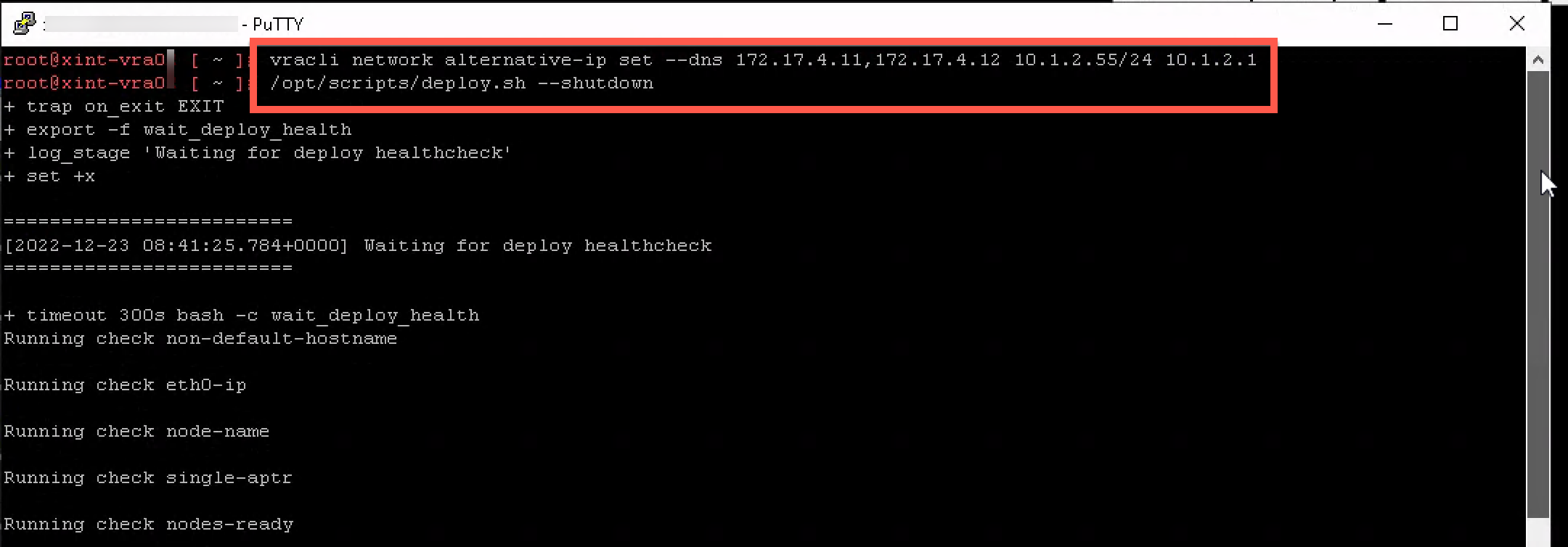

Set Alternative IP on vRA

The IP change procedure is derived from the one used when recovering the cluster to a new network with VMware Site Recovery Manager (SRM) in a disaster recovery scenario.

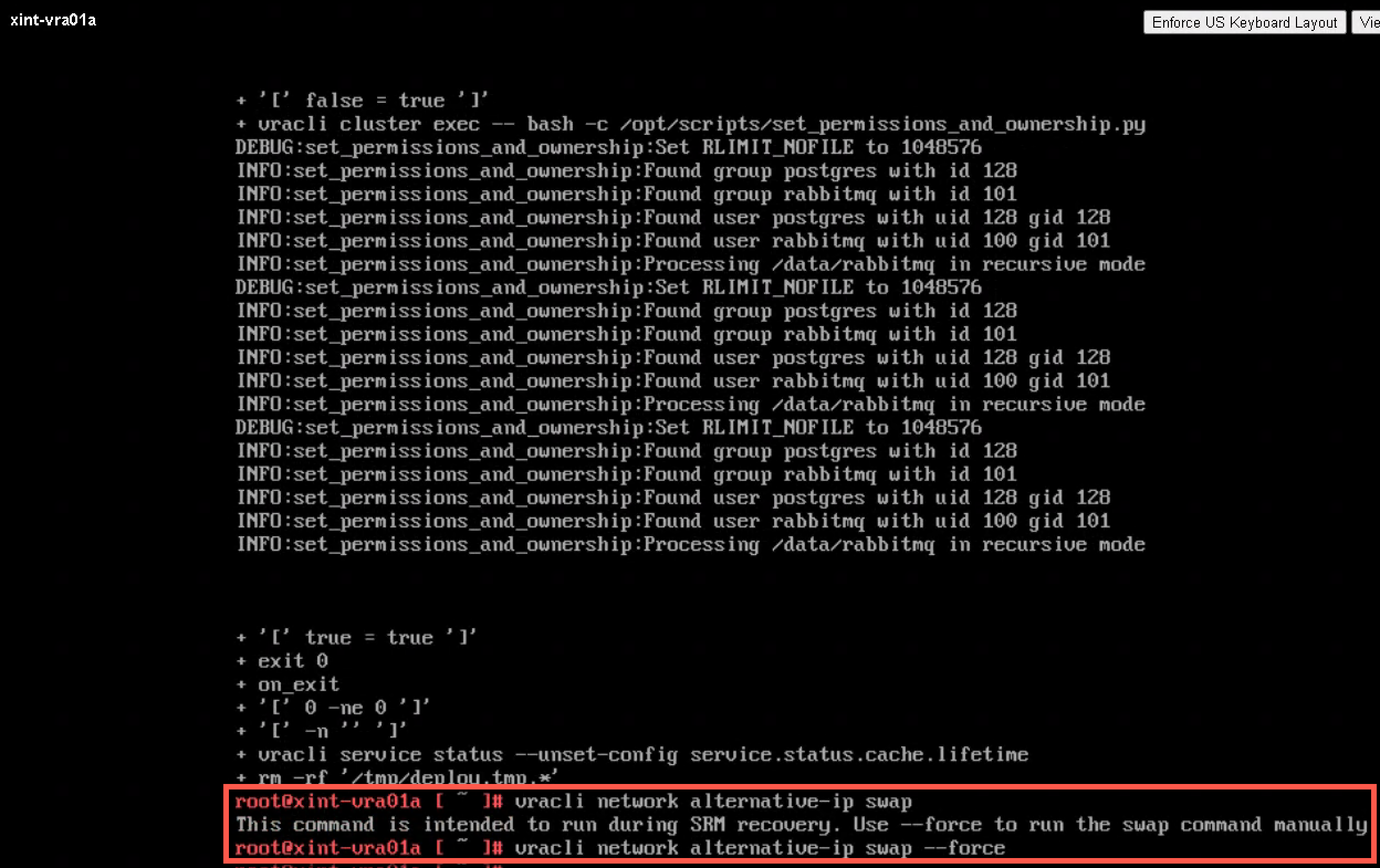

While the cluster is still up, we'll add an alternative IP for each node. Note that the screenshot also shows the cluster being shut down. Make sure you run the alternative IP command on ALL nodes in the cluster FIRST, before shutting the cluster down.
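Since the alternative IP has to be correct on every node before the cluster is shut down, it can be worth sanity-checking the planned addresses first. The Python sketch below is not part of the VMware procedure; it only validates a plan you write down yourself. The node names, the old range 192.0.2.0/24, the new range 198.51.100.0/24 and the gateway are hypothetical documentation addresses, not the ones from my lab.

```python
import ipaddress


def validate_ip_plan(plan, new_subnet, gateway):
    """Return a list of problems found in a node re-IP plan (empty if none).

    plan maps node name -> (old_ip, new_ip). All values are hypothetical
    examples supplied by the caller; nothing is read from vRA itself.
    """
    problems = []
    net = ipaddress.ip_network(new_subnet)
    gw = ipaddress.ip_address(gateway)
    if gw not in net:
        problems.append(f"gateway {gw} is outside {net}")

    seen = {}  # new address -> node that claimed it first
    for node, (old_ip, new_ip) in plan.items():
        new = ipaddress.ip_address(new_ip)
        if new not in net:
            problems.append(f"{node}: {new} is outside {net}")
        # Network, broadcast and gateway addresses cannot be used by a node.
        if new in (net.network_address, net.broadcast_address, gw):
            problems.append(f"{node}: {new} is a reserved or gateway address")
        if new == ipaddress.ip_address(old_ip):
            problems.append(f"{node}: new IP is the same as the old IP")
        if new in seen:
            problems.append(f"{node}: {new} is already planned for {seen[new]}")
        else:
            seen[new] = node
    return problems
```

An empty list only means the plan passed these basic checks; for example, `validate_ip_plan({"vra-01": ("192.0.2.11", "198.51.100.11")}, "198.51.100.0/24", "198.51.100.1")` returns `[]`.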

After the cluster has been stopped, we can swap each node's IP to the alternative one. This must be done on ALL nodes.

Note that I had to run the command with the --force flag, although this was not specified in the documentation at the time of writing.

Restart nodes and verify connectivity

After the cluster has been stopped and the IP addresses have been changed, we'll reboot all nodes to make sure the new network details stick.
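To verify connectivity after the reboot, a simple TCP check against each node's new address can complement pinging or logging in. This is a minimal sketch assuming SSH (port 22) is reachable on the appliances; the node names and addresses are hypothetical, and a failed check can also mean that a service simply isn't listening yet rather than that the network is wrong.

```python
import socket


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection raises OSError (timeouts included) on failure.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_nodes(nodes, port=22, timeout=3.0):
    """Map each node name to whether its new IP accepts TCP connections on port.

    Port 22 (SSH) is an assumption; use any port your appliances expose.
    """
    return {name: is_reachable(ip, port, timeout) for name, ip in nodes.items()}
```

Calling `check_nodes({"vra-01": "198.51.100.11", "vra-02": "198.51.100.12", "vra-03": "198.51.100.13"})` with these hypothetical addresses returns one True/False value per node.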

Change network

Now we can change the underlying network, either by reconfiguring the actual network or by changing the virtual port group the VMs are connected to. After changing the network, we reboot the VMs again to verify that the network details stick.

Note that in my case I waited to change the underlying network until all nodes in both vIDM and vRSLCM had also been changed.

Change the Load Balancer

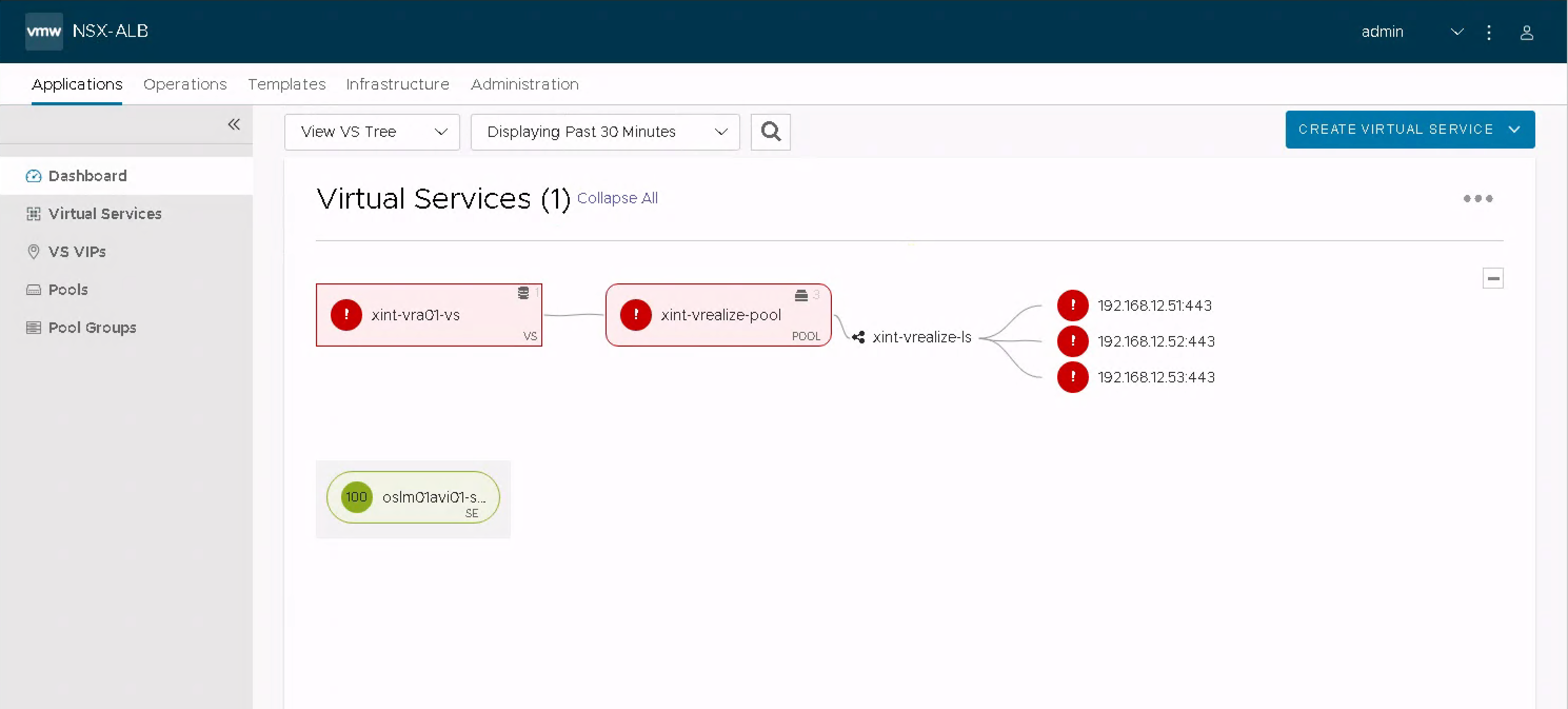

Before starting the cluster, it's a good idea to update the IP on the load balancer. In my case the load balancer is a software-based platform, NSX Advanced Load Balancer (Avi Vantage).

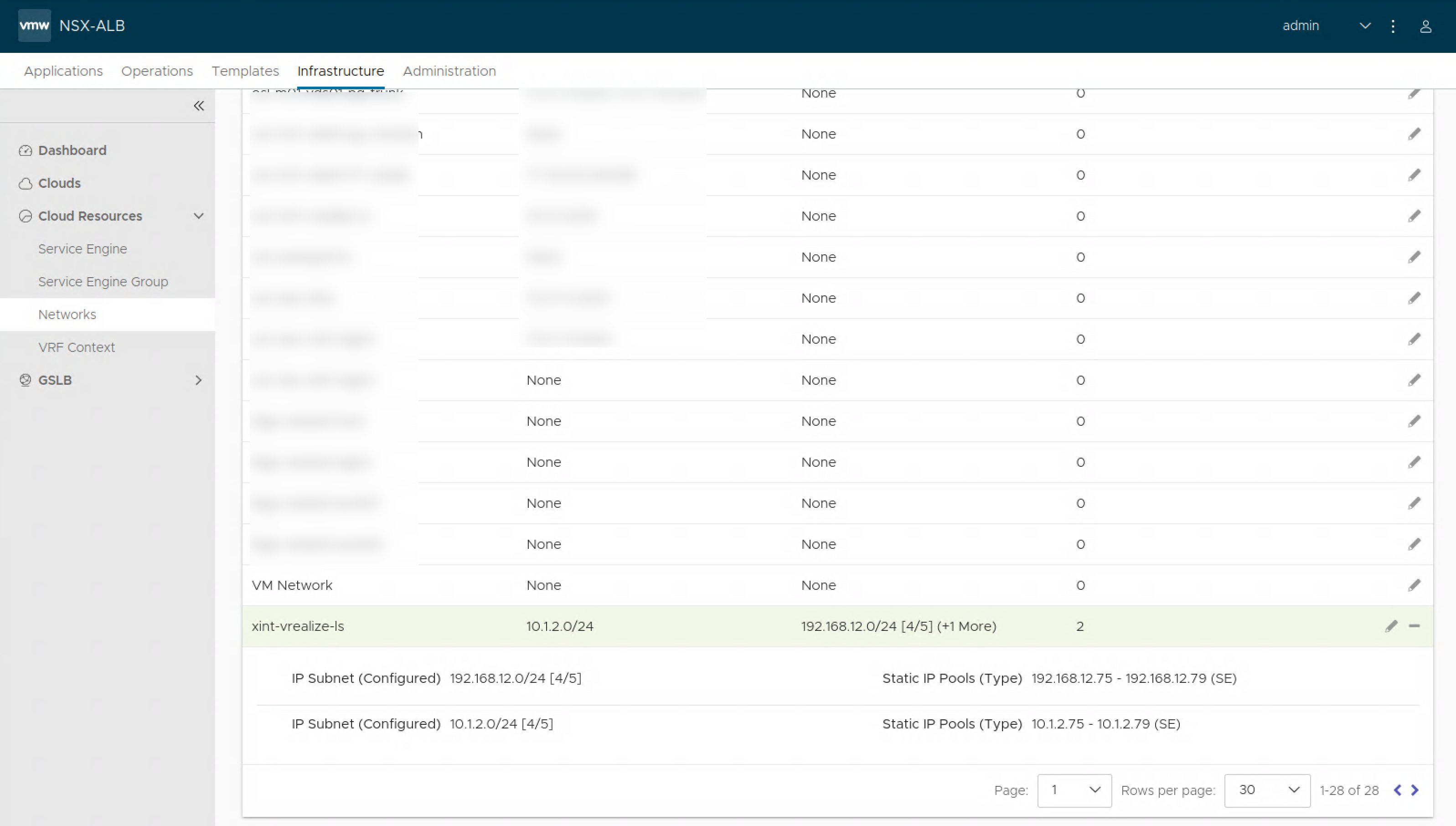

First, we'll update the underlying network segment to reflect the new IP range. If you switch to a new or different network, this step will differ.

Depending on your infrastructure, you might also have to change the routing used by the Avi platform.

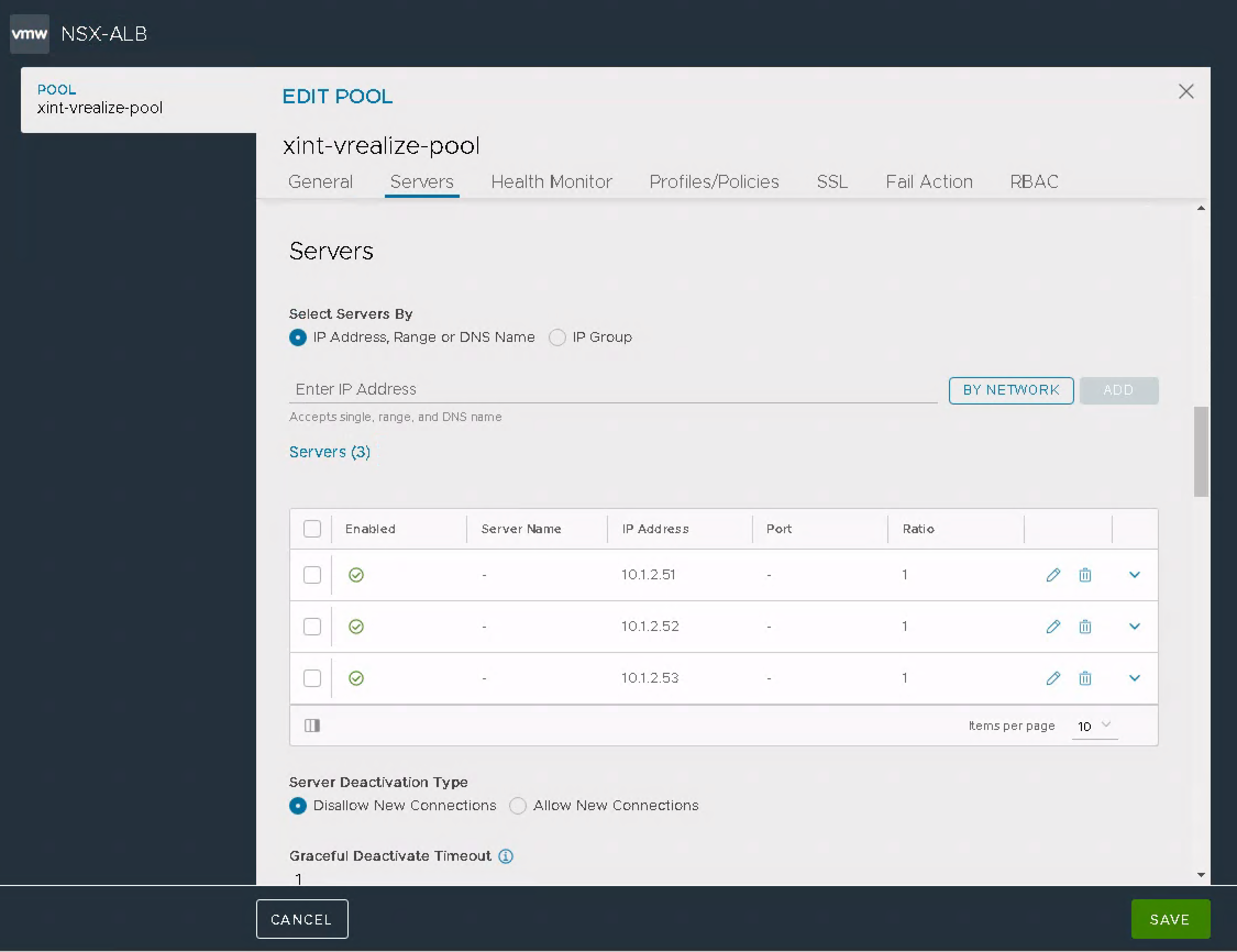

Next, we update the server pool to reflect the new node IPs.
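If you'd rather prepare the pool change as data, or just want to double-check it, the sketch below rewrites the member addresses in a pool definition exported as JSON and loaded into a Python dict. It assumes the Avi Pool object layout, where each entry in `servers` holds its address under `ip.addr`; verify that against the API reference for your controller version before relying on it. The old-to-new mapping is hypothetical, and pushing the result back to the controller is deliberately left out.

```python
import copy


def remap_pool_servers(pool, ip_map):
    """Return a copy of pool with each servers[].ip.addr translated via ip_map.

    Assumes an Avi-style pool document (field names to be checked against
    your controller's API). Raises KeyError for a member missing from
    ip_map, so a forgotten node is not silently left on its old IP.
    """
    updated = copy.deepcopy(pool)  # leave the original export untouched
    for server in updated.get("servers", []):
        server["ip"]["addr"] = ip_map[server["ip"]["addr"]]
    return updated
```

Raising on an unmapped member is a deliberate choice: a half-updated pool would keep sending traffic to an address that no longer exists.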

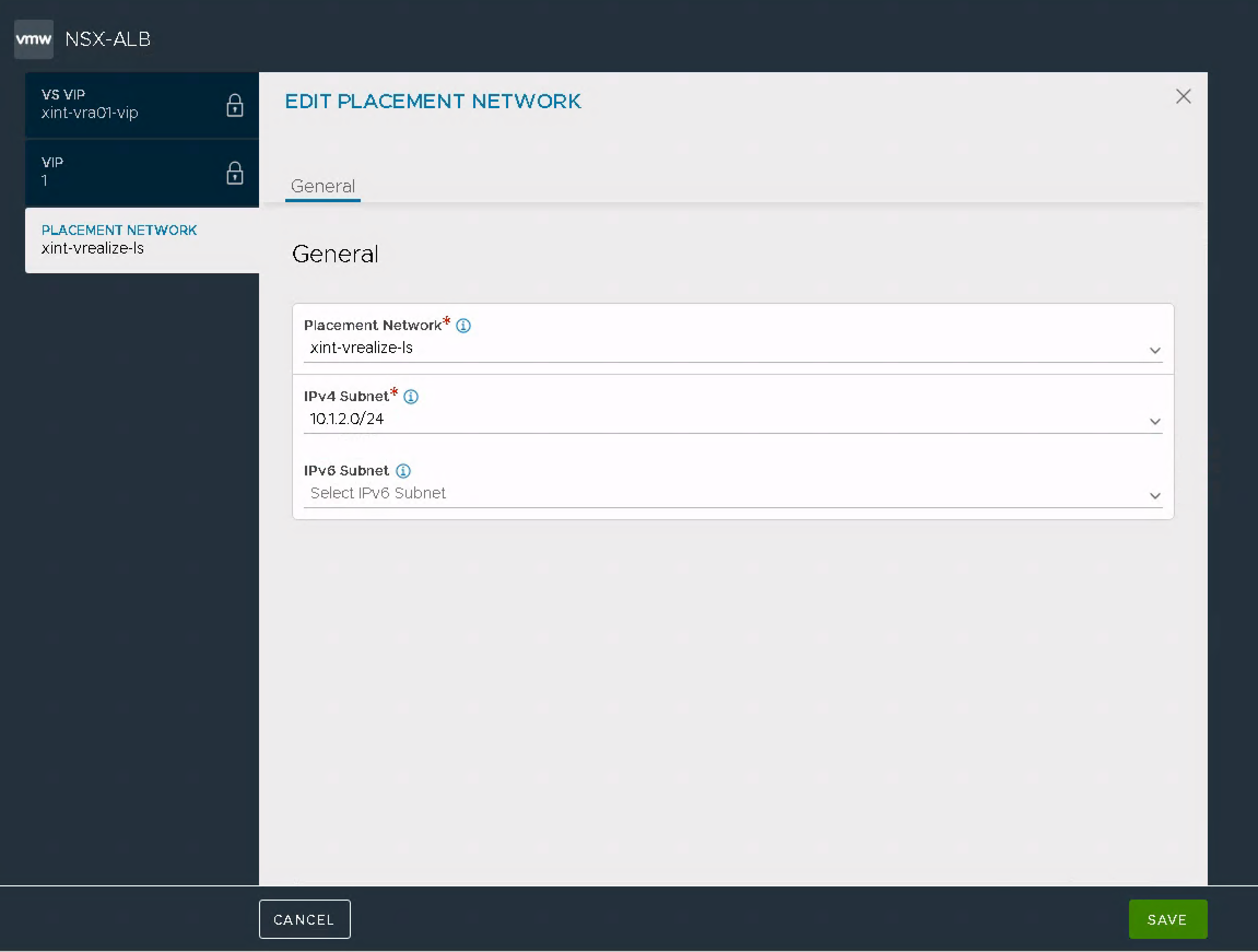

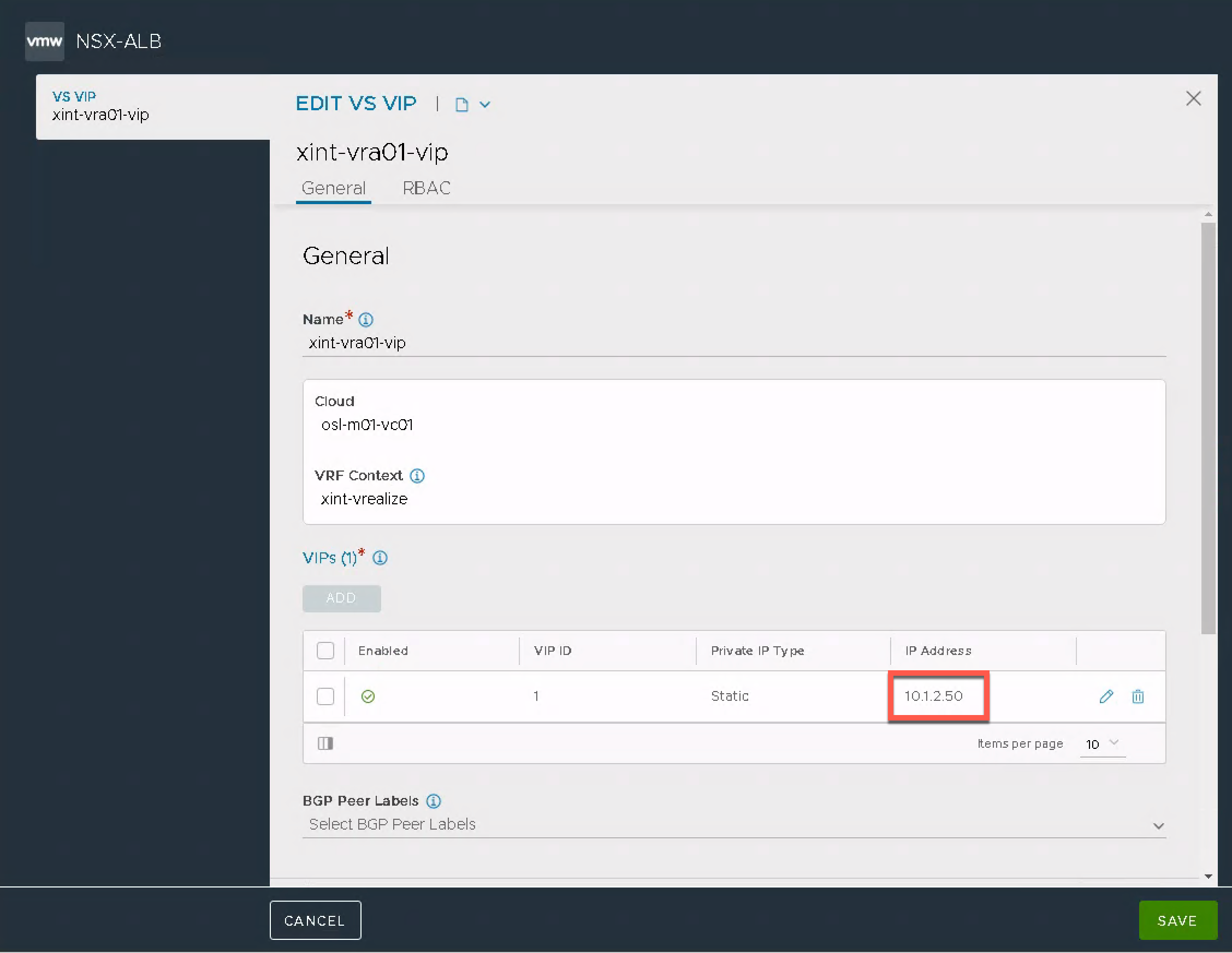

Then we change the VIP's IP address and placement network.
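In a simple single-segment layout like this lab, the VIP sits on the same network as the nodes, so the new VIP should fall inside the placement network's subnet and must not reuse a node address. The sketch checks just those two conditions; the addresses are hypothetical, and setups that advertise VIPs through routing (for example BGP) may legitimately place the VIP elsewhere.

```python
import ipaddress


def check_vip(vip, placement_subnet, pool_member_ips):
    """Return problems with a planned VIP (empty list if none found).

    Assumes a single-segment design where the VIP shares the placement
    network with the pool members.
    """
    problems = []
    addr = ipaddress.ip_address(vip)
    net = ipaddress.ip_network(placement_subnet)
    if addr not in net:
        problems.append(f"VIP {addr} is outside placement network {net}")
    members = {ipaddress.ip_address(ip) for ip in pool_member_ips}
    if addr in members:
        problems.append(f"VIP {addr} collides with a pool member address")
    return problems
```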

After a while, the virtual service should be back up.

I struggled for some time to get the virtual service to actually work, even though it looked fine in Avi. After restarting the Service Engines and the whole vRA cluster, and updating certificates, all to no avail, I decided to recreate the whole virtual service, which solved the problem.

Update DNS

Make sure to update your DNS server with the new IP addresses of the vRA cluster nodes and VIP.
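Before starting the cluster, it can help to confirm that the names actually resolve to the new addresses from the machine you're working on, since cached records can linger until their TTL expires. The sketch uses Python's `socket.gethostbyname`, which returns a single IPv4 address per name; the FQDNs and addresses are hypothetical. The resolver is a parameter so the function can be tested without a real DNS server.

```python
import socket


def dns_mismatches(expected, resolve=socket.gethostbyname):
    """Return {fqdn: (expected_ip, actual_ip)} for names that don't match.

    actual_ip is None when the lookup fails. expected maps each
    (hypothetical) FQDN to the new address it should resolve to.
    """
    mismatches = {}
    for fqdn, want in expected.items():
        try:
            got = resolve(fqdn)
        except OSError:  # socket.gaierror is a subclass of OSError
            got = None
        if got != want:
            mismatches[fqdn] = (want, got)
    return mismatches
```

An empty result means every listed node and VIP name resolves to its new address from this machine.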

Start vRA cluster and verify connectivity

Now we can start the vRA cluster, and after a while it should be up and running.
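Since the cluster takes a while to come up, a small polling helper can save some manual refreshing. This is a generic sketch: `check` is any function you supply that returns True once your chosen readiness signal is present, and the default timeout and interval are arbitrary assumptions. An open port on the VIP is only a rough signal that services are starting, so the actual verification should still be done in the vRA UI.

```python
import time


def wait_until(check, timeout=1800.0, interval=30.0,
               clock=time.monotonic, sleep=time.sleep):
    """Call check() until it returns True or timeout seconds have elapsed.

    Returns True on success and False on timeout. Any exception raised by
    check() is treated as "not ready yet". clock and sleep are parameters
    so the loop can be tested without real waiting.
    """
    deadline = clock() + timeout
    while True:
        try:
            if check():
                return True
        except Exception:
            pass  # e.g. connection refused while services are starting
        if clock() >= deadline:
            return False
        sleep(interval)
```

For example, `check` could open and close a TCP connection to the (hypothetical) VIP FQDN on port 443 with `socket.create_connection`.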

Verify vRSLCM

Since the cluster was deployed through vRSLCM, we'll trigger an inventory sync and verify that the details are updated.

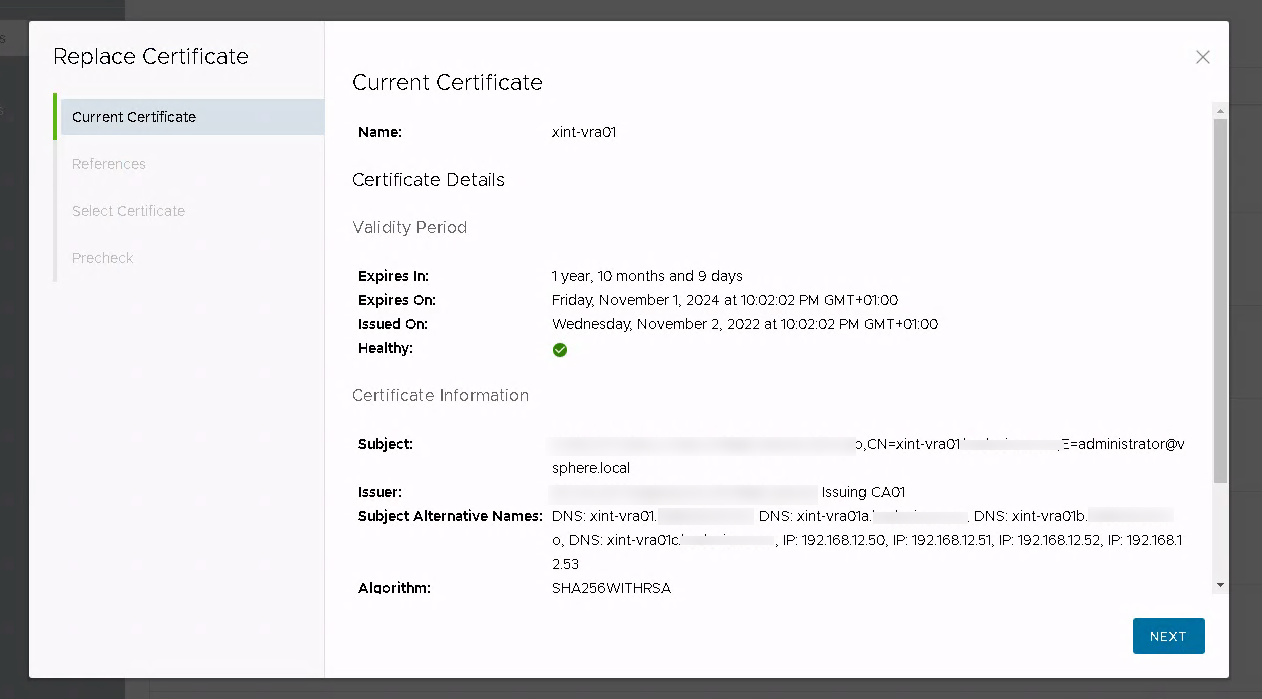

Update certificates

We'll also have to update the certificates in use if they include the IP addresses of the vRA nodes or the load balancer. After generating a new certificate, this is pretty straightforward using the vRSLCM Locker and its replace functionality on the existing certificate.
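To find out whether a certificate lists IP addresses at all, and whether any old ones remain after the replacement, the sketch below inspects the `subjectAltName` entries in the dictionary returned by Python's `ssl.SSLSocket.getpeercert()`. That dictionary is only populated when the certificate was validated, so a lab with a private CA needs its CA file passed as `cafile`. The hostnames and addresses are hypothetical, and only IPv4 SANs are considered.

```python
import socket
import ssl


def fetch_peer_cert(host, port=443, cafile=None, timeout=5.0):
    """Fetch the server certificate as a getpeercert() dictionary.

    Uses a verifying context; without a trusted CA (cafile) the handshake
    fails before getpeercert() is reached.
    """
    ctx = ssl.create_default_context(cafile=cafile)
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def audit_ip_sans(cert, old_ips, new_ips):
    """Return (stale, missing) IP SANs for a getpeercert()-style dict.

    stale: old addresses still listed. missing: new addresses not listed,
    reported only when the certificate contains IP SANs at all.
    """
    sans = cert.get("subjectAltName", ())
    ips = {value for kind, value in sans if kind == "IP Address"}
    stale = sorted(ips & set(old_ips))
    missing = sorted(set(new_ips) - ips) if ips else []
    return stale, missing
```

A certificate with only DNS names returns `([], [])`, which matches the case where no certificate update is needed for the IP change.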

Summary

This blog post has explained the steps I took to change the IP network and IP addresses of a three-node vRealize Automation cluster and the load-balanced virtual service that handles traffic to the cluster.

Thanks for reading, and reach out if you have any comments or questions.