GitOps Your Scheduled Tasks

Overview

In this rather lengthy post we'll take a stab at using Kubernetes, Git and GitOps to run scripts in our environment. With the help of an existing Developer Infrastructure platform, we've moved most of our automation to Kubernetes and a "GitOps-y" mindset.

Why, you may wonder? Maybe you've been running these as scheduled tasks on a Windows Server, successfully, for years?

Well, my take on it is that moving the way a VI admin runs his or her infrastructure toward a more DevOps approach has some real advantages. Scheduling in Kubernetes, source control with Git and automated workflows are some of them. Is it a good fit for everyone? Maybe not. It will take some adjustments, some in the infrastructure, but mostly on the "soft" side, i.e. the organization and the individual admins and operators. But in the long run, I think we all have to go down that route. And as more and more organizations run their workloads in Kubernetes, this is a great way for a VI admin to get hands-on experience.

The example code is also available on GitHub.

Solution Overview

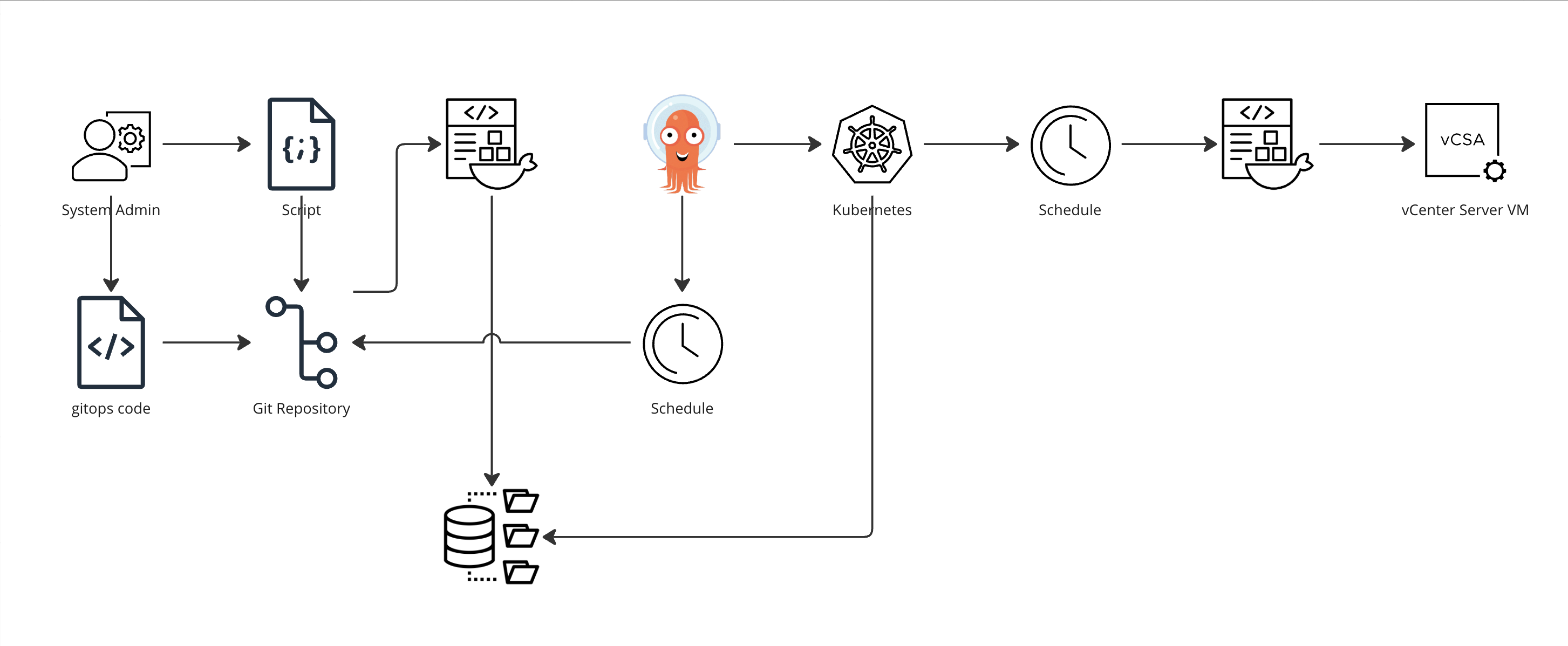

To start with, let's take a look at what we aim to achieve.

The building blocks used are a script, a Git repository, a Docker image, an image repository, a Kubernetes cluster, Argo CD and, of course, the vCenter we're connecting to in order to get our job done.

This post assumes you already have all of these in place and focuses on how to stitch them together, not on how to set up the individual pieces.

Check out the Argo CD docs for a walkthrough of how to get Argo CD up and running.

The script

So, let's kick off with what we actually want to do.

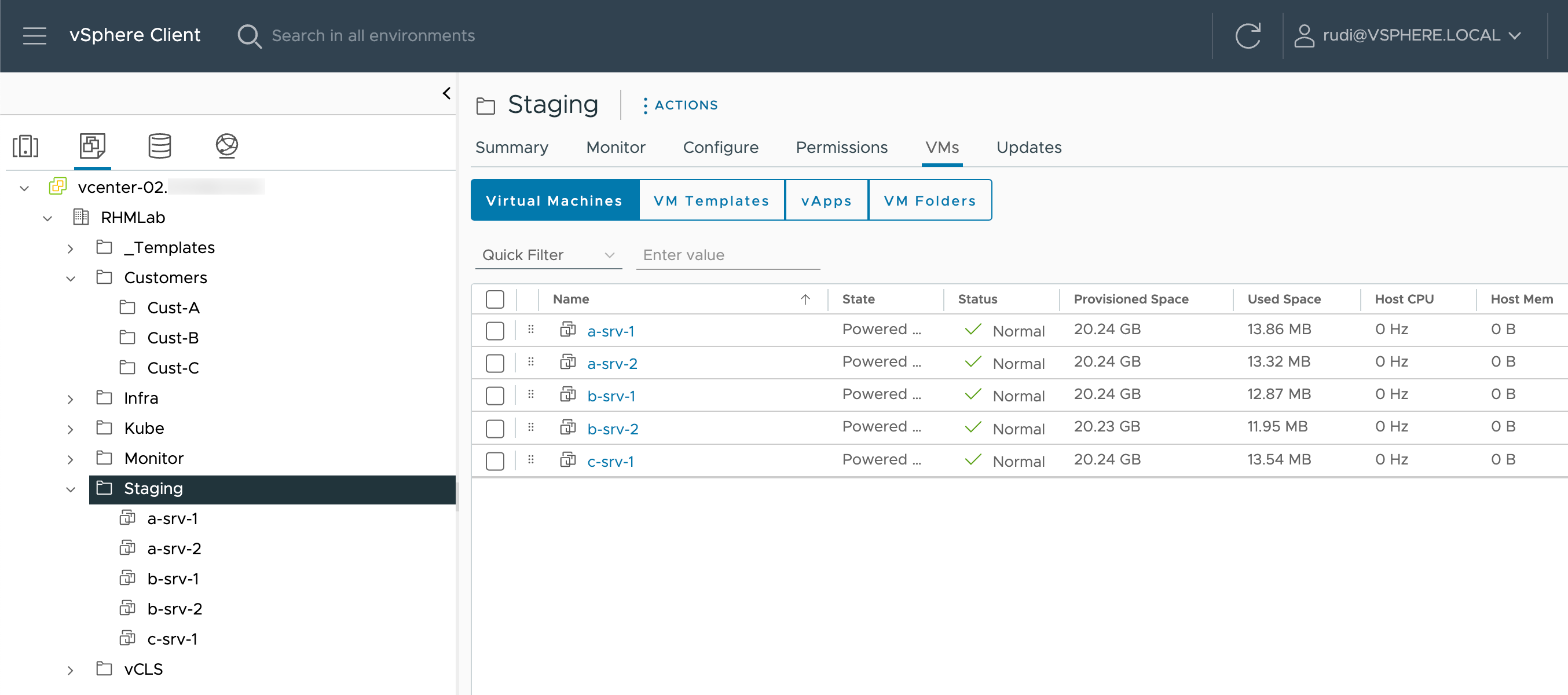

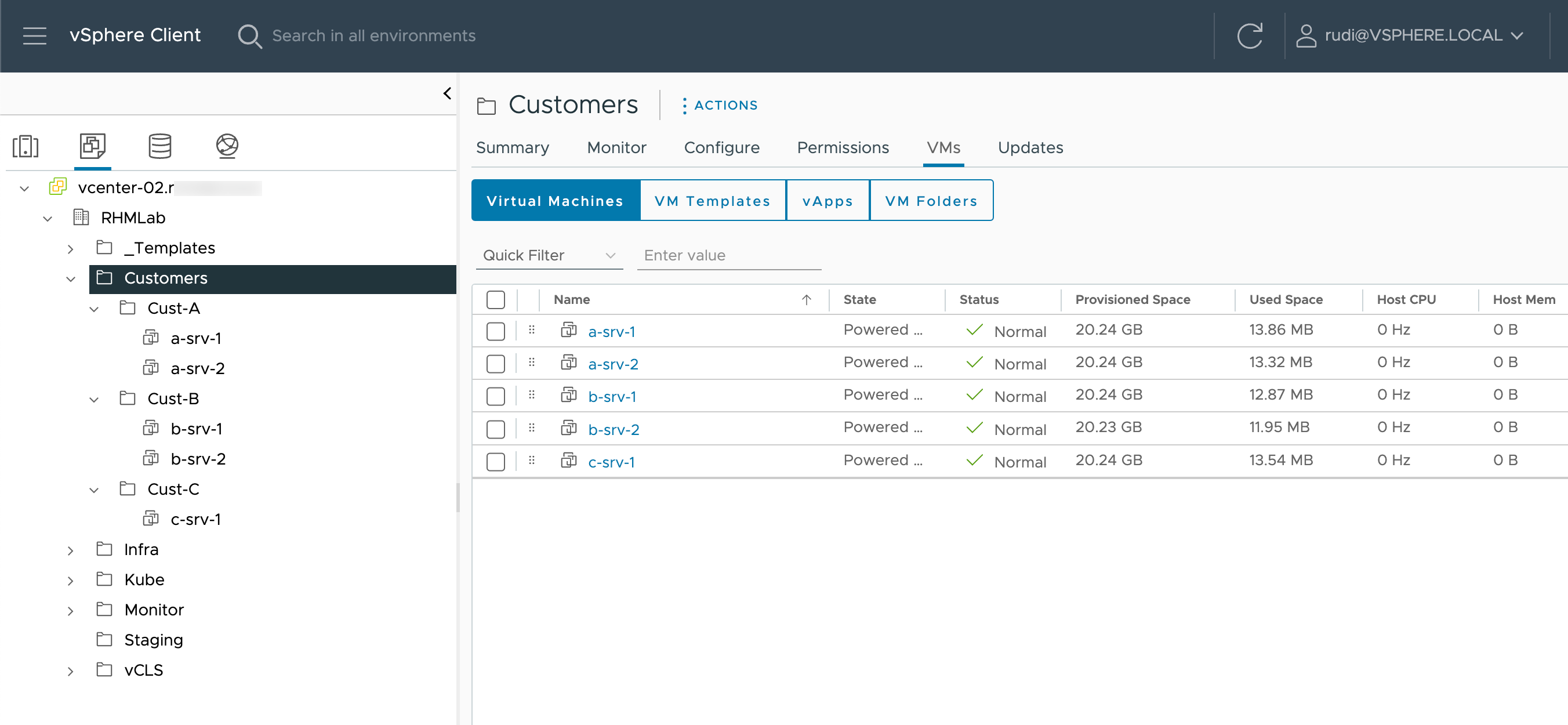

The example we'll use is a script that moves virtual machines from a staging folder to a specific folder based on their name.

$vcenter = $ENV:VCENTER_SERVER
$username = $ENV:VCENTER_USER
$password = $ENV:VCENTER_PASSWORD

try {
    Write-Output "Connecting to vCenter $vcenter"
    Connect-VIServer -Server $vcenter -User $username -Password $password -ErrorAction Stop
}
catch {
    Write-Error "Couldn't connect to vCenter $vcenter"
    # Exit with a non-zero code so the Kubernetes Job registers the failure
    exit 1
}

$stagingFolder = Get-Folder -Type VM -Name Staging
$customerRoot = Get-Folder -Type VM -Name Customers

$vms = $stagingFolder | Get-VM
Write-Output "Found $($vms.Count) vms to process"
foreach ($vm in $vms) {
    $vmName = $vm.Name
    Write-Output "Processing vm $vmName"

    # The first character of the VM name identifies the customer
    $custName = $vmName.Substring(0,1)

    try {
        Write-Output "Searching for customer folder"
        # -ErrorAction Stop makes a missing folder or a failed move land in catch
        $custFolder = Get-Folder -Type VM -Name "Cust-$custName" -Location $customerRoot -ErrorAction Stop

        Write-Output "Moving VM $vmName to customer folder"
        $vm | Move-VM -InventoryLocation $custFolder -ErrorAction Stop | Out-Null
    }
    catch {
        Write-Error "Couldn't move vm $vmName to correct folder"
        continue
    }
}
Write-Output "Disconnecting from vCenter $vcenter"
Disconnect-VIServer -Server $vcenter -Confirm:$false

The example script assumes that all new servers land in a folder called "Staging", and that the customer VM folders live under a parent "Customers" folder. For brevity, the script does not create a folder if it doesn't exist.
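To make the naming convention concrete, here is a minimal sketch in plain shell of the same mapping the script performs with `Substring(0,1)`: the first character of the VM name selects the `Cust-<x>` folder under `Customers`. The function name `customer_folder` and the sample VM names are hypothetical and only illustrate the rule.

```sh
#!/bin/sh
# Hypothetical helper mirroring move-vms.ps1: the first character of the
# VM name selects the customer folder "Cust-<char>" under "Customers".
customer_folder() {
  vm_name=$1
  # cut -c1 keeps only the first character, like $vmName.Substring(0,1)
  first=$(printf '%s' "$vm_name" | cut -c1)
  printf 'Customers/Cust-%s\n' "$first"
}

# Example: show where a few (made-up) staging VMs would end up
for vm in acme-web01 acme-db01 bolt-app01; do
  printf '%s -> %s\n' "$vm" "$(customer_folder "$vm")"
done
```

One consequence of a single-character key is that two customers whose names start with the same letter would share a folder, so a real naming convention may need a longer prefix.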

Credentials for logging in to vCenter are fetched through environment variables; make sure you check the preferred way of handling this in your own environment.

The Git repo

Now, one of the huge advantages of GitOps is the use of a Git repository, which gives us source control, collaboration and so on.

Even if you don't go down the full CI/CD route, please use Git for source control of your scripts.

git add <script-file>
git commit -m "<change-description>"
git push

In this example the Git repository is a self-hosted GitLab instance, but other Git services would work just fine. We'll revisit the Git repo in a later post to take advantage of some CI pipelining.

The container

So, to run this script in Kubernetes we obviously have to get it running in a container.

We'll use the official VMware PowerCLI container image as our base. From it we'll build a new image into which we copy our script.

FROM vmware/powerclicore

RUN pwsh -c 'Set-PowerCliConfiguration -InvalidCertificateAction Ignore -ParticipateInCeip $false -Confirm:$false'

COPY move-vms.ps1 /tmp/script.ps1

ENTRYPOINT ["pwsh"]

We'll push this file to Git as well.

git add Dockerfile
git commit -m "Adding Dockerfile"
git push

Note that there are multiple ways of doing this. Building a dedicated image for a single script might seem like overkill, since we could pull the script in at runtime using the official image, but we've chosen this approach because it gives us full control over the code in place for our script and the task it should perform. Argo CD lists a few best practices which you should check out; one of them is to separate code from config, which we're not doing in this post.

Let's build the image so that it's ready to run.

docker build -t gitops-vmfolder:<tag> .

The image can now be tested and run manually to check that it performs our tasks as expected.

docker run --rm -it --env-file=<some-env-file> gitops-vmfolder:<tag> /tmp/script.ps1
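The `<some-env-file>` above is a plain text file with one `VAR=value` per line. A minimal sketch of creating one is shown here; the file name `vcenter.env` and all values are placeholders, and the helper name `write_env_file` is hypothetical. Docker takes each line literally and does not strip quotes, which is why the values are left unquoted.

```sh
#!/bin/sh
# Sketch: create an env file for `docker run --env-file`.
# Variable names are the ones move-vms.ps1 reads; the values are placeholders.
# Docker takes each VAR=value line literally (quotes are NOT stripped),
# so the values are written unquoted.
write_env_file() {
  env_file=$1
  # Subshell so the restrictive umask only applies to this file (it holds a password)
  (
    umask 077
    cat > "$env_file" <<'EOF'
# Read by move-vms.ps1 through $ENV:VCENTER_*
VCENTER_SERVER=vcenter.example.local
VCENTER_USER=svc-vmfolder@vsphere.local
VCENTER_PASSWORD=change-me
EOF
  )
}
```

After `write_env_file ./vcenter.env`, the test run becomes `docker run --rm -it --env-file=./vcenter.env gitops-vmfolder:<tag> /tmp/script.ps1`. Since the file contains a password, keep it out of Git, for example via `.gitignore`.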

The Image repo

To use this new container image in our Kubernetes cluster, we have to push it to a container image repository that the cluster can pull it from. There are several image registries out there, not only the de facto standard Docker Hub. In this post we'll use an internal Harbor registry.

To push it to the correct repository, we first need to tag our existing image.

docker tag gitops-vmfolder:<tag> <image-repo>/<project>/gitops-vmfolder:<tag>
docker push <image-repo>/<project>/gitops-vmfolder:<tag>

In a full CI/CD setup, we would also add a CI pipeline that builds and pushes the container image to the image repository whenever we change our scripts. The GitHub repository contains a sample CI pipeline job for GitLab that takes care of this.
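Until such a pipeline is in place, the manual build, tag and push steps can be wrapped in one function so they always use matching names. This is a minimal sketch assuming a POSIX shell with Docker available; `run`, `publish_image` and the `DRY_RUN` switch are hypothetical helpers, not part of the post's repository.

```sh
#!/bin/sh
# Sketch: the manual build/tag/push steps as one function, until a CI job takes over.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

publish_image() {
  registry=$1   # your registry hostname, e.g. the Harbor instance (placeholder)
  project=$2    # registry project (placeholder)
  tag=$3        # use a new, unique tag for every build
  local_ref="gitops-vmfolder:$tag"
  remote_ref="$registry/$project/gitops-vmfolder:$tag"

  run docker build -t "$local_ref" . || return 1
  run docker tag "$local_ref" "$remote_ref" || return 1
  run docker push "$remote_ref"
}
```

Running `DRY_RUN=1 publish_image <image-repo> <project> <tag>` from the repository root first prints the three commands so you can check the image names before anything is pushed.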

The CD app

Now we'll stitch a few of these things together with our CD tool, Argo CD. Note that there are alternatives here that are also great tools, e.g. Flux CD, which might suit your organization or workflow better. For this example, though, we'll stick with Argo CD.

Before we jump into Argo CD, we'll create a new file in our Git repo, specifically for Argo CD to use.

CronJob YAML manifest

This file is a YAML specification for a CronJob that will run on our Kubernetes cluster.

On every scheduled run, the CronJob creates a Job, which in turn starts a pod based on our image (which includes our script). When the script finishes, the pod terminates. Finished Jobs and their pods are not deleted immediately: by default Kubernetes keeps the last three successful Jobs and the last failed one, and cleans up older ones.

apiVersion: batch/v1
kind: CronJob
metadata:
  name: folder-job
spec:
  schedule: "*/5 * * * *"
  jobTemplate:
    spec:
      activeDeadlineSeconds: 60
      template:
        spec:
          containers:
          - name: vmfolder
            image: <image-repo>/<project>/gitops-vmfolder:<tag>
            imagePullPolicy: IfNotPresent
            command:
            - pwsh
            - -file
            - /tmp/script.ps1
            env:
            - name: VCENTER_SERVER
              value: "<some-vcenter-server>"
            - name: VCENTER_USER
              valueFrom:
                secretKeyRef:
                  name: vc-user-pass
                  key: username
            - name: VCENTER_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: vc-user-pass
                  key: password
          restartPolicy: OnFailure
          imagePullSecrets:
          - name: regcred

We'll put this file in a folder called gitops and push it to our Git repo.

There are a few things to note here.

- Kubernetes schedules this as a CronJob, so we use cron syntax to specify the schedule. Take a look at Crontab.guru if you want to learn more about the syntax.

- We specify the container image from our internal image repository, for which we have also created a registry pull secret (regcred).

- We also specify a few environment variables for connecting to the vCenter server.

- The vCenter server is specified directly in the YAML spec, whereas the credentials are pre-created as a Kubernetes secret (vc-user-pass) in the namespace.
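The schedule `*/5 * * * *` uses a step value in the minute field: it matches every minute divisible by five, with the remaining fields matching every hour, day, month and weekday. The small sketch below expands such a step field so you can see exactly which minutes it hits. It only handles the `*/N` form and is an illustration, not a cron parser; the function name is hypothetical.

```sh
#!/bin/sh
# Sketch: expand a cron minute field of the form "*/N" into the minutes it matches.
# Only the step form is handled; this is an illustration, not a cron parser.
expand_minute_step() {
  step=${1#*/}                 # "*/5" -> "5"
  case $step in
    ''|*[!0-9]*|0) echo "unsupported field: $1" >&2; return 1 ;;
  esac
  m=0
  out=""
  while [ "$m" -lt 60 ]; do
    out="$out $m"
    m=$((m + step))
  done
  echo "${out# }"              # drop the leading space
}

expand_minute_step '*/5'       # prints: 0 5 10 15 20 25 30 35 40 45 50 55
```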

Note that secrets management is a huge topic; take measures to keep your secrets secure and follow your organization's principles in this area.
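The post pre-creates the two secrets but doesn't show how. One common way is `kubectl create secret`, sketched below with names and keys matching the manifest (`vc-user-pass` with `username`/`password`, and `regcred`). The helper names and the `DRY_RUN` switch are hypothetical. Passing passwords as command-line arguments can leave them in shell history, so treat this as a lab sketch and prefer your organization's secret tooling.

```sh
#!/bin/sh
# Sketch: pre-create the two secrets the CronJob expects in its namespace.
# Secret names/keys match the manifest (vc-user-pass: username/password, regcred).
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

create_job_secrets() {
  ns=$1 vc_user=$2 vc_pass=$3 registry=$4 reg_user=$5 reg_pass=$6

  # Read by the container through secretKeyRef (keys: username, password)
  run kubectl -n "$ns" create secret generic vc-user-pass \
    --from-literal=username="$vc_user" \
    --from-literal=password="$vc_pass" || return 1

  # Used to pull from the private registry (imagePullSecrets: regcred)
  run kubectl -n "$ns" create secret docker-registry regcred \
    --docker-server="$registry" \
    --docker-username="$reg_user" \
    --docker-password="$reg_pass"
}
```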

Argo CD Application

Now we're ready to create an application in Argo CD.

All of the steps below can also be performed with CLI commands. In this example we're using the GUI.

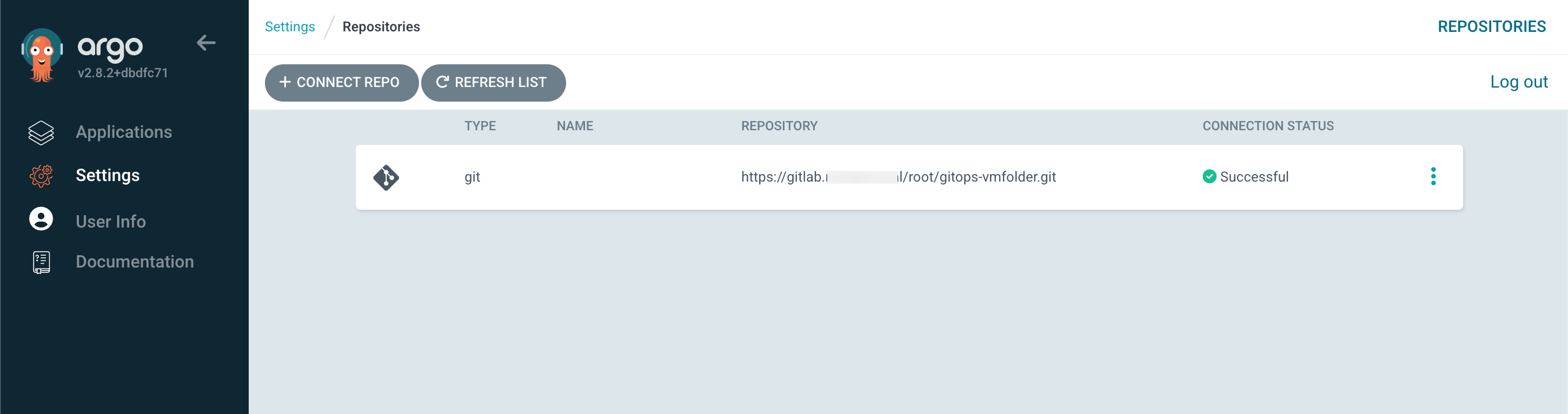

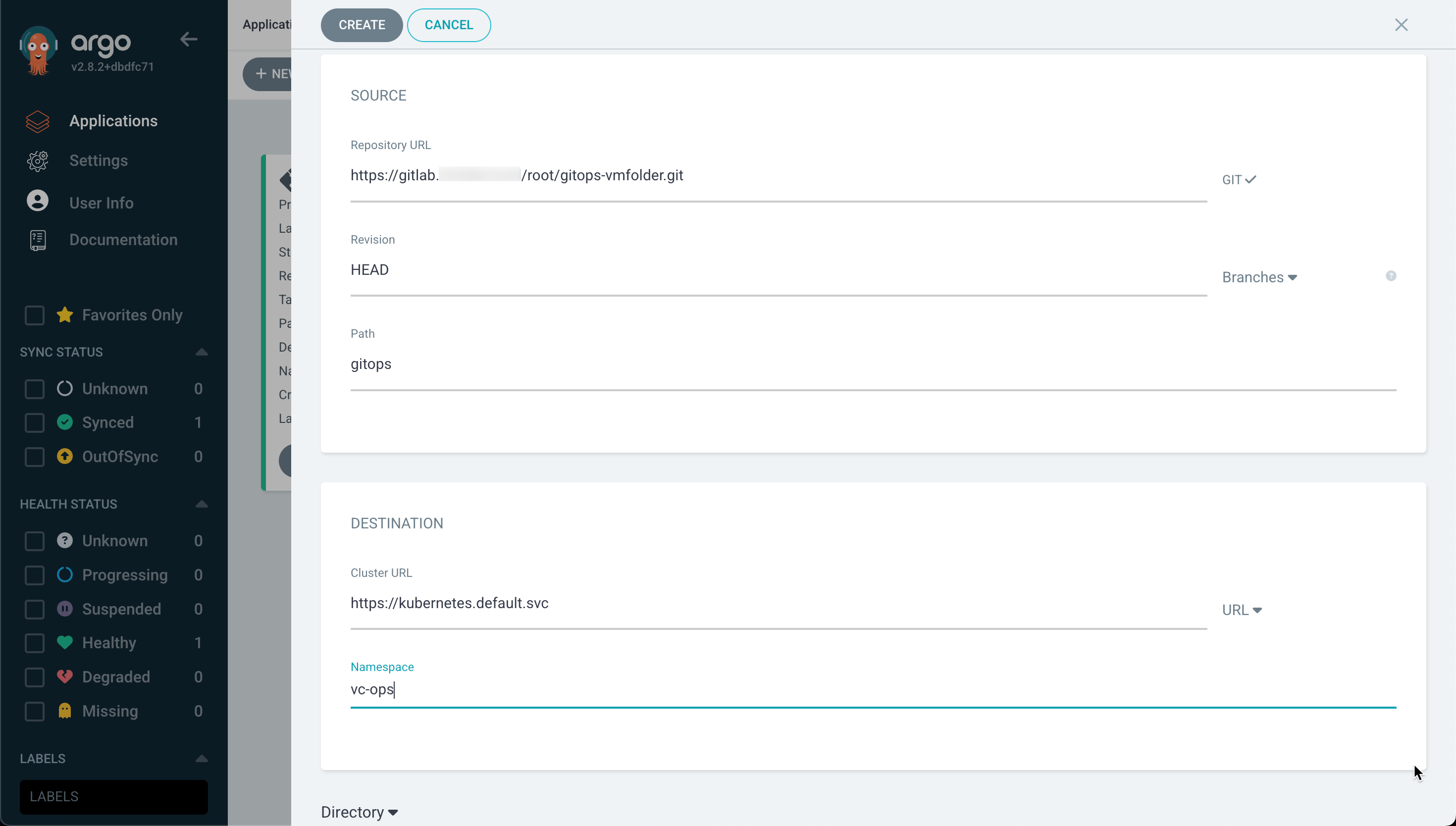

First we'll connect Argo CD to our Git repo

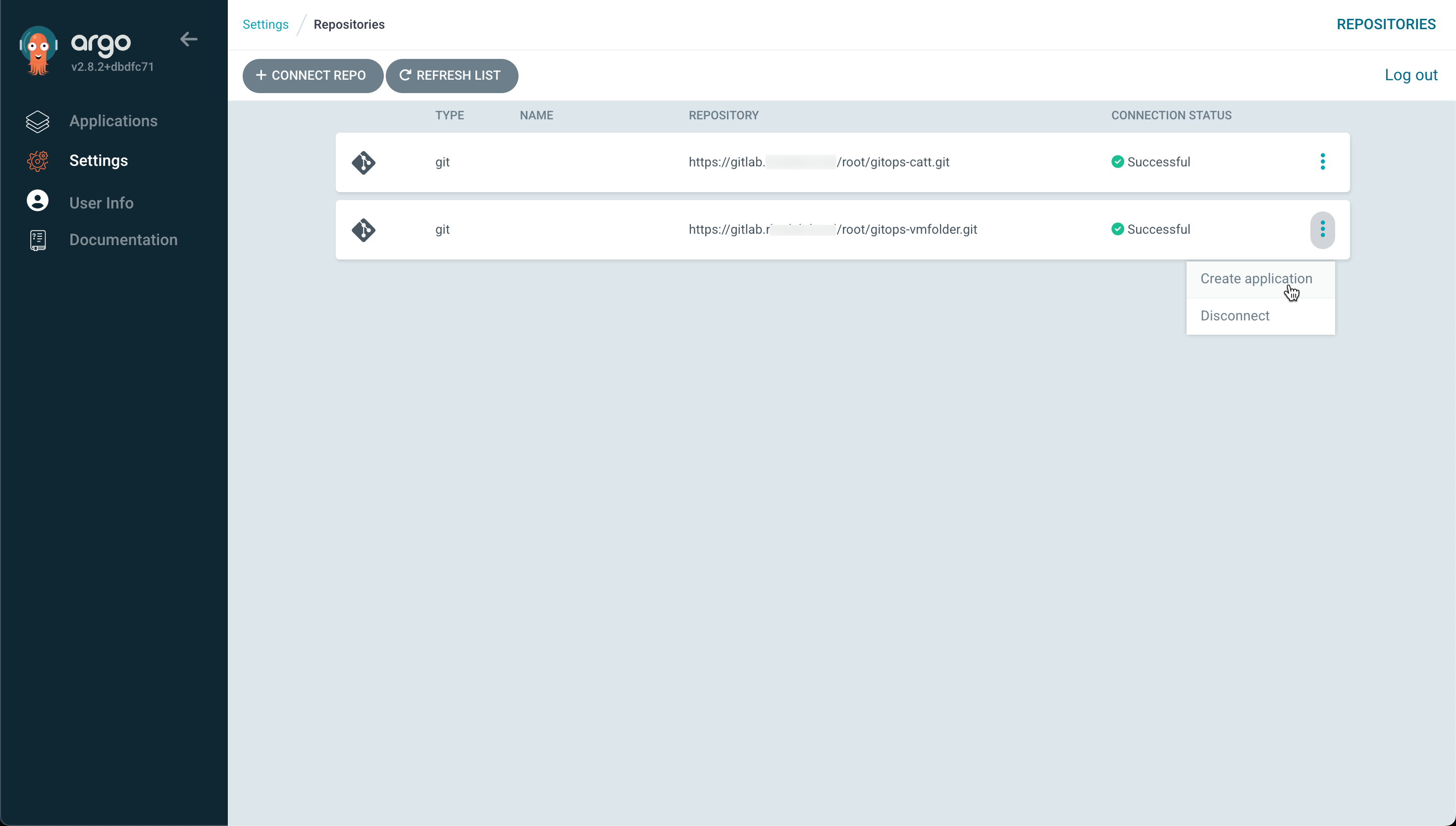

After this we can create our Application

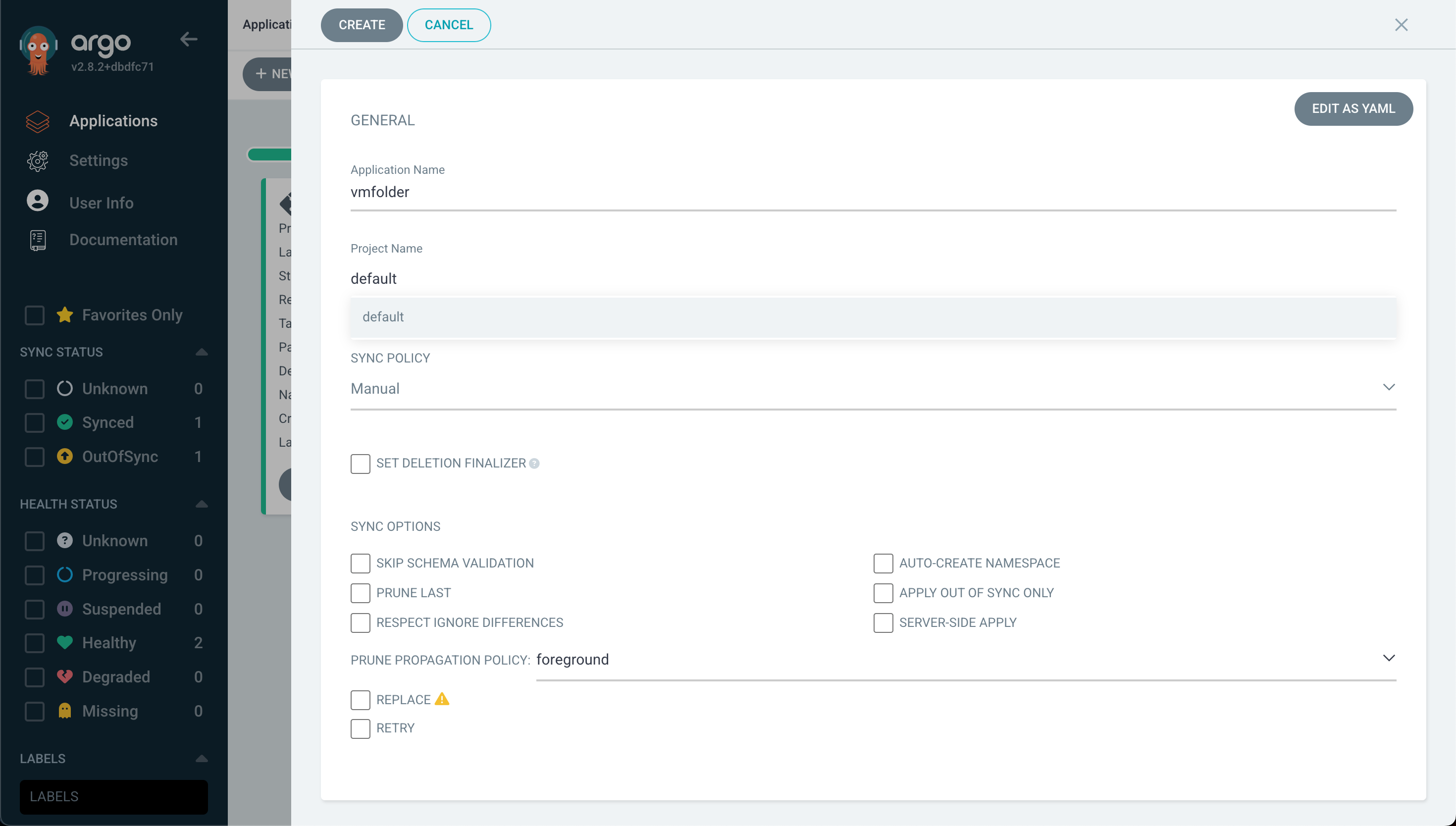

In the Create App wizard we fill in the name of our application

And we also fill in the Git repository for Argo CD to connect to, the path where Argo can find the job specification(s), the Kubernetes cluster to run it on, and the namespace

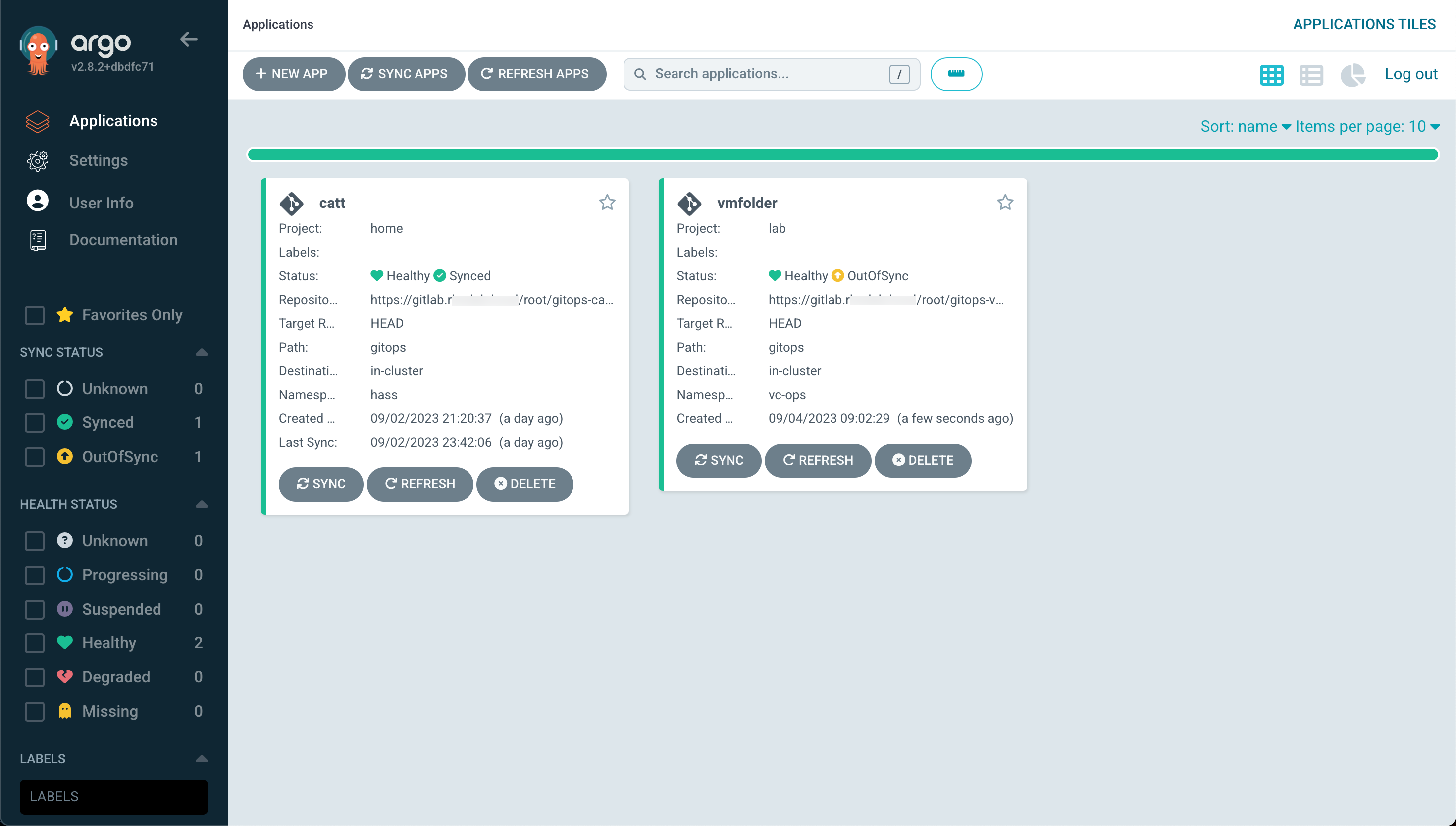

With that in place we can go ahead and create our Application
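For reference, the GUI steps above roughly correspond to two `argocd` CLI commands. This is a minimal sketch assuming you have already run `argocd login` and that the application targets the same cluster Argo CD runs in (`https://kubernetes.default.svc`); the application name `gitops-vmfolder`, the helper names and the `DRY_RUN` switch are hypothetical.

```sh
#!/bin/sh
# Sketch of the GUI steps with the argocd CLI. Assumes `argocd login` was done.
# The app name "gitops-vmfolder" is hypothetical.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

create_argocd_app() {
  repo_url=$1 namespace=$2

  # Connect Argo CD to the Git repo (a private repo also needs --username/--password)
  run argocd repo add "$repo_url" || return 1

  # Manifests live in the gitops folder; deploy to the cluster Argo CD runs in.
  # No --sync-policy flag is given, so the app starts with manual sync.
  run argocd app create gitops-vmfolder \
    --repo "$repo_url" \
    --path gitops \
    --dest-server https://kubernetes.default.svc \
    --dest-namespace "$namespace"
}
```

Hitting Sync in the UI corresponds to `argocd app sync gitops-vmfolder`.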

If we check the details of our app we will see that the status is currently "OutOfSync". This is because in the Create App wizard we selected "Manual" as the sync method.

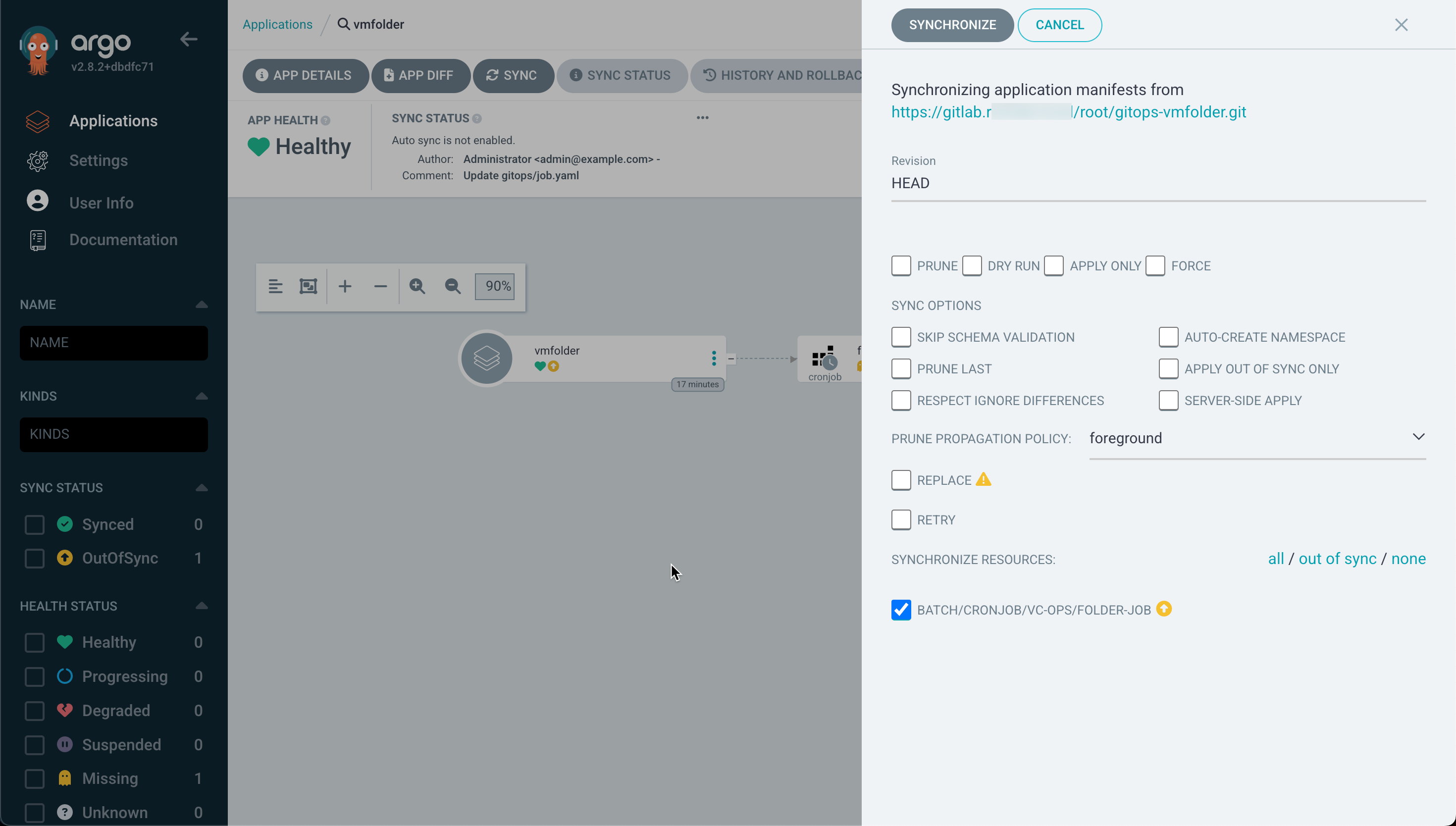

To get our application sync'ed we'll simply hit Sync in Argo CD and it will reach out to the Git repository to fetch any changes and start creating the cronjob in Kubernetes.

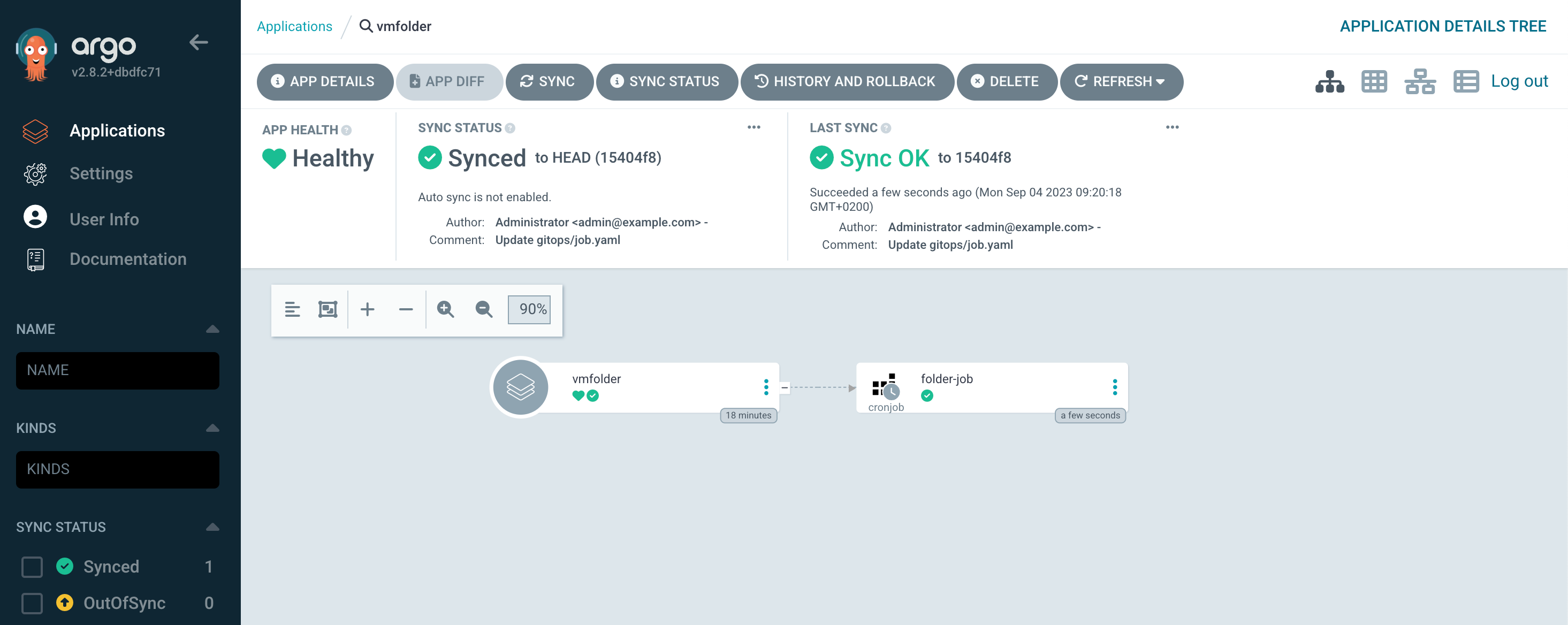

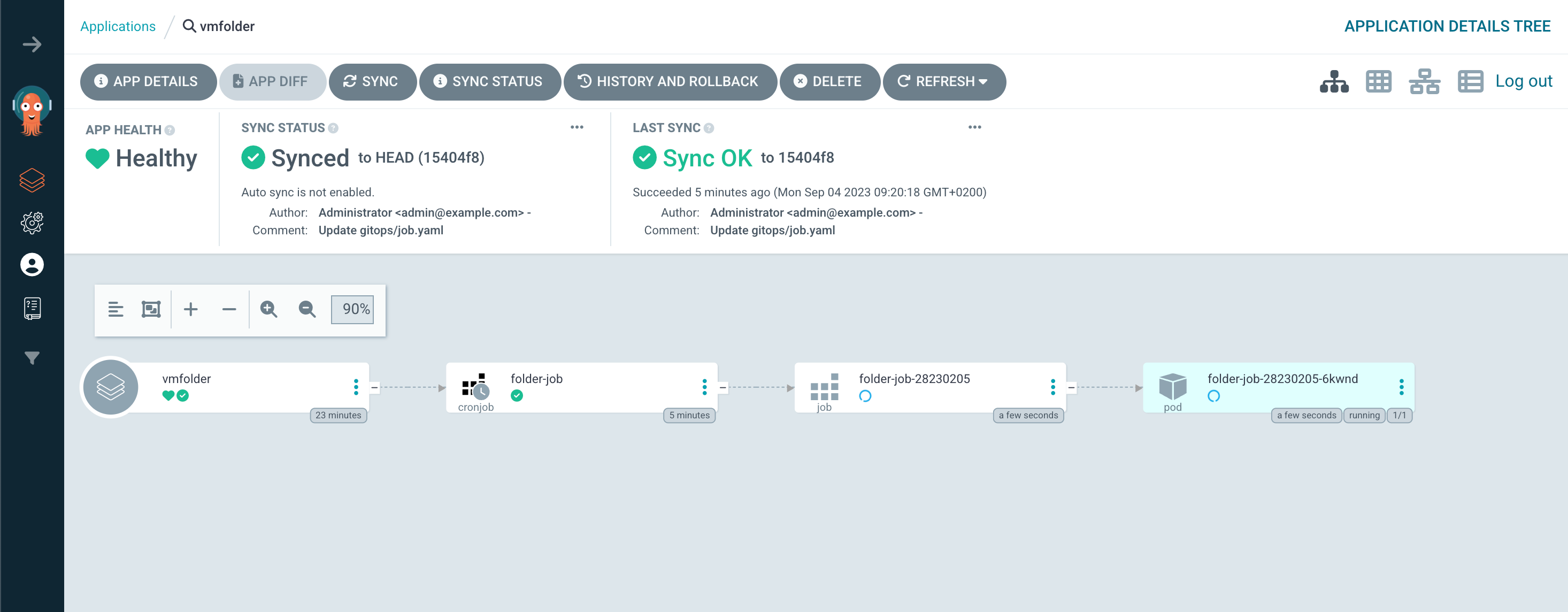

After a few seconds our app should be synced

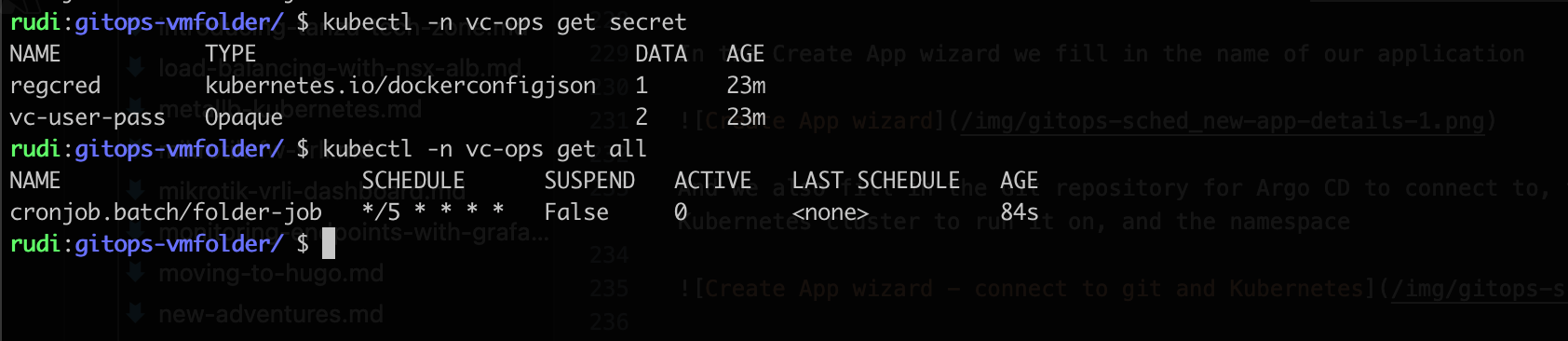

At this point we can head over to the Kubernetes cluster to check that our CronJob has been created. Note that, apart from the CronJob, the namespace only contains the two secrets we prepared beforehand: one for connecting to vCenter and one for pulling from the image repository.

Depending on the cron schedule, after a while we'll see the CronJob run and spin up a pod with our container running the script against vCenter.
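If you don't want to wait for the next scheduled run while testing, `kubectl create job --from=cronjob/...` starts a one-off Job from the CronJob's job template. A minimal sketch follows. The namespace is yours to fill in, the `folder-job-manual-<timestamp>` naming and helper names are hypothetical, and a Job created this way is an ad hoc action that is not tracked in Git.

```sh
#!/bin/sh
# Sketch: run the CronJob once right away instead of waiting for the schedule.
# "folder-job" is the CronJob name from the manifest; the namespace is yours.
# Set DRY_RUN=1 to print the command instead of running it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

trigger_folder_job() {
  ns=$1
  # Creates a one-off Job from the CronJob's jobTemplate; the timestamp suffix
  # keeps the Job name unique between manual runs
  run kubectl -n "$ns" create job --from=cronjob/folder-job "folder-job-manual-$(date +%s)"
}
```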

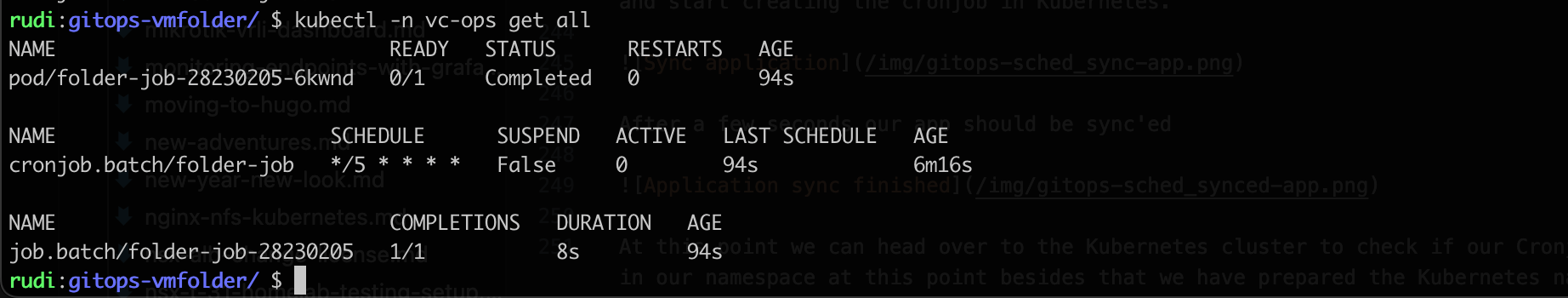

If we go back to the Kubernetes cluster and check, we'll see that the CronJob has created two more resources: a Job and a Pod.

If we check the status of the Pod in Argo CD, we can verify that it has pulled and run our container image.

Auto-sync

Now, let's assume that we have an error, or notice that our script isn't running as expected and our VMs remain in the staging folder.

If we drill down to the pod resource in Argo CD, we can check the log output from the container.
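The same information is available from the cluster side with `kubectl`, which is handy if you don't have access to the Argo CD UI. A minimal sketch follows; the helper names, the `DRY_RUN` switch and the example Job name are hypothetical. Jobs created by the CronJob are named `folder-job-<suffix>`, and `list_runs` shows the actual names.

```sh
#!/bin/sh
# Sketch: inspect runs and read the script output with kubectl.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Show the CronJob and the Jobs/Pods it has spawned in the namespace
list_runs() {
  run kubectl -n "$1" get cronjob,jobs,pods
}

# Print the log of one run; kubectl resolves job/<name> to one of its pods
job_logs() {
  run kubectl -n "$1" logs "job/$2"
}
```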

We can see that the script didn't find the "Deploy" folder! It seems someone made a typo in the script. Let's fix this in our source code and build a new container image.

Obviously we should've tested the script and container before putting them in production, but for the sake of demonstrating updates we've skipped that step in this post.

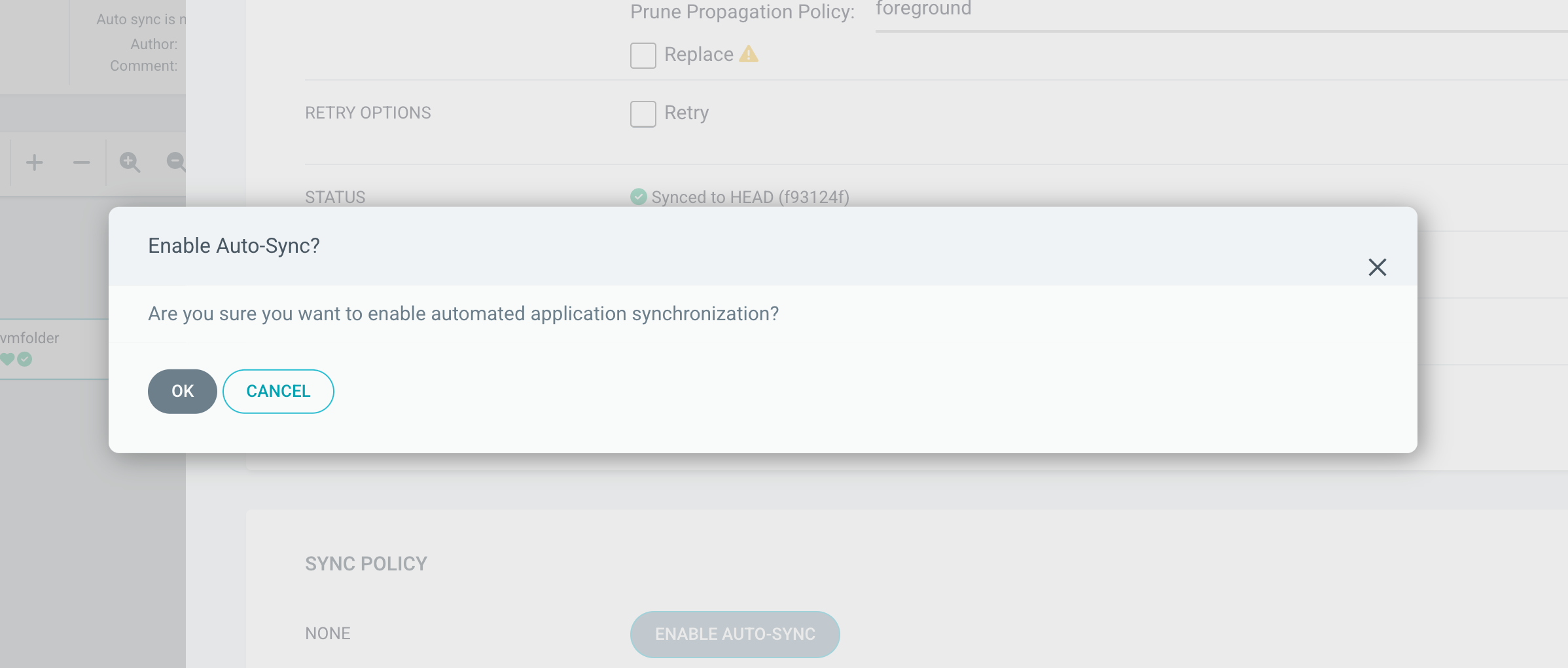

Before we correct our script, let's enable auto-sync for the app in Argo CD

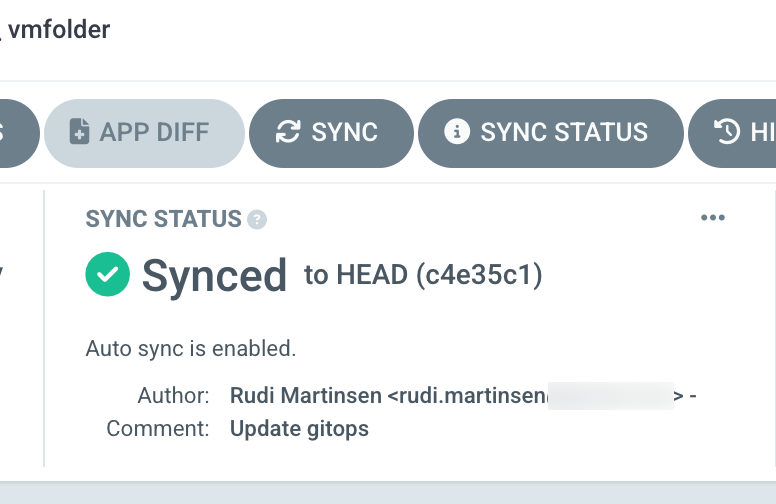

After enabling the automation our App should change it's status
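Enabling auto-sync can also be done from the CLI with `argocd app set`. A minimal sketch follows, assuming you are logged in with `argocd login`; the helper names and `DRY_RUN` switch are hypothetical, and the application name is whatever you chose in the wizard.

```sh
#!/bin/sh
# Sketch: the CLI counterpart of enabling auto-sync in the app details.
# Set DRY_RUN=1 to print the command instead of running it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

enable_auto_sync() {
  app=$1
  # Switches the sync policy from manual to automated
  run argocd app set "$app" --sync-policy automated
}
```

`argocd app set --help` also lists `--self-heal` and `--auto-prune`, which control whether Argo CD reverts manual changes in the cluster and deletes resources that were removed from Git.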

Now, again for the sake of brevity, let's assume we've updated our script and built and pushed a new container image to our image repository.

docker build -t gitops-vmfolder:<new-tag> .
docker tag gitops-vmfolder:<new-tag> <image-repo>/<project>/gitops-vmfolder:<new-tag>
docker push <image-repo>/<project>/gitops-vmfolder:<new-tag>

In this small lab environment we're using GitLab CI to build and push new images automatically. Check back later for a blog post on that.

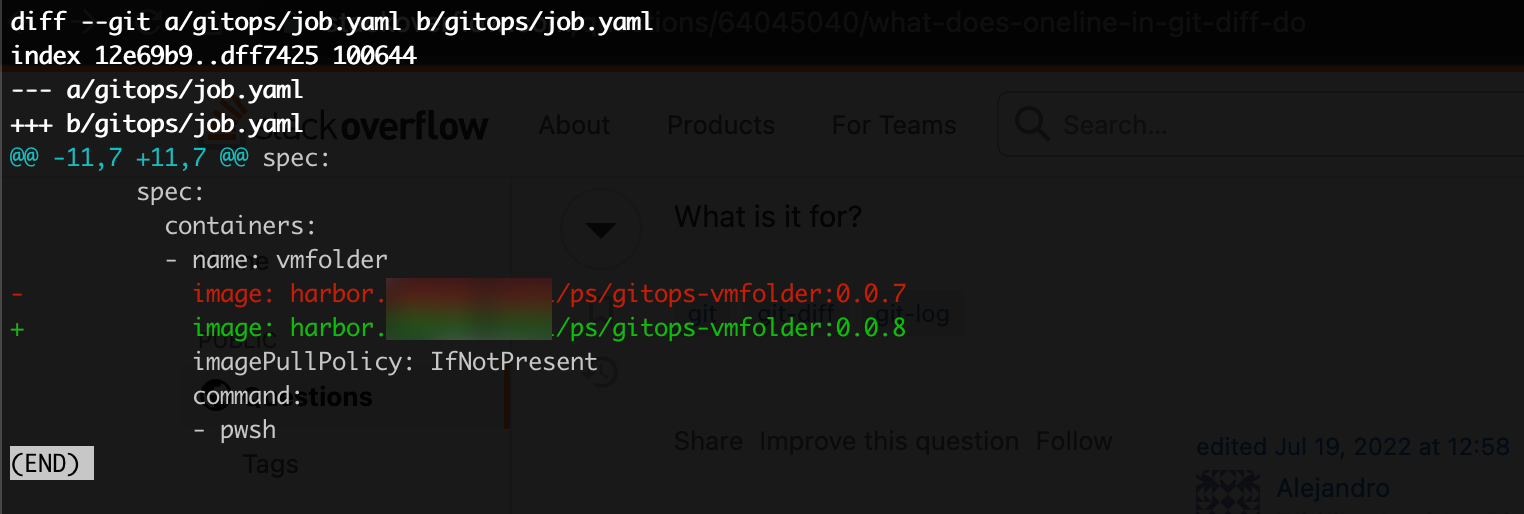

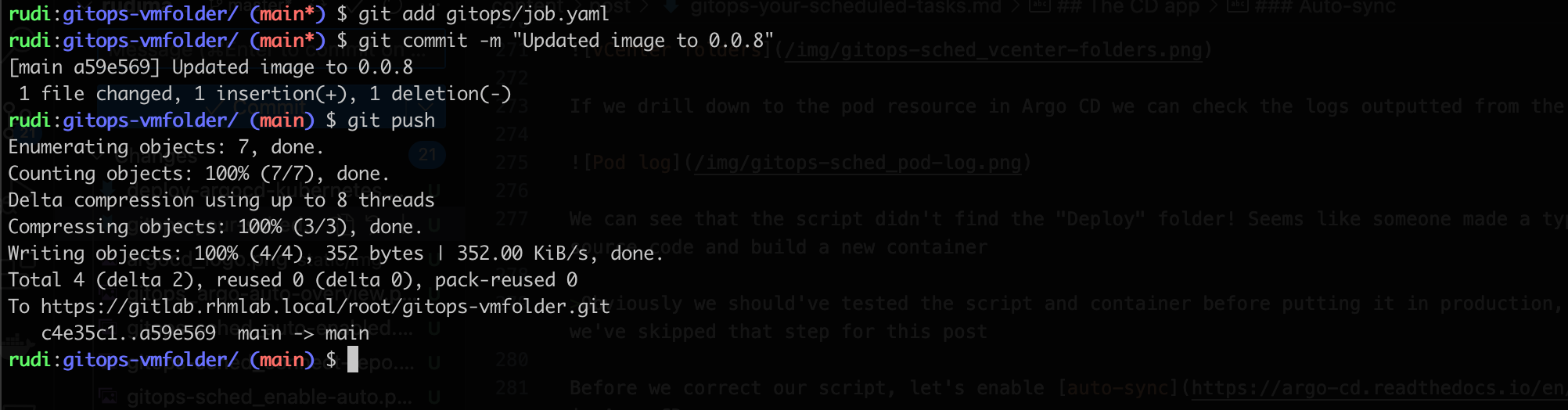

We'll update our job specification with the new image tag

and commit and push that file to Git.
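Using a new tag rather than overwriting the old one matters here: with `imagePullPolicy: IfNotPresent`, a node that already has an image with the old tag cached will not pull it again. The tag bump in the manifest can be scripted, as in the minimal sketch below. It assumes the image line has the form `image: <registry>/<project>/gitops-vmfolder:<tag>`, and the manifest path `gitops/cronjob.yaml` and function name are hypothetical.

```sh
#!/bin/sh
# Sketch: point the CronJob manifest at a new image tag before committing it.
# Assumes the image line looks like "image: <registry>/<project>/gitops-vmfolder:<tag>".
bump_image_tag() {
  manifest=$1 new_tag=$2
  tmp="$manifest.tmp"
  # Replace whatever follows "gitops-vmfolder:" on the image line. Writing to a
  # temp file and moving it back works with both GNU and BSD sed.
  sed "s|\(image: .*/gitops-vmfolder:\).*|\1$new_tag|" "$manifest" > "$tmp" &&
    mv "$tmp" "$manifest"
}
```

A typical update is then `bump_image_tag gitops/cronjob.yaml <new-tag>` followed by the usual `git add`, `git commit` and `git push`.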

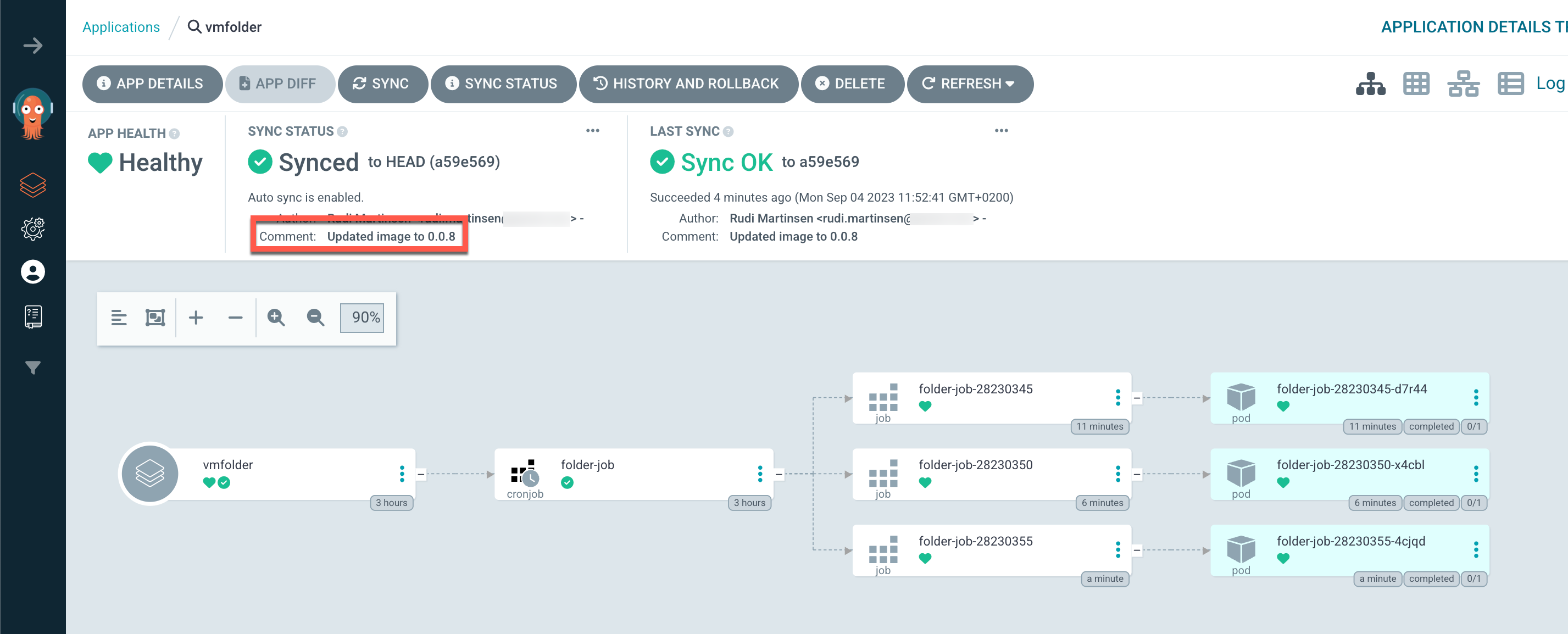

After a few minutes, we can see that our Argo CD app has automatically picked up the change from the Git repository, synchronized it and (depending on the schedule) started using the new image.

By default, Argo CD polls the Git repository for changes every 180 seconds. Check the Argo CD documentation for more info.
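If 180 seconds is too slow for your workflow, the polling interval is controlled by the `timeout.reconciliation` key in the `argocd-cm` ConfigMap. A minimal sketch follows, assuming Argo CD is installed in the `argocd` namespace as in the standard install; the helper names and `DRY_RUN` switch are hypothetical. Depending on your Argo CD version, the Argo CD components may need a restart before the new value takes effect, and Git webhooks are an alternative to shorter polling.

```sh
#!/bin/sh
# Sketch: change how often Argo CD polls Git (default 180s).
# Assumes Argo CD runs in the "argocd" namespace.
# Set DRY_RUN=1 to print the command instead of running it.
run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

set_reconciliation_timeout() {
  interval=$1   # e.g. 60s
  # Merge-patch only the timeout.reconciliation key of argocd-cm
  run kubectl -n argocd patch configmap argocd-cm --type merge \
    -p "{\"data\":{\"timeout.reconciliation\":\"$interval\"}}"
}
```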

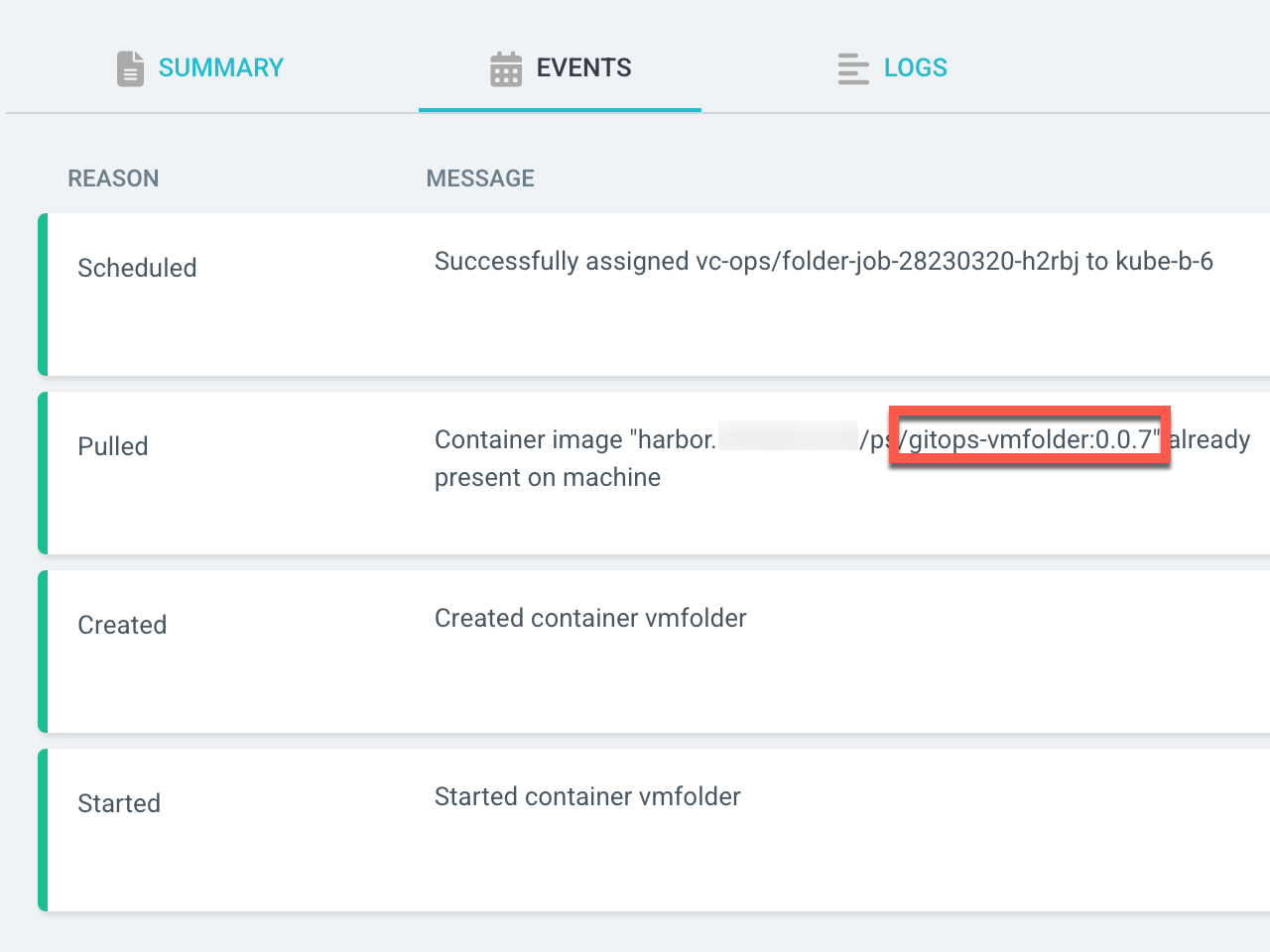

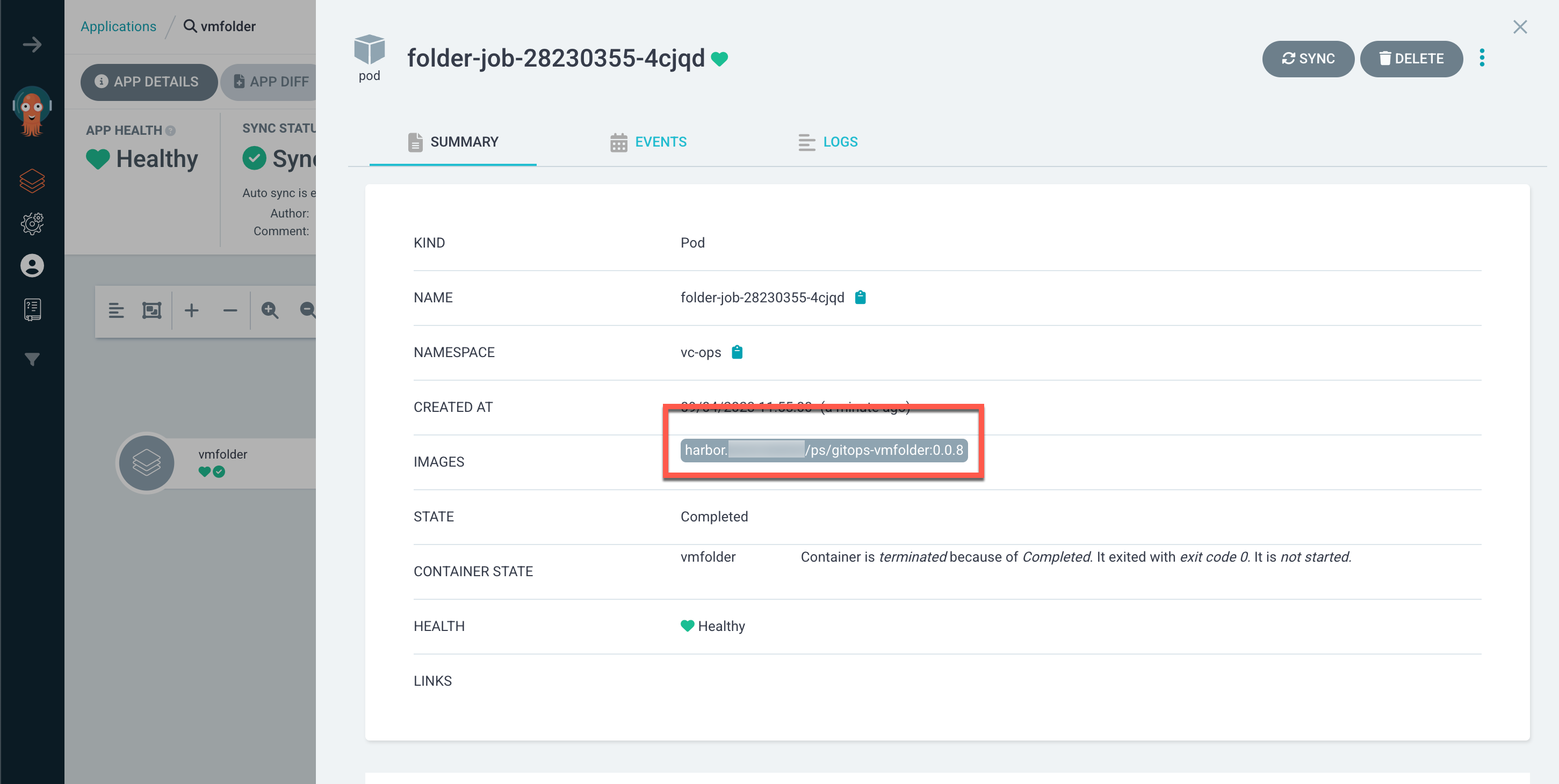

And we can verify that the pod is running the updated container image

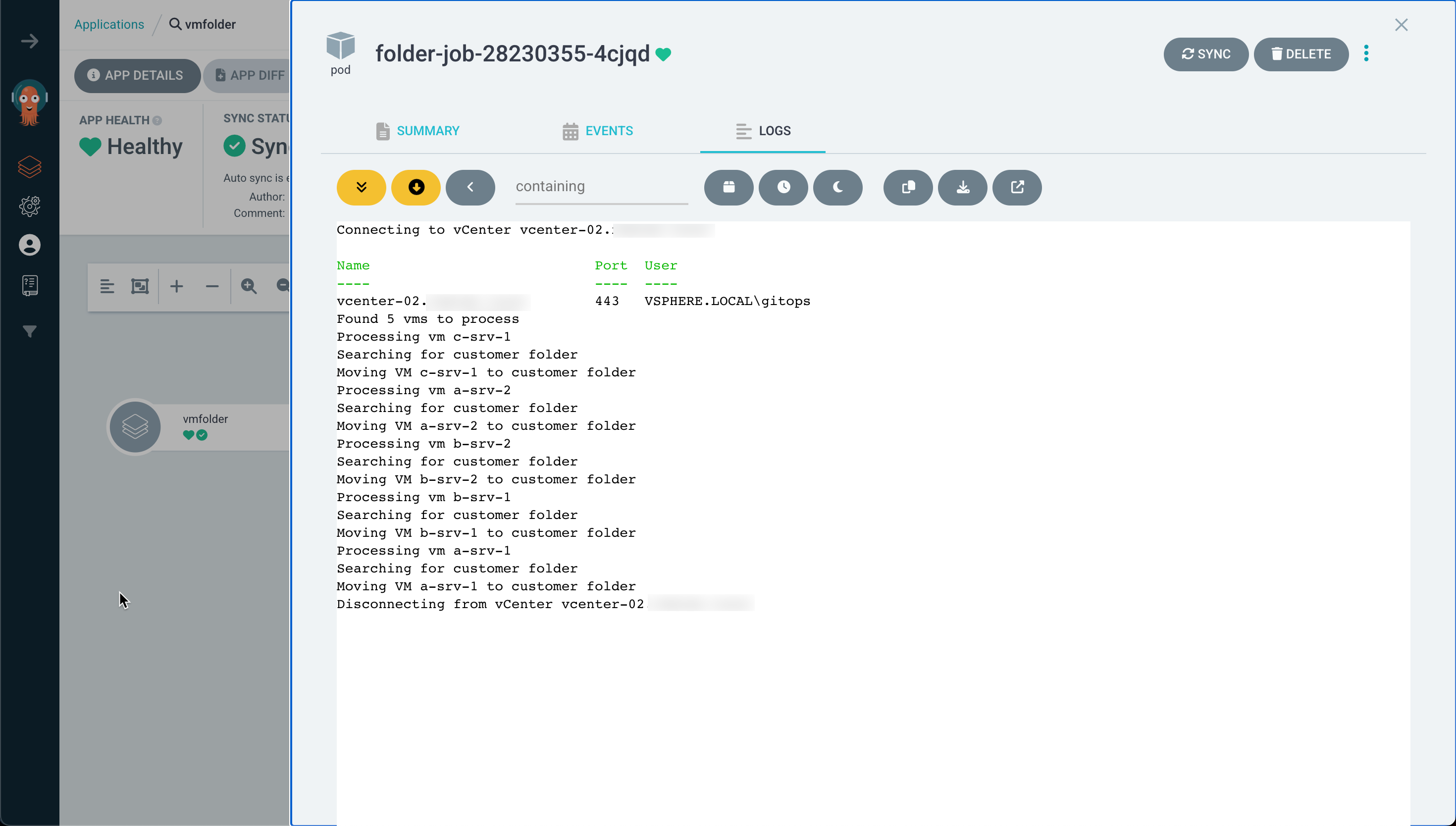

Let's check the pod log to see that the script executes correctly

And finally that our VMs have been moved to their correct folders

Summary

This was quite a long post on how to configure Argo CD to run a script against our vCenter server, and it might seem like overkill to set all of this up just to run a script.

Well, as mentioned, the Git and source control part is something I think every organization should have in place for its scripts, regardless of whether they run in Kubernetes or on a Windows Server.

The GitOps portion with Argo CD might seem like too much, but once it's set up for the first script, the foundation is in place and we can easily add more scripts going forward.

Thanks for reading, and please feel free to reach out if you have any questions or comments.