Integrate NSX Advanced Load Balancer with Kubernetes

Update 2022-10-21: After just one year in the wild, VMware announced on Oct 21 2022 that they would no longer update or maintain the TCE project and that the GitHub project would be removed by the end of 2022. For more information, check out my blog post here

Most of the things mentioned in this post (outside of installing TCE) should still be valid for other Kubernetes distributions

A Load Balancer is an essential part of a Kubernetes cluster, but it is not a built-in feature. It is something we need to provide ourselves.

I've previously looked at MetalLB, which is an easy way of providing Load Balancing services in a Kubernetes cluster, and at Contour for providing Ingress. In this post we will look at the NSX Advanced Load Balancer (Avi Vantage) and the Avi Kubernetes Operator (AKO).

Note that Avi is an enterprise-grade software load balancer and most of what we'll discuss in this post requires the Enterprise Edition license.

AKO Overview

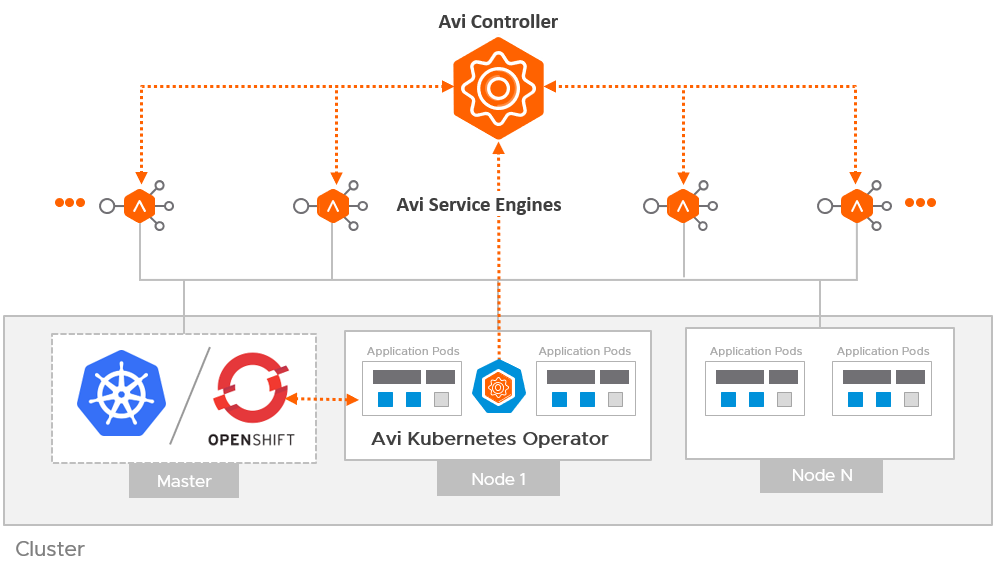

An Avi setup with AKO consists of the following components:

- An Avi controller

- Service Engines (SE)

- The Avi Kubernetes operator (AKO)

The Controller delivers the control plane functionality and is where we perform our administrative tasks in an Avi platform. The controller has a UI, a CLI and an API.

The SEs are the data plane, which is where the magic happens. They are deployed as virtual machines on an infrastructure platform.

The AKO is a pod running inside the Kubernetes cluster. It provides an Ingress controller and Avi configuration capabilities. It watches relevant Kubernetes objects and calls the Avi API (on the Controller) to deploy services on the Service Engines.

There are a lot of features and functionality available to us in the platform, and with that a lot of design considerations to make. To keep this post "short" and sweet, please refer to the Avi documentation for all design considerations.

AKO deployment

Let's see how we can integrate Avi into a Kubernetes cluster

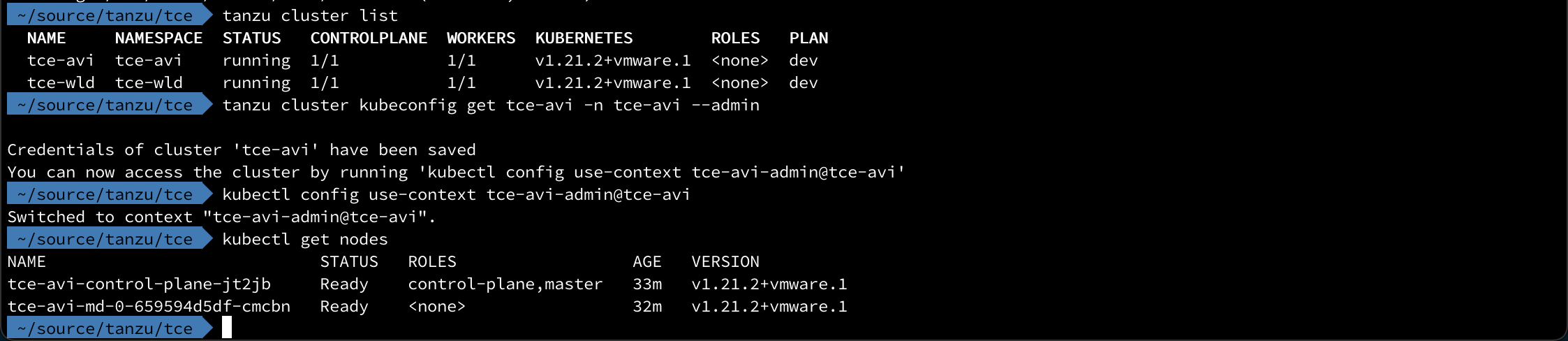

Kubernetes Cluster

I have deployed a TCE cluster for the purpose of this post. The cluster is running on the Tanzu Community Edition platform, but the procedures in this post should fit most Kubernetes platforms.

Avi controller

I already have an Avi controller deployed and configured with a vSphere cloud. I've previously written about how to deploy the Avi platform here. That post uses Avi with vSphere with Tanzu, but the deployment steps should be pretty much the same.

The Avi documentation for deployment of Avi on vSphere can be found here

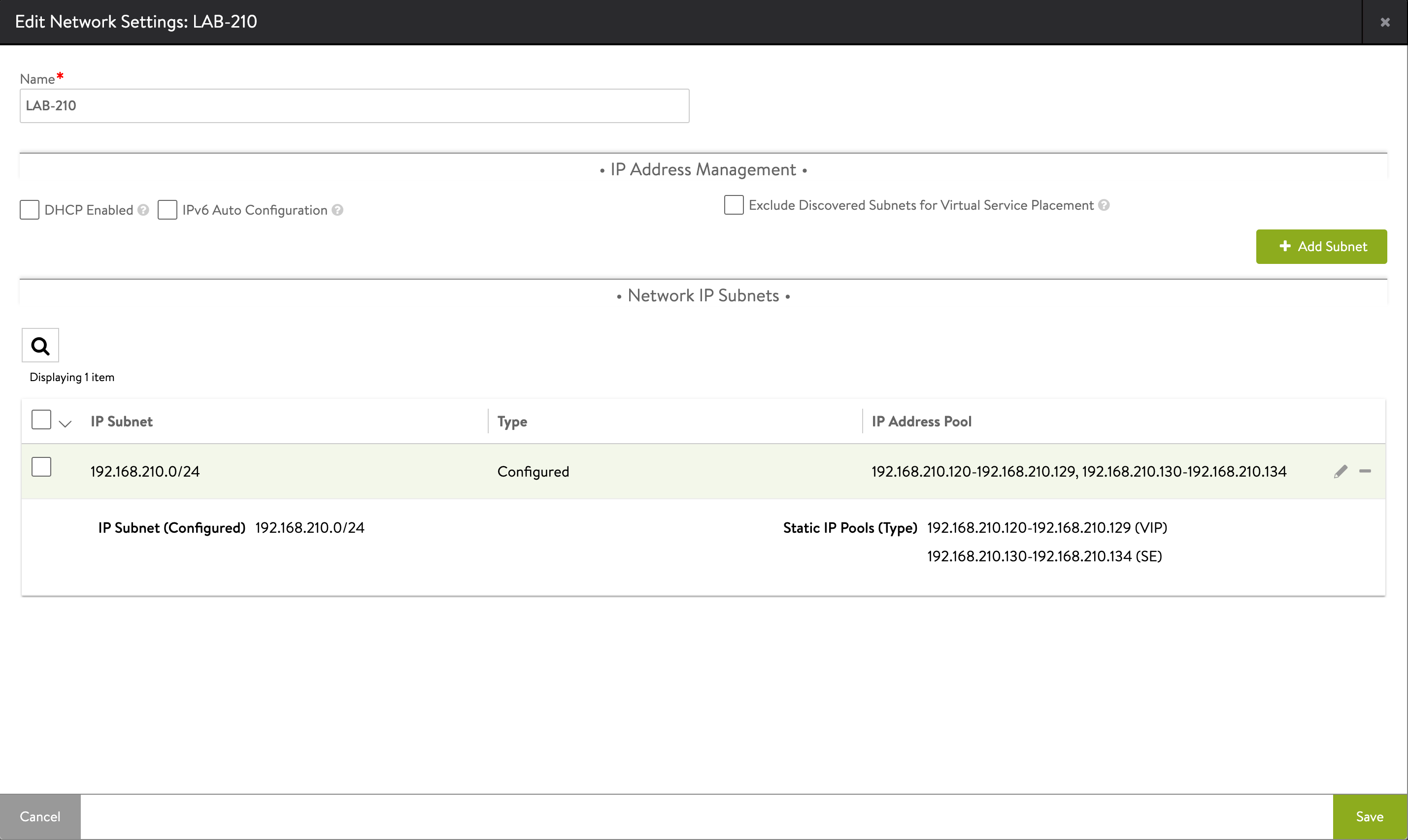

I have a network configured for the Kubernetes resources that the controller will pull IP addresses from, with one pool for SEs and one pool for VIPs.

Note that the SEs also have a management IP in a different network.

Install AKO

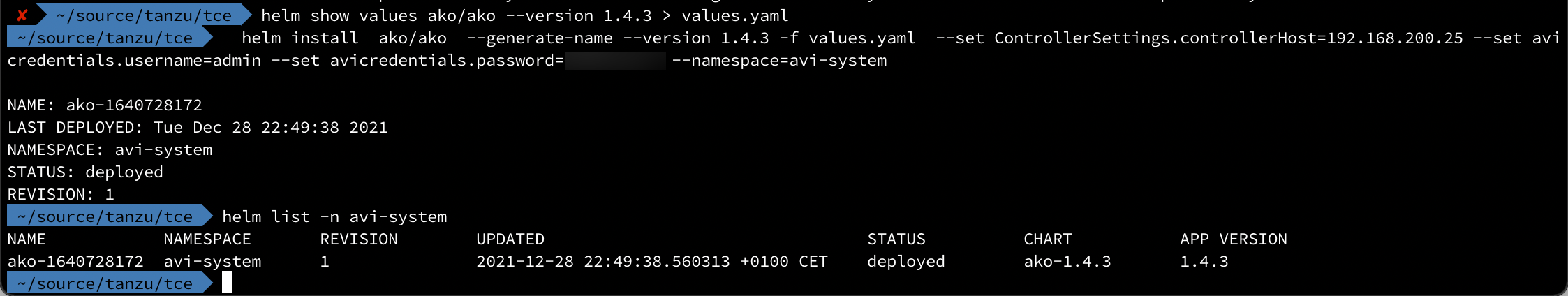

Installing the Avi Kubernetes Operator is documented here and is what I've used as a reference for this post.

The installation is done through Helm, so we need to have Helm installed on our client machine before deployment.

Helm uses a values file for the specifics of the deployment and in this setup I'm keeping things simple by just specifying the following:

- Avi controller version (update to match your setup)

- CNI plugin

- Cluster name

- subnetIP

- subnetPrefix

The controller IP and user credentials are specified as parameters to the Helm command.
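A values file covering the items listed above could look roughly like the following. This is a minimal sketch and not the exact file used for this post: every value is a placeholder, the `antrea` CNI is an assumption, and the key layout follows the AKO chart as I recall it. Key names have changed between AKO releases, so check the `values.yaml` that ships with your chart version.

```yaml
# values.yaml: hypothetical overrides for the AKO Helm chart.
# All values below are placeholders; verify key names against your chart version.
AKOSettings:
  clusterName: tce-cluster       # placeholder: a unique name for this cluster
  cniPlugin: antrea              # assumption: the CNI used by the cluster

ControllerSettings:
  controllerVersion: "21.1.4"    # placeholder: update to match your controller

NetworkSettings:
  subnetIP: 192.168.150.0        # placeholder: network address of the VIP network
  subnetPrefix: "24"             # placeholder: prefix length of that network
```

The controller address and the credentials are deliberately left out of the file here, since they are passed on the Helm command line instead.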

With AKO installed there's not much happening over on our Avi controller. No new Service Engines or virtual services are deployed, so if our Controller is a fresh install we won't have anything running on it.

The SEs and services will be deployed only when we set up our first service of the `LoadBalancer` type in Kubernetes.

Load balancer service type

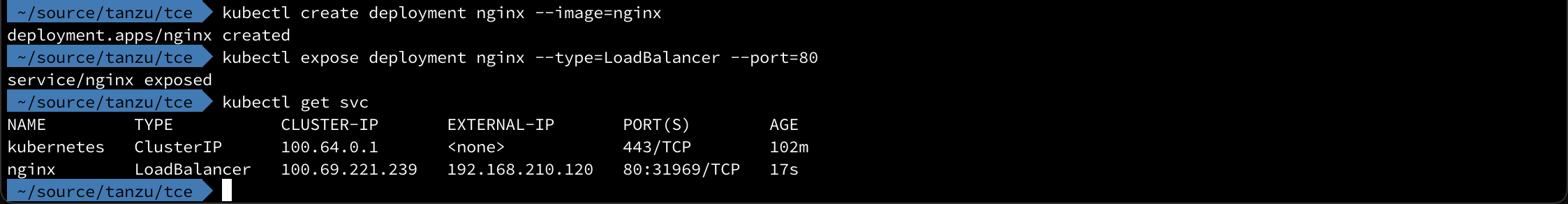

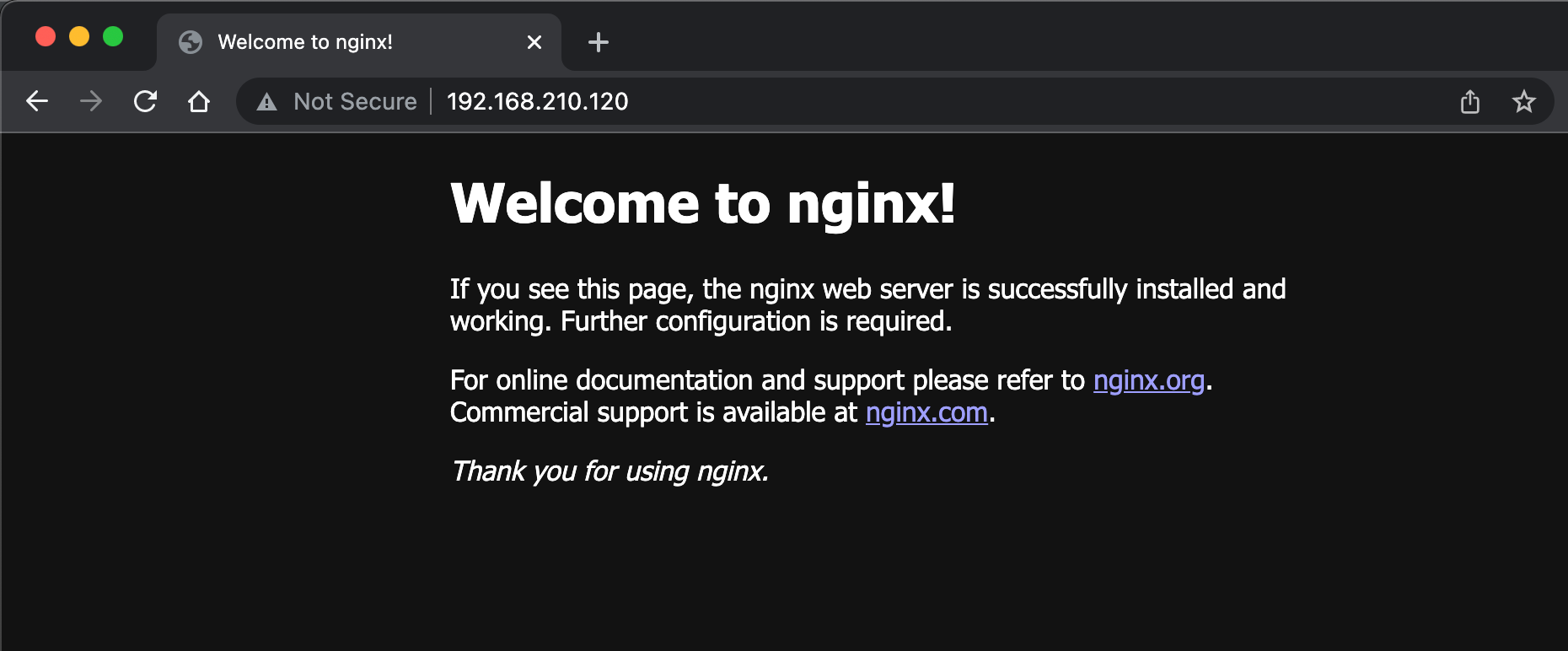

Let's create an nginx deployment and expose it with the `LoadBalancer` service type.

Note that since this might initiate the deployment of a virtual service, which in turn depends on one or more Service Engines, it might take some time before the IP is working.
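As an illustration, a deployment and service of this kind can be expressed with manifests along these lines. This is a sketch with assumed names (`nginx` for the deployment, service and label) and an assumed single replica, not necessarily the exact objects created for this post.

```yaml
# Hypothetical nginx Deployment with a single replica.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
---
# Service of type LoadBalancer; AKO watches for this type and
# requests a VIP and virtual service from the Avi controller.
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
    - port: 80
      targetPort: 80
```

Once applied, the service's external IP stays pending until Avi has allocated a VIP and the Service Engines are ready.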

Avi components

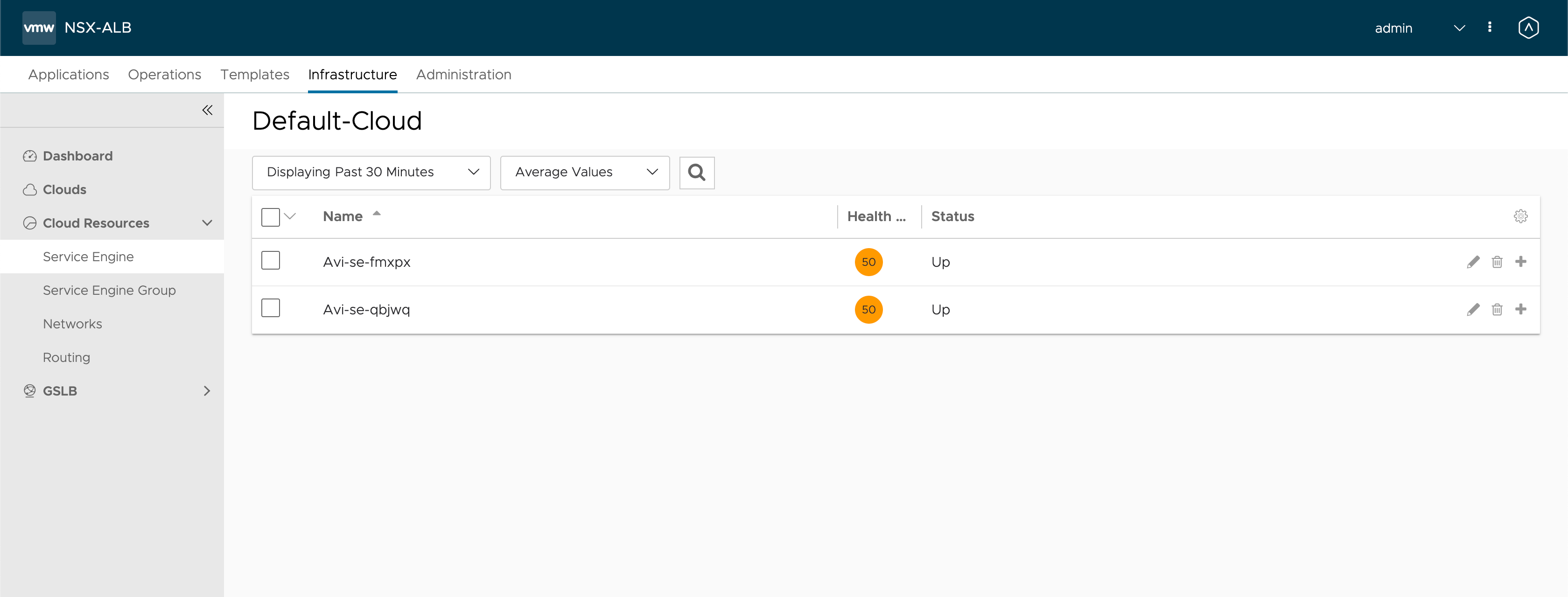

So what happened over in Avi?

Two Service Engines got deployed

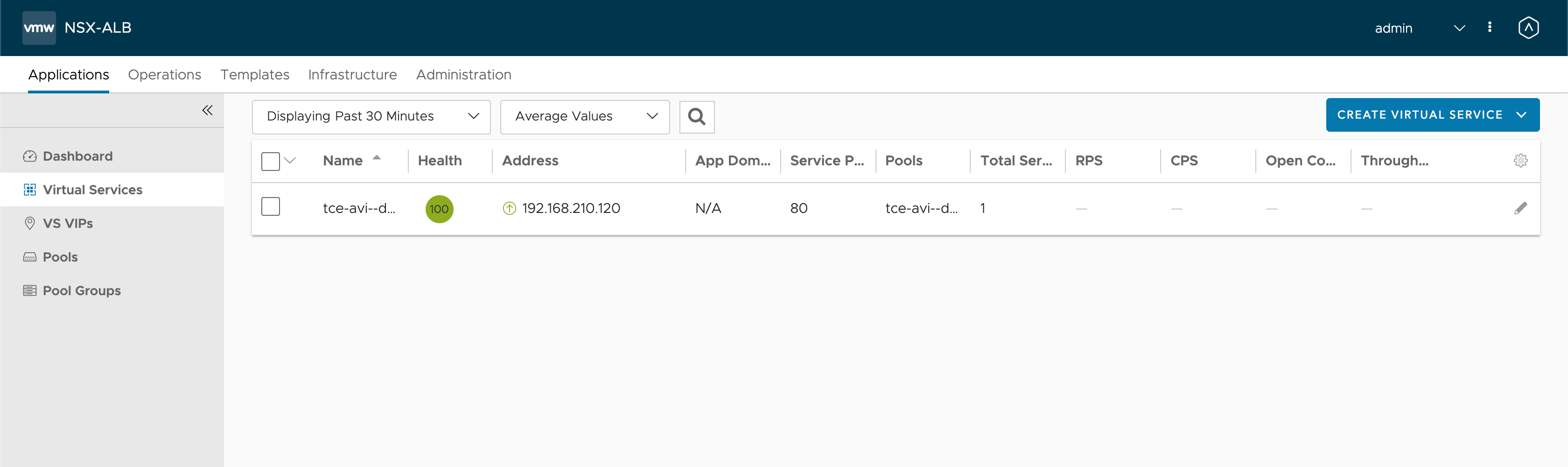

A virtual service pointing to our Load Balanced IP has been created

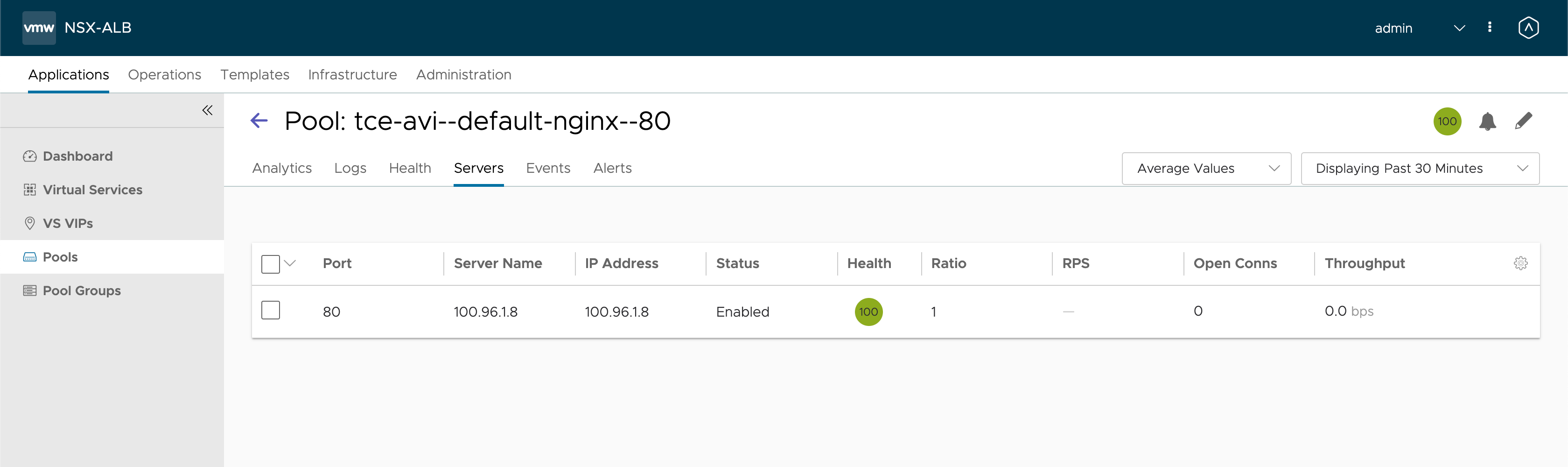

The virtual service uses a Server pool which points to our pod

Again, note that it might take some time before the components are up and running, and a bit longer still before the status shows as healthy.

Avi DNS Service

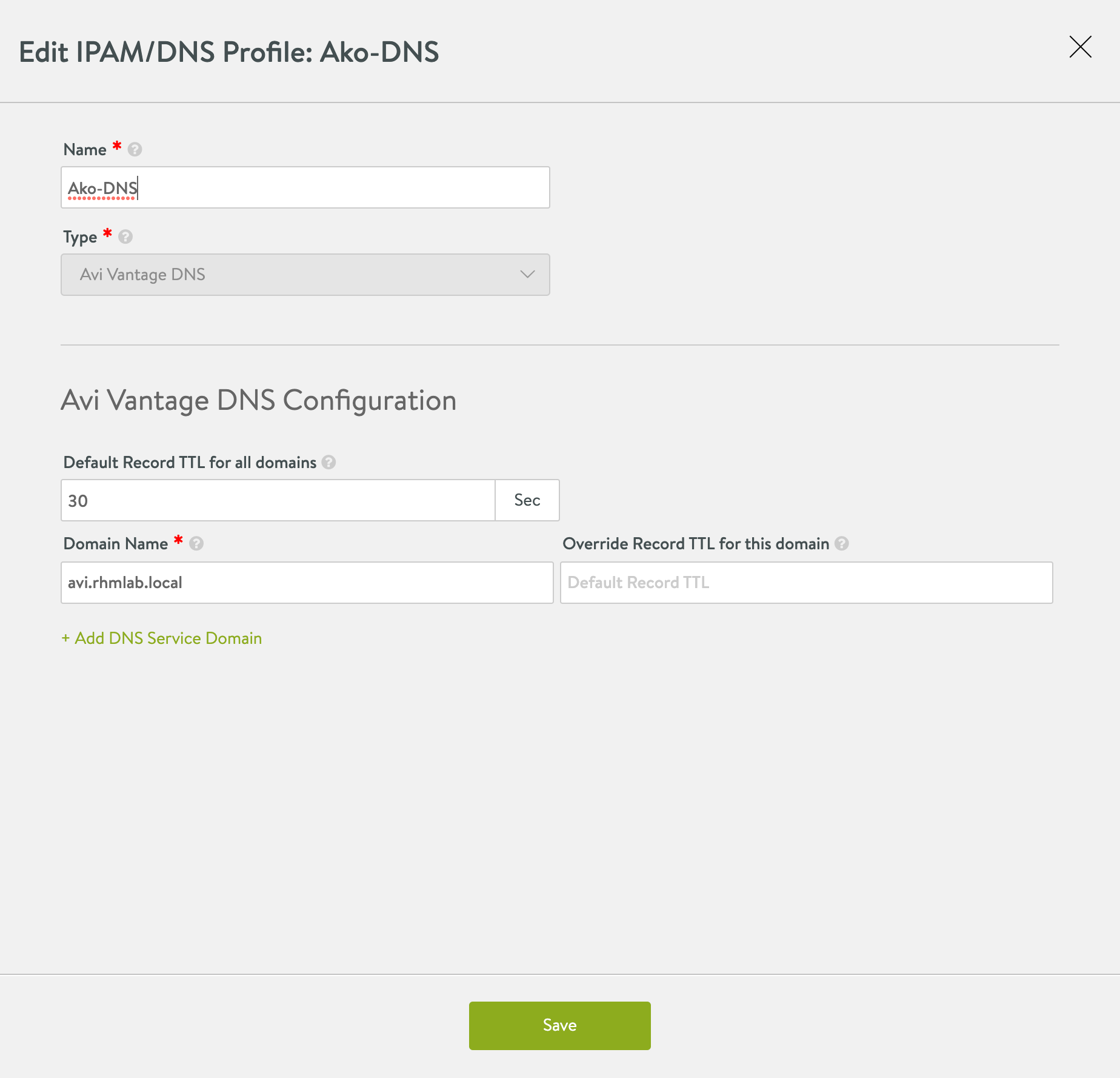

Avi comes with the ability to deliver DNS services for the virtual services it controls. The DNS service is configured with a DNS profile and a DNS virtual service.

The documentation specifies the steps needed.

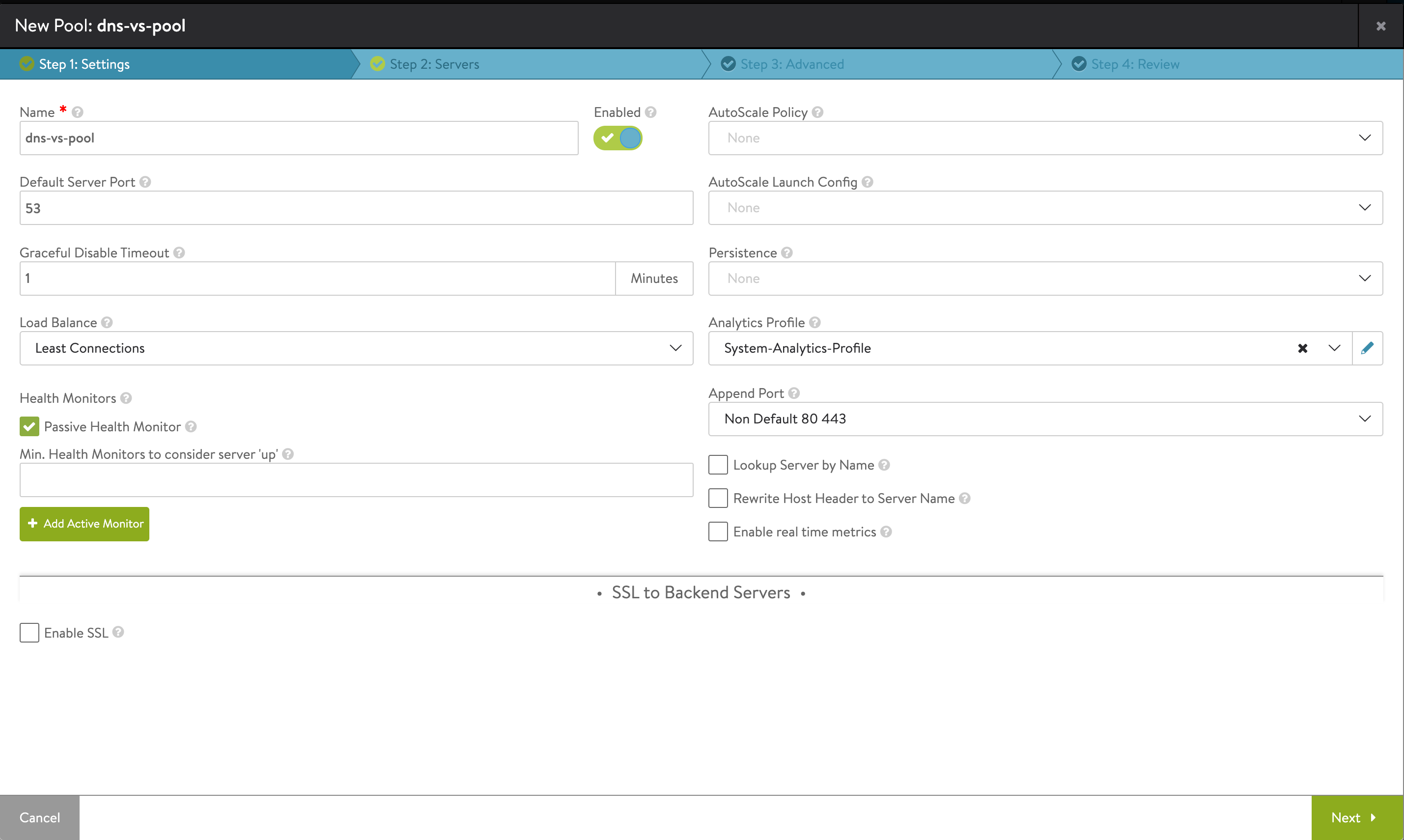

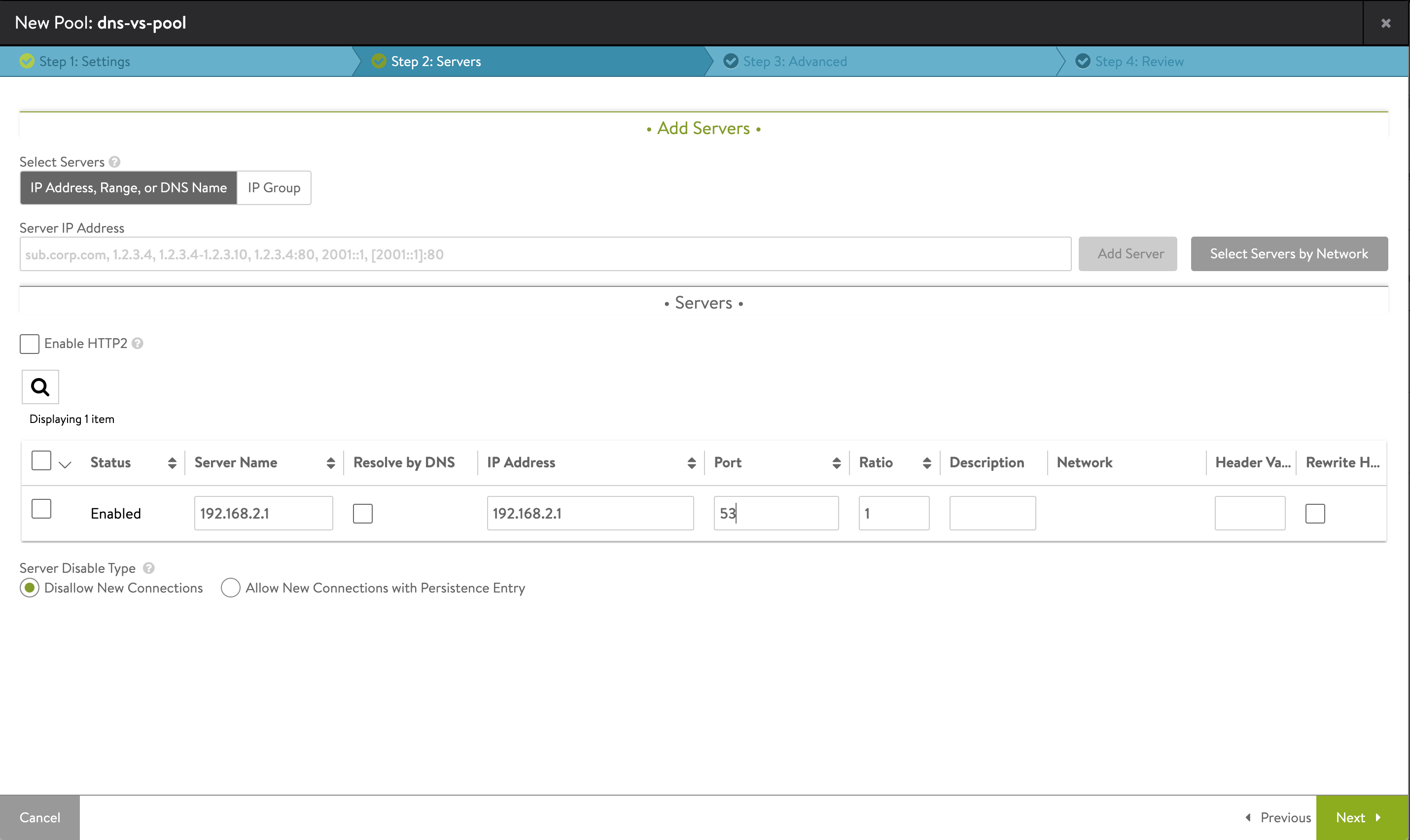

We need a DNS profile

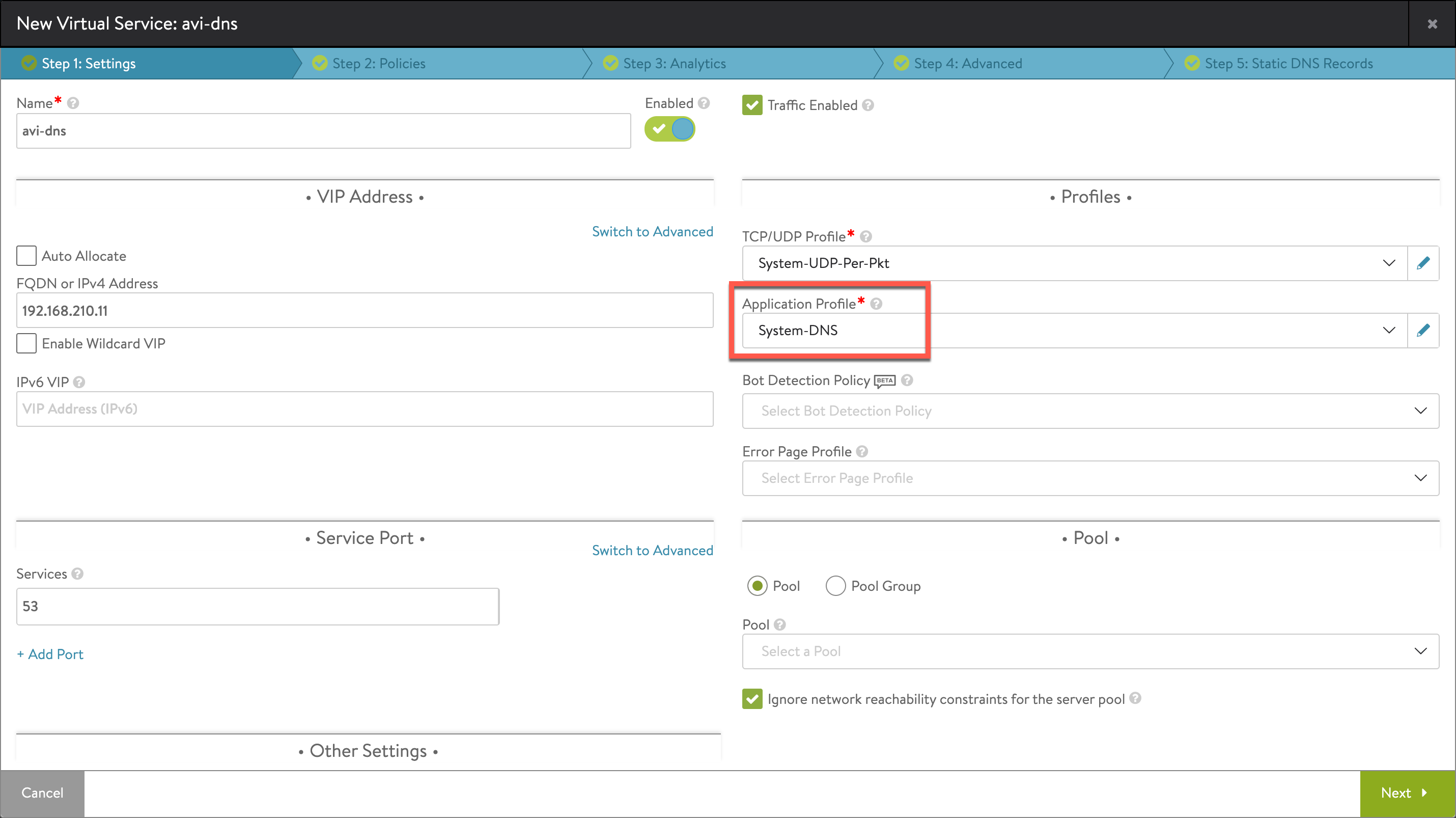

And a Virtual service

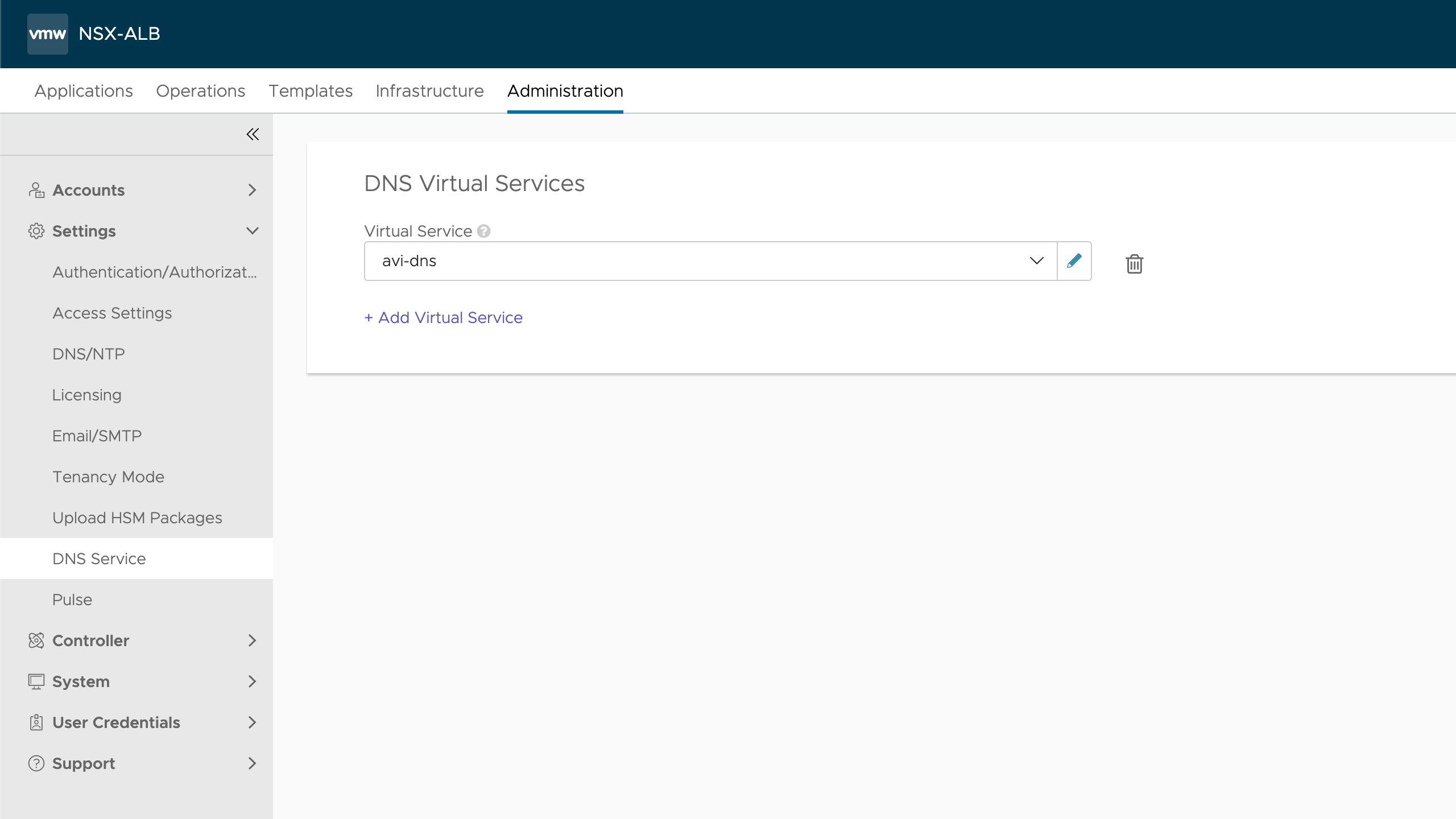

And activate the service in the Avi settings

Note that we do not have to specify servers for the DNS virtual service, but we could add the address of our "physical" DNS server so that queries for names Avi doesn't control will be routed there.

Server list

As a last step, we should also add a delegation on our normal DNS server for the DNS subdomain that we configure in Avi.

Ingress

Now let's take a look at the Ingress capabilities in AKO.

Ingress objects let us work with L7 and HTTP routes, but these require an Ingress controller to work. Luckily AKO delivers that capability.

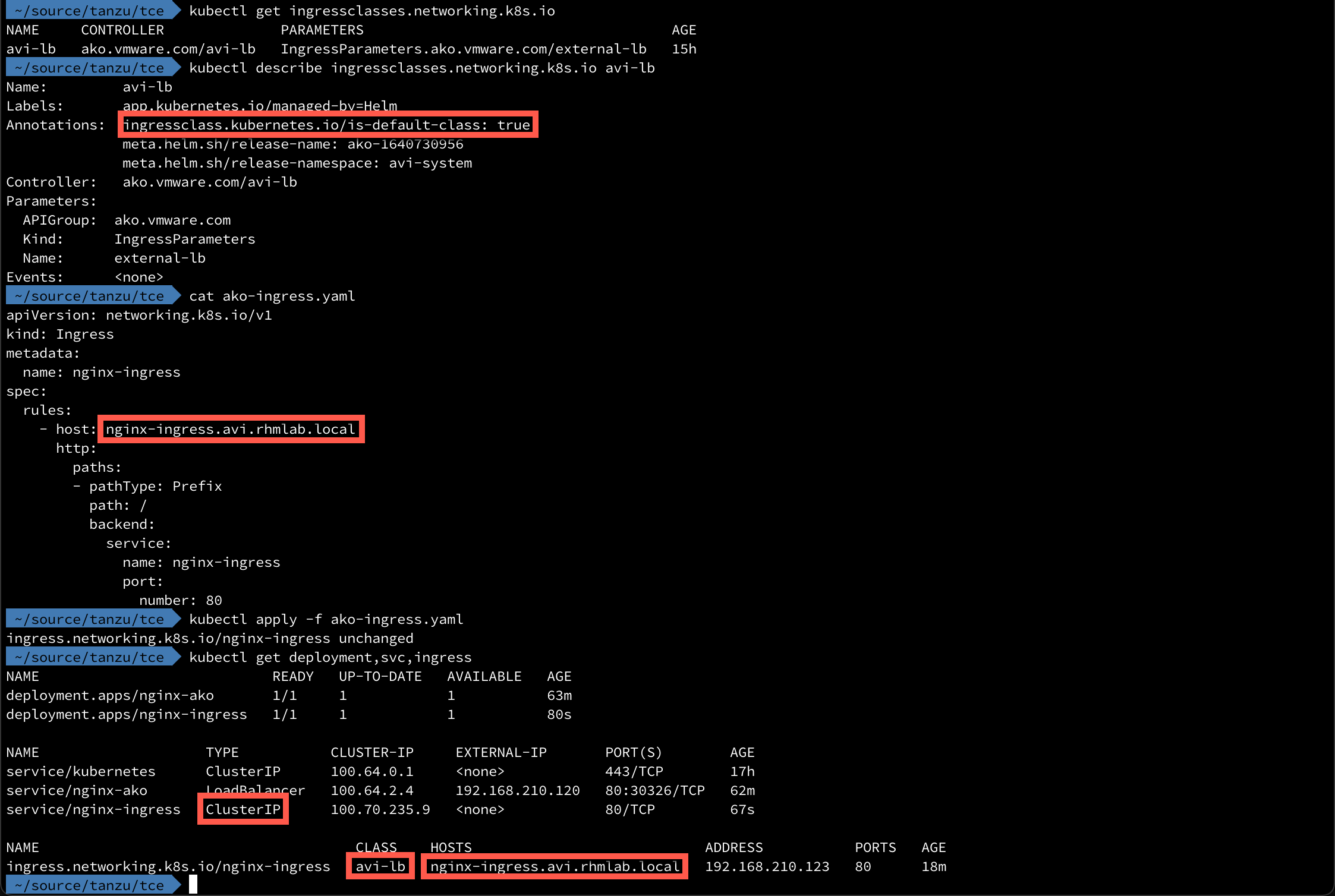

By default the AKO Helm installation creates an Ingress class and sets it as the default.
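For reference, a default Ingress class of this kind looks roughly like the manifest below. This is a sketch rather than output captured from the cluster: the name `avi-lb` is the class referred to later in this post, the controller string is written as I recall it from the AKO chart and should be treated as an assumption, and the annotation is the standard Kubernetes way of marking a class as the default.

```yaml
# Sketch of the IngressClass that the AKO Helm chart creates.
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: avi-lb
  annotations:
    # Standard Kubernetes annotation marking this class as the cluster default.
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  # Assumption: controller identifier as used by AKO; verify in your cluster.
  controller: ako.vmware.com/avi-lb
```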

To test the ingress, I have created a new deployment and exposed it as a service of type `ClusterIP`.

We'll examine the Ingress class and create a new Ingress object. Note that since `avi-lb` has been set as the default class, we don't have to specify it in our Ingress.
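A `ClusterIP` service for such a deployment could be sketched as follows. The service name `nginx-ingress` and port 80 match the backend referenced by the Ingress object in this post, while the `app: nginx-ingress` pod label is an assumption about how the deployment is labelled.

```yaml
# Hypothetical ClusterIP Service in front of the test deployment.
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
spec:
  type: ClusterIP
  selector:
    app: nginx-ingress   # assumption: label set on the deployment's pods
  ports:
    - port: 80
      targetPort: 80
```

Since the service is only reachable inside the cluster, the Ingress object is what makes it available from the outside.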

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
spec:
  rules:
    - host: nginx-ingress.avi.rhmlab.local
      http:
        paths:
          - pathType: Prefix
            path: /
            backend:
              service:
                name: nginx-ingress
                port:
                  number: 80
```

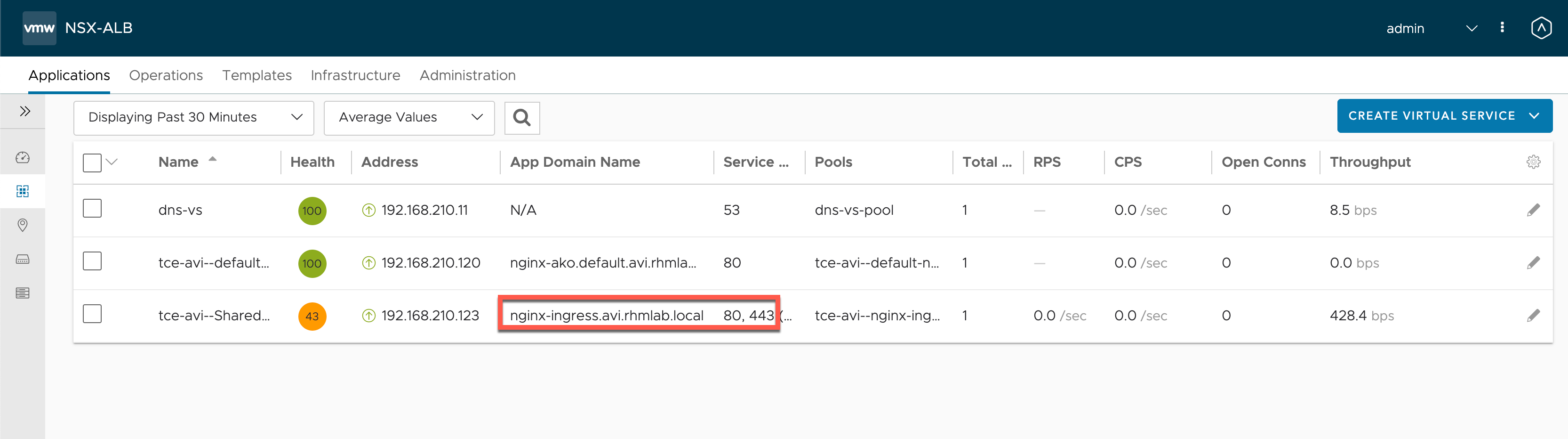

And after creating the Ingress object we can see that we have a new Ingress pointing to a specific hostname.

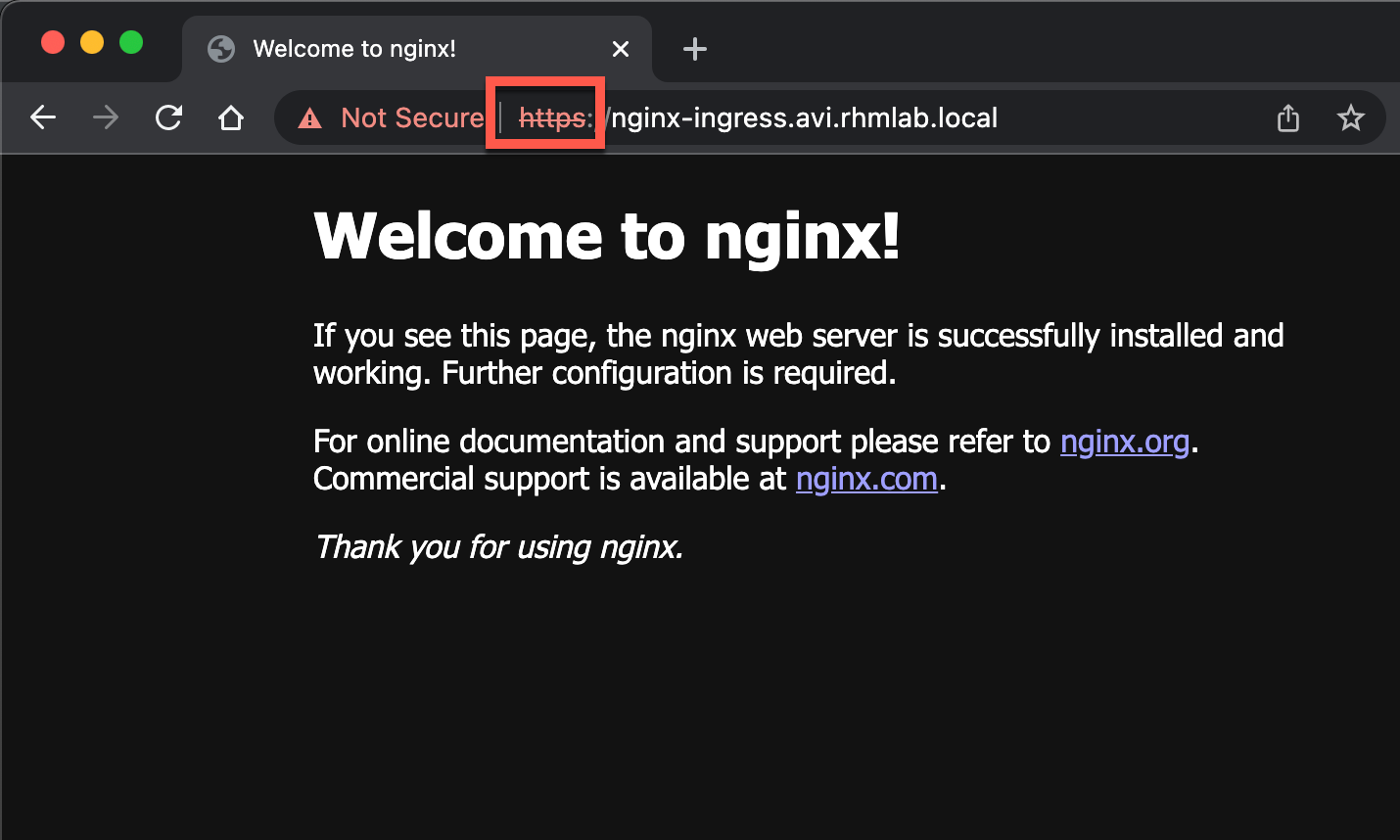

When we test the hostname in a browser, the page loads. Since I'm using Chrome, which tries HTTPS first, we can also see that Avi has created a virtual service that answers on both port 80 and port 443.

Let's see the resources in the Avi controller.

There's much more to Ingress than what we've covered here, but this shows at least some of the capabilities in Avi.

Summary

This post has shown how we can integrate the NSX Advanced Load Balancer into a Kubernetes cluster with the Avi Kubernetes Operator (AKO).

AKO also comes with Custom Resource Definitions (CRDs) for even more advanced capabilities and integration with Avi-specific features, but that's a topic for later. A blog worth checking out for a lot more detail than we've looked at in this post is sdefinitive.net. Another post with examples is this one from Robert Guske.

As we can see, Avi delivers lots of great capabilities to Kubernetes clusters, but of course this comes at a price, since the platform needs to be licensed with the Enterprise edition to use most of the features.

Thanks for reading!