TCE Cluster on vSphere

Update 2022-10-21: After just one year in the wild, VMware announced on Oct 21 2022 that they would no longer update or maintain the TCE project, and that the GitHub project would be removed by the end of 2022. For more information check out my blog post here.

Most of the things mentioned in this post (outside of installing TCE) should still be valid for other Tanzu Kubernetes distributions

Earlier this year VMware announced its open-source Kubernetes offering, Tanzu Community Edition (TCE), which is a full-featured Kubernetes platform. It's aimed at anyone who wants to get their hands dirty with an enterprise-grade Kubernetes platform, but since it has the same features as the commercial VMware Tanzu offering it can be used for much more than "just learning". Bear in mind though that it does not come with any official support and that there's (currently) no way to easily shift from TCE to the commercial offerings.

This will be a post on how to configure a TCE management cluster and a workload cluster on vSphere. There are lots of blog posts out there covering the same topic, so this is just as much a reference for myself and my lab environment, but if it can help others that's a great bonus.

A couple of good starting points I've used are listed below:

- https://tanzucommunityedition.io/docs/latest/

- https://williamlam.com/2021/10/introducing-vmware-tanzu-community-edition-tce-tanzu-kubernetes-for-everyone.html

Install TCE

TCE consists of the tanzu CLI and a set of plugins (we won't be touching the plugins in this post). There are also two "versions" that can be used, one with Managed clusters and one with Standalone clusters. The latter is still a bit experimental, and Standalone clusters are not meant to be long-running, so in this post we'll focus on the Managed cluster deployment method.

I'm installing the tanzu CLI on my Mac, and for that I've used the official documentation procedure.

Note that a prerequisite is that you already have kubectl and Docker Desktop installed.

Deploy TCE on vSphere

Lab environment

The environment we'll deploy TCE to is running on a single NUC where I've installed ESXi 7.0.2 as the hypervisor and on top of that I've deployed a vCenter server on version 7.0.3.

I'm going to use two different physical networks, each with its own VLAN ID.

- 192.168.200.0/24 - VLAN ID 200 - "Infra" network

- 192.168.210.0/24 - VLAN ID 210 - "TCE" network

The two networks need to be able to reach each other.

In addition I have a DNS server running on the 192.168.2.0/24 network.

Deploy Management cluster

There are quite a few prerequisites listed for deploying the Management cluster to vSphere. Note that I'm using a vCenter cluster in my setup, but you should be able to deploy directly to an ESXi host as well.

Preparations

The full preparation procedure is documented here, but in short we'll:

- Download an OVA with a prebuilt (by VMware) template that is used for the Kubernetes nodes

- Deploy the OVA to your vSphere environment and mark it as a template

- Create an SSH key pair on your local machine
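The last step in the list can be sketched as follows. This is a minimal example that assumes OpenSSH's `ssh-keygen` is available on the local machine; the key path and key comment are placeholders of my own choosing, not values taken from the TCE documentation.

```shell
# Hypothetical key location and comment; change them to suit your environment.
KEY_FILE="${KEY_FILE:-$HOME/.ssh/id_rsa_tce}"
KEY_COMMENT="${KEY_COMMENT:-tce-lab}"

# Generate a 4096-bit RSA key pair without a passphrase, unless one already exists.
mkdir -p "$(dirname "$KEY_FILE")"
if [ ! -f "$KEY_FILE" ]; then
  ssh-keygen -q -t rsa -b 4096 -C "$KEY_COMMENT" -f "$KEY_FILE" -N ""
fi

# Print the public half of the key pair so it can be copied into the cluster configuration.
cat "${KEY_FILE}.pub"
```

An empty passphrase keeps the example non-interactive; for anything beyond a lab you would probably want to set one.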

Deploy cluster

The deployment can be done either through the UI or with the tanzu CLI. If you want to use the CLI, all configuration parameters need to be prepared in advance, while the UI wizard guides us through the configuration (and also outputs a file to be used later on!).

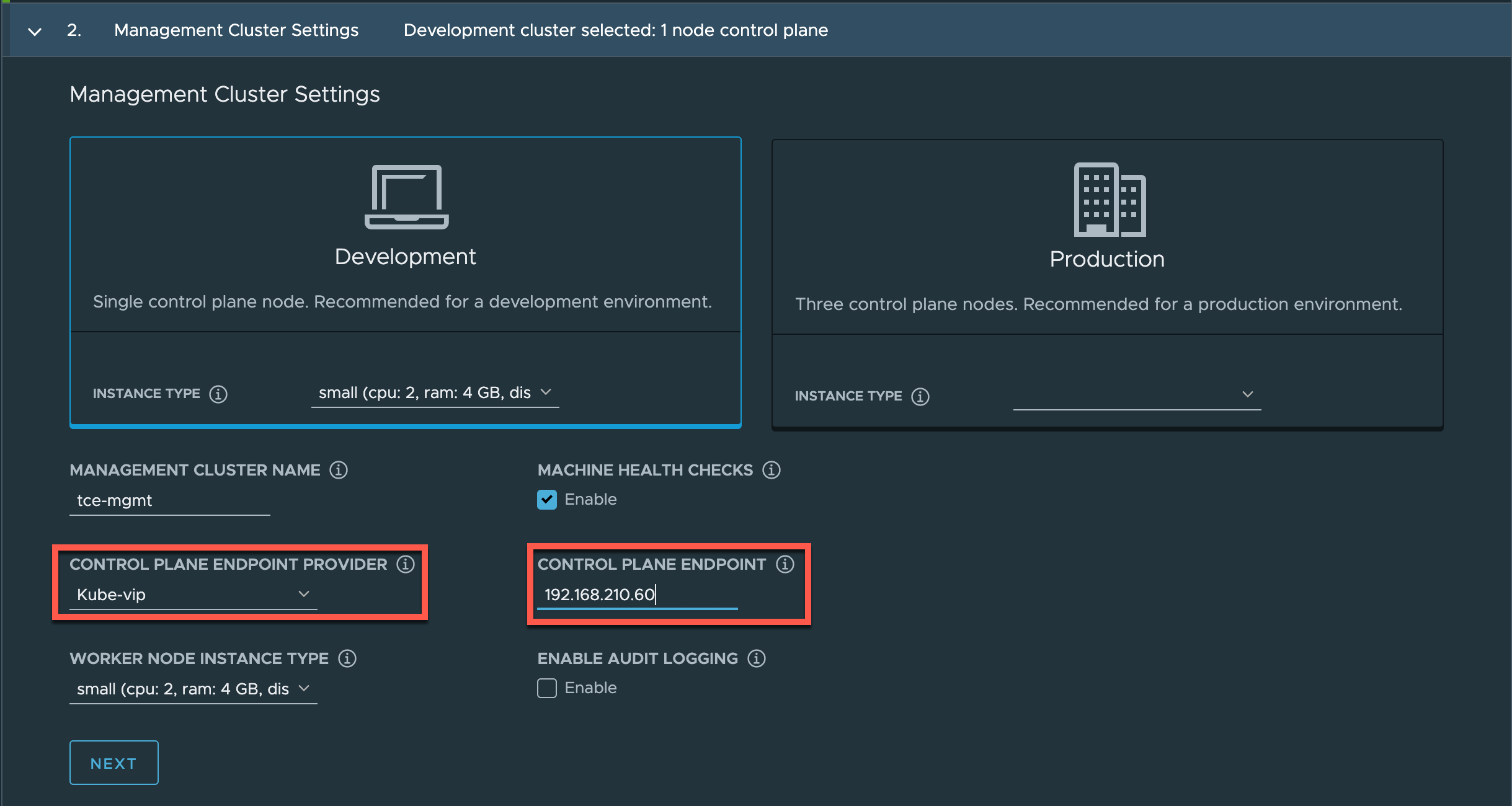

I won't list all of the configuration details, but to start off we have a couple of important things to configure: the Control plane endpoint provider and the Endpoint IP address.

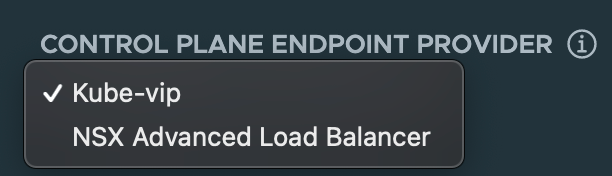

For the control plane endpoint provider we can choose between Kube-vip and NSX Advanced Load Balancer (Avi). I'm using kube-vip in this setup. Note that this will only serve as a load balancing/proxy endpoint for the Kubernetes control plane and not as a load balancer provider for Kubernetes services.

The IP we configure here will be the IP that we use to connect to the management cluster later on.

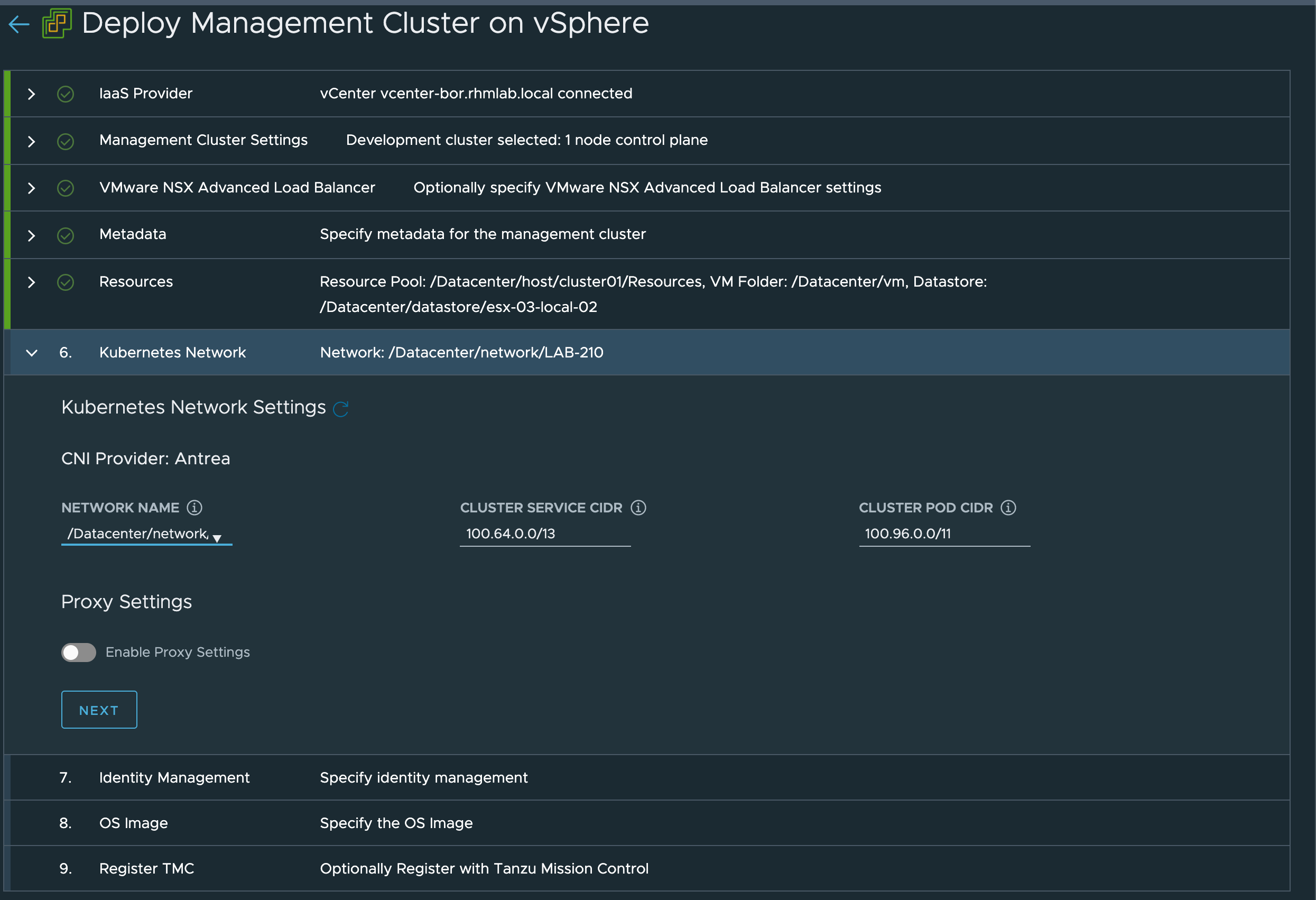

The wizard also lets us select the cluster, datastore and folder to use for our resources.

In the Network settings we select the port group for the network we want to use (VLAN 210 in my setup) and leave the defaults for the Service and Pod CIDRs.

After completing the wizard we can deploy the cluster directly or, if we prefer, the UI shows us how to deploy through the CLI by pointing to a YAML file with our configuration options. It's a good idea to keep a copy of this file as we're going to make use of it later on when we deploy workload clusters.

The command for deploying a management cluster will be:

tanzu management-cluster create --file <path-to-config-file> -v 6

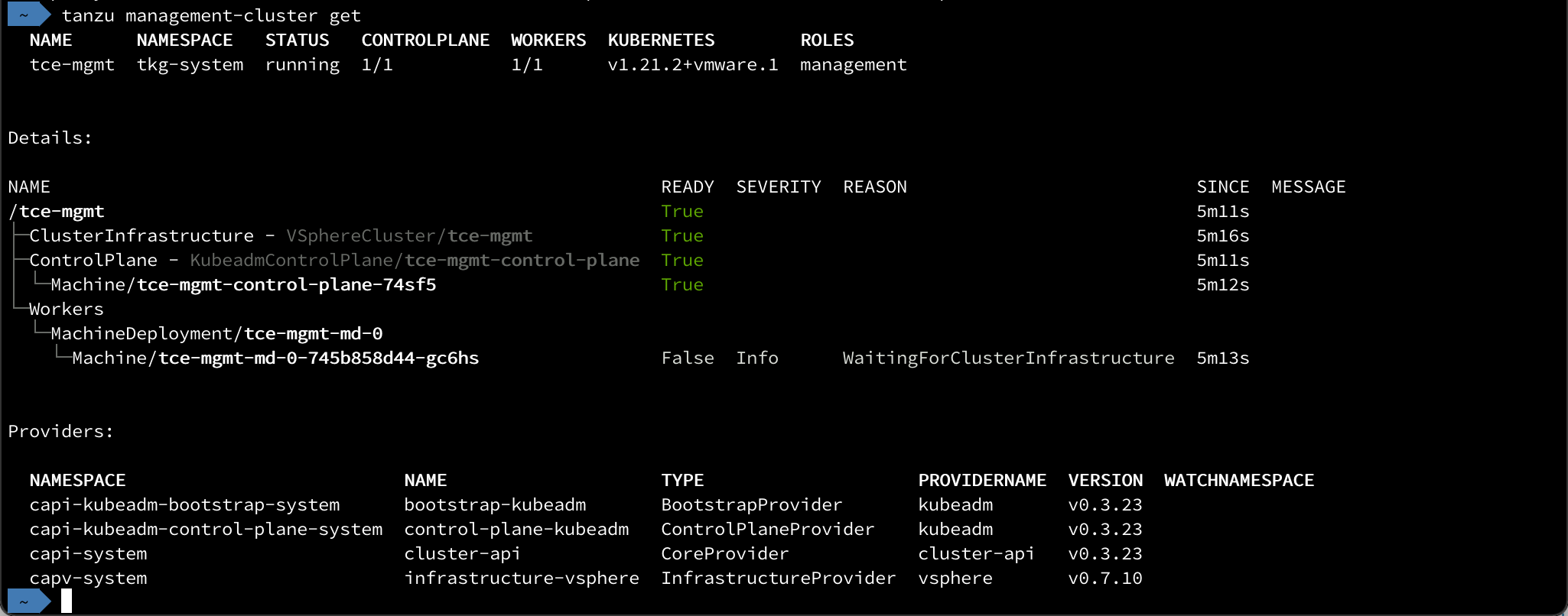

After the deployment finishes we can verify the cluster by using the tanzu CLI:

tanzu management-cluster get

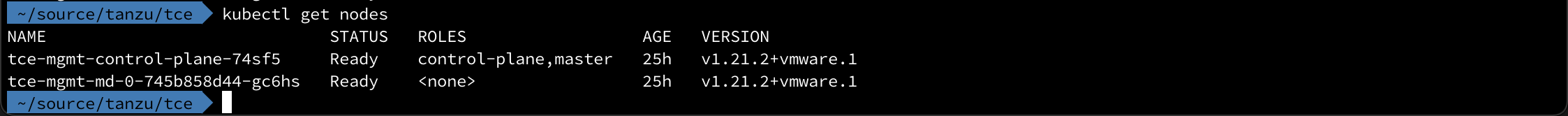

After setting our kubectl context we can also check the Kubernetes nodes with kubectl.
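Setting the context for the management cluster could look roughly like the sketch below. It assumes the admin context follows the same `<NAME>-admin@<NAME>` naming pattern that is used for workload clusters later in this post; the function names and the cluster name `tce-mgmt` are hypothetical.

```shell
# Build the name of the admin context for a given cluster name.
admin_context() {
  printf '%s-admin@%s\n' "$1" "$1"
}

# Fetch the admin kubeconfig for the management cluster, switch to it
# and list the nodes.
use_mgmt_cluster() {
  mgmt_name="$1"
  tanzu management-cluster kubeconfig get --admin
  kubectl config use-context "$(admin_context "$mgmt_name")"
  kubectl get nodes -o wide
}

# Usage (tce-mgmt is a hypothetical cluster name):
#   use_mgmt_cluster tce-mgmt
```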

Deploy Workload cluster

Preparations

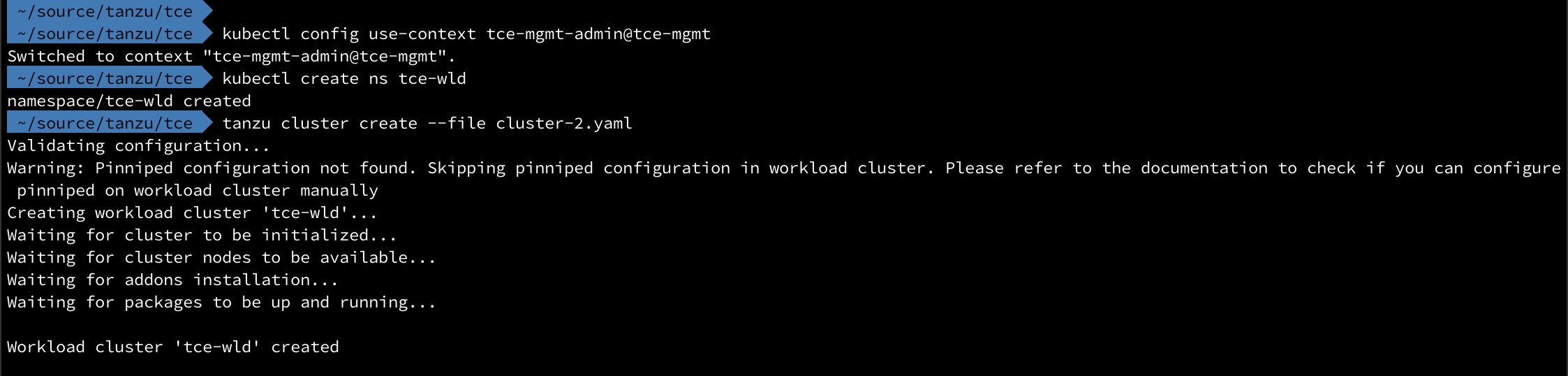

Before deploying a workload cluster we need to prepare a config file with the details for the cluster. The easiest way to create this is to make a copy of the file used/created for the management cluster since a lot of the settings will be the same.

Be sure to change the parameters for the cluster name, CLUSTER_NAME, and the control plane endpoint, VSPHERE_CONTROL_PLANE_ENDPOINT. Optionally we can also change the number of nodes, e.g. WORKER_MACHINE_COUNT for the worker node count, and the namespace, NAMESPACE. Note that the namespace we set in this parameter needs to exist in the management cluster before deploying the workload cluster.
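As a rough sketch, the copy-and-edit step can be scripted with `sed`. This assumes the config file is a flat list of `KEY: value` lines with the keys at the start of the line; the function name, file names, cluster name and IP address are hypothetical examples.

```shell
# Write a workload cluster config derived from the management cluster config.
# Only lines that already exist in the source file are rewritten; the source
# file itself is left untouched.
# Args: <source-config> <target-config> <cluster-name> <control-plane-endpoint-ip>
make_workload_config() {
  src="$1"; dst="$2"; name="$3"; endpoint="$4"
  sed -e "s|^CLUSTER_NAME:.*|CLUSTER_NAME: ${name}|" \
      -e "s|^VSPHERE_CONTROL_PLANE_ENDPOINT:.*|VSPHERE_CONTROL_PLANE_ENDPOINT: ${endpoint}|" \
      "$src" > "$dst"
}

# Usage (all values are hypothetical):
#   make_workload_config mgmt-cluster.yaml workload-cluster.yaml tce-wld-01 192.168.210.21
```

If NAMESPACE is set to a namespace that does not exist yet, running `kubectl create namespace <NAMESPACE>` against the management cluster context beforehand takes care of that requirement.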

Deploy cluster

There's no UI for deploying the workload cluster, so we're left with the tanzu CLI.

The syntax for deploying the cluster is the following:

tanzu cluster create --file <CONFIG-FILE>

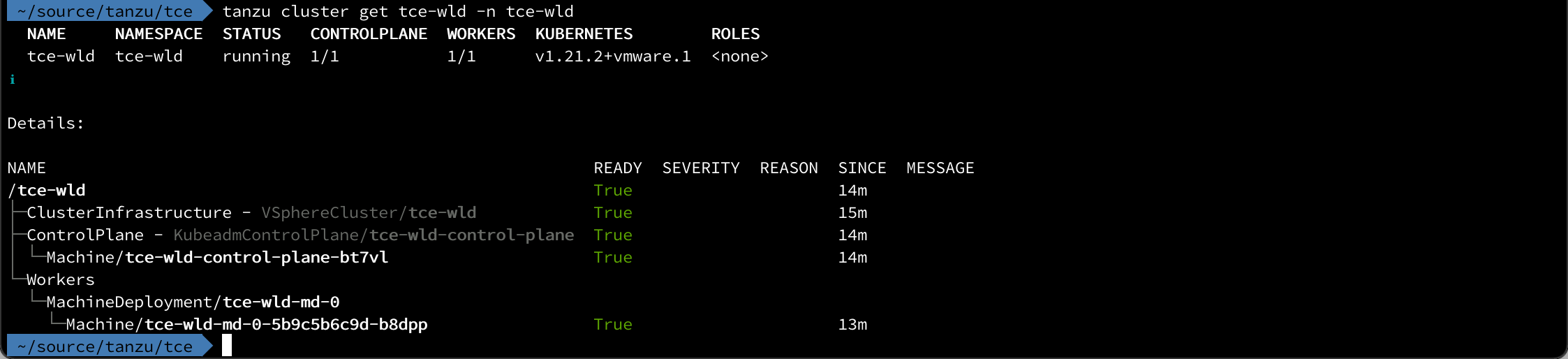

After the workload cluster has been deployed we can view it through the tanzu CLI. If you've deployed to a specific namespace, make sure to specify that.
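A small sketch of what viewing the cluster could look like, wrapped in a helper so the namespace is passed consistently. The function name, the cluster name `tce-wld-01` and the namespace `dev` are hypothetical.

```shell
# List the workload clusters in a namespace and show details for one of them.
# Args: <cluster-name> [namespace]  (namespace falls back to "default")
show_workload_cluster() {
  name="$1"; ns="${2:-default}"
  tanzu cluster list --namespace "$ns"
  tanzu cluster get "$name" --namespace "$ns"
}

# Usage (hypothetical names):
#   show_workload_cluster tce-wld-01 dev
```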

Set kubectl context

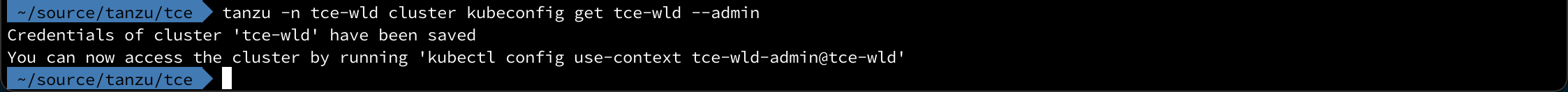

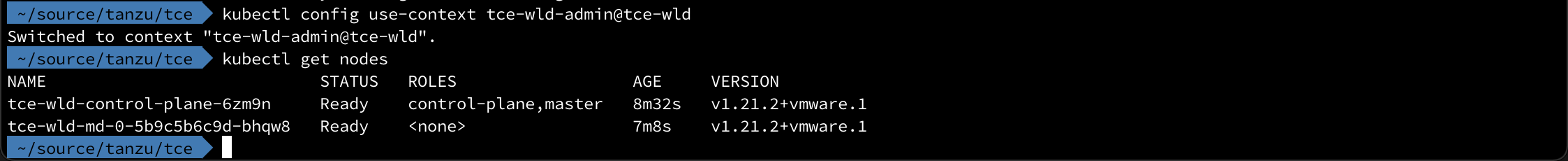

To be able to administer the Kubernetes resources in the workload cluster we need to set our kubectl context to the TCE workload cluster, as this is not done automatically.

First capture the workload cluster's kubeconfig:

tanzu cluster kubeconfig get <WORKLOAD-CLUSTER-NAME> --admin

And then use it in kubectl:

kubectl config use-context <WORKLOAD-CLUSTER-NAME>-admin@<WORKLOAD-CLUSTER-NAME>

Now we can use kubectl to check out our Kubernetes nodes.

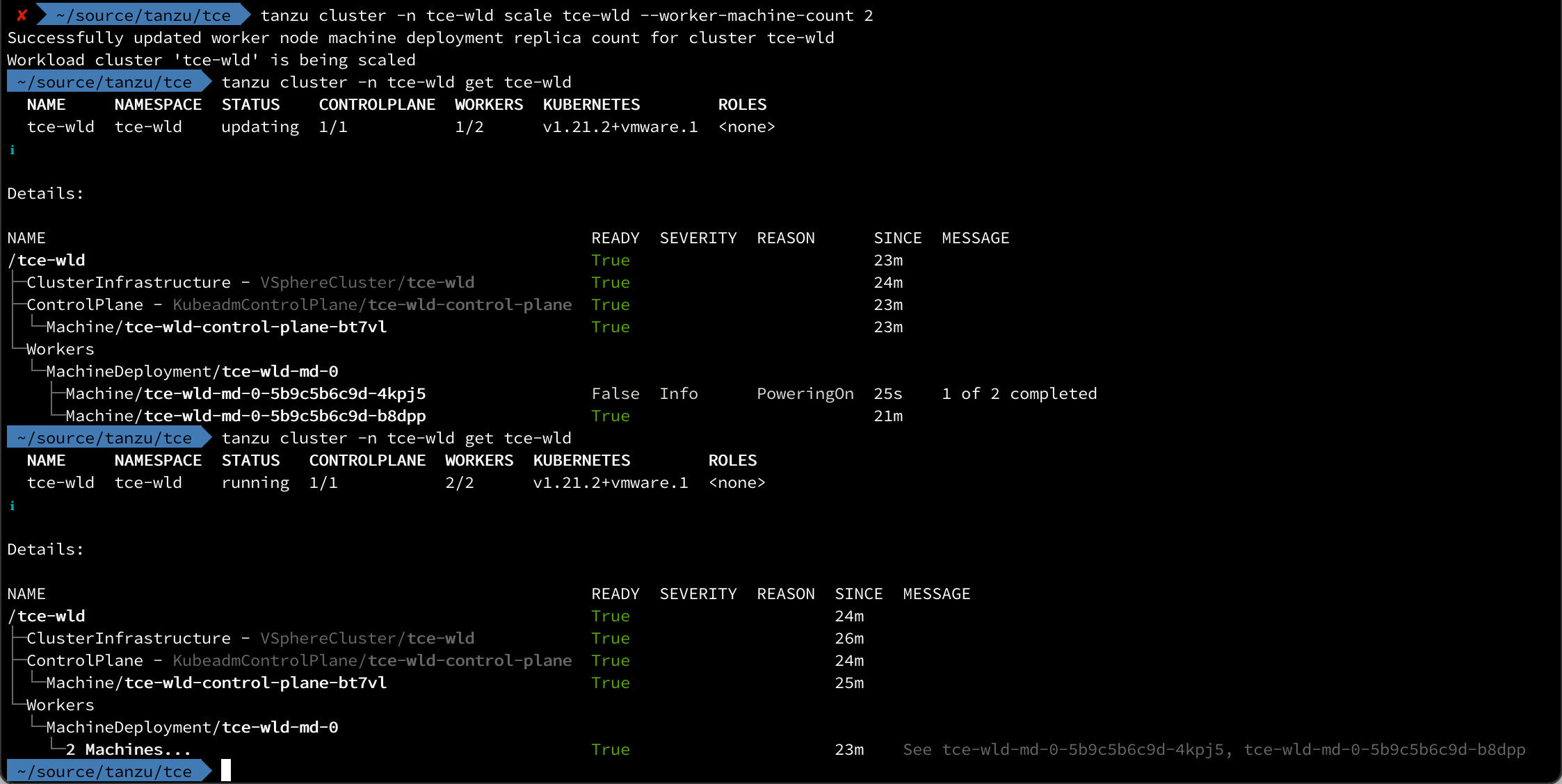

Scale clusters

We can also use the tanzu CLI to scale the number of both control plane and worker nodes:

tanzu cluster scale <CLUSTER-NAME> --worker-machine-count <NUMBER-OF-NODES> --controlplane-machine-count <NUMBER-OF-NODES>

After running the command, the Cluster API provider will deploy additional nodes in vSphere, and after a short while they will be ready to use and part of the cluster.

Delete cluster

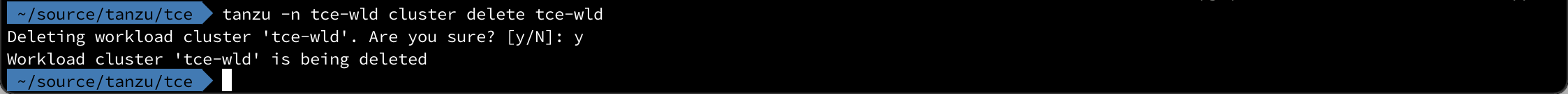

Delete workload cluster

Deleting a workload cluster is done with the tanzu cluster delete command. Note that you might need to remove some of the services and volumes first, if any have been configured.
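One way to approach this is sketched below: list the Services and PersistentVolumeClaims in the workload cluster first, clean up what needs cleaning, and only then delete the cluster. It assumes the workload cluster's admin kubeconfig has already been fetched so that the `<NAME>-admin@<NAME>` context exists; the function names and example names are hypothetical.

```shell
# List Services and PersistentVolumeClaims in the workload cluster so that
# load balancers and volumes can be reviewed before the cluster is deleted.
list_cluster_leftovers() {
  name="$1"
  ctx="${name}-admin@${name}"
  kubectl --context "$ctx" get services --all-namespaces
  kubectl --context "$ctx" get persistentvolumeclaims --all-namespaces
}

# Delete the workload cluster; the tanzu CLI asks for confirmation first.
# Args: <cluster-name> [namespace]  (namespace falls back to "default")
delete_workload_cluster() {
  name="$1"; ns="${2:-default}"
  tanzu cluster delete "$name" --namespace "$ns"
}

# Usage (hypothetical names):
#   list_cluster_leftovers tce-wld-01
#   delete_workload_cluster tce-wld-01 dev
```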

Delete management cluster

Deleting a management cluster is done with the tanzu management-cluster delete command. The deletion is done with a temporary kind cluster that will be deployed on your machine.

For more information refer to the TCE documentation.

Summary

TCE is a great platform both for learning Kubernetes and for getting more hands-on experience with the Tanzu platform. Although I'm using vSphere as the infrastructure provider in this setup, I could have used Docker instead, eliminating the need for any infrastructure other than my laptop.