Using MetalLB as a load balancer in a Kubernetes cluster

Update 2022-10-21: After just one year in the wild, VMware announced on Oct 21, 2022 that it would no longer update or maintain the TCE project and that the GitHub project would be removed by the end of 2022. For more information, check out my blog post here

Most of the things mentioned in this post (outside of installing TCE) should still be valid for other Kubernetes distributions.

This will be a short write-up on how to deploy MetalLB as a load balancer for Kubernetes.

Kubernetes doesn't ship a built-in implementation of the LoadBalancer service type, which leaves us with NodePort services and externalIPs for external access. A load balancer is essential in a Kubernetes cluster, and getting one out of the box is one of the advantages of selecting a Kubernetes service from one of the public cloud providers such as AWS, Azure or GCP.

In a previous post I described how I deployed TCE on vSphere. Although it comes with support for a load balancer, that support is based on the NSX Advanced Load Balancer (Avi), which is something you might not have access to. The control plane endpoints in my clusters are provided by kube-vip, which is also an alternative for providing LoadBalancer services.

In this post I'll make use of MetalLB as a load balancer in a TCE workload cluster and test provisioning of Kubernetes services of type LoadBalancer.

Prerequisites

There are a few prerequisites for MetalLB which you should take a look at before installing. One of them is the network provider (CNI).

Most CNIs should work, but not all are tested. TCE uses Antrea, which is not tested by MetalLB, but in my use cases I have not seen any issues.

Installation

The installation procedure is documented here

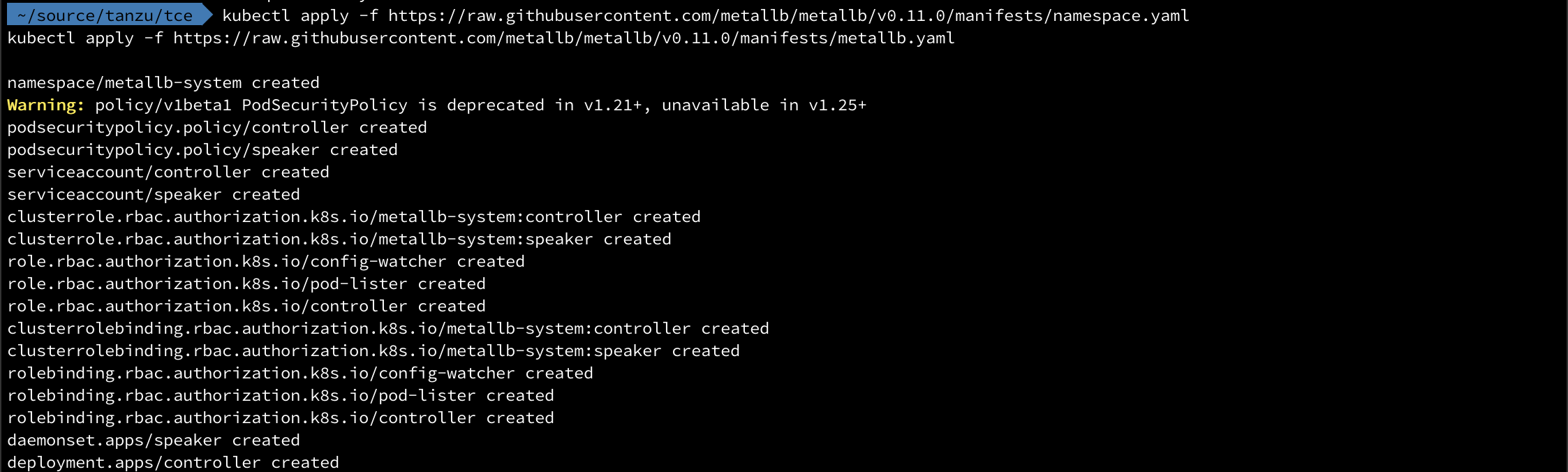

I'm using manifest files for the deployment, but there are procedures for Kustomize and Helm as well.

The following are the files for v0.11.0

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.11.0/manifests/namespace.yaml
kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.11.0/manifests/metallb.yaml
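To confirm that the manifests have rolled out before moving on, something like the following sketch could be used. It assumes the v0.11.0 manifests create a Deployment named controller and a DaemonSet named speaker in the metallb-system namespace; the function name and the 120 second timeout are just illustrative choices.

```shell
# Wait for the MetalLB components to finish rolling out, then list the pods.
# Assumption: the v0.11.0 manifests create deployment/controller and
# daemonset/speaker in the metallb-system namespace.
wait_for_metallb() {
  kubectl -n metallb-system rollout status deployment/controller --timeout=120s &&
  kubectl -n metallb-system rollout status daemonset/speaker --timeout=120s &&
  kubectl -n metallb-system get pods -o wide
}

# Usage: wait_for_metallb
```

If one of the rollouts does not complete within the timeout, the function stops at that step and returns a non-zero exit code.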

Configuration

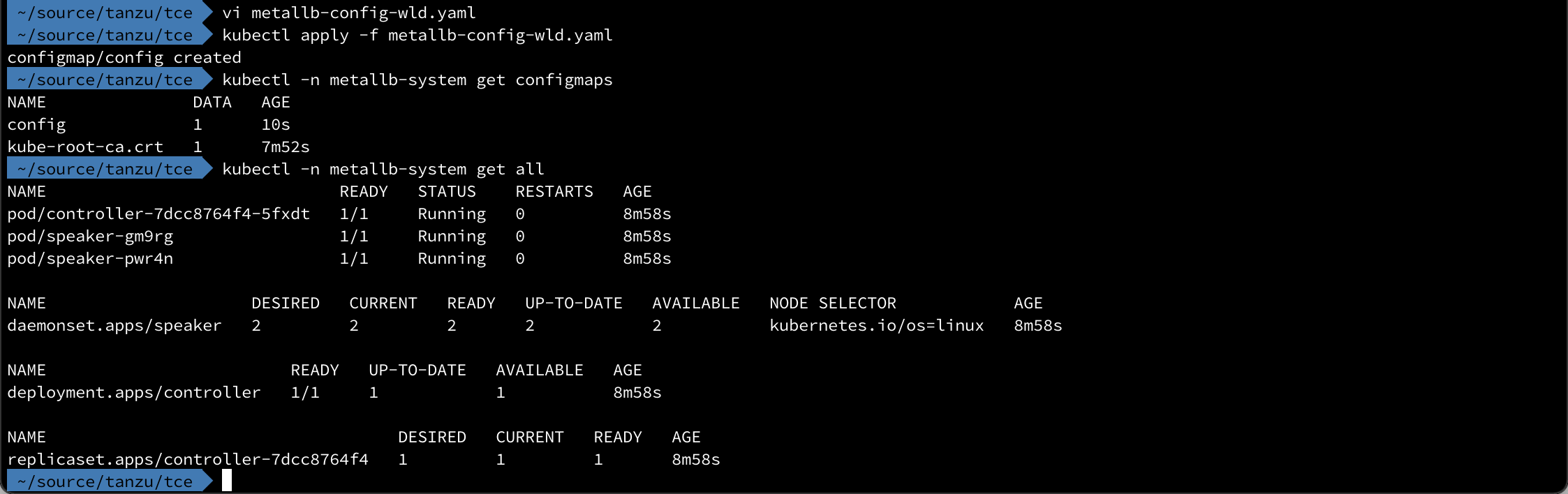

After the deployment, MetalLB will remain idle until configured. The configuration is done through a ConfigMap in the same namespace that MetalLB was deployed in.

An example config file can be found here

There are lots of possible configurations, e.g. BGP or limiting MetalLB to certain nodes, but I'll create a simple Layer 2 config with a range of addresses to be used as LoadBalancer IPs

apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.210.110-192.168.210.119
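Since every LoadBalancer service consumes one address from the pool, it can be useful to know how many addresses a range actually contains. The following is a small sketch in plain shell arithmetic (no MetalLB tooling involved); the helper names ip_to_int, pool_size and ip_in_pool are made up for this example, and it only handles IPv4 ranges written as first-last.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Count the addresses in an inclusive "first-last" range.
pool_size() {
  first=${1%-*}
  last=${1#*-}
  echo $(( $(ip_to_int "$last") - $(ip_to_int "$first") + 1 ))
}

# Succeed if the address in $1 lies inside the "first-last" range in $2.
ip_in_pool() {
  ip=$(ip_to_int "$1")
  lo=$(ip_to_int "${2%-*}")
  hi=$(ip_to_int "${2#*-}")
  [ "$ip" -ge "$lo" ] && [ "$ip" -le "$hi" ]
}

pool_size 192.168.210.110-192.168.210.119   # prints 10
```

With the range used in this post, that leaves room for ten LoadBalancer services. ip_in_pool can be used in the same way to check whether a given address belongs to the pool.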

The ConfigMap is created with a kubectl command, and after a short while the MetalLB pods should be running

kubectl apply -f <file>
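To double-check what ended up in the cluster, the configuration can be read back from the ConfigMap. This is a minimal sketch assuming the ConfigMap is named config and lives in metallb-system as in the manifest above; the function names are only illustrative, and the controller logs are included because they may help if the configuration does not seem to be picked up.

```shell
# Print the MetalLB configuration as it is stored in the cluster.
# Assumption: ConfigMap "config" in the metallb-system namespace.
show_metallb_config() {
  kubectl -n metallb-system get configmap config -o jsonpath='{.data.config}'
}

# Show the most recent log lines from the MetalLB controller.
show_controller_logs() {
  kubectl -n metallb-system logs deployment/controller --tail=20
}

# Usage: show_metallb_config; show_controller_logs
```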

Test LoadBalancer service

Now let's test the LoadBalancer service type.

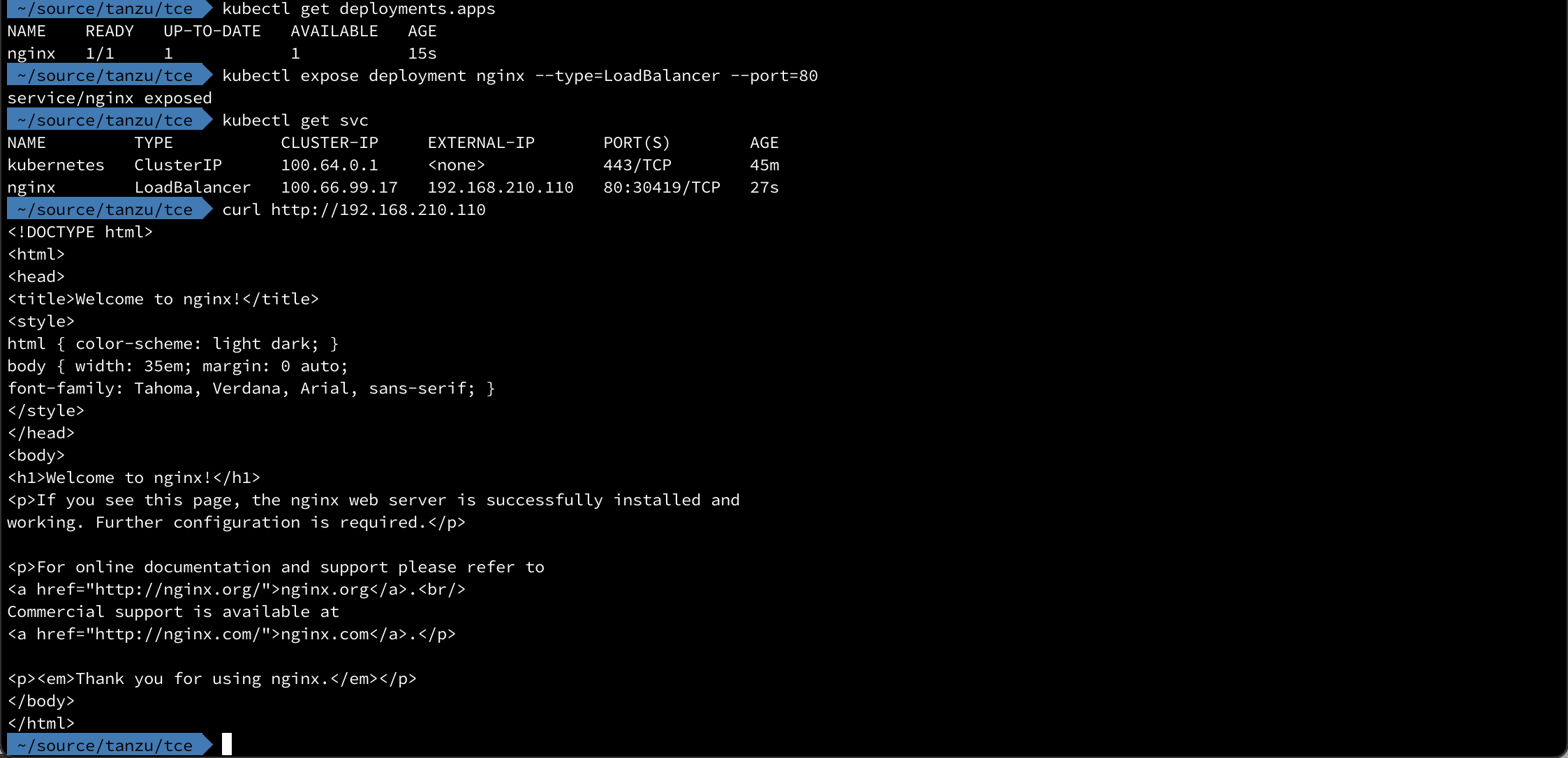

First we'll deploy an nginx instance as a deployment, and then expose the deployment through a LoadBalancer service

kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --type=LoadBalancer --port=80

Note that the External IP has been picked from the range we specified in the MetalLB ConfigMap

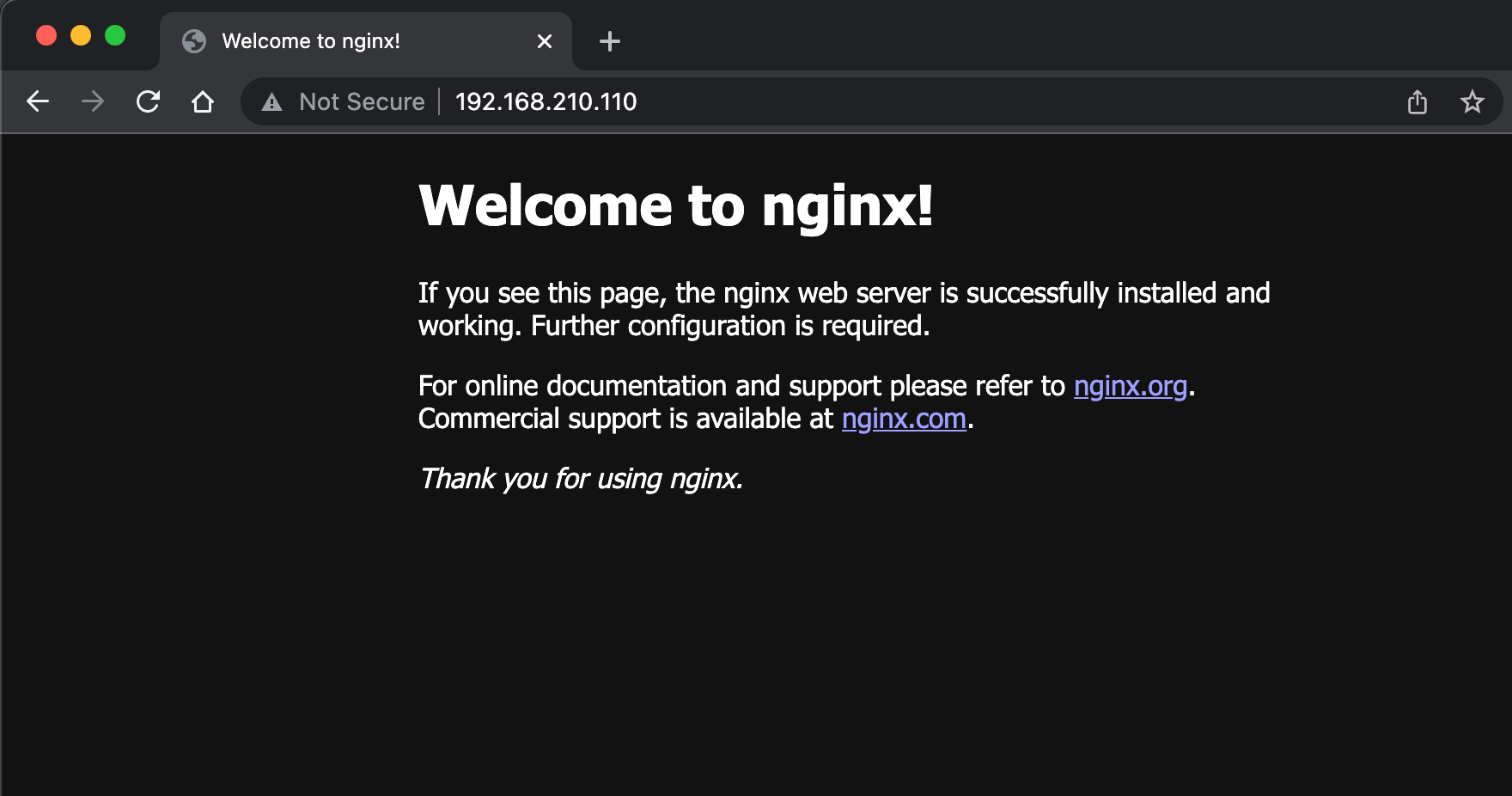

We can also verify the IP by pointing a web browser to it
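The same check can be done from the command line. The sketch below assumes the service is named nginx (as created above) in the current namespace and that curl is available on the machine you run it from; the function names are made up for this example.

```shell
# Print the external IP assigned to a Service (defaults to "nginx").
service_external_ip() {
  kubectl get service "${1:-nginx}" \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Request the front page of the given address and print only the HTTP status code.
check_http() {
  curl -s -o /dev/null -w '%{http_code}\n' --max-time 5 "http://$1/"
}

# Usage: check_http "$(service_external_ip nginx)"
```

If no address has been assigned yet, service_external_ip prints nothing, which usually means the service is still pending.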

Summary

MetalLB is an easy way to implement support for the LoadBalancer service type in a Kubernetes cluster. It integrates nicely with most networking setups and aims to "just work".