CKA Study notes - Pod Scheduling - 2024 edition

Overview

This post is part of my preparations towards the Certified Kubernetes Administrator exam. I have also done a post on Daemon Sets, Replica Sets and Deployments which we normally use for deploying workloads.

This is an updated version of a similar post I created around three years ago.

In this post we'll take a look at Pod scheduling and how we can work with the Kubernetes scheduler and decide how and where Pods are scheduled. This can also be incorporated in Daemon Sets, Replica Sets and Deployments, which is how you normally deploy stuff. I'll keep it easy and just schedule Pods in these examples.

Note #1: All documentation references are pointing to version 1.28 as this is the current version of the CKA exam (as of Jan 2024).

Note #2: This is a post covering my study notes preparing for the CKA exam and reflects my understanding of the topic, and what I have focused on during my preparations.

Kubernetes Scheduler

Kubernetes Documentation reference

First let's take a quick look at how Kubernetes schedules workloads.

The scheduler does not work with individual containers directly. The smallest entity Kubernetes manages is a Pod, which contains one or more containers.

To decide which Node a Pod will run on, Kubernetes works through several decisions, which can be divided into two steps:

- Filtering

- Scoring

Filtering

The first operation is to filter which Nodes are candidates to run the Pod. These are called feasible nodes. In this step Kubernetes checks which nodes have enough available resources to meet the Pod's requirements. Resources include CPU and memory requests, but also storage mounts and networking.

In this step we are also checking if the Pod is requesting to run on a specific set of nodes. This can be done with Taints and Tolerations, Affinity, and NodeSelectors.
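To make the resource part of the filtering step a bit more concrete, here is a minimal sketch of a Pod that declares resource requests. The name `nginx-requests` and the request sizes are made-up values for illustration and are not part of the lab used in this post. A node only remains feasible if it still has at least this much unreserved CPU and memory.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-requests      # hypothetical name, not used elsewhere in this post
spec:
  containers:
  - name: nginx
    image: nginx
    resources:
      requests:
        cpu: "250m"         # a quarter of a CPU core (illustrative value)
        memory: "128Mi"     # illustrative value
```

If no node can satisfy the requests, the Pod stays in Pending until one can.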

Scoring

Once the kube-scheduler has filtered the nodes down to the list of feasible ones, it scores each of them and ranks the list. The Pod is then assigned to the node with the highest score.

Custom schedulers

Kubernetes is extensible by nature and allows for custom schedulers, so you can write your own scheduler to fit your exact needs if the default one falls short.
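A Pod picks its scheduler through the `schedulerName` field in the podSpec. The sketch below assumes a second scheduler has already been deployed under the name `my-custom-scheduler`; that name and the Pod name are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-custom-sched          # hypothetical name
spec:
  # Must match the name the custom scheduler registers with.
  # When the field is omitted, the default scheduler is used.
  schedulerName: my-custom-scheduler
  containers:
  - name: nginx
    image: nginx
```

If no scheduler with that name is running, nothing will pick the Pod up and it stays in Pending.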

Taints and Tolerations

Kubernetes Documentation reference

Taints are a way for a Node to repel Pods. You can add a taint to a node which instructs the scheduler not to run specific pods (or any pods at all) on that node.

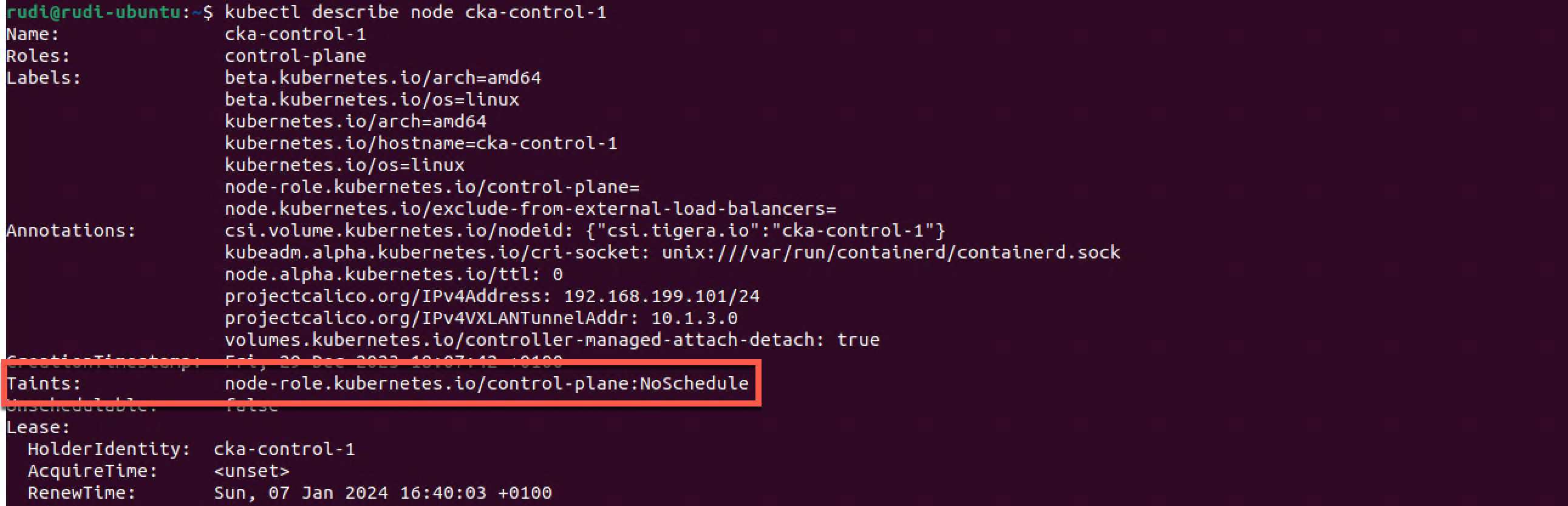

By default a Kubernetes cluster already has taints set on some nodes. The control plane nodes have a NoSchedule taint by default to prevent normal pods from being scheduled on them.

    kubectl describe node <CONTROL-PLANE-NODE>

This control plane node has the taint node-role.kubernetes.io/control-plane:NoSchedule (control-plane:NoSchedule for short). Because of this, the scheduler will not schedule a Pod to it unless the Pod has a Toleration for it.
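For reference, this is roughly how that taint appears in the Node object itself, for instance in the output of `kubectl get node <CONTROL-PLANE-NODE> -o yaml`. It is an excerpt for reading, not a manifest to apply, and it assumes a kubeadm-style cluster where the taint key is `node-role.kubernetes.io/control-plane`.

```yaml
# Excerpt of a Node object (read-only illustration)
spec:
  taints:
  - key: node-role.kubernetes.io/control-plane   # taint key
    effect: NoSchedule                           # new pods without a matching toleration are not scheduled here
```

A taint always has a key and an effect; the value is optional, which is why this one has none.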

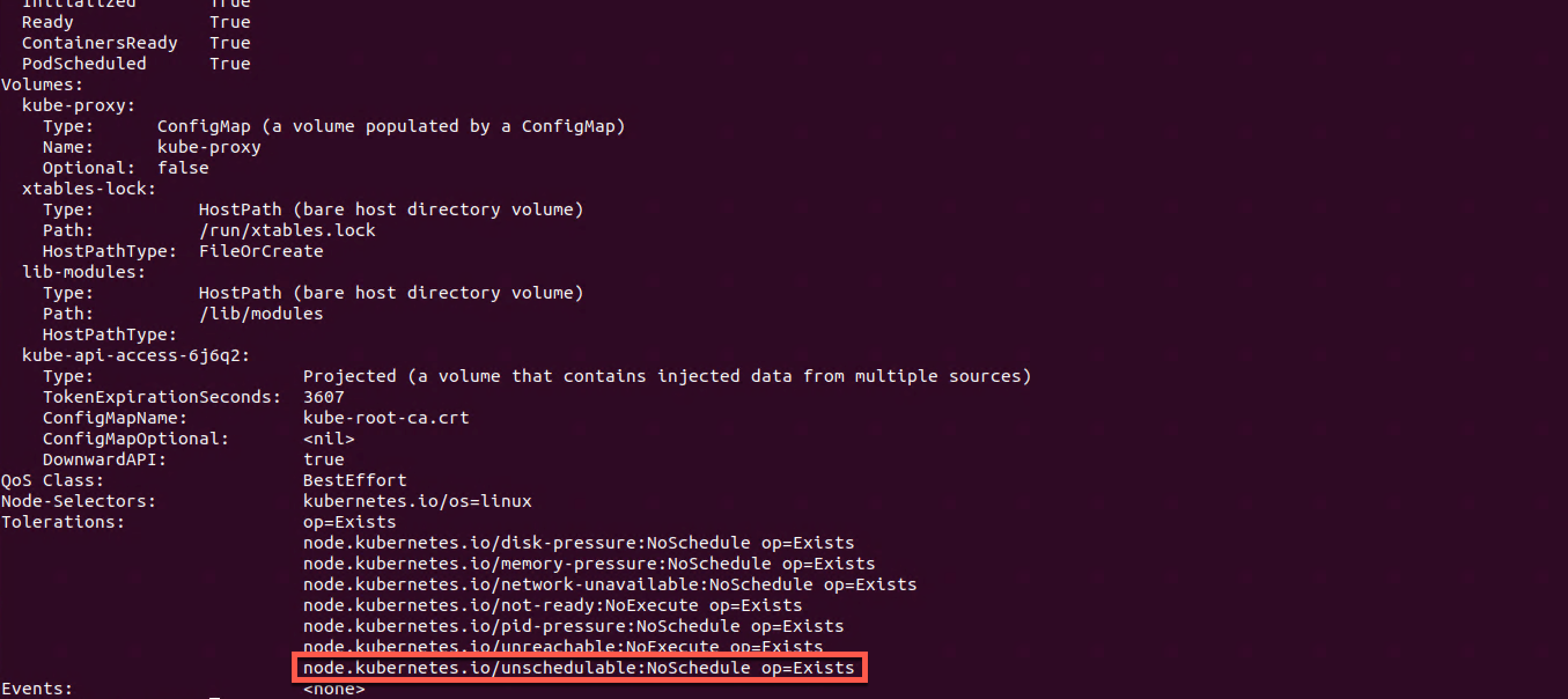

A Toleration is assigned to a Pod and allows the pod to be scheduled on a node with a corresponding taint. An example of this can be found in Daemon Set pods, which often run on all nodes.

In my post on Daemon Sets we used the kube-proxy pods, which run on all nodes. First let's identify a pod running on a control plane node

    kubectl -n kube-system get pod -o wide -l k8s-app=kube-proxy

Let's check the Tolerations on one of the pods

    kubectl -n kube-system describe pod <POD-NAME>

This toleration will match the control-plane:NoSchedule taint on the control plane nodes and thereby let the kube-scheduler schedule the pod on the node.
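As a sketch of what such a toleration can look like in a podSpec, the example below tolerates the control plane taint using the `Exists` operator, which matches on the key alone and needs no value. The Pod name is hypothetical, and the exact tolerations on your kube-proxy pods may differ from this.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-on-control-plane     # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  # Tolerates only the control plane NoSchedule taint
  - key: node-role.kubernetes.io/control-plane
    operator: Exists
    effect: NoSchedule
  # A toleration with no key and operator Exists would tolerate every taint:
  # - operator: Exists
```

The toleration only permits scheduling on the control plane nodes; the scheduler may still pick a worker.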

Let's do a couple of our own examples

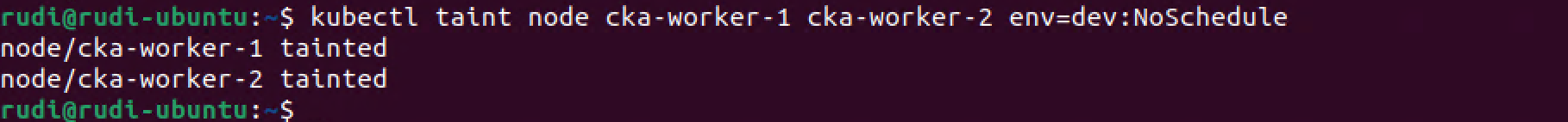

First we'll taint our worker nodes with some taints. We'll do the classic Prod/Dev example

    kubectl taint node <PROD-NODE> env=dev:NoSchedule
    kubectl taint node <PROD-NODE> env=dev:NoSchedule

The intention with these taints is that the cka-worker-1 and -2 nodes should not run dev pods, only production pods. Production pods should be able to run on all three workers.

Let's create a pod with an env: dev label and see where the kube-scheduler puts it

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-dev-1
      labels:
        env: dev
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent

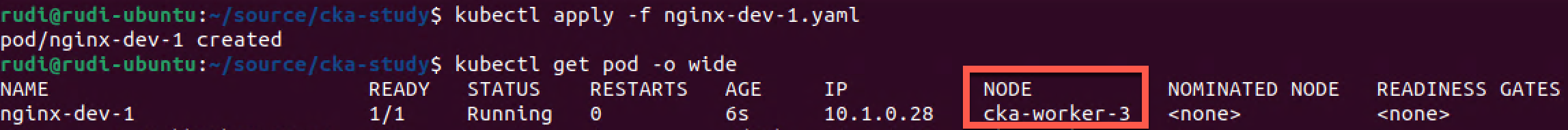

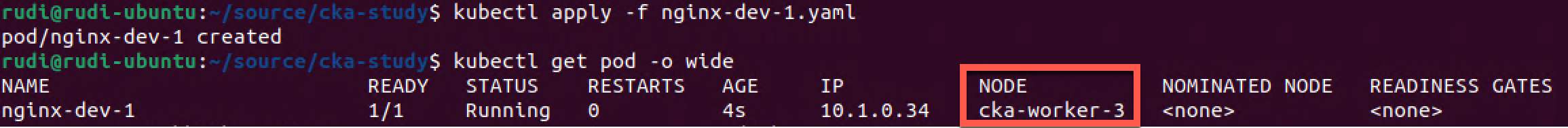

Note the env: dev label. We'll create this pod with the kubectl apply -f <file-name> command, and then list our pods to see which node it got scheduled on.

    kubectl apply -f <file-name-1>

    kubectl get pods -o wide

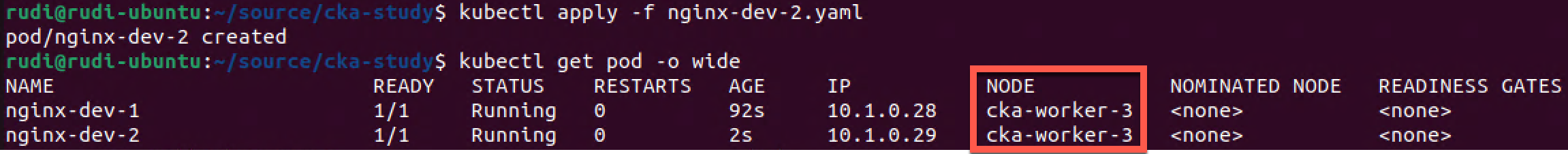

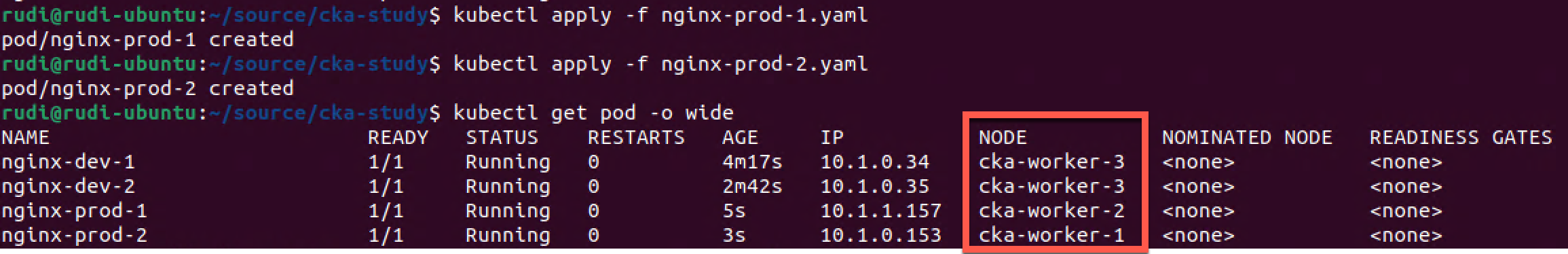

As expected it was scheduled to cka-worker-3, which was not tainted earlier. Let's create a second pod with the same spec and label, just with a different name, to see where that gets scheduled

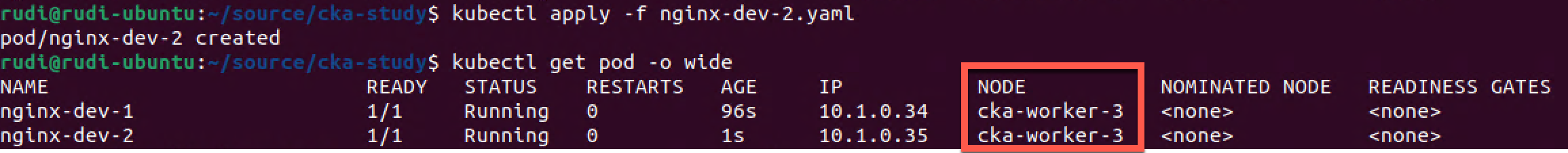

    kubectl apply -f <file-name-2>

    kubectl get pods -o wide

Again the pod was scheduled on worker-3.

Now let's create a pod with the env: prod label and see where that gets scheduled

Not working example

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-prod-1
      labels:
        env: prod
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent

    kubectl apply -f <file-name-3>

    kubectl get pods -o wide

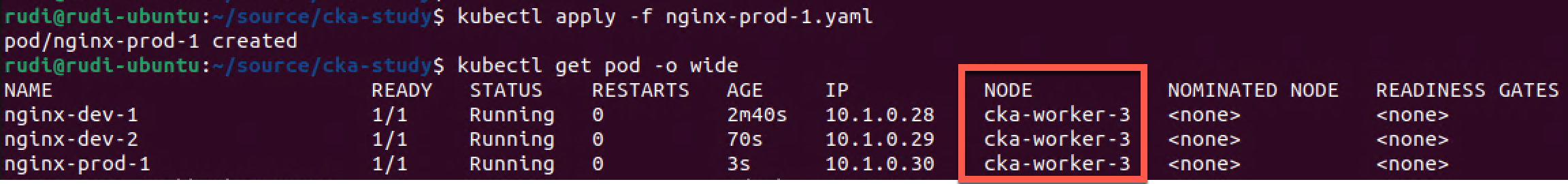

But wait! Why wasn't that scheduled on one of the prod tainted nodes?

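Well, as mentioned previously, a pod needs to have a Toleration matching the taint to be scheduled on a tainted node. The env label on the pod plays no part in this, since taints are only compared against tolerations. Let's change the config for our prod pod and add that toleration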

Working example

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-prod-1
      labels:
        env: prod
    spec:
      containers:
      - name: nginx-prod-1
        image: nginx
        imagePullPolicy: IfNotPresent
      tolerations:
      # key, value and effect must match the taint set on the nodes
      - key: "env"
        operator: "Equal"
        value: "dev"
        effect: "NoSchedule"

And let's delete and recreate the prod pod and see if it will be scheduled on a "prod node" now

    kubectl delete pod <prod-pod-name>

    kubectl apply -f <file-name-3>

    kubectl get pods -o wide

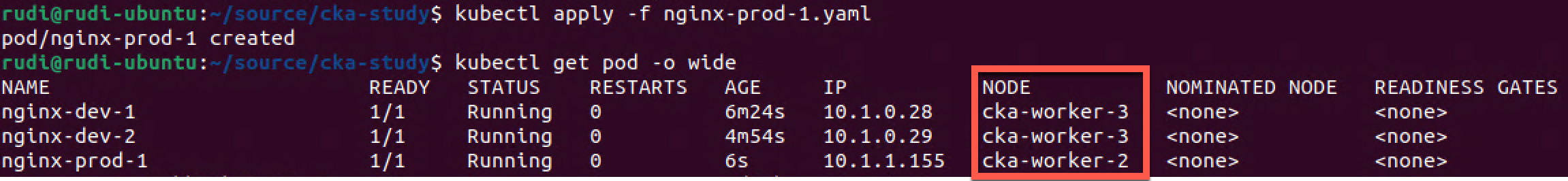

Now we finally got the prod pod scheduled to one of the prod nodes.
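As mentioned in the overview, the same thing can be done for Deployments, Daemon Sets and Replica Sets by putting the toleration in the pod template. Below is a minimal sketch of a Deployment doing that. The Deployment name, the `app` label and the replica count are made up for illustration; the toleration is the same one used for the pod above.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-prod                 # hypothetical name
spec:
  replicas: 2                      # illustrative value
  selector:
    matchLabels:
      app: nginx-prod              # hypothetical label
  template:
    metadata:
      labels:
        app: nginx-prod
    spec:
      containers:
      - name: nginx
        image: nginx
      # Tolerations live in the pod template, exactly as in a plain Pod
      tolerations:
      - key: "env"
        operator: "Equal"
        value: "dev"
        effect: "NoSchedule"
```

Every replica created from the template carries the toleration, so all of them are allowed on the tainted nodes.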

It actually took me a little while to get a grip on this thing and that's why I wanted to document why it didn't work as expected the first time.

There are obviously some use cases for Taints and Tolerations, with the control plane nodes as one example. More can be found here. To me it's a bit confusing, and I see it as a way to ensure that only a specific set of Pods gets scheduled on a set of nodes. Note that a Toleration only allows a Pod onto a tainted node. It doesn't force the Pod there, so it can still end up on an untainted node.

Taints are also used to keep pods away from nodes with certain conditions, e.g. memory pressure. Again this can be overridden by Tolerations. reference
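As a sketch of overriding such a condition taint, the Pod below tolerates the `node.kubernetes.io/memory-pressure` taint that the control plane places on nodes reporting memory pressure. The Pod name is hypothetical, and whether you would actually want this depends on the workload.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-tolerates-pressure   # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  # Allow scheduling onto nodes that currently report memory pressure
  - key: node.kubernetes.io/memory-pressure
    operator: Exists
    effect: NoSchedule
```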

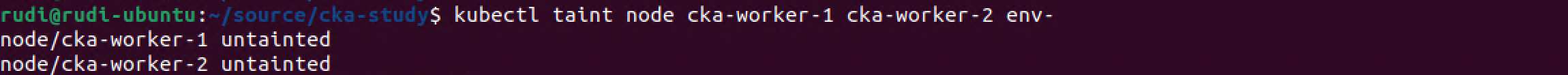

Another way of controlling scheduling is to use node affinity, which we'll take a look at next. But first we'll untaint our nodes

    kubectl taint node <NODE> env-

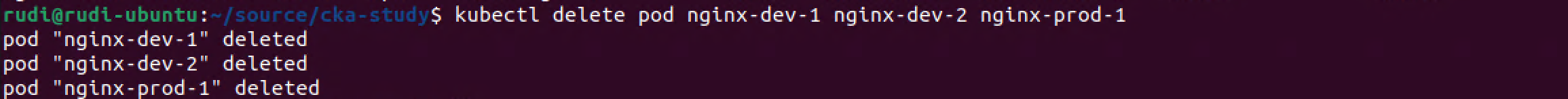

We'll also delete our pods

    kubectl delete pod <POD-NAMES>

Node affinity

So, let's see how we can explicitly say which node we want to schedule the pods on. To do this we'll use either the nodeSelector field or an Affinity rule.

With Taints and Tolerations we tell the scheduler which nodes should NOT have pods assigned UNLESS the pods have a specific Toleration. Here we instead specify which Nodes a Pod MUST or SHOULD run on.

First let's see how to use the nodeSelector

NodeSelector

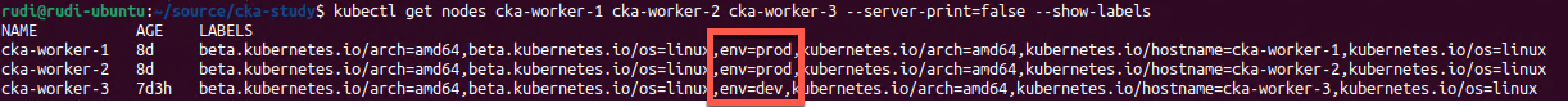

nodeSelector is a field in the podSpec. It works by putting a label on a node and referencing that label in the nodeSelector. Again let's use our prod/dev example from before and label a couple of nodes with a prod label

    kubectl label nodes <NODE-NAME> <KEY=VALUE>

    kubectl get nodes --show-labels

Now let's add the nodeSelector to the Pod configuration

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-dev-1
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      nodeSelector:
        env: dev

And let's create the Pod

    kubectl apply -f <file-name-1>

    kubectl get pod -o wide

As expected the pod is scheduled on the dev node.

Let's schedule a second dev pod.

    kubectl apply -f <file-name-2>

    kubectl get pod -o wide

So we've verified that our dev pods have been scheduled on the one node that is labeled with env=dev.

Now let's create a couple of prod pods to see where they'll get scheduled

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-prod-1
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      nodeSelector:
        env: prod

    kubectl apply -f <file-name-3>
    kubectl apply -f <file-name-4>

    kubectl get pod -o wide

Again we can verify that the pods have been scheduled on a prod node. In this case we have two nodes matching the label, so it's up to the kube-scheduler to select the best match based on the filtering and scoring we discussed earlier.

The nodeSelector scheduling is easier, and to me, more understandable, however it might not fit more complex use cases. For this you might use Node Affinity rules.

It's also not as strict as the Taints and Tolerations example: a nodeSelector only steers the pods that specify it and does nothing to keep other pods off the node, so you could end up with a pod on the wrong node if the labels are not specified correctly.
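One way to get both behaviours is to combine the two mechanisms: a taint keeps unrelated pods away from the node, and a nodeSelector makes sure the tolerating pod actually lands there. The sketch below assumes the prod nodes carry the label `env=prod` and a taint `dedicated=prod:NoSchedule`. That taint and the Pod name are hypothetical and not part of the lab in this post.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-prod-dedicated       # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
  # Attract: only nodes labeled env=prod are considered
  nodeSelector:
    env: prod
  # Permit: tolerate the (hypothetical) taint that repels everything else
  tolerations:
  - key: "dedicated"
    operator: "Equal"
    value: "prod"
    effect: "NoSchedule"
```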

Affinity/anti-affinity

Kubernetes Documentation reference

Node Affinity is similar to nodeSelector and also uses node labels. However, it is more expressive in how the correct node(s) can be selected.

Affinity (and anti-affinity) rules can use more matching rules. We can also incorporate "soft" rules where we specify that a pod should (preferred) run on a node instead of must (required) run on it. And there's also the possibility to have an affinity rule concerning pods instead of nodes, e.g. Pod A should not run on the same node as Pod B. For a VI admin this is quite similar to DRS rules with both VM to Host rules and VM to VM rules.
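To illustrate the "soft" variant, here is a minimal sketch of a Pod that prefers nodes labeled `env=dev` but may still be scheduled elsewhere if none is available. The Pod name and the weight are made-up values. The weight (1 to 100) is added to a node's score when the preference matches.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-dev-preferred        # hypothetical name
spec:
  affinity:
    nodeAffinity:
      # Soft rule: influences scoring, never blocks scheduling
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 50                 # illustrative value
        preference:
          matchExpressions:
          - key: "env"
            operator: In
            values:
            - dev
  containers:
  - name: nginx
    image: nginx
```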

So let's see it in action.

We'll add an affinity portion to the podSpec where we specify that the nodeAffinity must match a nodeSelector label. Notice I've used the "In" operator indicating that we can have multiple values.

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-dev-3
      labels:
        env: dev
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: "env"
                operator: In
                values:
                - dev
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent

    kubectl apply -f <file-name-2>

    kubectl get pods -o wide

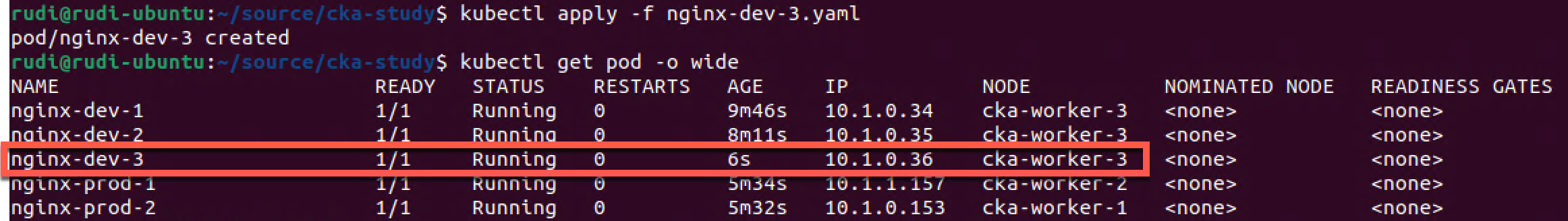

Our third dev pod gets scheduled on the dev node although it's missing the nodeSelector spec. Instead we're relying on nodeAffinity.
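As a sketch of the extra expressiveness, the rule below lists more than one value for the `In` operator and adds a second expression using `Exists`. The `test` value, the `disktype` label and the Pod name are hypothetical. Expressions within one `matchExpressions` list must all match, while multiple entries under `nodeSelectorTerms` are alternatives.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-multi-match          # hypothetical name
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          # env must be one of the listed values ...
          - key: "env"
            operator: In
            values:
            - dev
            - test                 # hypothetical value
          # ... and the node must also carry a disktype label (any value)
          - key: "disktype"        # hypothetical label
            operator: Exists
  containers:
  - name: nginx
    image: nginx
```

Other operators available for node affinity are NotIn, DoesNotExist, Gt and Lt.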

Inter pod-affinity/anti-affinity

Kubernetes Documentation reference

So let's try the anti-affinity option where we instruct that a pod needs to stay away from another pod.

We'll add a new dev pod which needs to stay away from other pods with the same env=dev label. This is done by adding a podAntiAffinity in the podSpec. We also need to add a topologyKey, which is a node label that defines the domain the rule applies within. I'm using the hostname label, meaning "not on the same node", but you could use others or create your own.

Similarly we could add a podAffinity if we wanted the pods to be together.

    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-dev-4
      labels:
        env: dev
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: "env"
                operator: In
                values:
                - dev
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: env
                operator: In
                values:
                - dev
            topologyKey: "kubernetes.io/hostname"

Let's create this pod and see what happens

    kubectl apply -f <file-name-3>

    kubectl get pods -o wide

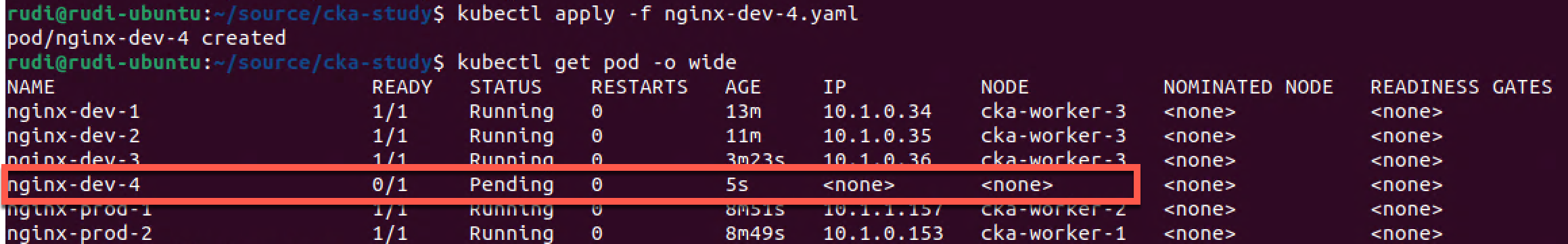

The pod can't be scheduled because there's no node available that matches both the nodeAffinity AND the podAntiAffinity, hence it's stuck in Pending status.

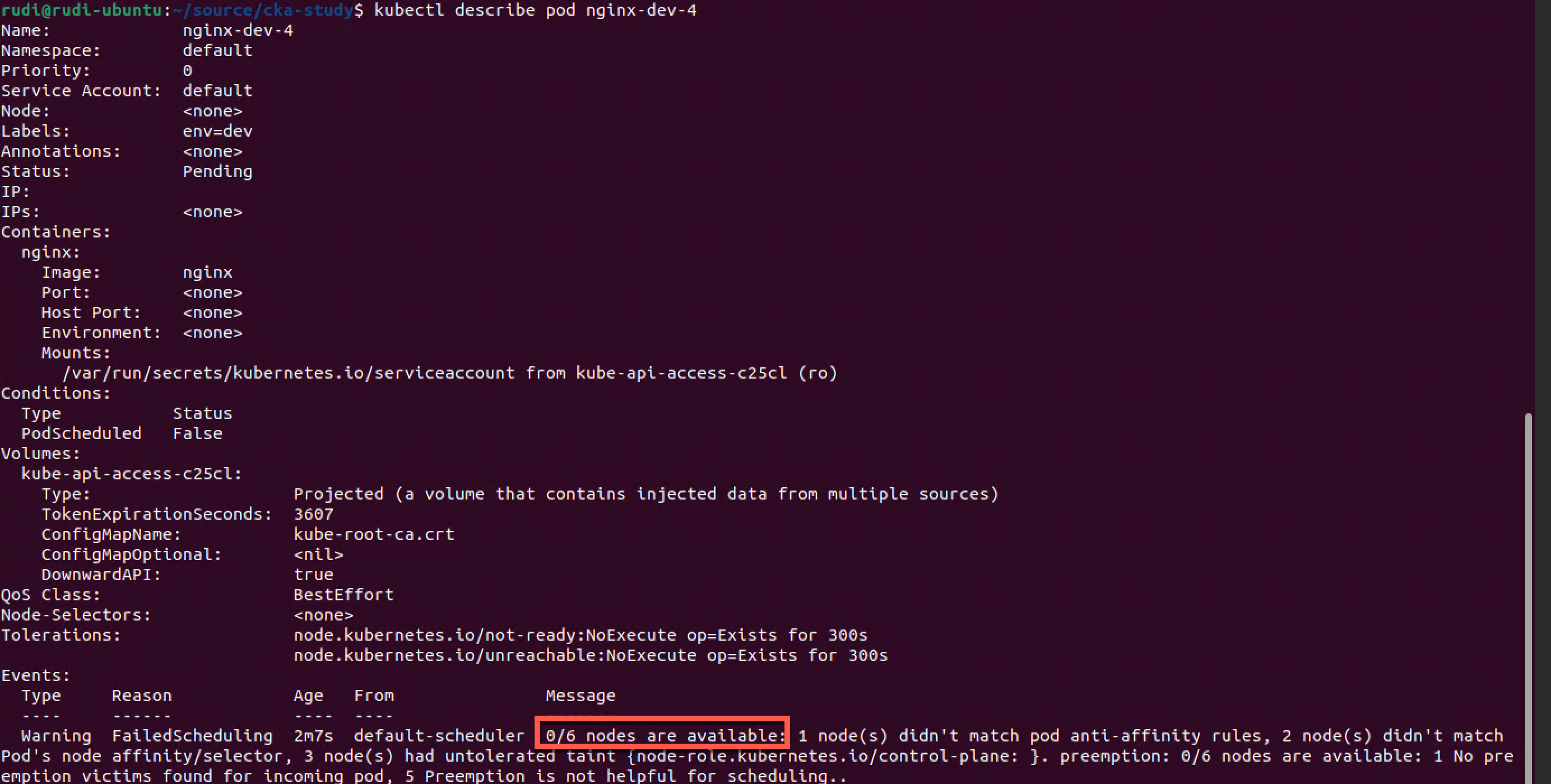

This can be verified by checking the status of the pod with the kubectl describe pod command

    kubectl describe pod <POD-NAME>

As we can see, none of our six nodes is available:

- 1 node didn't match pod anti-affinity rules, e.g. cka-worker-3

- 2 nodes didn't match node affinity/selector, e.g. cka-worker-1 and -2

- 3 nodes had untolerated taint (control-plane), e.g. cka-control-1 through -3

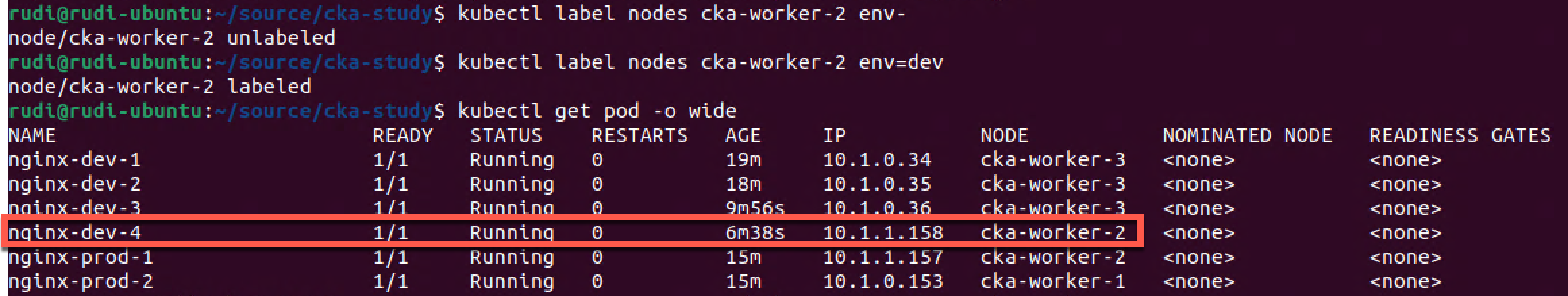
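Had the anti-affinity been a soft rule, the pod would not have been left in Pending. The sketch below is a variant of the pod above using the preferred form. The Pod name and the weight are made up. The scheduler tries to avoid nodes already running env=dev pods, but will still use one if nothing else passes the required nodeAffinity.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-dev-soft-anti        # hypothetical name
  labels:
    env: dev
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: "env"
            operator: In
            values:
            - dev
    podAntiAffinity:
      # Soft rule: lowers the score of nodes with matching pods
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100                # illustrative value
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: env
              operator: In
              values:
              - dev
          topologyKey: "kubernetes.io/hostname"
```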

Now, let's try to re-label one of the prod nodes to be a dev node.

    kubectl label node <PROD-NODE-NAME> env-
    kubectl label node <PROD-NODE-NAME> env=dev # Could've used the --overwrite flag to do this in one operation

    kubectl get pod -o wide

Voila! Our pod has now been scheduled on the newly relabeled node. We can also see that the prod pods previously scheduled on this node keep running even though the node's label has changed. This is because nodeSelector and the IgnoredDuringExecution rules are only evaluated at scheduling time.

To get that prod pod to stop running on this node we would have to recreate it, or drain the node (we'll discuss this in an upcoming post).

Check out a few other use-cases around scheduling from the Kubernetes documentation

A little warning as it comes to using podAffinity rules: This requires a lot of processing in the kube-scheduler which can slow down scheduling overall in large clusters. reference
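For completeness, this is a minimal sketch of the opposite rule, a podAffinity, which asks for the pod to be placed on a node that already runs a pod labeled `env=dev`. The Pod name is hypothetical; the label and topologyKey are the same ones used in the anti-affinity example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-dev-together         # hypothetical name
  labels:
    env: dev
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    podAffinity:
      # Hard rule: only nodes already running a matching pod are feasible
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: env
            operator: In
            values:
            - dev
        topologyKey: "kubernetes.io/hostname"
```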

Summary

A quick summary:

Taints are a way for a node to keep pod(s) away, and can be overridden with Tolerations on a Pod.

Labels can be used in a nodeSelector expression in the podSpec to tell the scheduler where a pod wants to run.

Affinity and anti-affinity can be set up so that a pod gets scheduled together with, or away from, a specific set of other pods. Affinity rules can be written with a hard (required) or soft (preferred) requirement.

Kubernetes allows for custom schedulers.

There's obviously a lot more to scheduling, nodeAffinity and podAffinity than what's covered here. Check out the documentation for more information and examples.