CKA Notes - Upgrade a Kubernetes Cluster - 2024 edition

Overview

Continuing with my Certified Kubernetes Administrator exam preparations we're now going to take a look at upgrading a Kubernetes cluster.

This is an updated version of a similar post I created around three years ago.

I'm not taking any precautions for the workloads running in the cluster, and I'm not even backing up the cluster state, which is something you'd want to do in a "real" environment.

Earlier I've written about how the cluster has been [set up on virtual machines](/2023/12/29/kubernetes-cluster-on-vms-2024) and how it was scaled out with more nodes.

State of the union

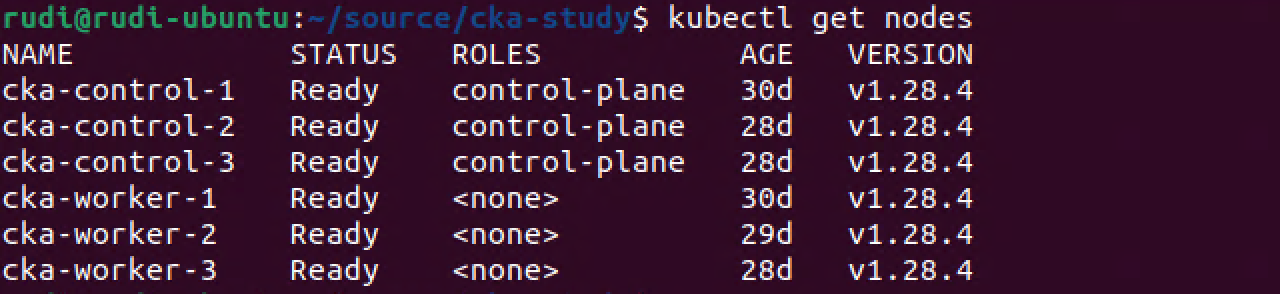

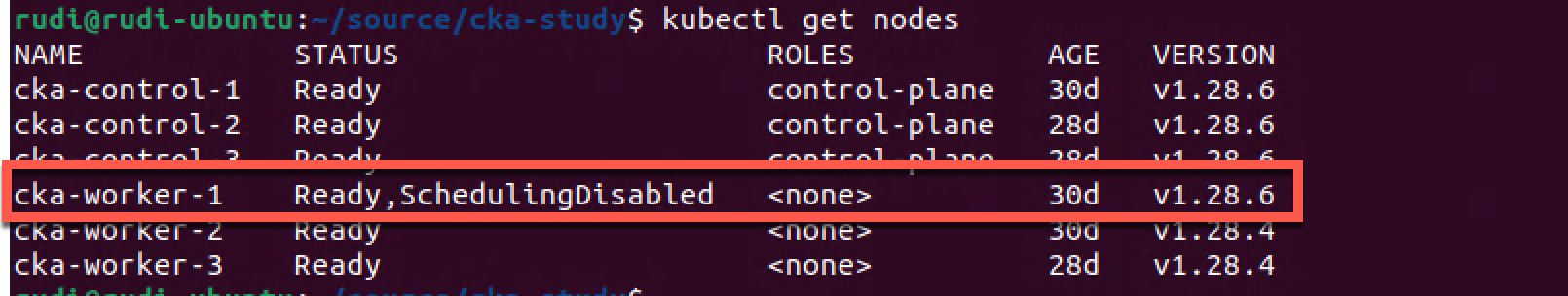

If you've followed the CKA series you're probably aware of how the cluster is set up, but we'll quickly refresh so that we're on the same page.

Our cluster consists of six nodes, three control plane nodes and three worker nodes. In addition to this we have one machine running HAProxy in front of the cluster to proxy incoming requests to the API server.

All nodes are running as virtual machines in a VMware vSphere environment, and all VMs are running Ubuntu Linux 22.04.

More details about the virtual environment can be found here

The cluster was installed and configured with kubeadm which is also what we'll use for upgrading it.

The cluster is currently running on version 1.28.4. At the time of writing 1.28 is the version used on the CKA exam.

kubectl get nodes

Upgrade steps

Kubernetes documentation reference

The supported way of upgrading a cluster is to not skip any minor versions, i.e. upgrade from 1.27 -> 1.28 -> 1.29. Going directly from e.g. 1.27 to 1.29 is NOT supported.
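To make the rule concrete, here's a small sketch of a check that only accepts a target on the same minor version or exactly one minor version higher. The function name `can_upgrade` is my own and not part of any Kubernetes tooling, and it deliberately doesn't compare patch versions.

```shell
# Return 0 if upgrading from $1 to $2 stays within the supported path
# (same minor version, or exactly one minor version higher).
# Versions are expected as MAJOR.MINOR.PATCH, with an optional leading "v".
# Note: patch versions are not compared.
can_upgrade() {
    from=${1#v}
    to=${2#v}
    from_major=${from%%.*}
    to_major=${to%%.*}
    from_rest=${from#*.}
    to_rest=${to#*.}
    from_minor=${from_rest%%.*}
    to_minor=${to_rest%%.*}
    [ "$from_major" -eq "$to_major" ] || return 1
    diff=$((to_minor - from_minor))
    [ "$diff" -ge 0 ] && [ "$diff" -le 1 ]
}

can_upgrade 1.28.4 1.28.6 && echo "1.28.4 -> 1.28.6: ok"
can_upgrade 1.27.9 1.29.0 || echo "1.27.9 -> 1.29.0: skips a minor version"
```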

For this blog post we'll stick with the same minor version and just move to a newer 1.28.x patch version, even though version 1.29 has been available for some time. An upgrade to 1.29 might be covered in an upcoming post, but for now I'm preparing for the CKA so we'll stay on the exam version.

In short, the process of upgrading is as follows:

- Upgrade a primary control plane node

- Upgrade additional control plane nodes

- Upgrade worker nodes

The designation "primary" control plane node is nothing more than us choosing one of the control plane nodes to upgrade first. The important thing is to let this upgrade finish, and to verify that it works, before continuing with the remaining nodes.

Note, as mentioned earlier this blog post is not taking into account all steps needed for upgrading a production cluster. In a production environment you'd want to make sure you have a good backup of your cluster and nodes, a verified roll-back plan and so forth.

Before upgrading you should read the release notes for the version you are upgrading to. There might be changes that require you to take additional steps and preparations before upgrading.
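I'm skipping the backup in this post, but for reference an etcd snapshot on a kubeadm control plane node could look roughly like the sketch below. It assumes `etcdctl` is installed on the node and that etcd uses the default kubeadm endpoint and certificate paths. The function name `etcd_snapshot` and the target path are placeholders of mine.

```shell
# Save an etcd snapshot to the given file.
# The endpoint and certificate paths are the kubeadm defaults on a
# control plane node (an assumption); adjust them if your cluster differs.
etcd_snapshot() {
    sudo etcdctl snapshot save "$1" \
        --endpoints=https://127.0.0.1:2379 \
        --cacert=/etc/kubernetes/pki/etcd/ca.crt \
        --cert=/etc/kubernetes/pki/etcd/server.crt \
        --key=/etc/kubernetes/pki/etcd/server.key
}

# Example (the target path is just a placeholder):
#   etcd_snapshot /var/backups/etcd-snapshot.db
```

A snapshot alone is not a full backup plan, so treat this as a starting point only.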

Package repository

From September 2023 the legacy package repositories (apt.kubernetes.io and yum.kubernetes.io) have been deprecated. Please make sure that you're using the new pkgs.k8s.io repository. For more information check the official Kubernetes documentation.
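With pkgs.k8s.io each minor version has its own repository, so the apt source line tells us which minor version a node will get packages from. The sketch below pulls that version out of a source line. The helper name `repo_minor` is made up for this example, and the keyring and file paths are the ones suggested in the Kubernetes docs, so they may differ on your nodes.

```shell
# Print the Kubernetes minor version (e.g. "v1.28") that a pkgs.k8s.io
# apt source line points at. Reads source lines from stdin.
repo_minor() {
    sed -n 's#.*pkgs\.k8s\.io/core:/stable:/\(v[0-9]*\.[0-9]*\)/deb/.*#\1#p'
}

# Example line in the pkgs.k8s.io format (keyring path is an assumption):
echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.28/deb/ /' | repo_minor

# On a node, the same check against the real file (path assumed):
#   repo_minor < /etc/apt/sources.list.d/kubernetes.list
```

For a later minor version upgrade, this is the line that would have to point at the new minor version before apt can see the new packages.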

Upgrade first node

Kubernetes documentation reference

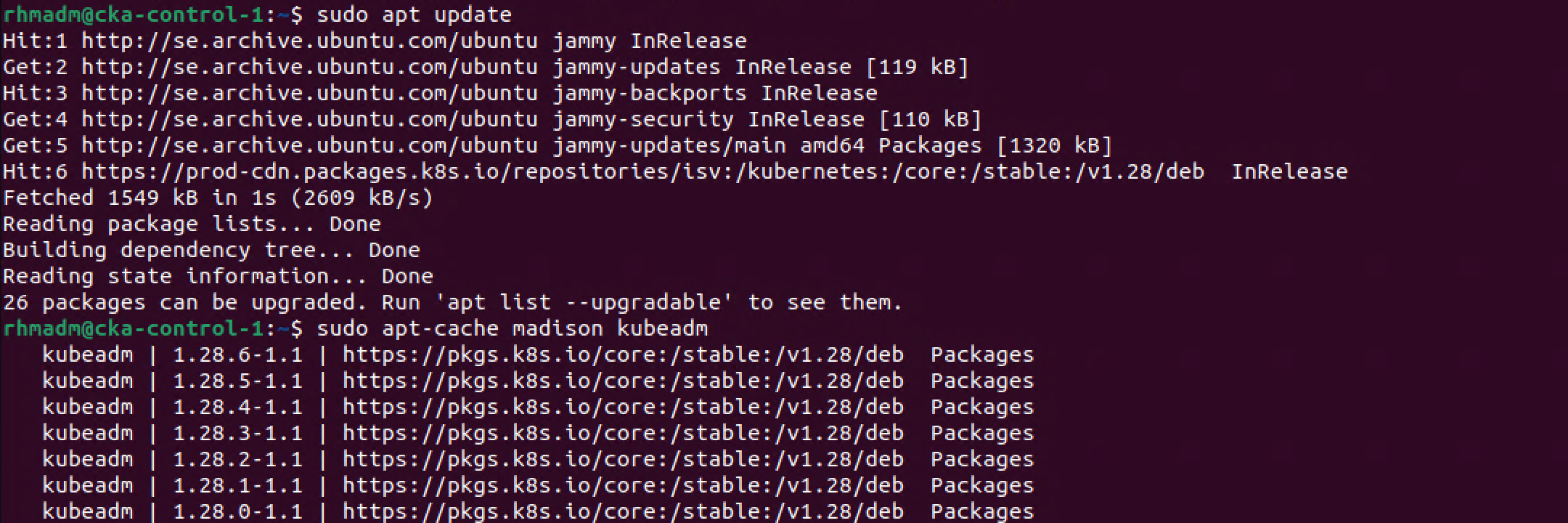

So to upgrade our first control plane node we'll check which versions we have available.

apt update

apt-cache madison kubeadm

Since we're using the new package repository we're pinned to the 1.28 versions.

Our current version is 1.28.4 and, as we can see, there are two newer patch versions available. We'll go with 1.28.6 going forward.

Upgrade kubeadm

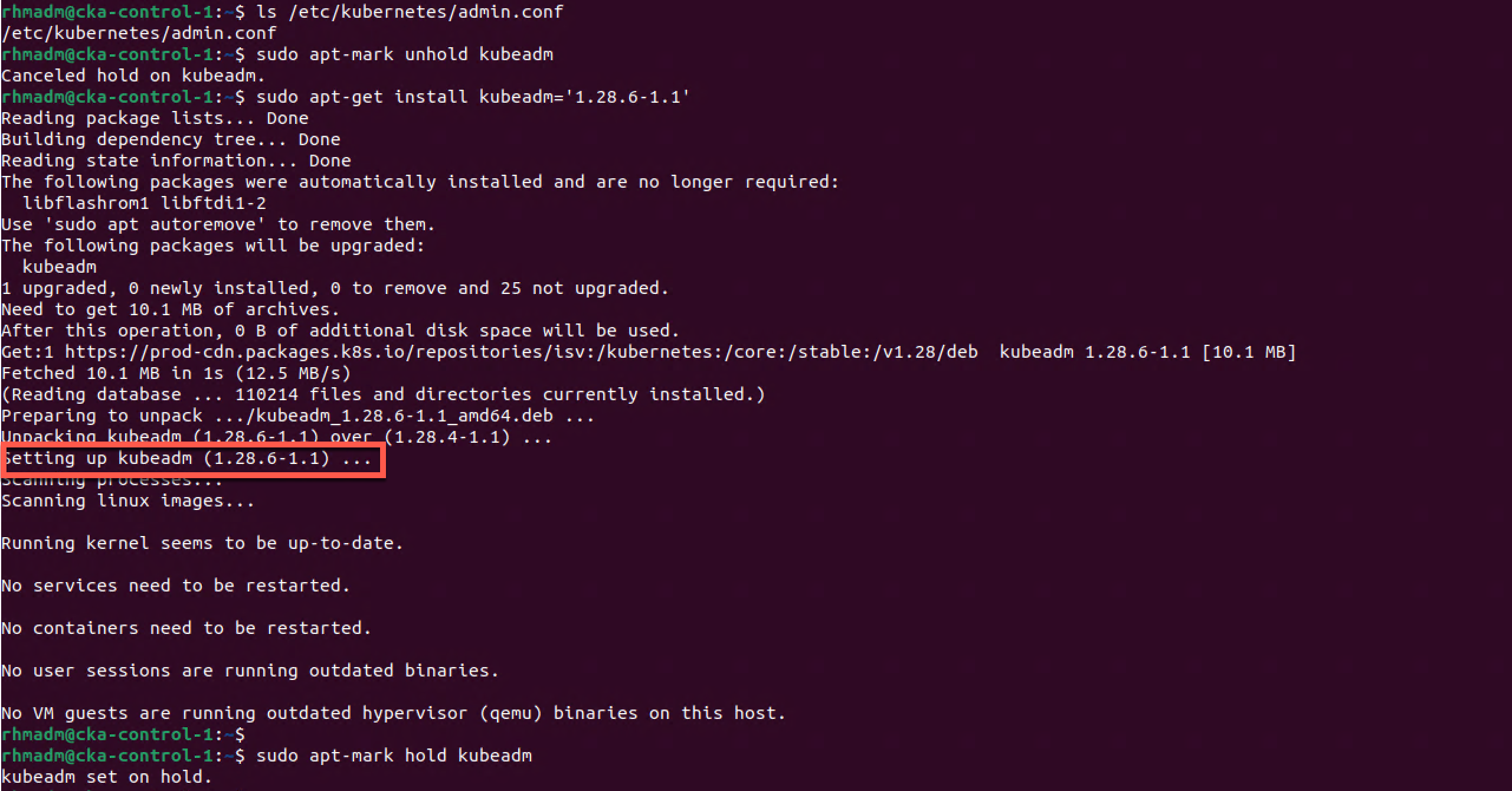

To kick this off we'll first clear the hold on kubeadm with apt-mark, then we'll upgrade kubeadm, and finally we'll put a new hold on kubeadm so that we won't accidentally upgrade it later on.

sudo apt-mark unhold kubeadm

sudo apt-get install kubeadm='1.28.6-1.1'

sudo apt-mark hold kubeadm
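Since we'll repeat the unhold, install, hold pattern for kubeadm, kubelet and kubectl on every node, it can be wrapped in a small helper. This is just a sketch. `upgrade_held` is a name I made up, and it does nothing more than run the three commands above in order.

```shell
# Upgrade one or more held packages to a specific version and hold them again.
# Usage: upgrade_held VERSION PACKAGE...
upgrade_held() {
    version=$1
    shift
    sudo apt-mark unhold "$@" || return 1
    pkgs=""
    for p in "$@"; do
        pkgs="$pkgs $p=$version"
    done
    # $pkgs is intentionally unquoted so it splits into separate arguments
    sudo apt-get install $pkgs
    status=$?
    # Re-apply the hold even if the install failed
    sudo apt-mark hold "$@"
    return $status
}

# Example:
#   upgrade_held 1.28.6-1.1 kubeadm
```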

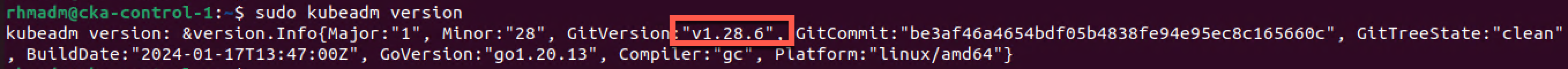

We can verify that kubeadm has been upgraded

sudo kubeadm version

Remember that kubeadm is just the tool for deploying and upgrading the cluster. At this point we haven't really upgraded anything in the cluster as such.

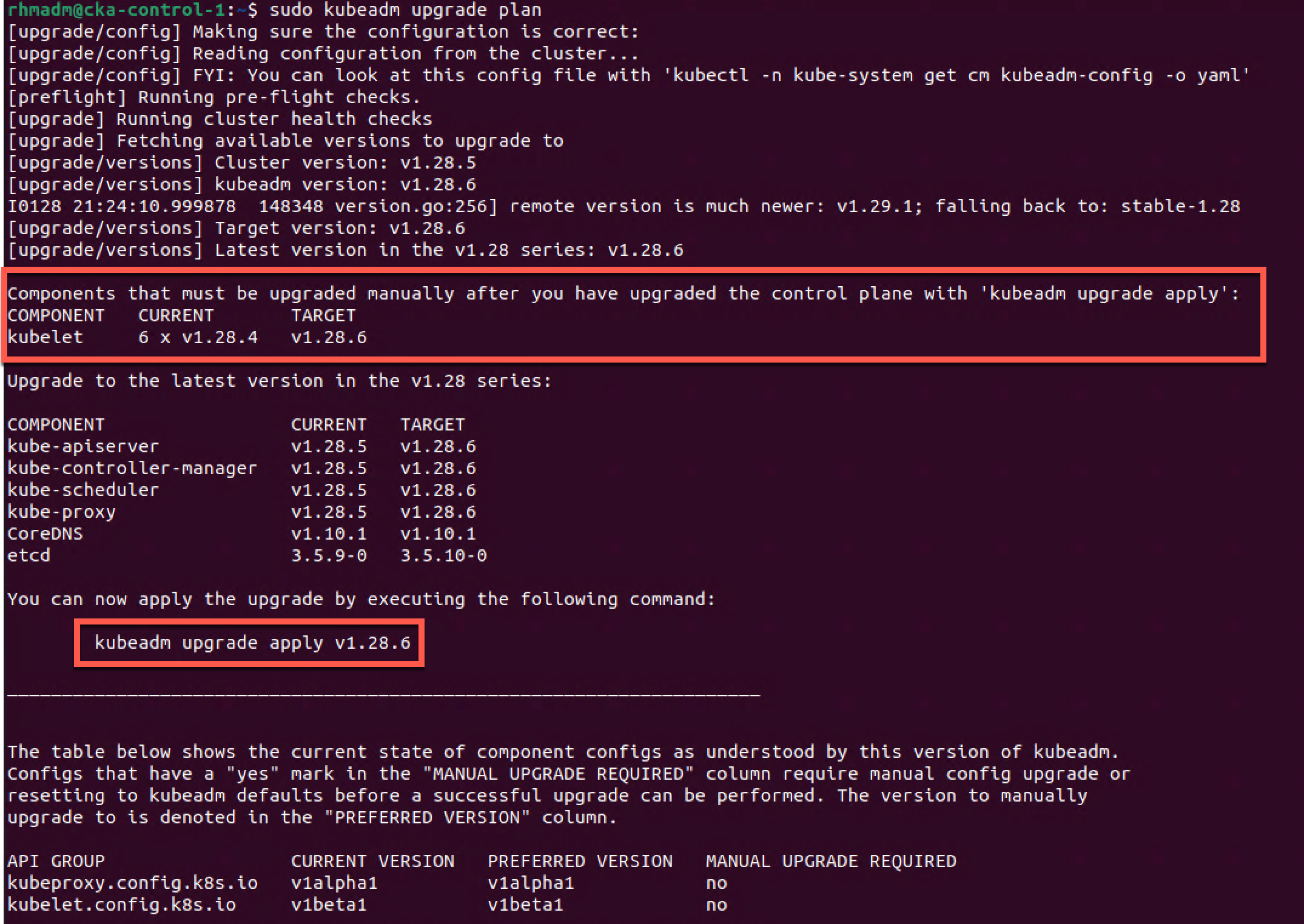

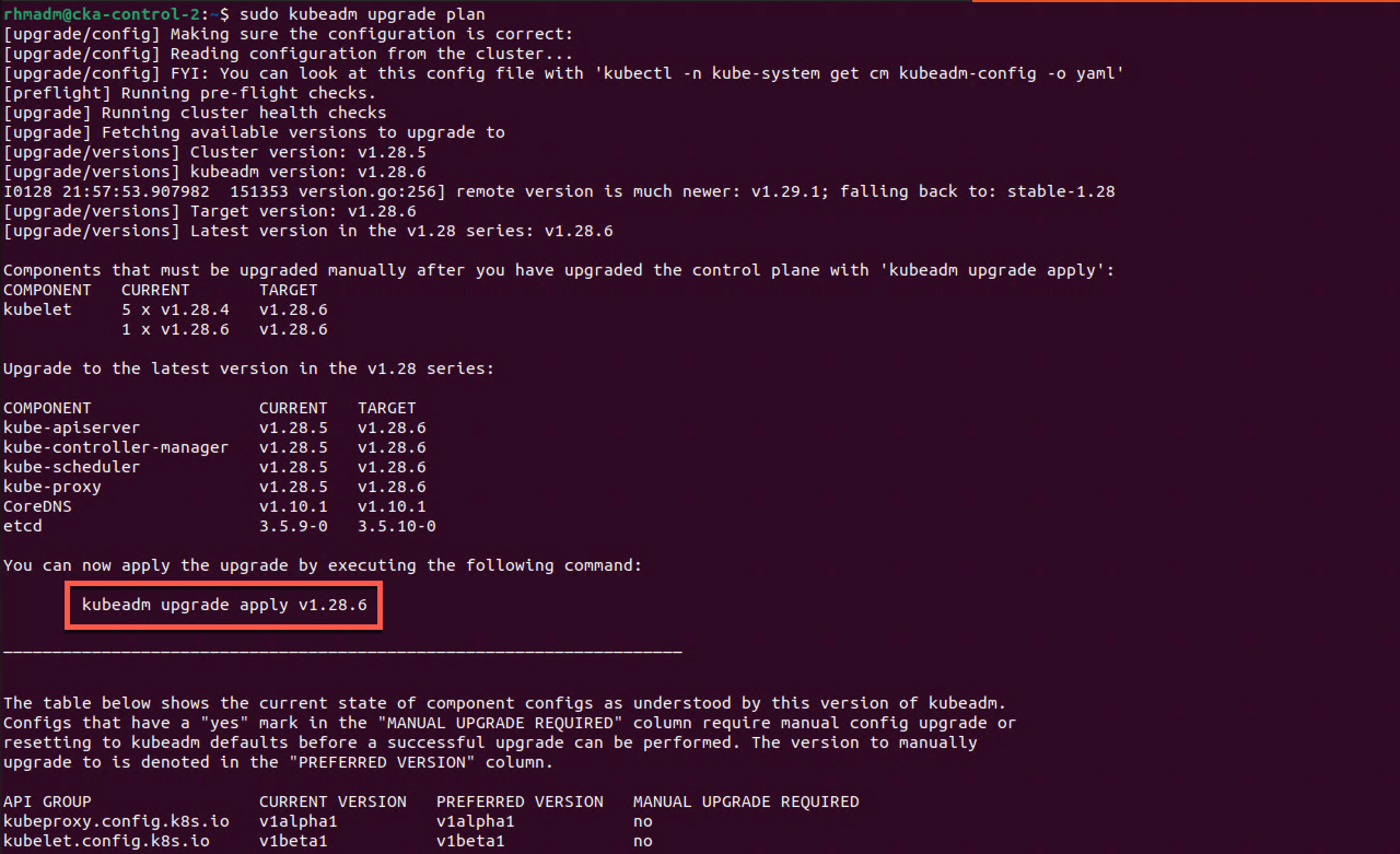

To proceed we'll issue the upgrade plan command with kubeadm. This will check if the cluster is ready to be upgraded and output a few details about the upcoming changes.

sudo kubeadm upgrade plan

As the output shows, kubeadm is ready to upgrade to 1.28.6, but it also instructs us to manually upgrade the kubelet.

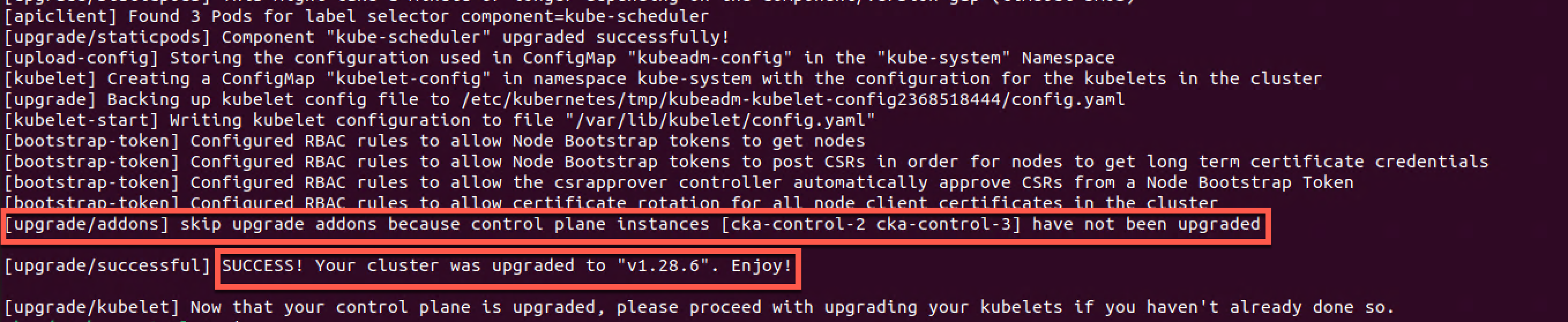

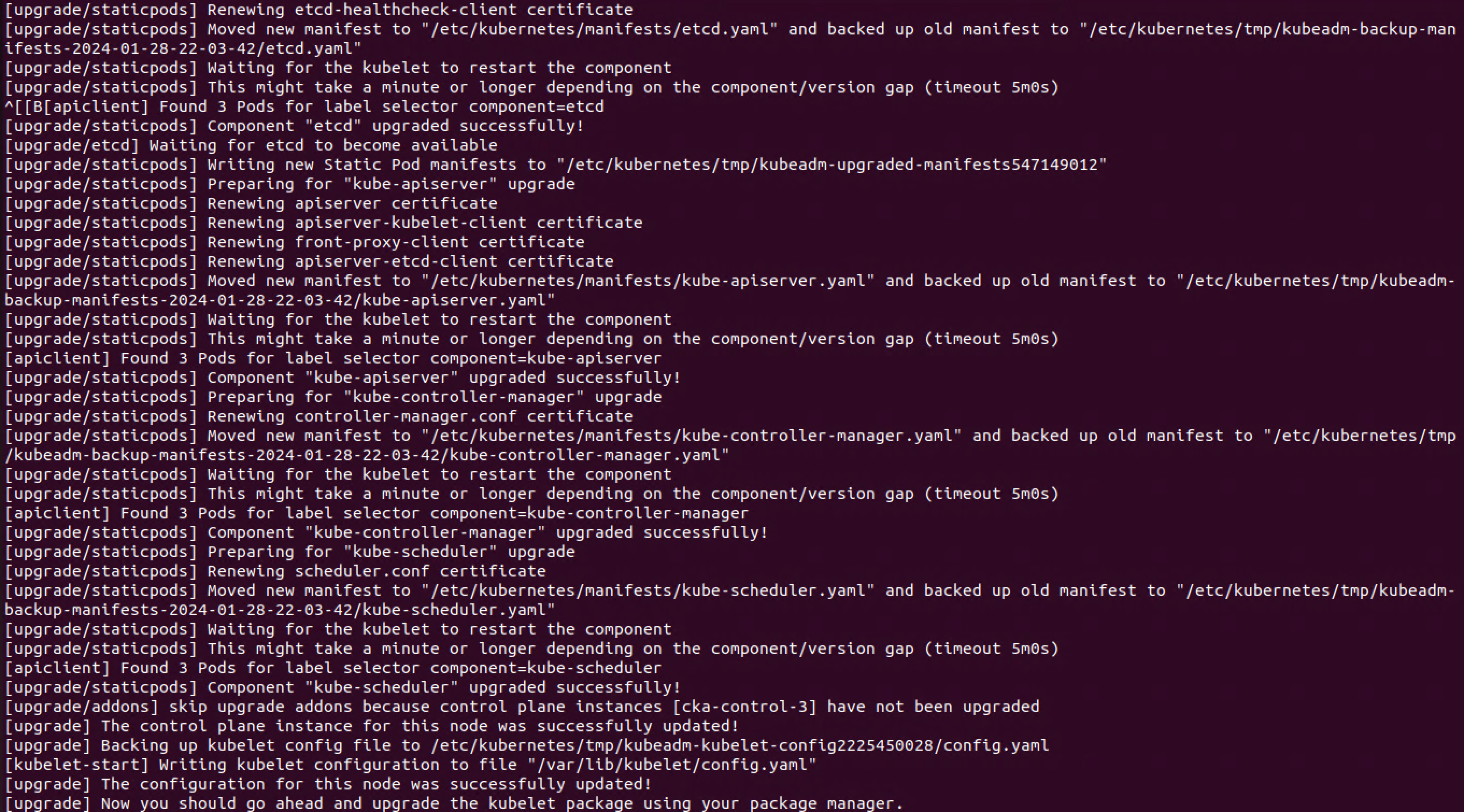

sudo kubeadm upgrade apply v1.28.6

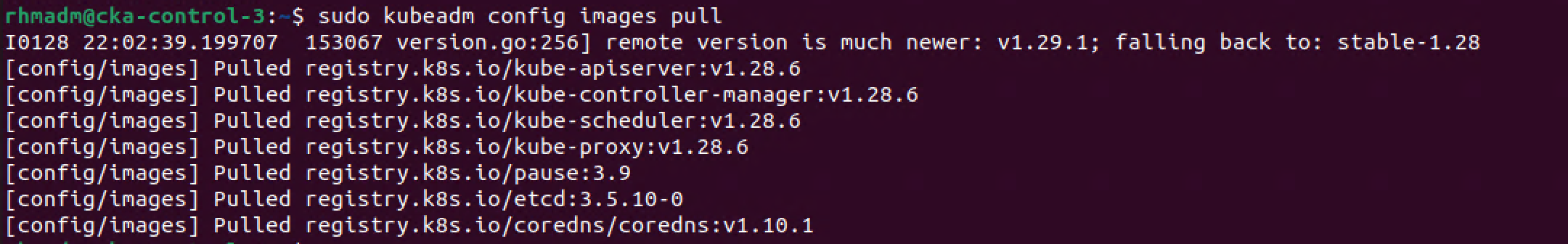

In my lab environment this command takes some time to finish. As the output suggests, the images used for the upgraded components can be downloaded prior to running the upgrade with the kubeadm config images pull command, which saves time during the upgrade itself.
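As a sketch, pre-pulling the images for a specific target version could look like this. `prepull_images` is a helper name of my own, and it assumes kubeadm on the node has already been upgraded to the target version.

```shell
# List the images kubeadm would use for the target version, then pull them.
# Assumes kubeadm has already been upgraded to the target version.
prepull_images() {
    target=$1
    sudo kubeadm config images list --kubernetes-version "$target" &&
        sudo kubeadm config images pull --kubernetes-version "$target"
}

# Example:
#   prepull_images v1.28.6
```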

As we can see the kubeadm upgrade finishes successfully, but it informs us that there are add-ons that won't be updated until the remaining control plane nodes are upgraded.

This changed in 1.28. Prior to this, add-ons were upgraded together with the first control plane node.

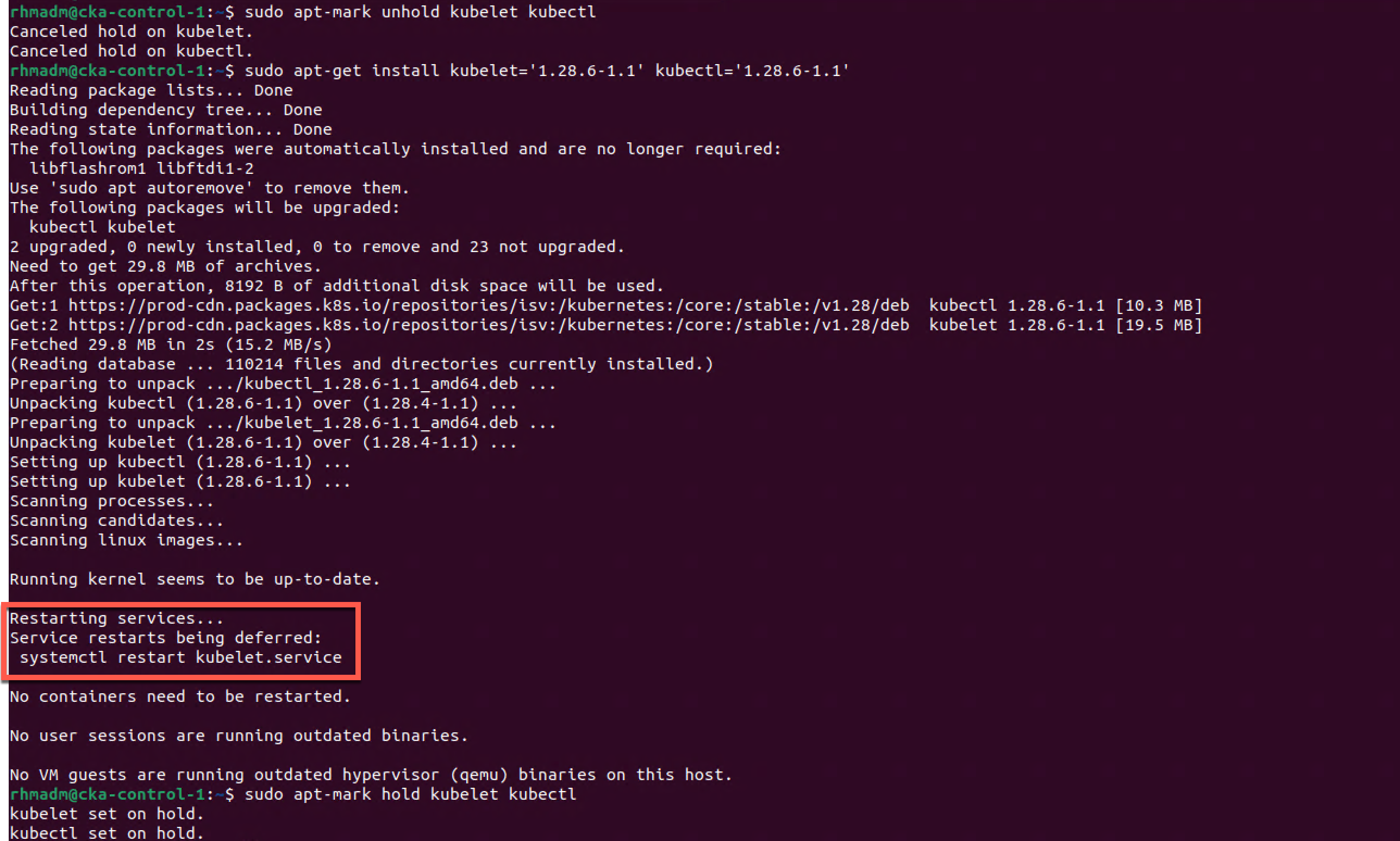

Upgrade kubelet and kubectl

The next step is to upgrade kubelet and kubectl on our first node.

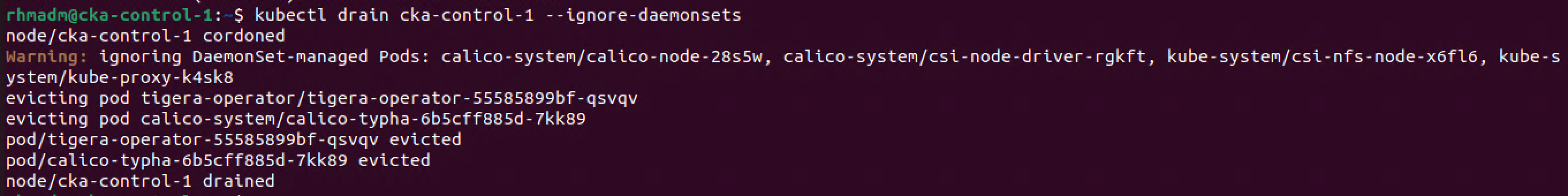

First we'll drain the node of running pods

kubectl drain cka-control-1 --ignore-daemonsets
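Draining also cordons the node, so no new pods get scheduled on it. A quick way to confirm that before touching the kubelet is to read the node's `spec.unschedulable` field, as in this sketch. The helper name `is_cordoned` is my own. If the drain is refused because of pods using emptyDir volumes, adding `--delete-emptydir-data` to the drain command may be needed, but that deletes the data in those volumes.

```shell
# Return 0 if the given node is cordoned (marked unschedulable).
# The field is only set to "true" on cordoned nodes; otherwise it is empty.
is_cordoned() {
    [ "$(kubectl get node "$1" -o jsonpath='{.spec.unschedulable}')" = "true" ]
}

# Example:
#   is_cordoned cka-control-1 && echo "cka-control-1 is cordoned"
```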

Now we can go ahead and run the upgrade of the kubelet and kubectl

sudo apt-mark unhold kubelet kubectl

sudo apt-get install kubelet='1.28.6-1.1' kubectl='1.28.6-1.1'

sudo apt-mark hold kubelet kubectl

Note that during the kubelet upgrade I was prompted whether I wanted to restart the kubelet service. I chose to defer this.

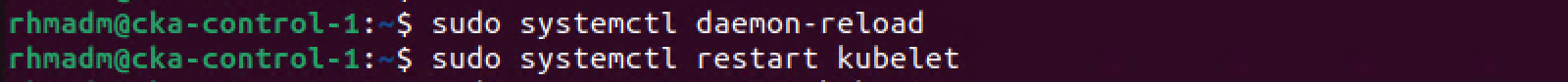

We're ready to restart the kubelet

sudo systemctl daemon-reload

sudo systemctl restart kubelet
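Before looking at the cluster we can do a quick local check on the node. The sketch below verifies that the kubelet service is active and that the installed kubelet binary reports the expected version. The helper name `kubelet_ready` is my own, and it only checks the local service and binary, not what the node reports to the API server.

```shell
# Return 0 if the kubelet service is active and the installed binary
# reports the wanted version (e.g. v1.28.6).
kubelet_ready() {
    want=$1
    systemctl is-active --quiet kubelet || return 1
    # "kubelet --version" prints a line like "Kubernetes v1.28.6"
    [ "$(kubelet --version)" = "Kubernetes $want" ]
}

# Example:
#   kubelet_ready v1.28.6 && echo "kubelet is running on v1.28.6"
```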

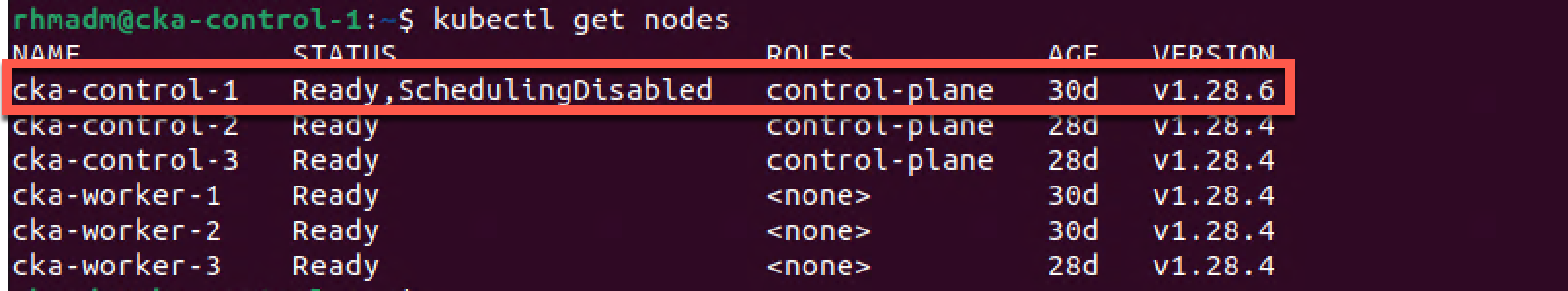

At this point we see that our first node is reporting the new version in the cluster

kubectl get nodes

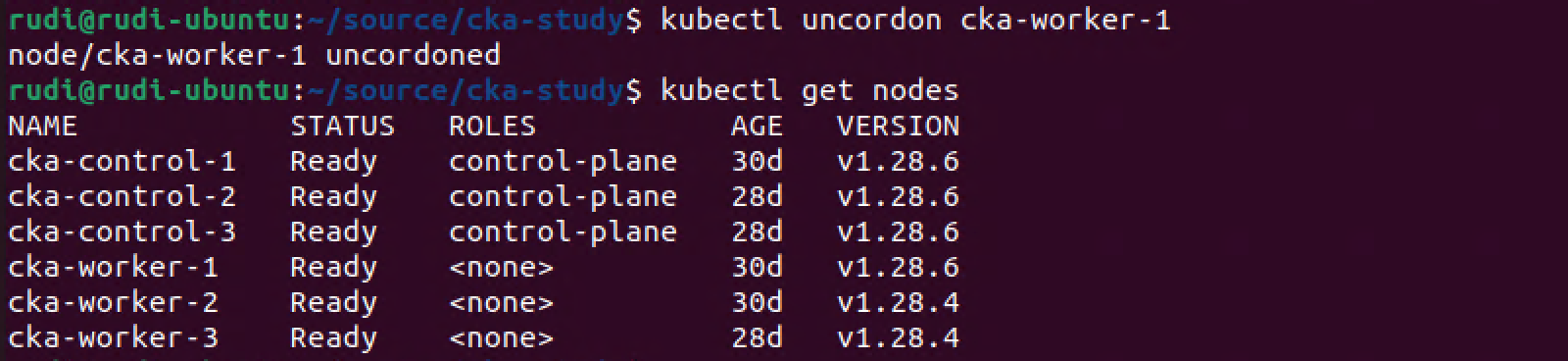

Let's put the control plane node back in business by uncordoning it

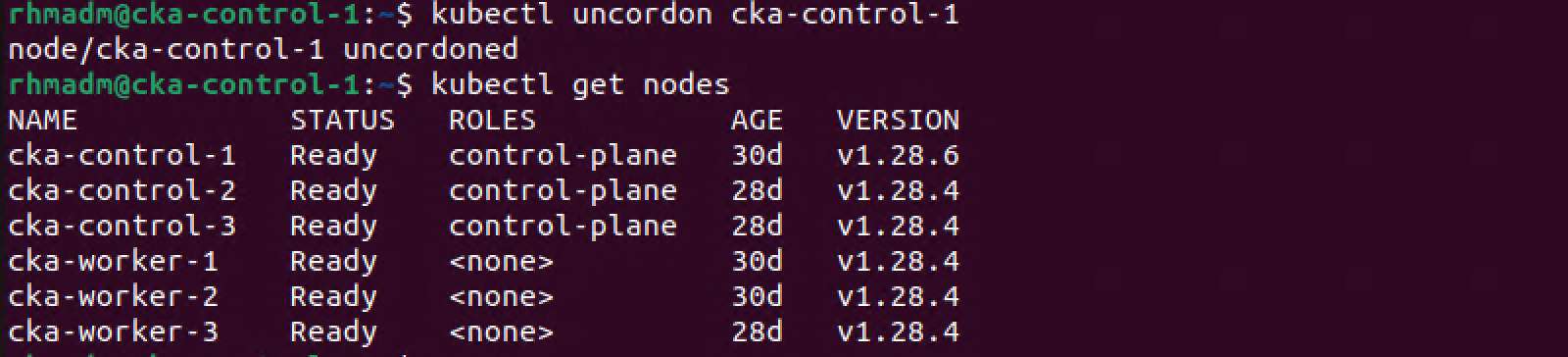

kubectl uncordon cka-control-1

kubectl get nodes

Our first control plane node has been uncordoned and is ready for running workloads.
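Since the first node should be verified before we move on, a simple extra check is to ask the API server for its readiness endpoint and take a look at the control plane pods. This is a sketch with a helper name of my own, `api_ready`. Keep in mind that if your kubeconfig points at the HAProxy address, the request may be answered by any of the API servers, not necessarily the one we just upgraded.

```shell
# Return 0 if the API server answers "ok" on its /readyz endpoint.
api_ready() {
    [ "$(kubectl get --raw='/readyz')" = "ok" ]
}

# Example:
#   api_ready && kubectl get pods -n kube-system -o wide
```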

Upgrade remaining control plane nodes

Now we can continue with upgrading the remaining two control plane nodes. The process is the same as for the first control plane node, with the only difference being that we'll run kubeadm upgrade node instead of kubeadm upgrade apply.

Upgrade kubeadm

On both the remaining control plane nodes we'll run the following

sudo apt-mark unhold kubeadm

sudo apt-get install kubeadm='1.28.6-1.1'

sudo apt-mark hold kubeadm

Now we'll run the upgrade plan command to check that our node is ready for upgrade

sudo kubeadm upgrade plan

The output is similar to what we got from the plan on the first node so we'll go ahead and run the upgrade node command

sudo kubeadm upgrade node

Again we're instructed to go ahead and upgrade the kubelet for our node.

Upgrade kubelet and kubectl on remaining control plane nodes

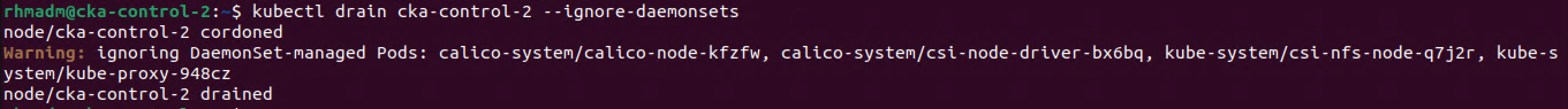

The first thing we'll do is to drain the node

kubectl drain cka-control-2 --ignore-daemonsets

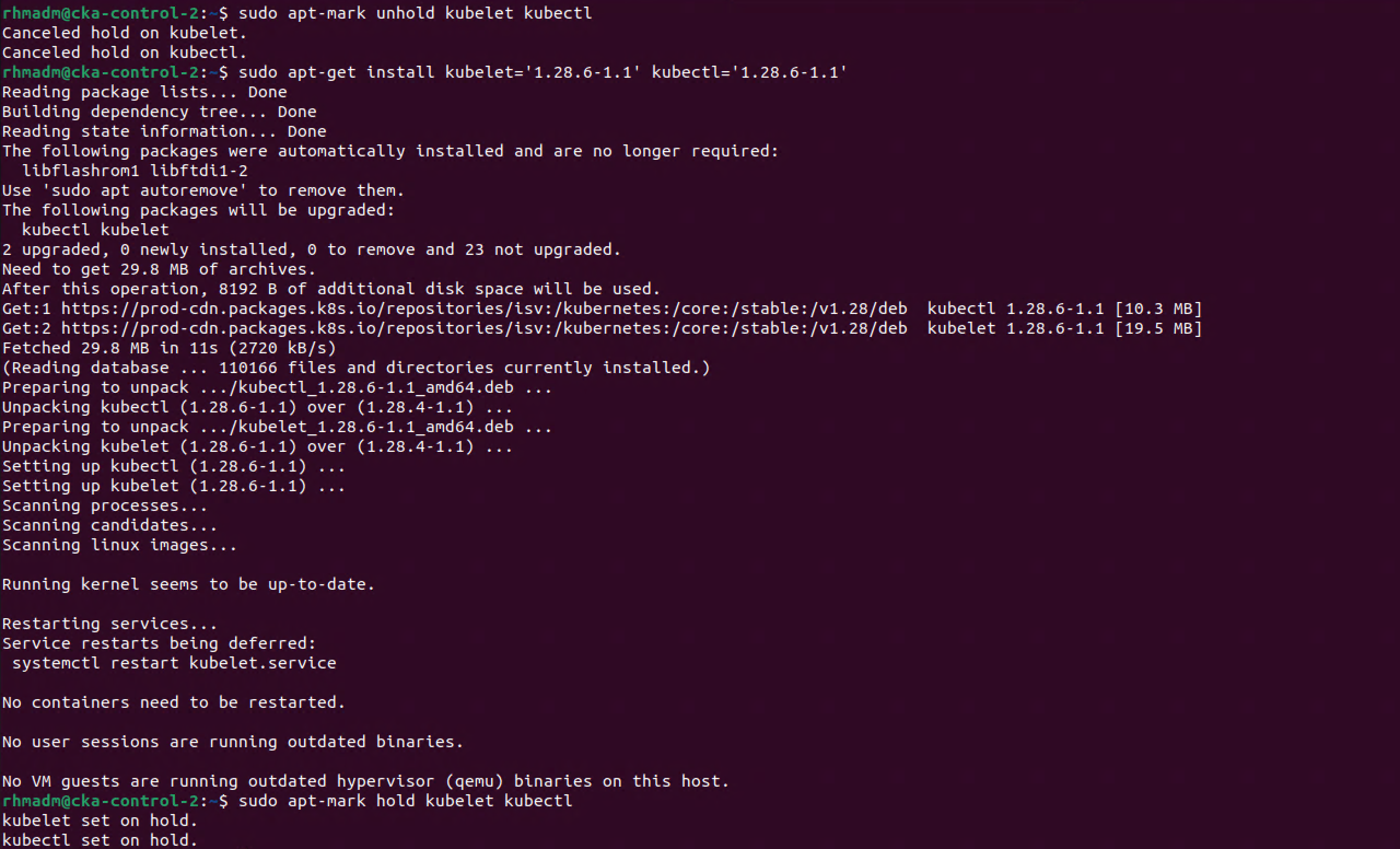

Now we'll run the commands for upgrading kubelet and kubectl

sudo apt-mark unhold kubelet kubectl

sudo apt-get install kubelet='1.28.6-1.1' kubectl='1.28.6-1.1'

sudo apt-mark hold kubelet kubectl

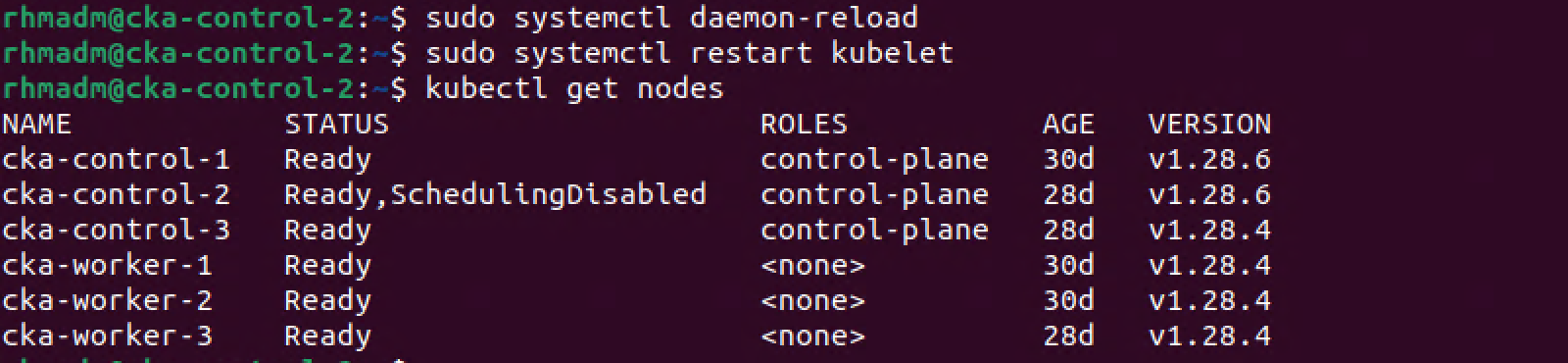

With the kubelet upgraded we can restart the kubelet and check our cluster status

sudo systemctl daemon-reload

sudo systemctl restart kubelet

kubectl get nodes

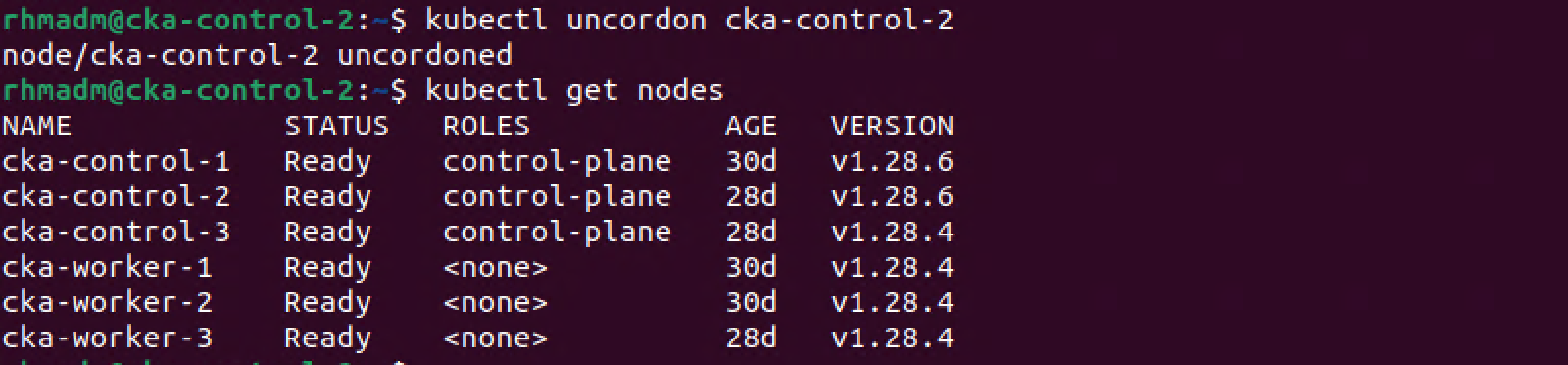

With the kubelet restarted we'll bring our node back into the cluster

kubectl uncordon cka-control-2

kubectl get nodes

Now we have upgraded the second node. To finish upgrading the control plane we'll run the same steps on the third control plane node before we start with the worker nodes.

Since the steps are the same as for the second control plane node we'll skip the detailed steps for the third node.

After running the steps for the third control plane node our cluster has the following status

Upgrade worker nodes

Kubernetes documentation reference

The upgrade steps for the worker nodes are very similar to the steps performed on control plane nodes 2 and 3. The slight difference is that we could, if we wanted to, upgrade a few nodes simultaneously. Just note that you must make sure that there are enough resources left in the cluster for running the workloads while those nodes are drained.

High-level steps for upgrading worker nodes (with kubeadm):

- Upgrade kubeadm package

- Run kubeadm upgrade node

- Drain node to evict running pods

- Upgrade kubelet and kubectl

- Put node back to work

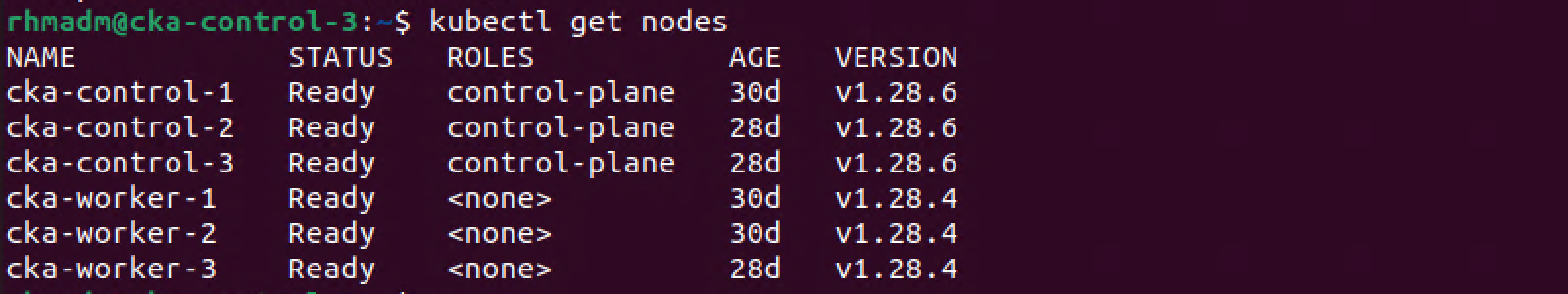

Upgrade kubeadm on worker node

sudo apt-mark unhold kubeadm

sudo apt-get install kubeadm='1.28.6-1.1'

sudo apt-mark hold kubeadm

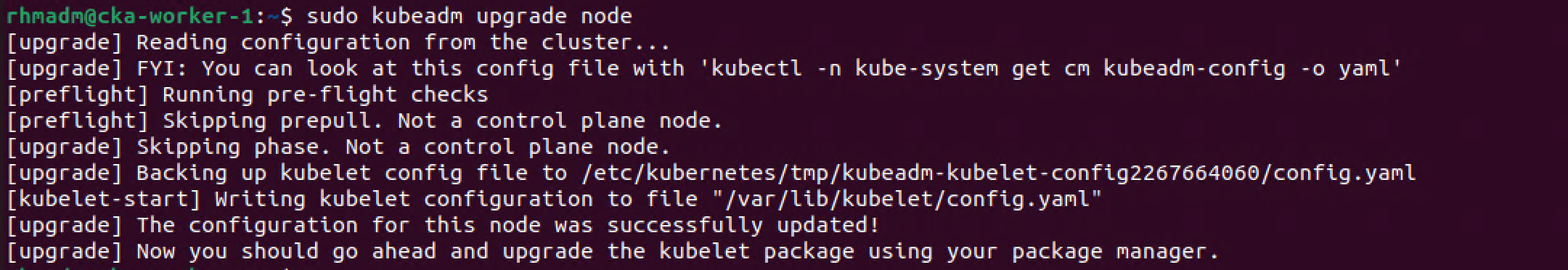

Now we can continue with running the kubeadm upgrade node command

sudo kubeadm upgrade node

Since this is a worker node this command is much faster than on our control plane nodes.

With the kubeadm upgrade done we can go ahead with upgrading kubelet and kubectl on the worker node

Upgrade kubelet on worker node

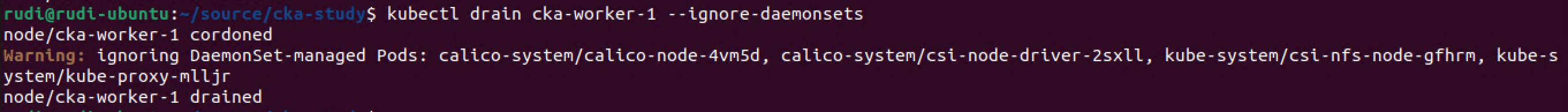

As with the control plane nodes we'll have to drain the worker node we're about to upgrade

kubectl drain cka-worker-1 --ignore-daemonsets

Note that the kubectl commands used when upgrading the worker nodes are run from my client machine, since I don't have the admin kubeconfig on the worker nodes. If you're running kubectl from outside the cluster nodes you might need to upgrade kubectl on your client as well.

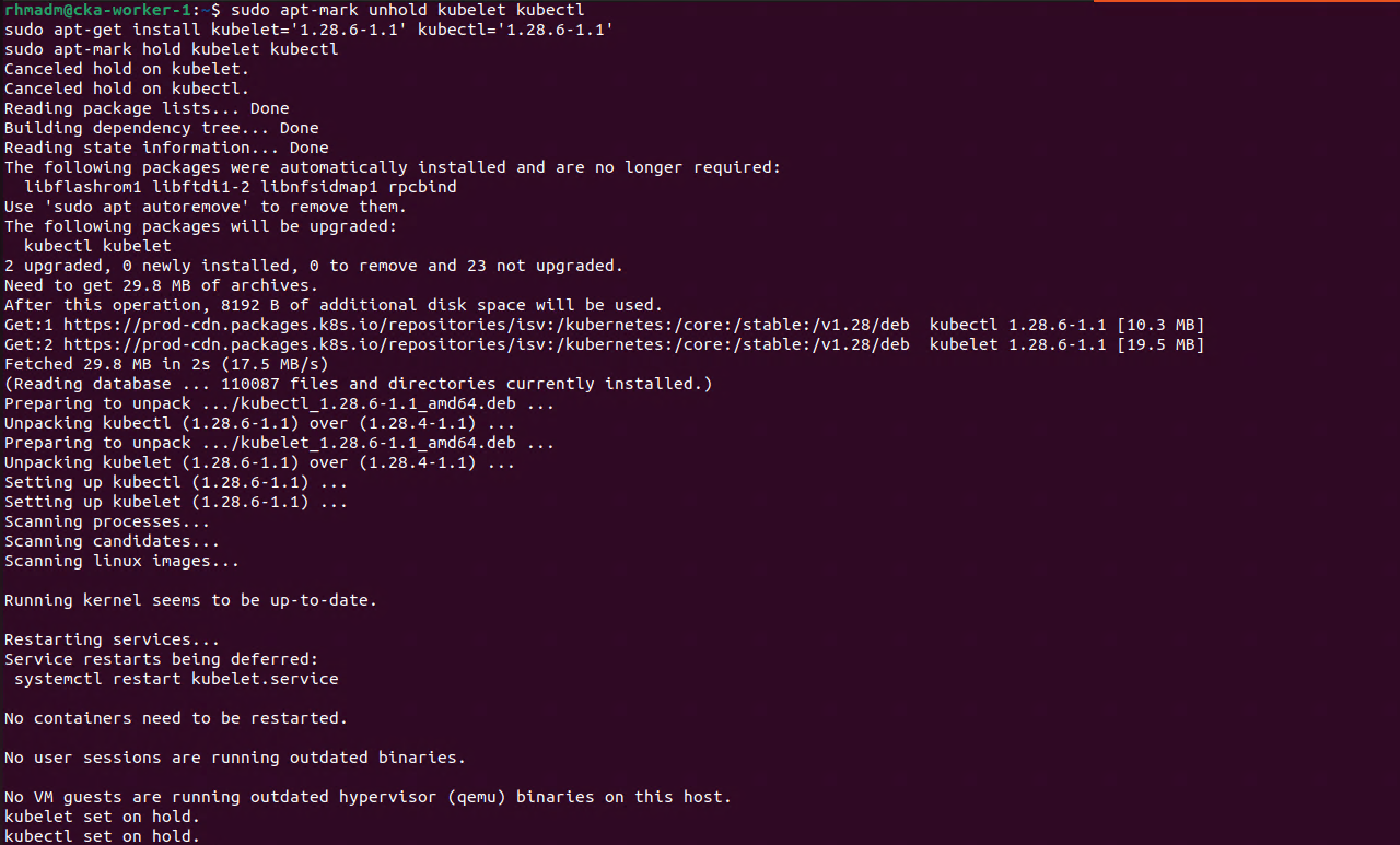

With the worker node drained we can go ahead with upgrading the kubelet

sudo apt-mark unhold kubelet kubectl

sudo apt-get install kubelet='1.28.6-1.1' kubectl='1.28.6-1.1'

sudo apt-mark hold kubelet kubectl

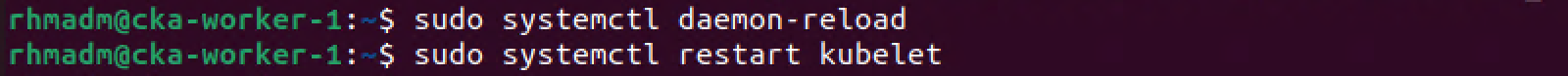

And to finish up the upgrade we'll restart the kubelet on the node and uncordon the node

sudo systemctl daemon-reload

sudo systemctl restart kubelet

With the kubelet restarted we can check the status on our cluster

kubectl get nodes

With the worker node upgraded we can uncordon it to bring it back into the cluster

kubectl uncordon cka-worker-1

kubectl get nodes

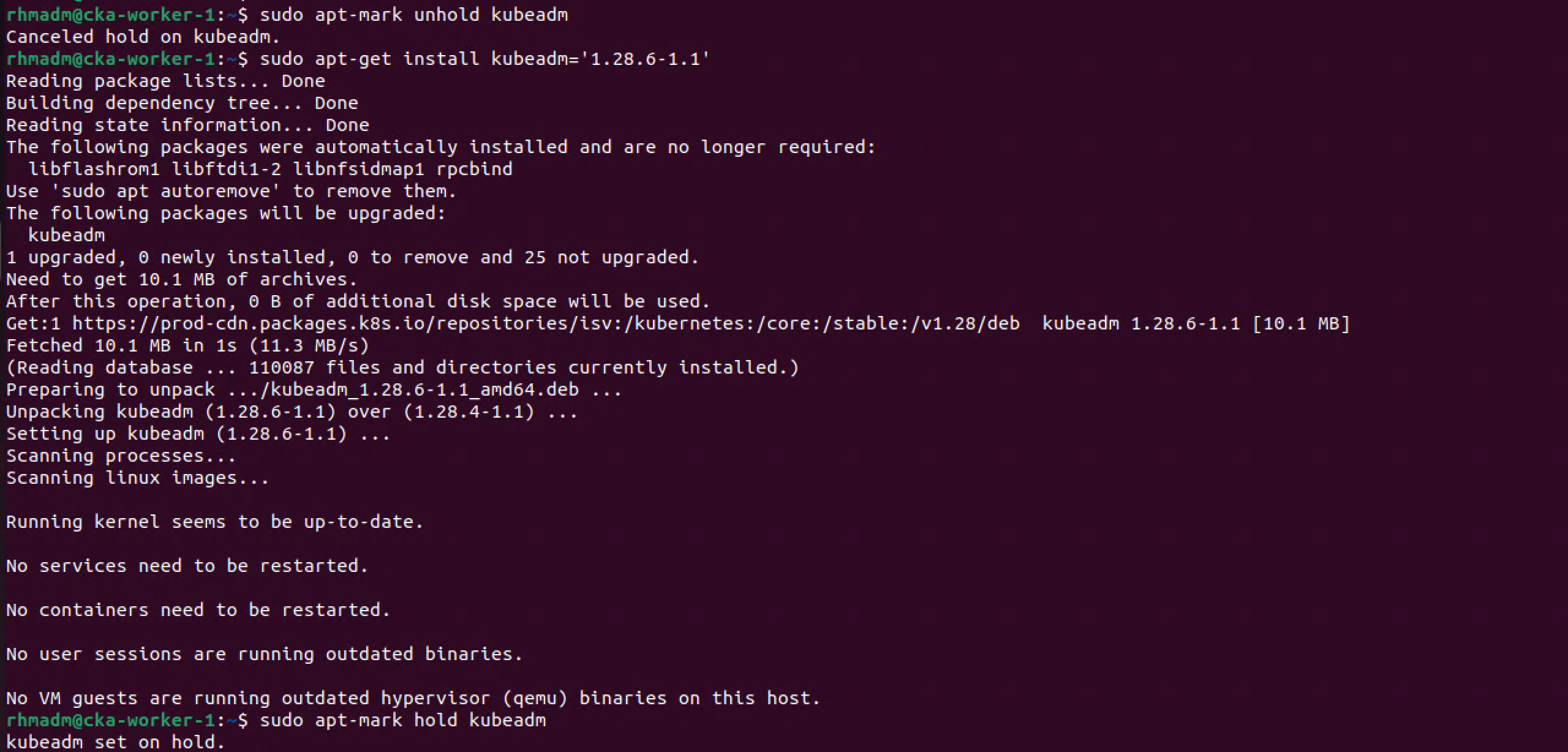

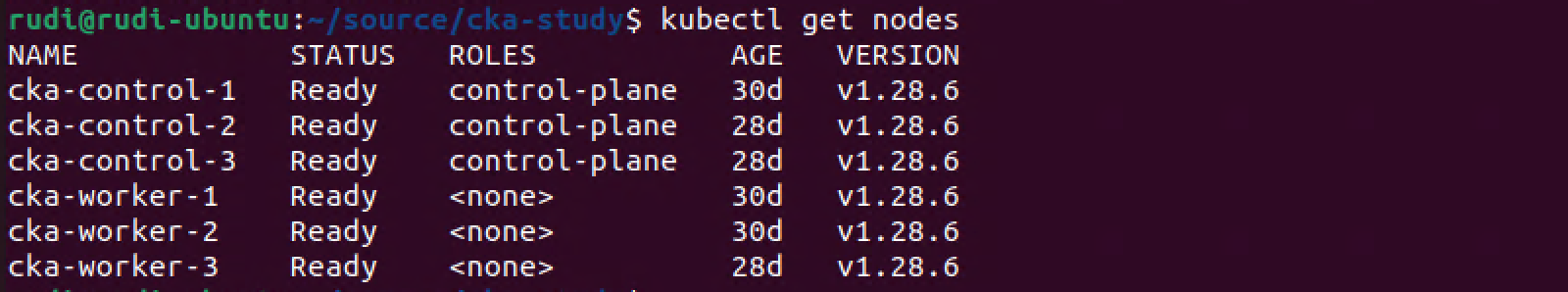

With our first worker upgraded we can run the same steps on the remaining worker nodes to finish up our cluster.
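For the remaining workers, the steps we just ran by hand could be strung together from the client machine, roughly like the sketch below. This is not how I ran it in this post. It assumes SSH access to the node with passwordless sudo, that the node name works as an SSH host name, and that apt can run without prompting. The function name `upgrade_worker` and the node names in the example are assumptions of mine.

```shell
# Upgrade a single worker node from a client machine.
# Usage: upgrade_worker NODE PACKAGE_VERSION
upgrade_worker() {
    node=$1
    version=$2   # package version, e.g. 1.28.6-1.1

    # 1. Upgrade kubeadm and run the node upgrade
    ssh "$node" "sudo apt-mark unhold kubeadm && sudo apt-get install -y kubeadm='$version' && sudo apt-mark hold kubeadm && sudo kubeadm upgrade node" || return 1

    # 2. Drain the node (run from the client, which has the kubeconfig)
    kubectl drain "$node" --ignore-daemonsets || return 1

    # 3. Upgrade kubelet and kubectl, then restart the kubelet
    ssh "$node" "sudo apt-mark unhold kubelet kubectl && sudo apt-get install -y kubelet='$version' kubectl='$version' && sudo apt-mark hold kubelet kubectl && sudo systemctl daemon-reload && sudo systemctl restart kubelet" || return 1

    # 4. Put the node back to work
    kubectl uncordon "$node"
}

# Example, one node at a time (node names assumed):
#   for n in cka-worker-2 cka-worker-3; do upgrade_worker "$n" 1.28.6-1.1 || break; done
```

Running the nodes one at a time keeps as much capacity as possible available for the evicted pods.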

After all nodes have been upgraded the cluster has the following node status

kubectl get nodes
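If you'd rather not eyeball the VERSION column, the sketch below prints only the nodes whose kubelet version differs from the target. An empty result means every node reports the target version. The helper name `nodes_not_on` is my own.

```shell
# Print the names of nodes whose kubelet version differs from the target.
nodes_not_on() {
    target=$1
    kubectl get nodes --no-headers \
        -o custom-columns=NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion |
        awk -v want="$target" '$2 != want { print $1 }'
}

# Example:
#   nodes_not_on v1.28.6
```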

Summary

This post has shown how to upgrade Kubernetes control plane and worker nodes with kubeadm. Again, please make sure you follow any additional steps needed for doing this in a production environment, and refer to the Kubernetes documentation for your specific version.

Thanks for reading.