Mounting a vSAN Remote Datastore (HCI Mesh)

In this post we'll take a look at how to mount a vSAN datastore on another vSAN-enabled cluster.

Remote vSAN datastores, also known as vSAN HCI Mesh, were introduced in September 2020.

This feature lets us use vSAN storage on vSphere clusters that do not (yet) have vSAN enabled or that need additional storage. Note that both clusters involved are called vSAN clusters, even though one of them only has a remote vSAN datastore mounted.

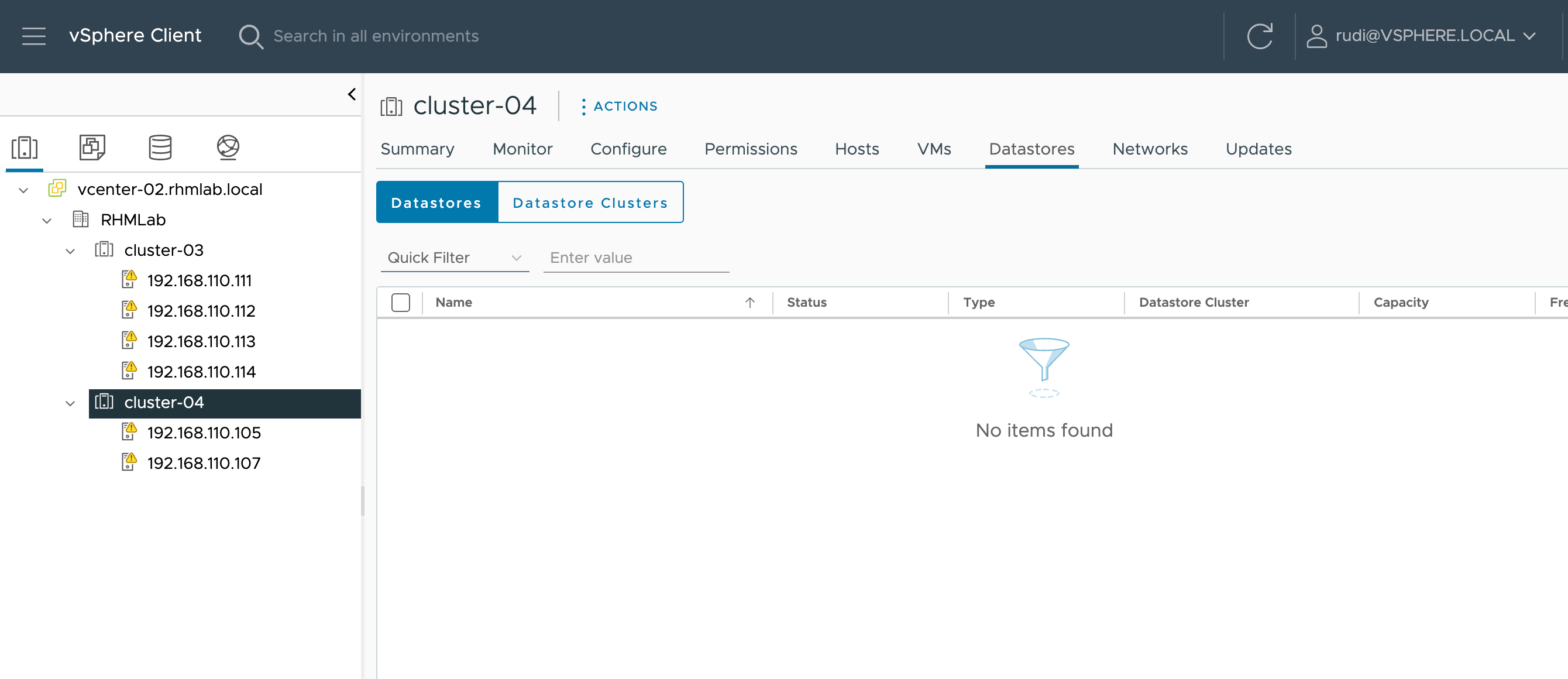

Before we go into the prerequisites, let's look at a new cluster we have set up, which has two hosts but no storage available.

Prerequisites

There are several requirements that need to be in place before we can use vSAN HCI Mesh.

The following list is taken from the official VMware documentation:

HCI Mesh vSAN has the following design considerations:

- Clusters must be managed by the same vCenter Server and be located within the same data center.

- Clusters must be running 7.0 Update 1 or later.

- A vSAN cluster can serve its local datastore to up to ten client vSAN clusters.

- A client cluster can mount up to five remote datastores from one or more vSAN server clusters.

- A single remote datastore can be mounted to up to 128 vSAN hosts, including hosts in the vSAN server cluster.

- All objects that make up a VM must reside on the same datastore.

- For vSphere HA to work with HCI Mesh, configure the following failure response for Datastore with APD: Power off and restart VMs.

- Client hosts that are not part of a cluster are not supported. You can configure a single host compute-only cluster, but vSphere HA does not work unless you add a second host to the cluster.
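The numeric and placement limits in the list above lend themselves to a simple pre-flight check. The following is a minimal Python sketch, not a VMware tool: `Cluster`, `check_mount` and all inventory values are hypothetical, and a real check would read this data from vCenter instead of hand-written objects. It covers only the vCenter, data center, version and count limits; the HA and standalone-host considerations are left out.

```python
from dataclasses import dataclass, field

# Limits from the HCI Mesh design considerations listed above.
MAX_CLIENT_CLUSTERS_PER_SERVER = 10    # client clusters one server cluster can serve
MAX_REMOTE_DATASTORES_PER_CLIENT = 5   # remote datastores one client cluster can mount
MAX_HOSTS_PER_DATASTORE = 128          # hosts (incl. server cluster hosts) per remote datastore
MIN_VERSION = (7, 0, 1)                # 7.0 Update 1, encoded as (major, minor, update)


@dataclass
class Cluster:
    # Hypothetical, simplified view of a cluster; not a vSphere API object.
    name: str
    vcenter: str
    datacenter: str
    version: tuple
    host_count: int
    mounted_remote: list = field(default_factory=list)  # remote datastores already mounted


def check_mount(client, server, server_clients, datastore_hosts):
    """Return the design considerations a new mount would violate (empty list = OK).

    server_clients:  client clusters the server cluster already serves
    datastore_hosts: hosts already mounting the datastore, incl. the server cluster
    """
    problems = []
    if client.vcenter != server.vcenter:
        problems.append("clusters are managed by different vCenter Servers")
    if client.datacenter != server.datacenter:
        problems.append("clusters are in different data centers")
    for cluster in (client, server):
        if cluster.version < MIN_VERSION:
            problems.append(f"{cluster.name} is older than 7.0 Update 1")
    if server_clients + 1 > MAX_CLIENT_CLUSTERS_PER_SERVER:
        problems.append(f"{server.name} already serves {server_clients} client clusters")
    if len(client.mounted_remote) + 1 > MAX_REMOTE_DATASTORES_PER_CLIENT:
        problems.append(f"{client.name} already mounts {len(client.mounted_remote)} remote datastores")
    if datastore_hosts + client.host_count > MAX_HOSTS_PER_DATASTORE:
        problems.append("datastore would be mounted by more than 128 hosts")
    return problems


if __name__ == "__main__":
    # Hypothetical inventory: host counts, vCenter and data center names are made up.
    server = Cluster("cluster-03", "vcenter-01", "dc-01", (7, 0, 1), host_count=3)
    client = Cluster("compute-cluster", "vcenter-01", "dc-01", (7, 0, 1), host_count=2)
    print(check_mount(client, server, server_clients=0, datastore_hosts=3))  # → []
```

Each limit is checked as "current usage + this new mount", so the function answers the question the mount wizard effectively asks: would adding this one mount break a limit?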

What we will configure in this post is what the documentation refers to as an HCI Mesh compute-only cluster, which has the following design considerations:

- vSAN networking must be configured on the client hosts.

- No disk groups can be present on vSAN compute-only hosts.

- No vSAN data management features can be configured on the compute-only cluster.
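The three compute-only conditions can be sketched the same way. This is a minimal illustration under stated assumptions: `HostVsanState` and `compute_only_violations` are hypothetical names, and the host and data-service values would in practice come from vCenter. Disk groups and the vSAN VMkernel port are checked per host, while data management features are checked once for the cluster, matching how the list above phrases them.

```python
from dataclasses import dataclass, field


@dataclass
class HostVsanState:
    # Hypothetical, simplified per-host view; not a vSphere API object.
    name: str
    vsan_vmknics: list = field(default_factory=list)  # VMkernel ports tagged for vSAN traffic
    disk_groups: int = 0                              # local vSAN disk groups on the host


def compute_only_violations(hosts, cluster_data_services):
    """Return reasons the cluster does not qualify as an HCI Mesh compute-only cluster.

    cluster_data_services: vSAN data management features enabled on the
    cluster (for example deduplication and compression, or encryption).
    """
    problems = []
    for host in hosts:
        if not host.vsan_vmknics:
            problems.append(f"{host.name}: no vSAN VMkernel port")
        if host.disk_groups:
            problems.append(f"{host.name}: has {host.disk_groups} disk group(s)")
    if cluster_data_services:
        problems.append(f"cluster has data services enabled: {sorted(cluster_data_services)}")
    return problems


if __name__ == "__main__":
    # Hypothetical hosts matching the two-host cluster in this post.
    hosts = [HostVsanState("esx-a", ["vmk1"]), HostVsanState("esx-b", ["vmk1"])]
    print(compute_only_violations(hosts, set()))  # → []
```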

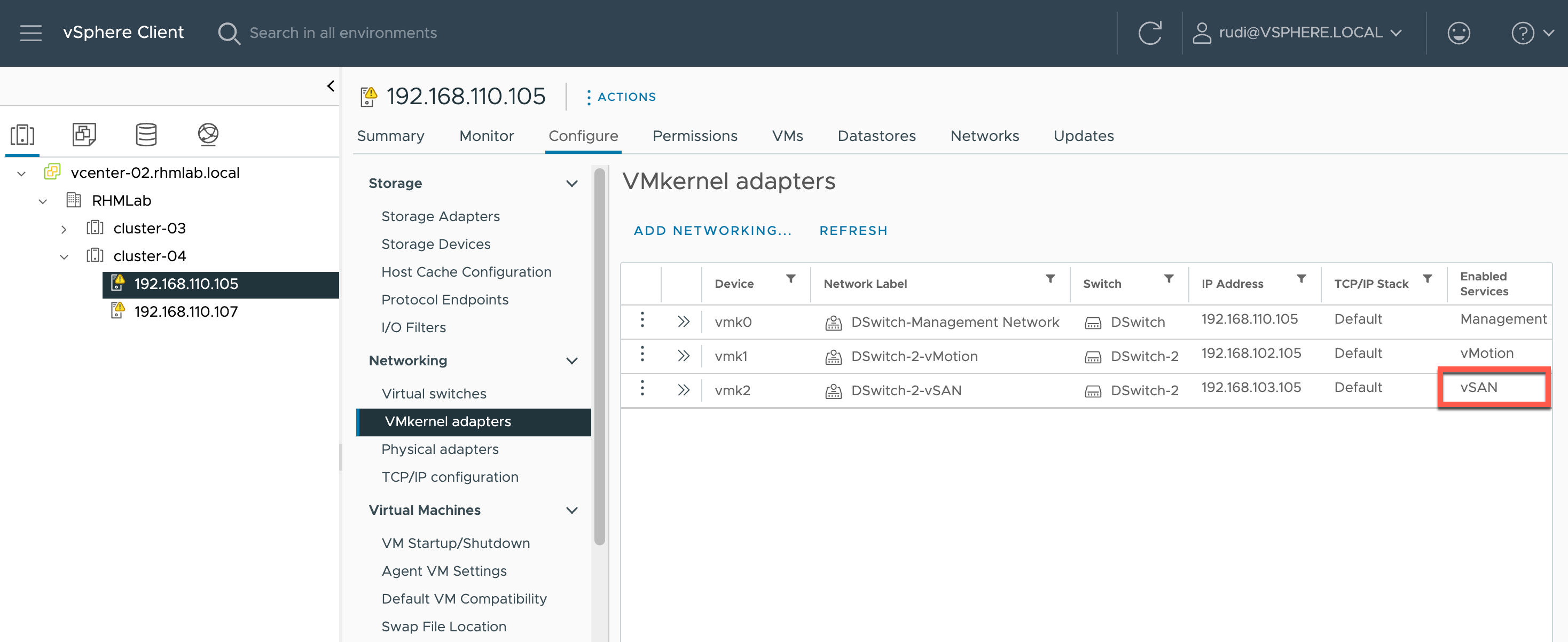

Our new cluster has no local datastores, so there are no disk groups and no vSAN data management features configured. We do, however, have to set up vSAN networking, which we'll do with a VMkernel port on the same port group as the hosts in the existing vSAN cluster.

vSAN network

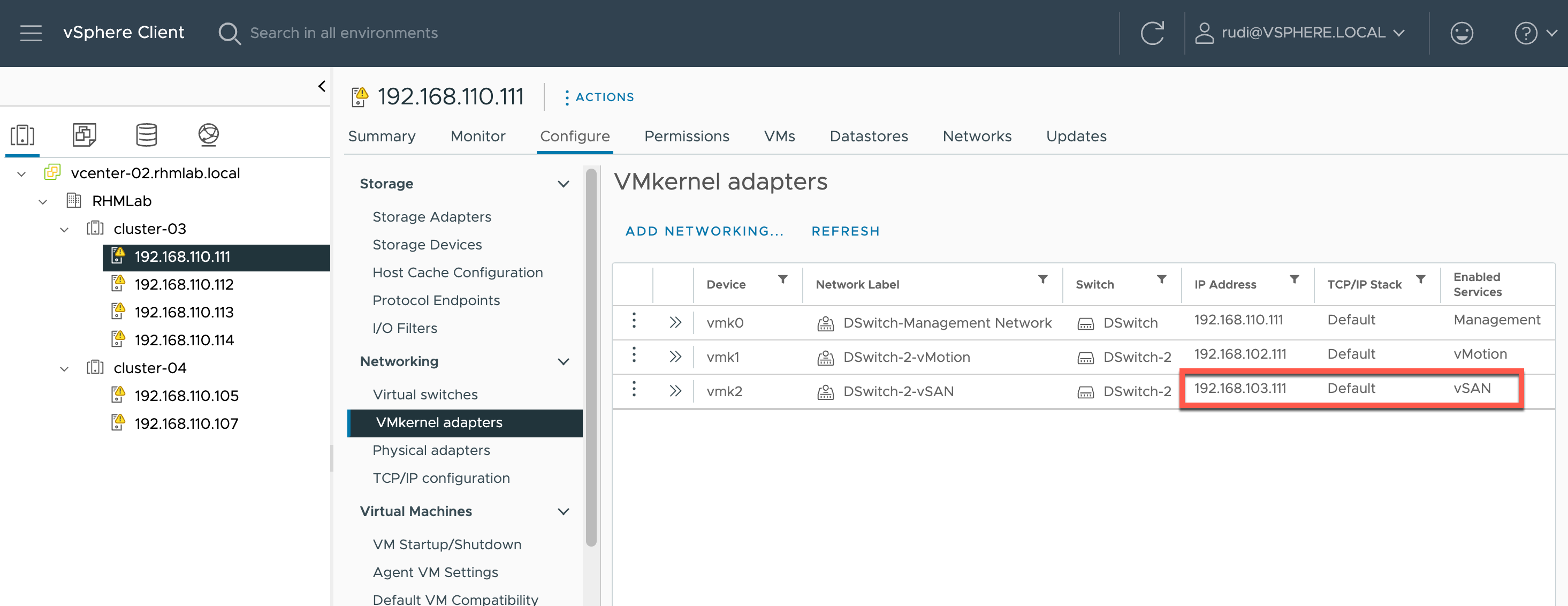

On one of the hosts in the existing vSAN cluster, we'll check the VMkernel ports to see the vSAN network details.

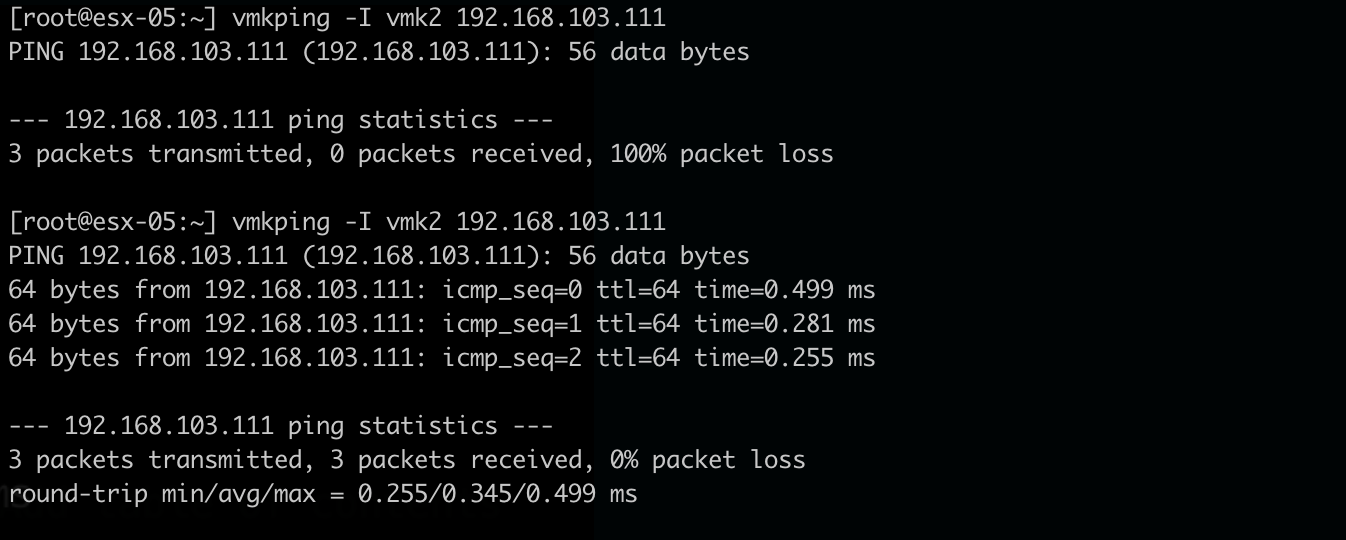

This VMkernel port is on the 192.168.103.0/24 network, so we can log in to one of our new hosts and verify that it can reach this network by running vmkping from the ESXi shell.

Note that the vSphere UI also verifies network connectivity before enabling HCI Mesh.
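Before running vmkping, it can help to confirm that the new host's vSAN VMkernel address actually falls inside the existing vSAN subnet. The sketch below uses Python's standard `ipaddress` module for that check and then builds the vmkping command to run on the host. The interface name `vmk1` and both host addresses are hypothetical; only the 192.168.103.0/24 network comes from this post.

```python
import ipaddress

# vSAN network observed on the existing cluster's VMkernel port.
VSAN_NETWORK = ipaddress.ip_network("192.168.103.0/24")


def on_vsan_network(vmk_ip, network=VSAN_NETWORK):
    """True if a VMkernel IP address falls inside the vSAN subnet."""
    return ipaddress.ip_address(vmk_ip) in network


def vmkping_command(vmknic, target_ip):
    """Build the vmkping command to run in the ESXi shell of a new host.

    -I sends the ping out of the given VMkernel interface, so the test
    exercises the vSAN port rather than the management network.
    """
    return f"vmkping -I {vmknic} {target_ip}"


if __name__ == "__main__":
    # Hypothetical addresses: the new host's vSAN vmk and an existing cluster host.
    new_host_vmk, existing_host = "192.168.103.21", "192.168.103.11"
    if on_vsan_network(new_host_vmk) and on_vsan_network(existing_host):
        print(vmkping_command("vmk1", existing_host))  # → vmkping -I vmk1 192.168.103.11
```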

Enable vSAN remote datastore

With the prerequisites verified, we can go ahead and mount our remote vSAN datastore on our new cluster.

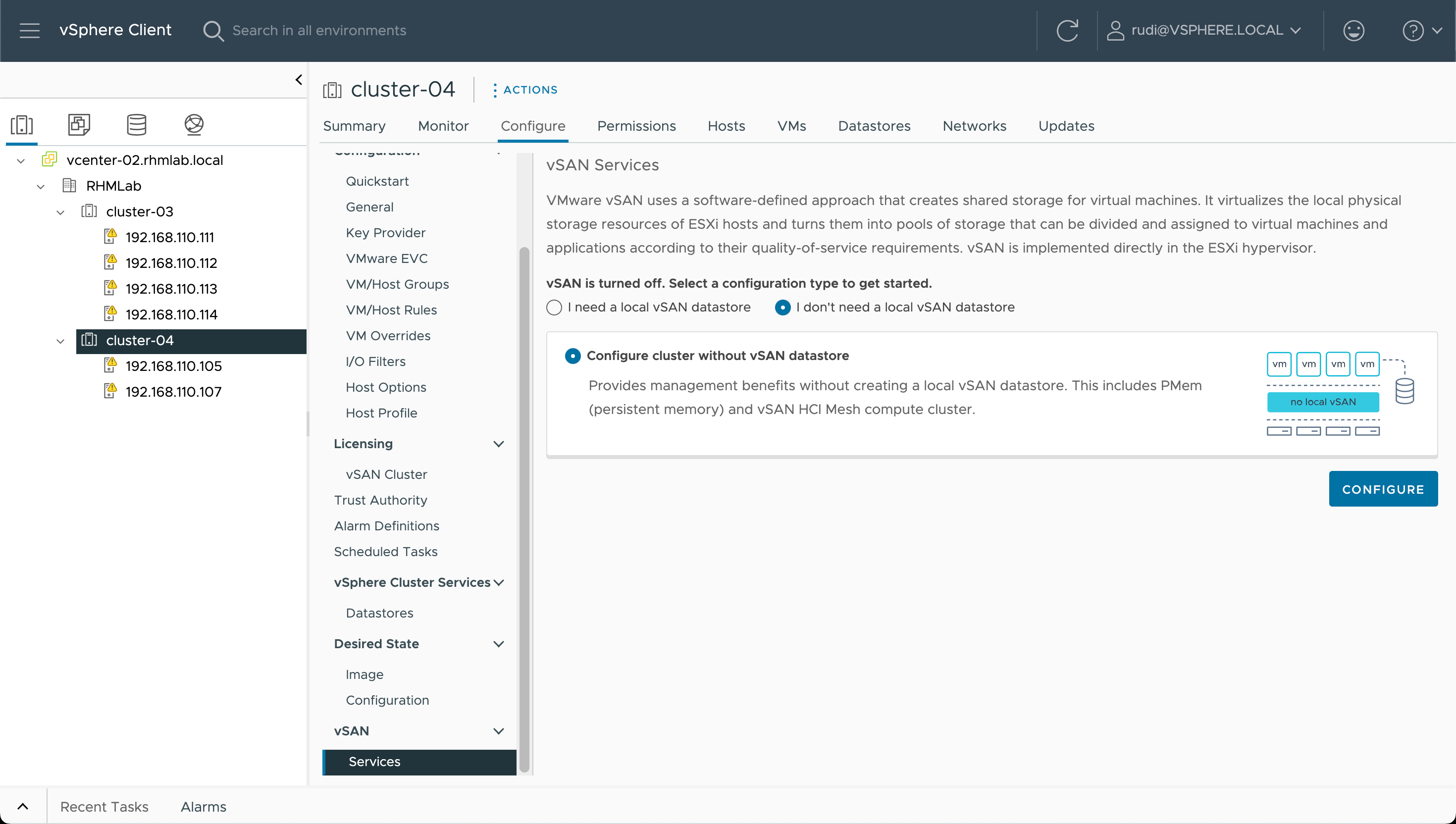

On our new cluster, we'll go to Configure->vSAN->Services and select "I don't need a local vSAN datastore".

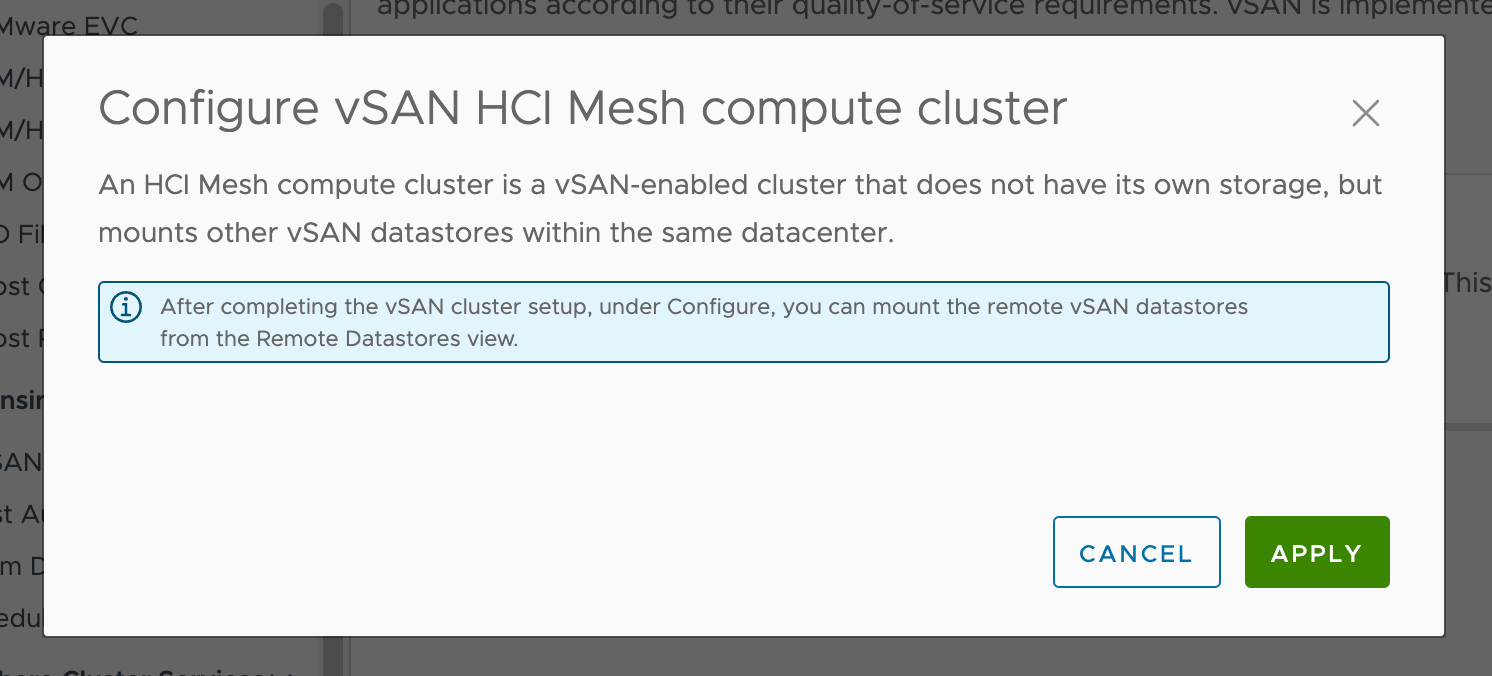

After clicking Configure, we get a message that we need to accept.

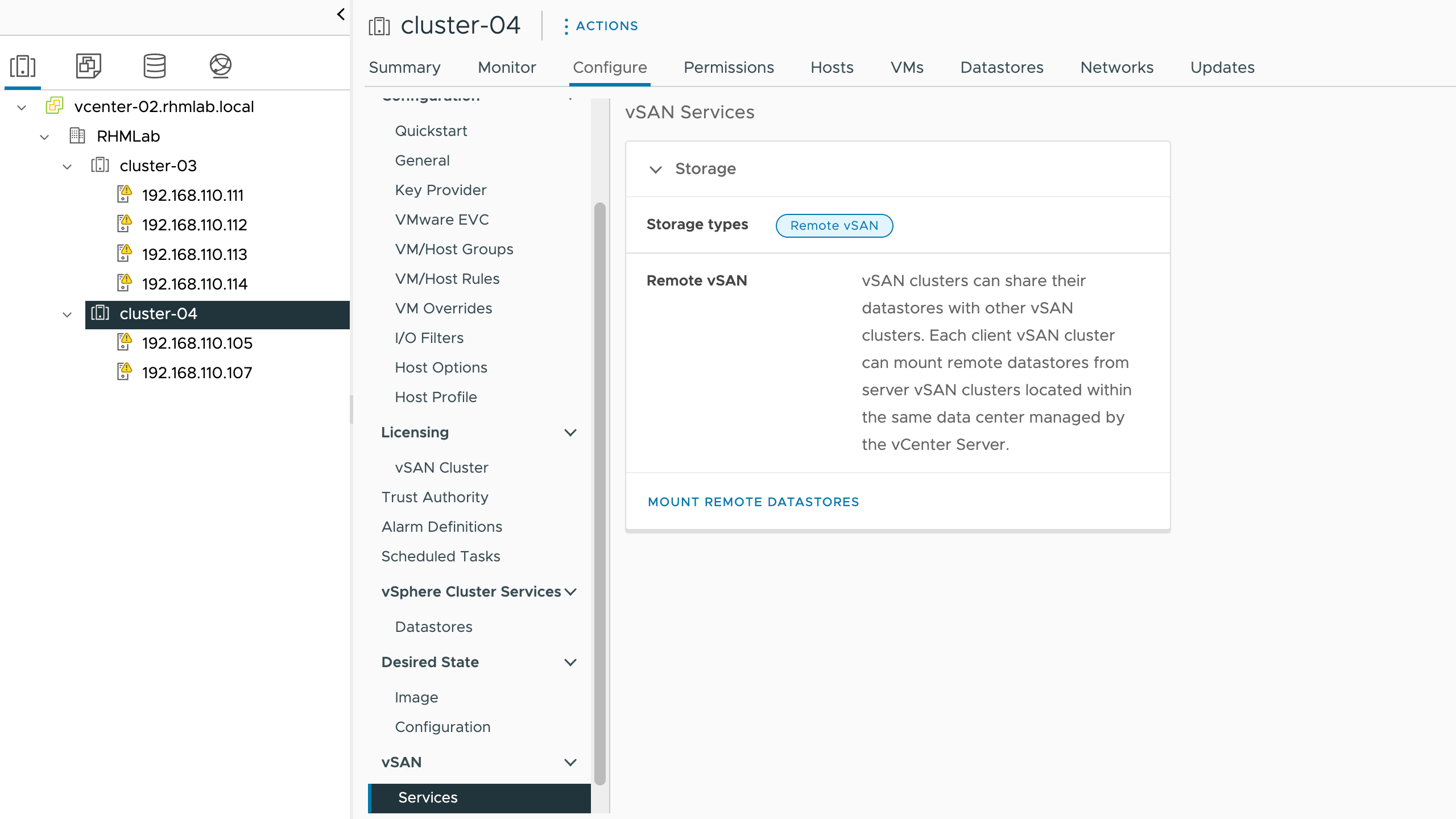

We should now have vSAN enabled for remote datastores.

Mount remote datastore

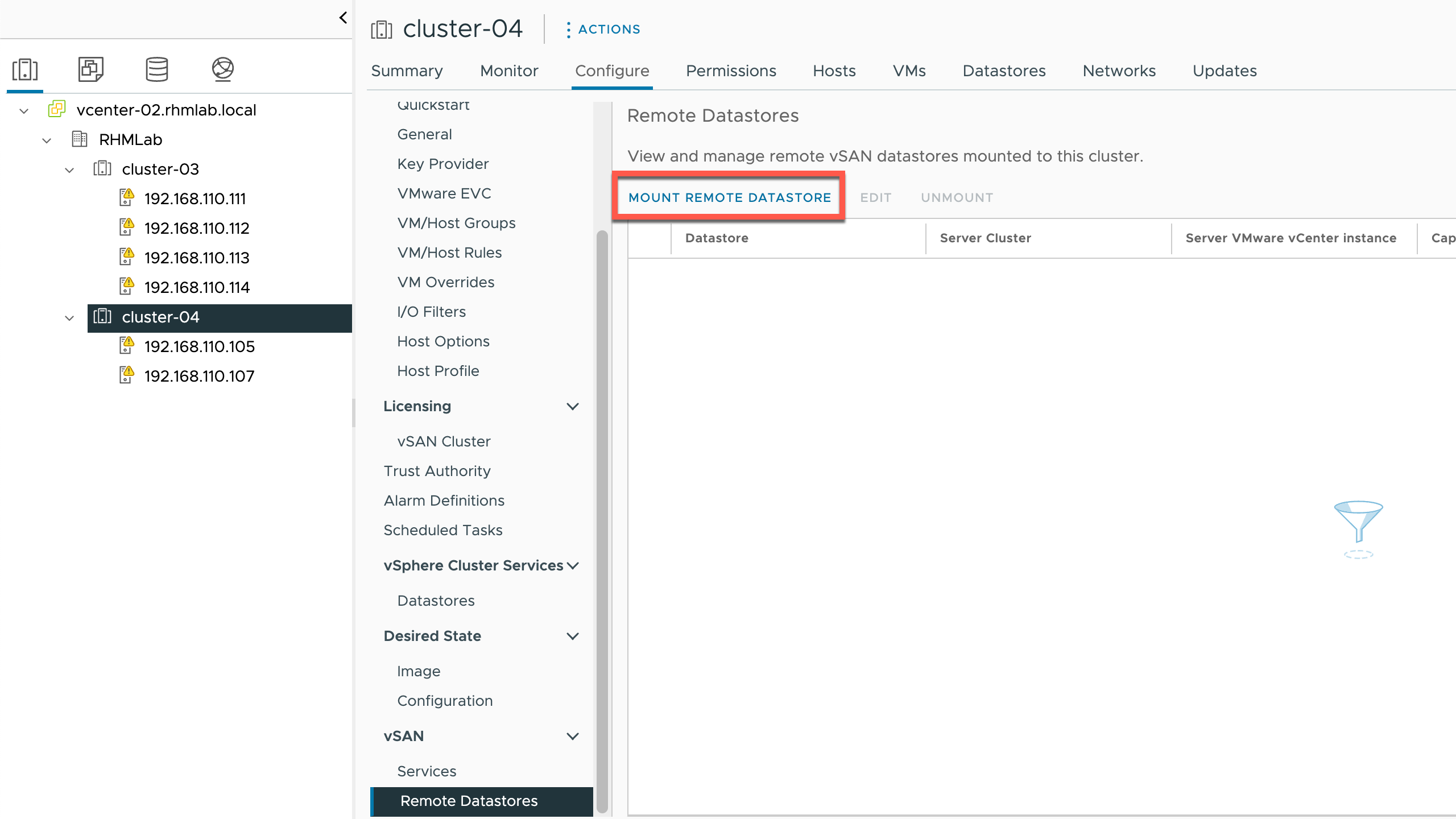

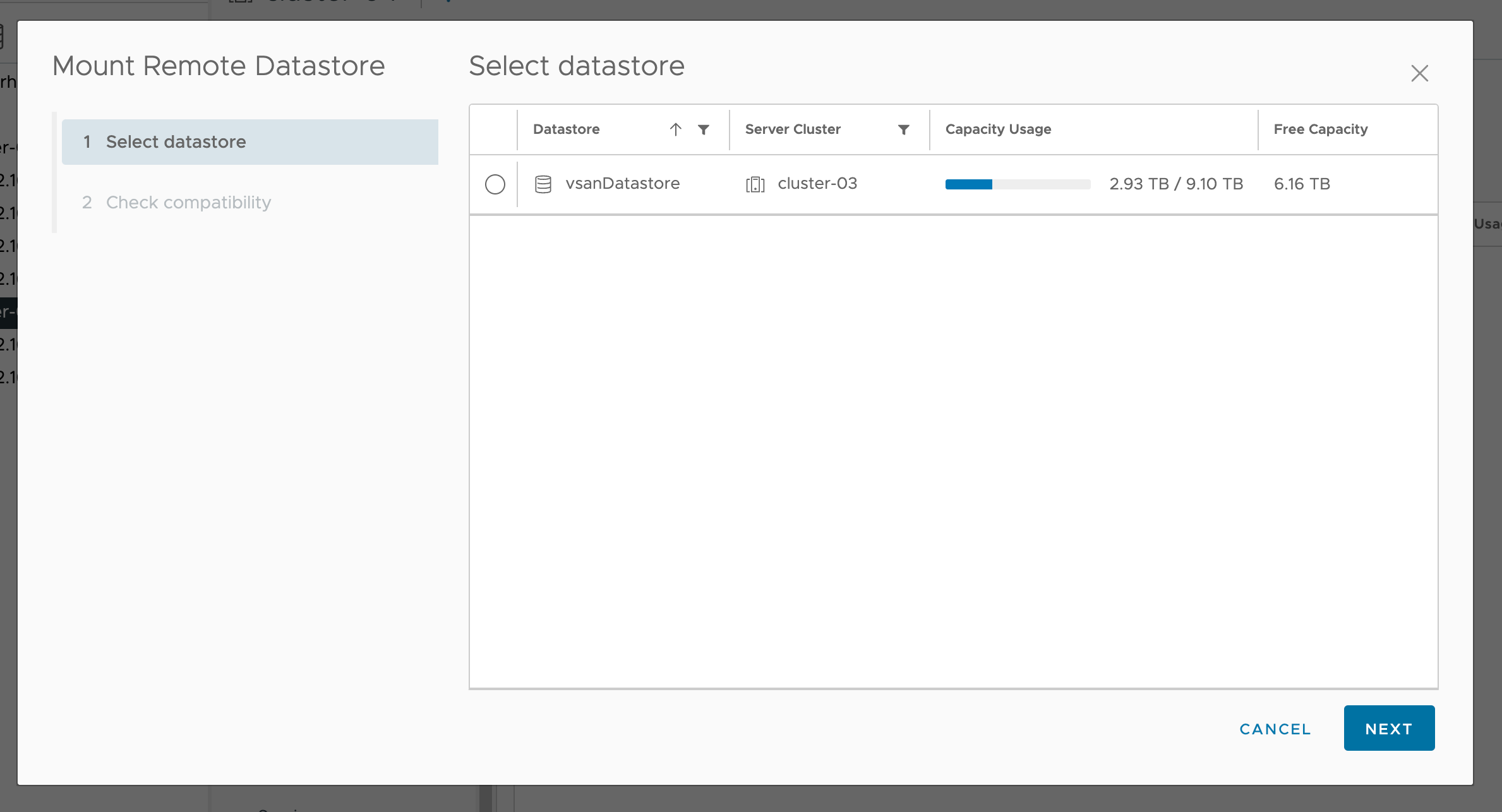

We can now go ahead and mount our remote datastore. We'll select the Remote Datastores menu option and click Mount Remote Datastore.

The wizard finds our existing vSAN datastore from cluster-03.

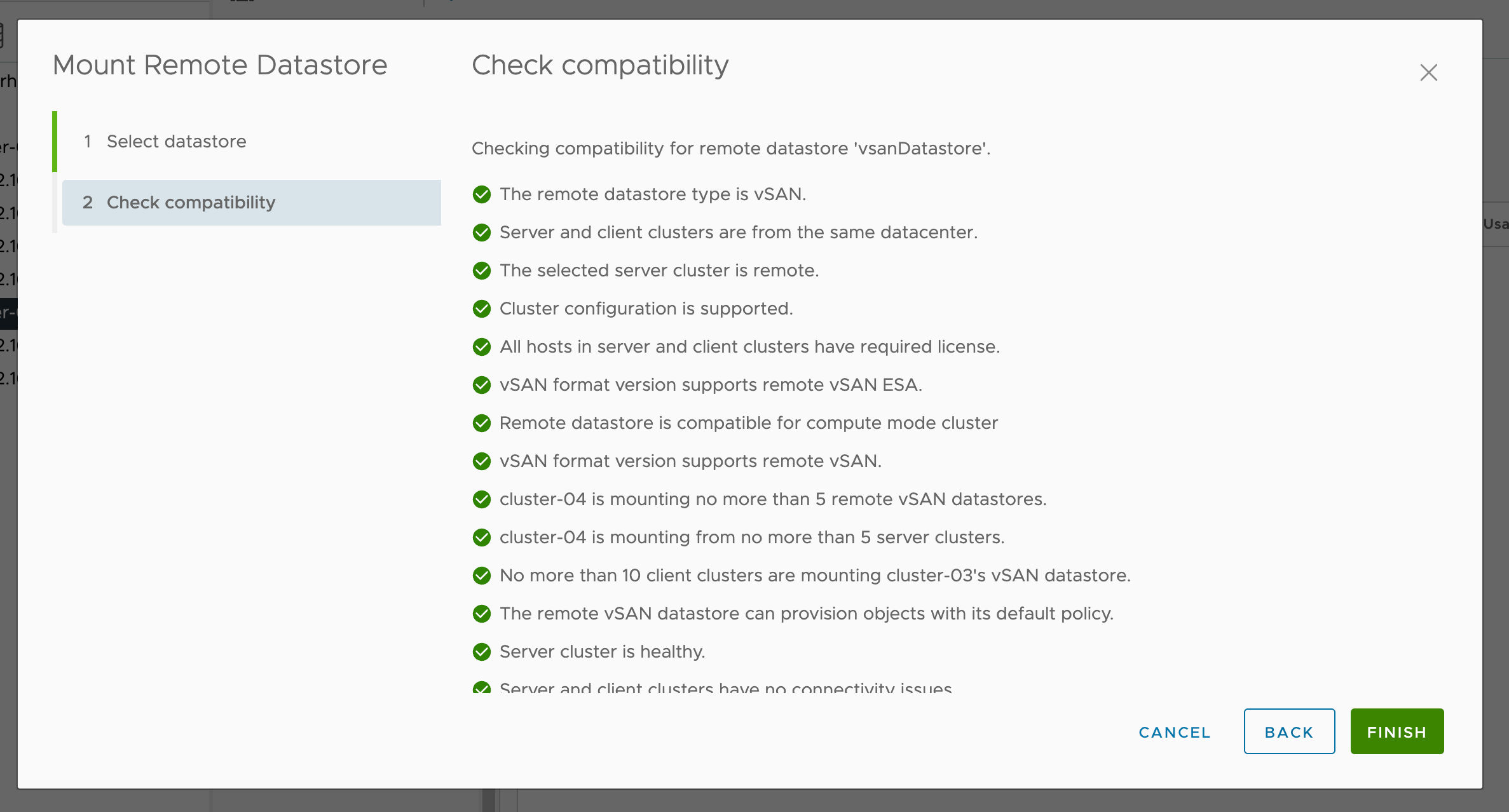

After we select the vSAN datastore, the wizard verifies compatibility, and we click Finish to complete the mount.

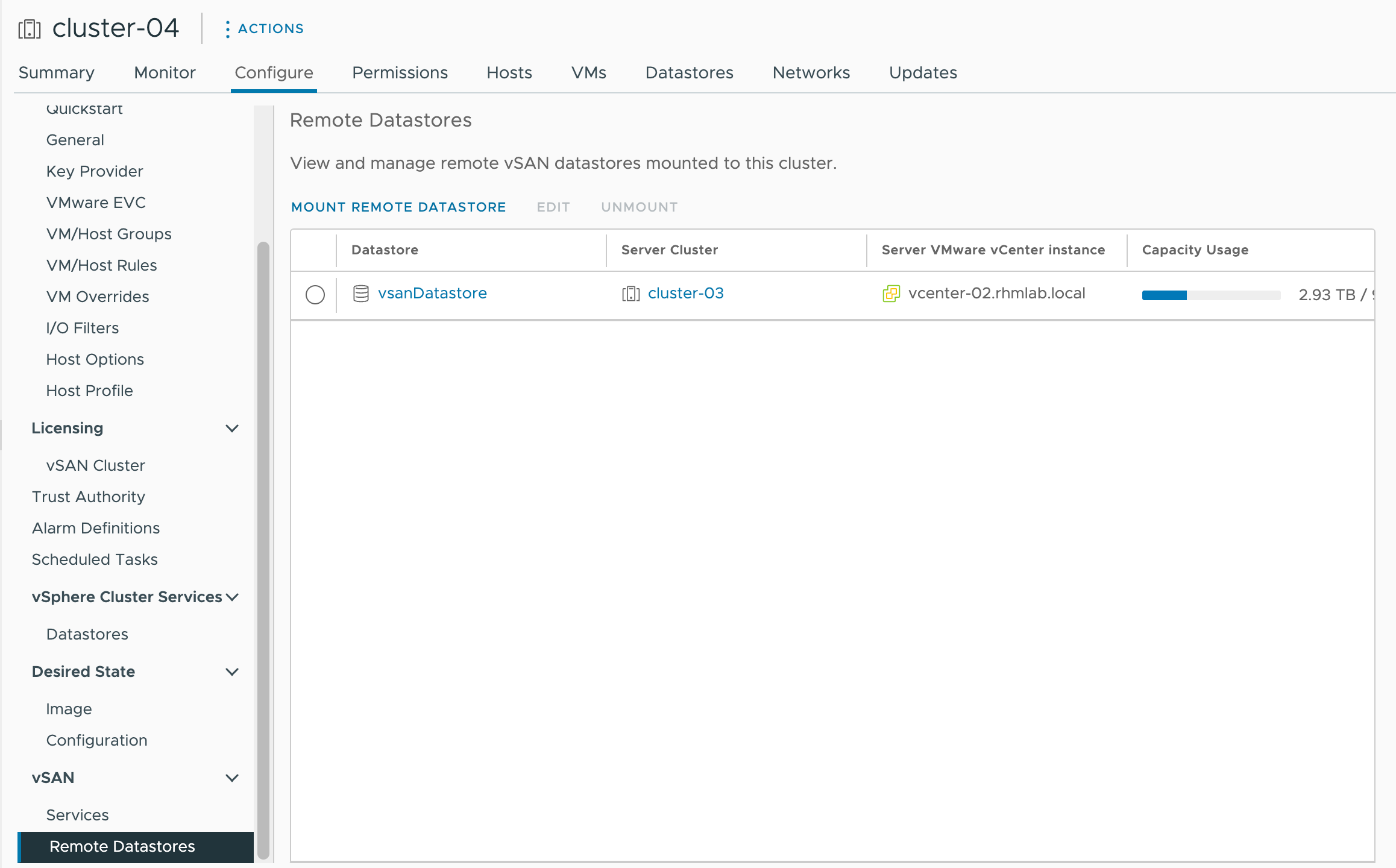

Once the compatibility check has passed and the wizard has finished, the vSAN datastore is mounted.

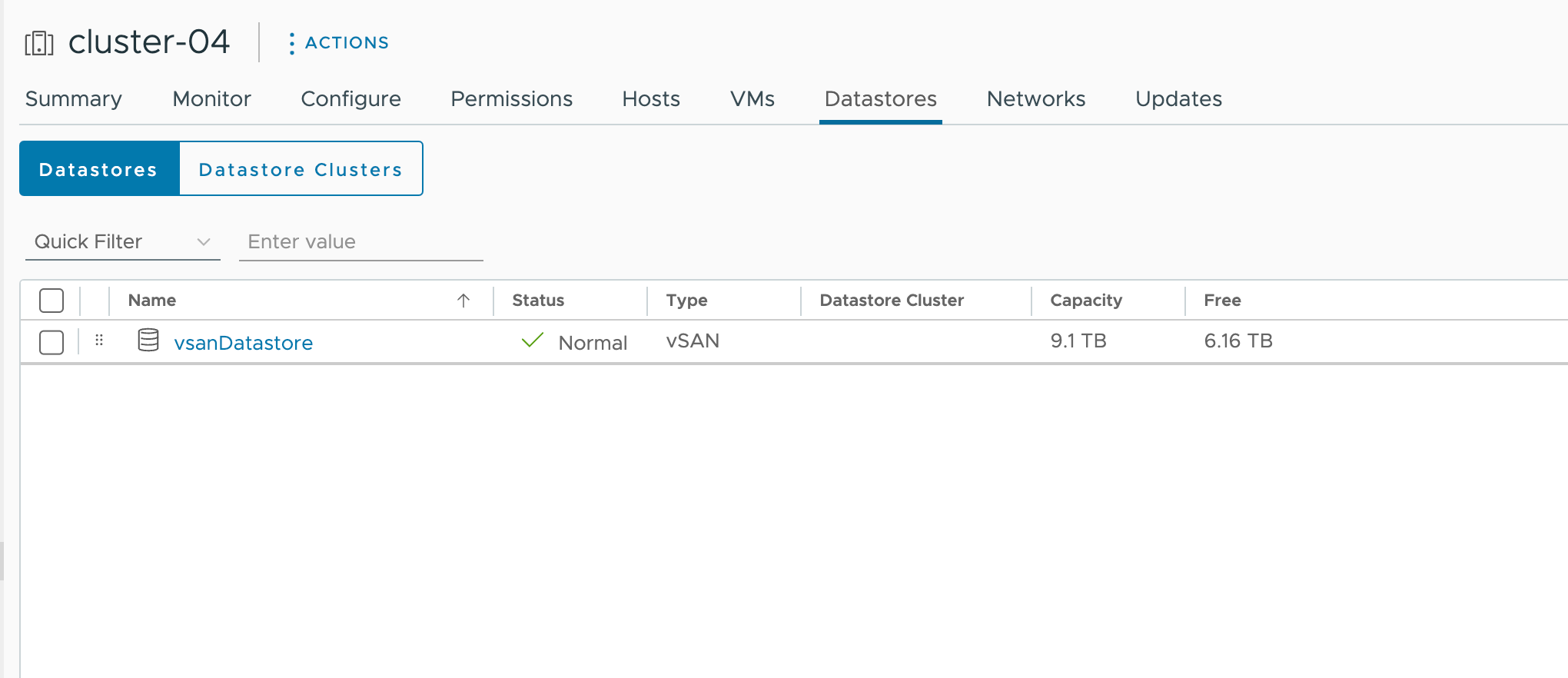

We can verify this by checking the available datastores for the cluster.

Deploy VM

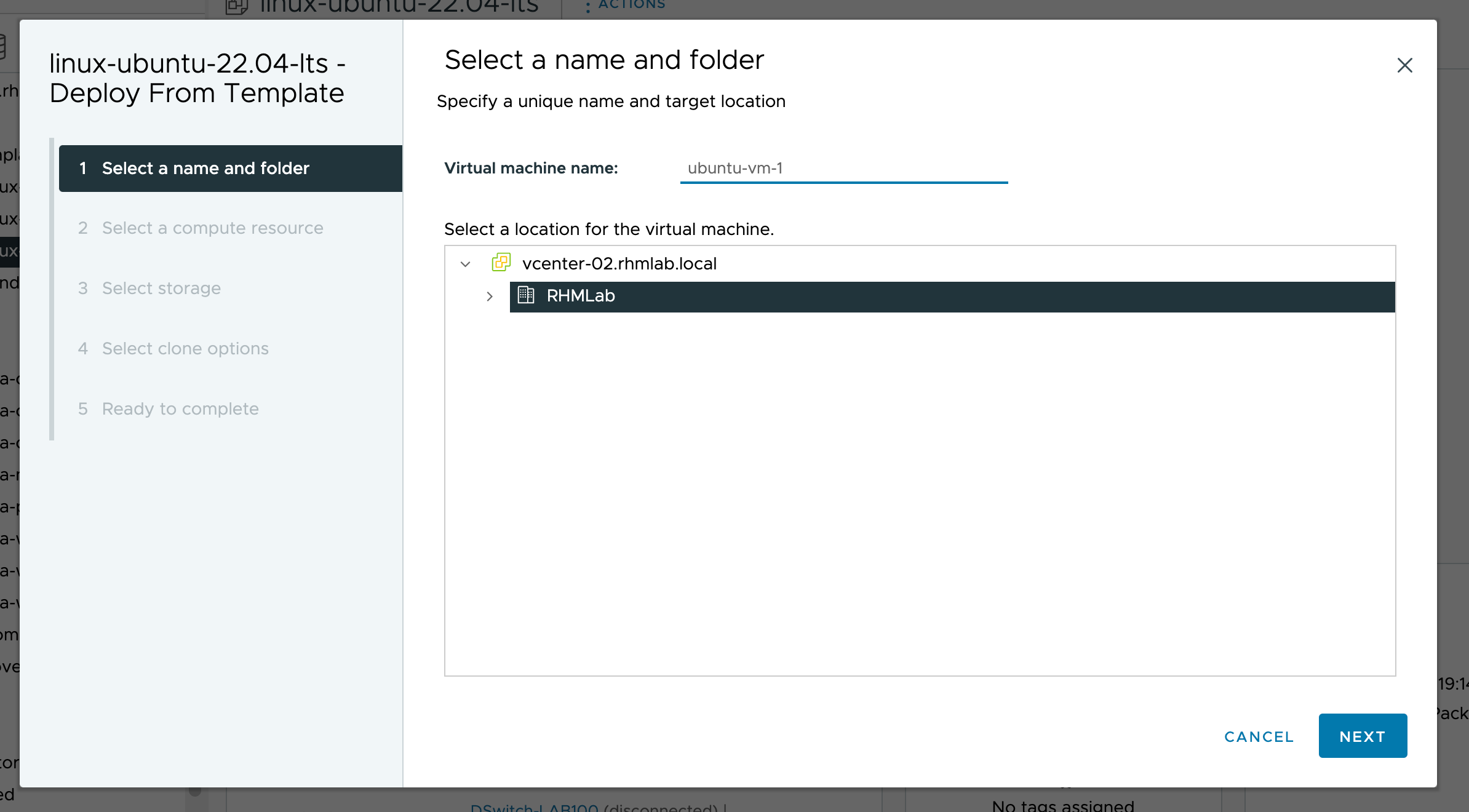

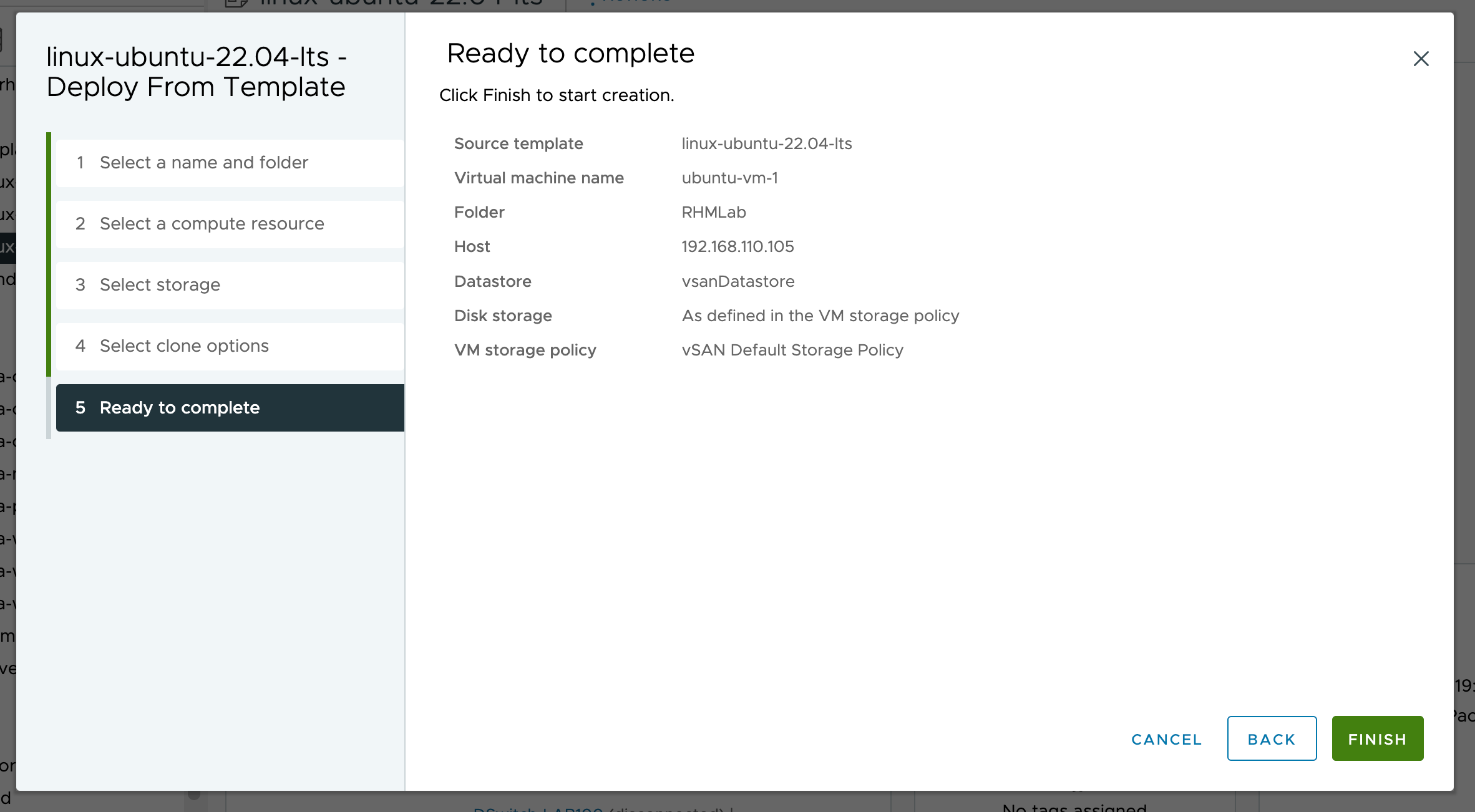

With storage available to us let's try to deploy a VM from an existing Ubuntu template.

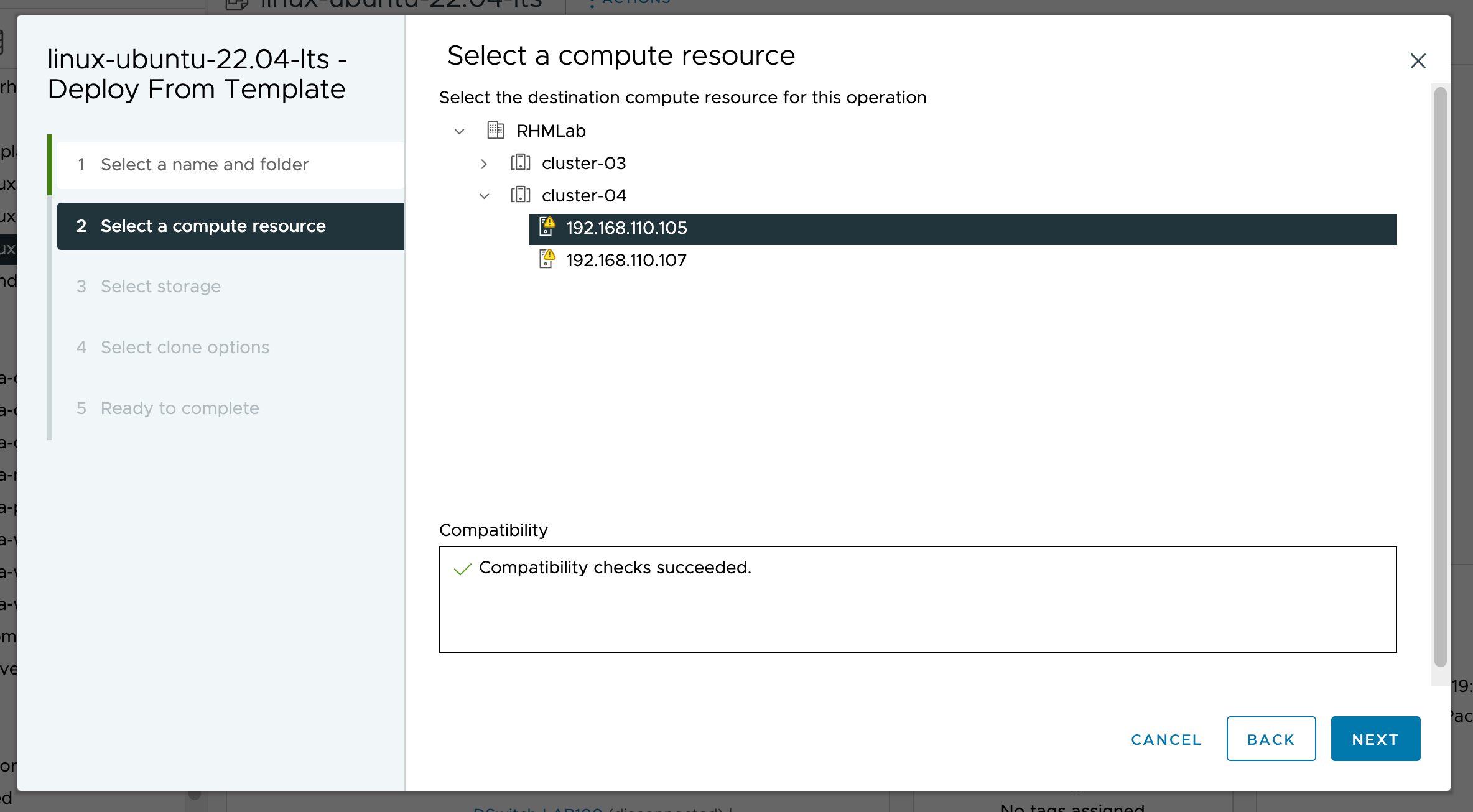

We'll select our new cluster as the compute resource.

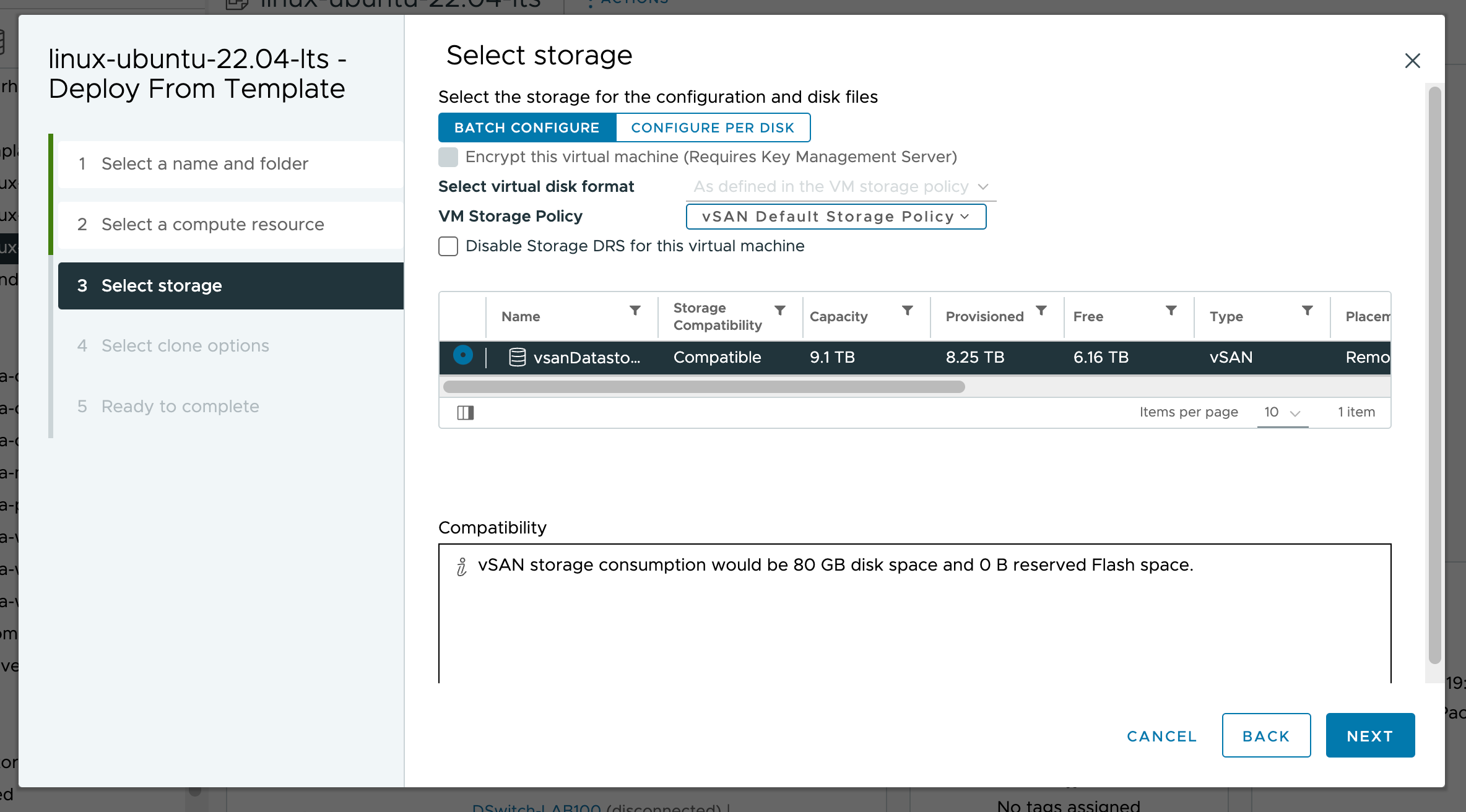

For storage, we can now select the remote vSAN datastore.

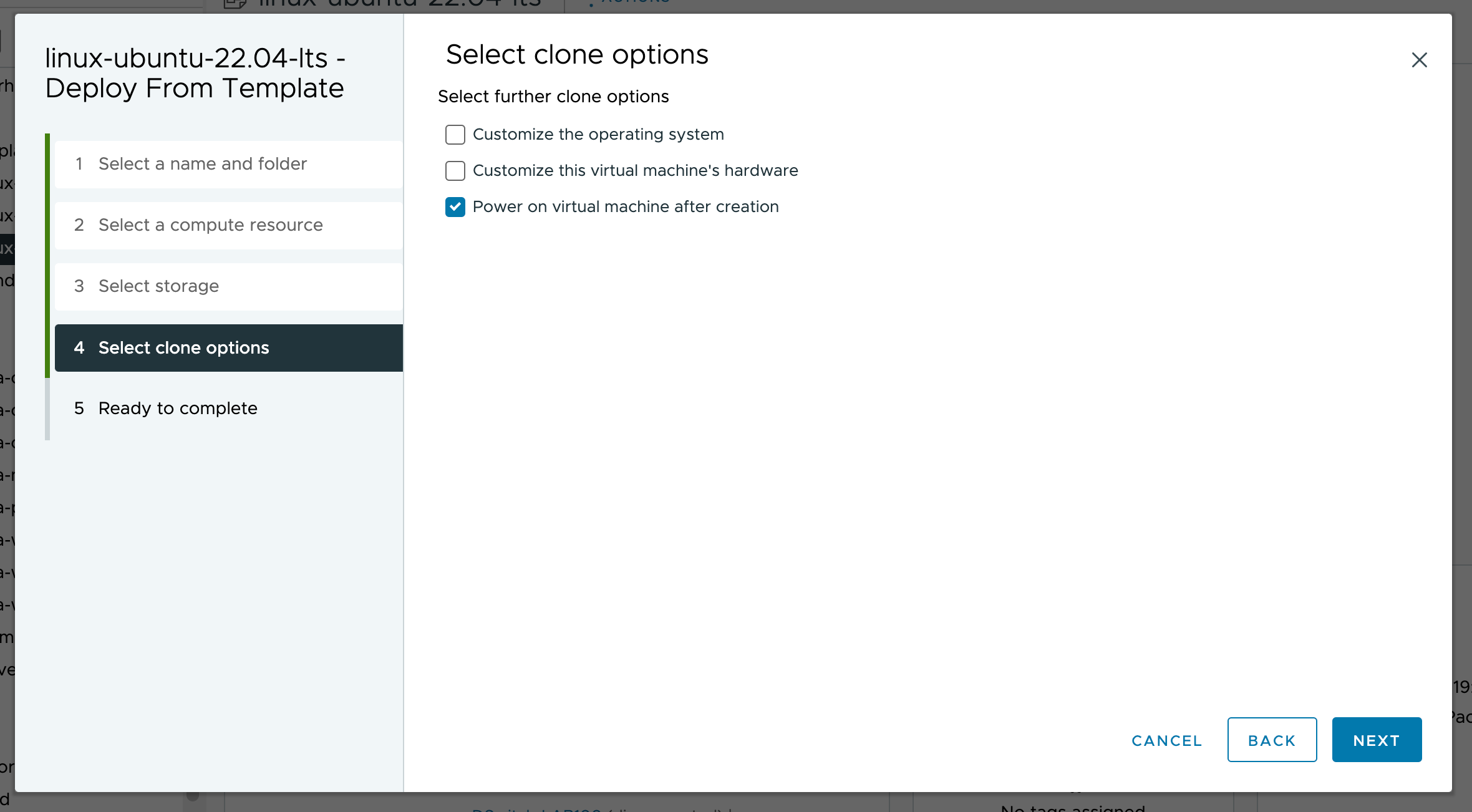

We'll choose to power on the VM after creation.

The wizard is complete and we can deploy our VM.

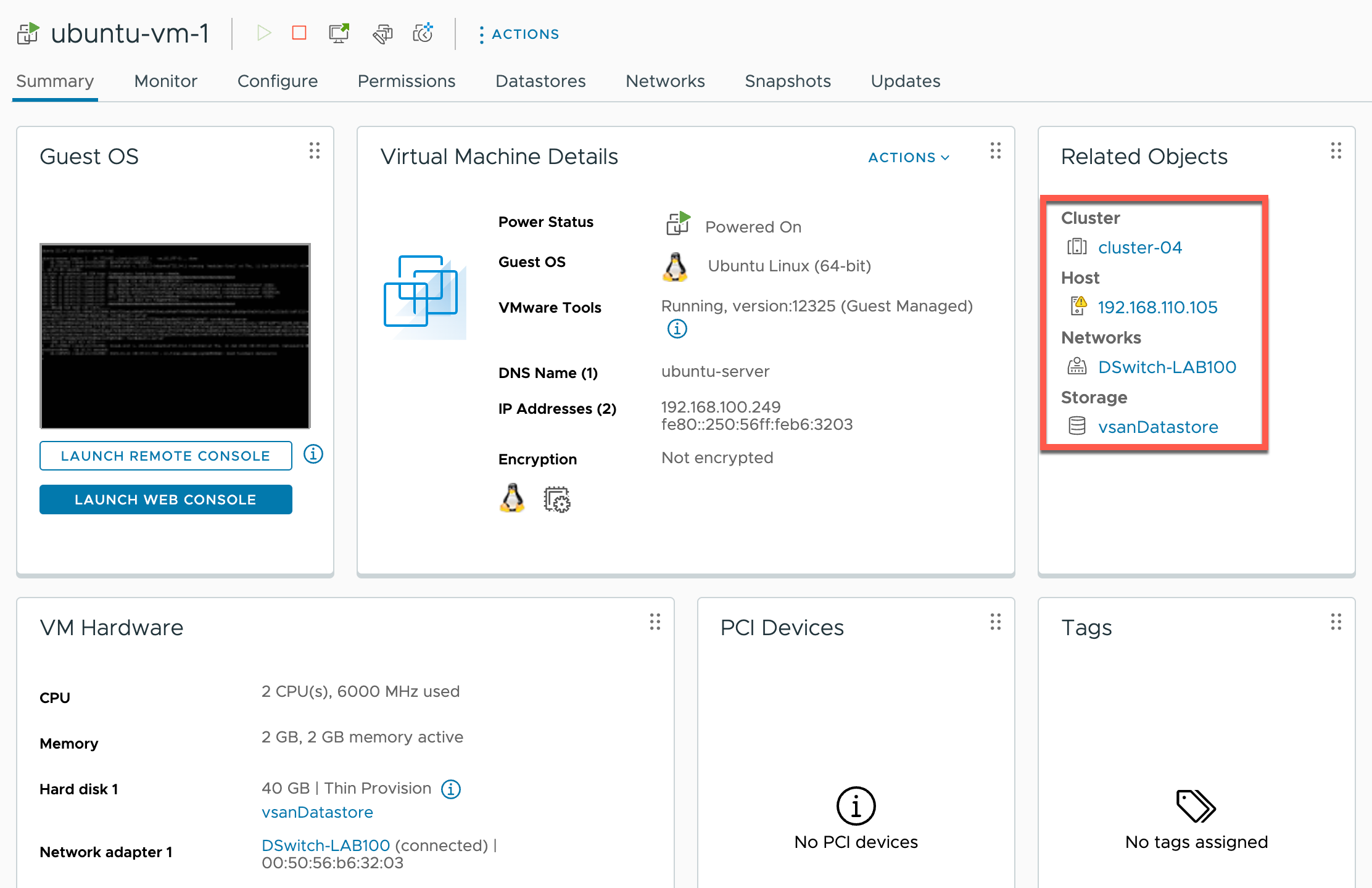

Our VM has been deployed, and we can verify that it runs on our new cluster and on the remote datastore.

Migrate VM

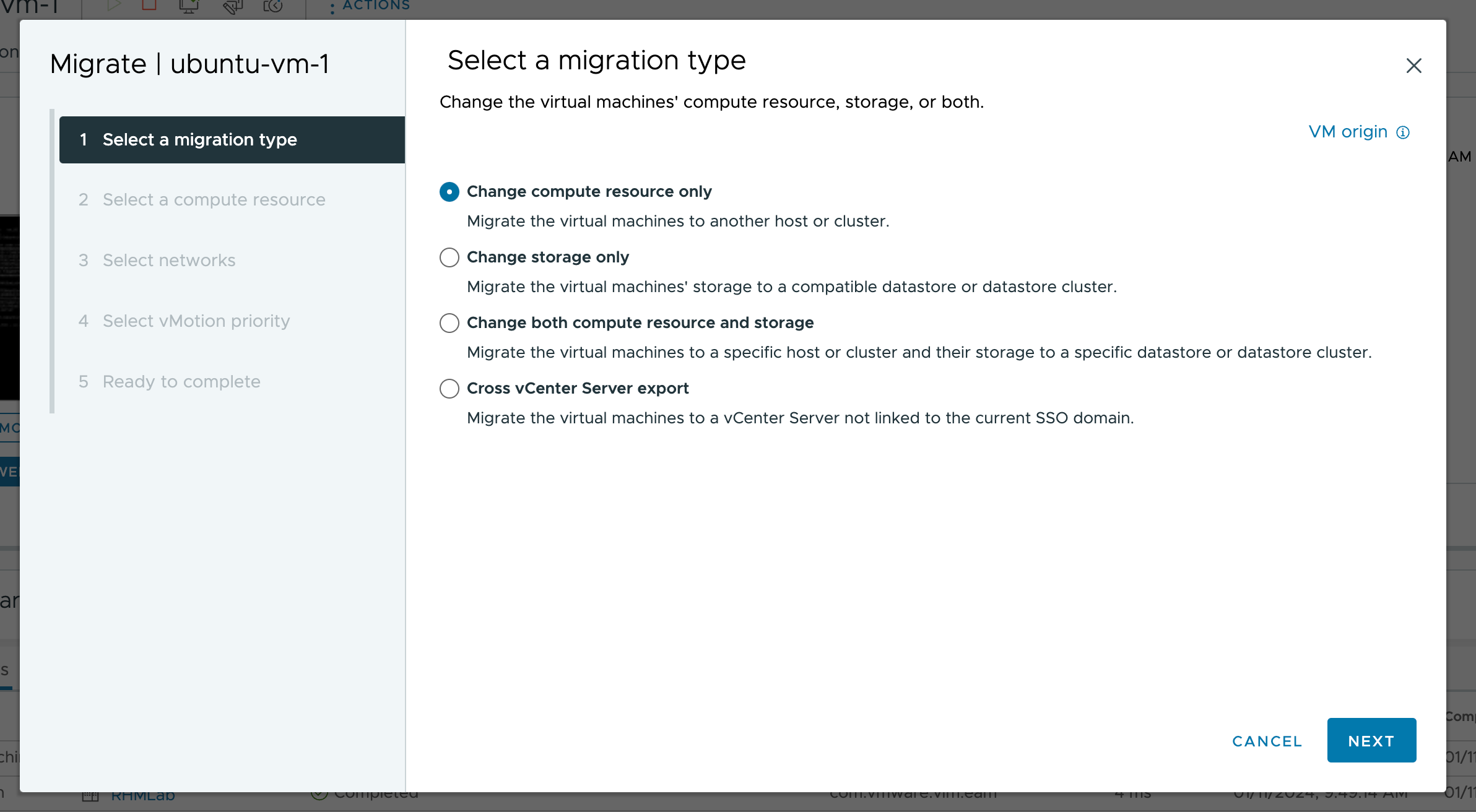

Another benefit of using a remote datastore like this is that the two clusters now share storage, which makes vMotion between them easy. We do not have to move the storage, so we can select "Change compute resource only".
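The reasoning behind the compute-only option boils down to a subset test: if every datastore holding the VM's files is also mounted on the target cluster, nothing on disk needs to move. The sketch below expresses just that condition. The function name and datastore names are hypothetical, and it deliberately ignores the other vMotion requirements (networking, CPU compatibility and so on).

```python
def can_change_compute_only(vm_datastores, target_cluster_datastores):
    """True if every datastore holding the VM's files is mounted on the target cluster.

    In that case the storage does not have to move, so a compute-only
    vMotion ("Change compute resource only") is enough.
    """
    return set(vm_datastores) <= set(target_cluster_datastores)


if __name__ == "__main__":
    # Hypothetical names: after the remote mount, both clusters see the same vSAN datastore.
    vm = {"vsanDatastore"}
    print(can_change_compute_only(vm, {"vsanDatastore"}))  # → True
    print(can_change_compute_only(vm, {"local-ds"}))       # → False
```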

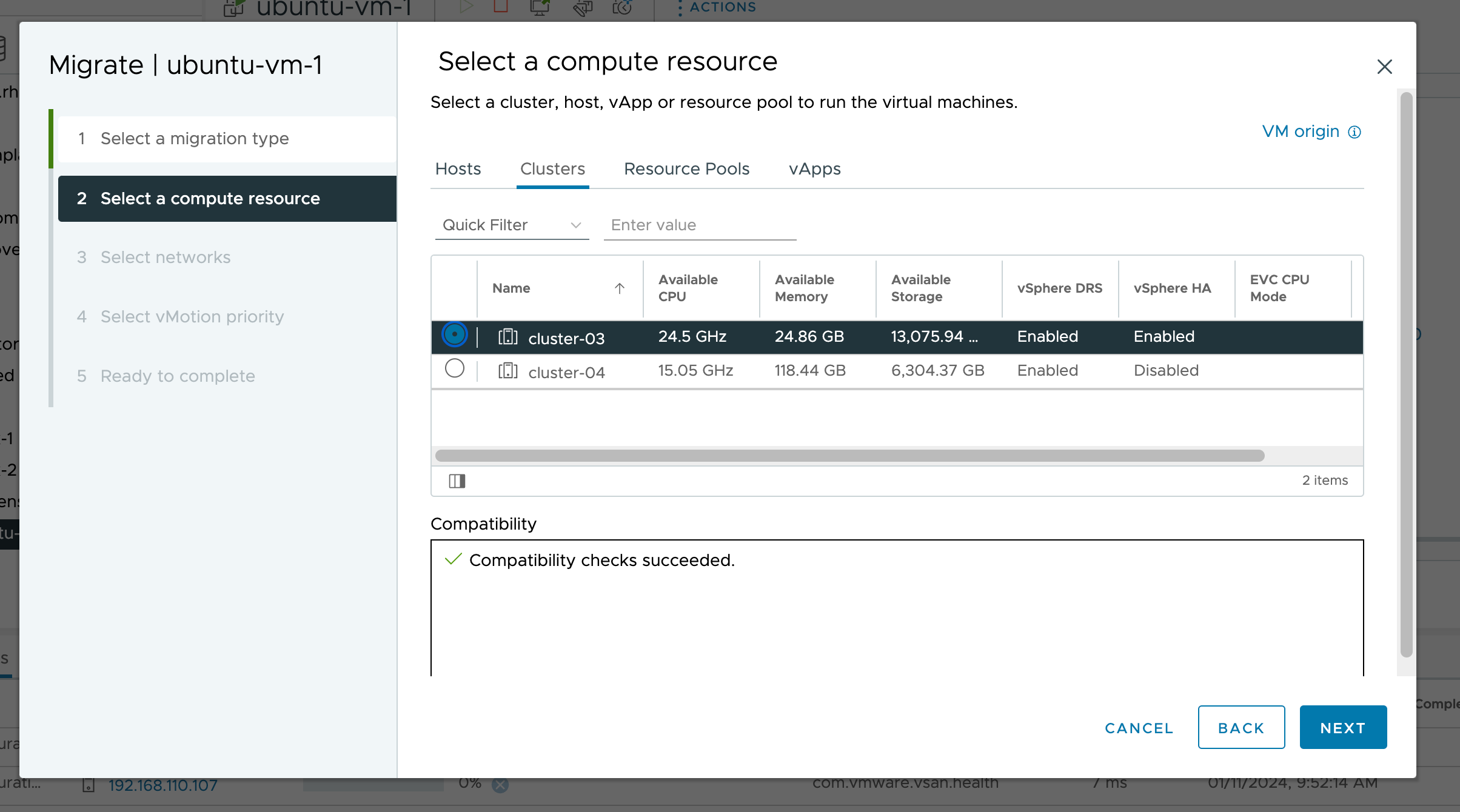

We'll select the other cluster

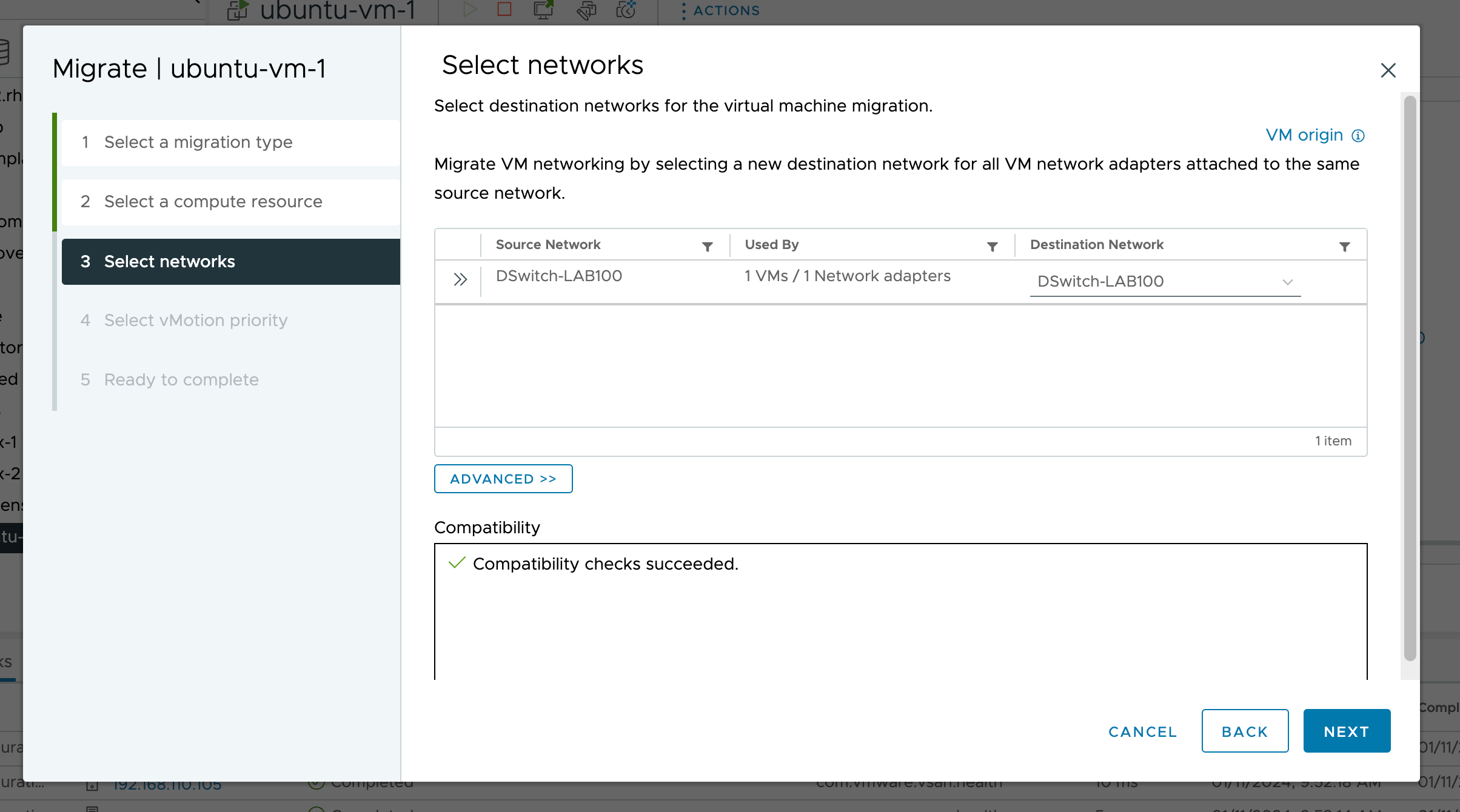

Select the network settings

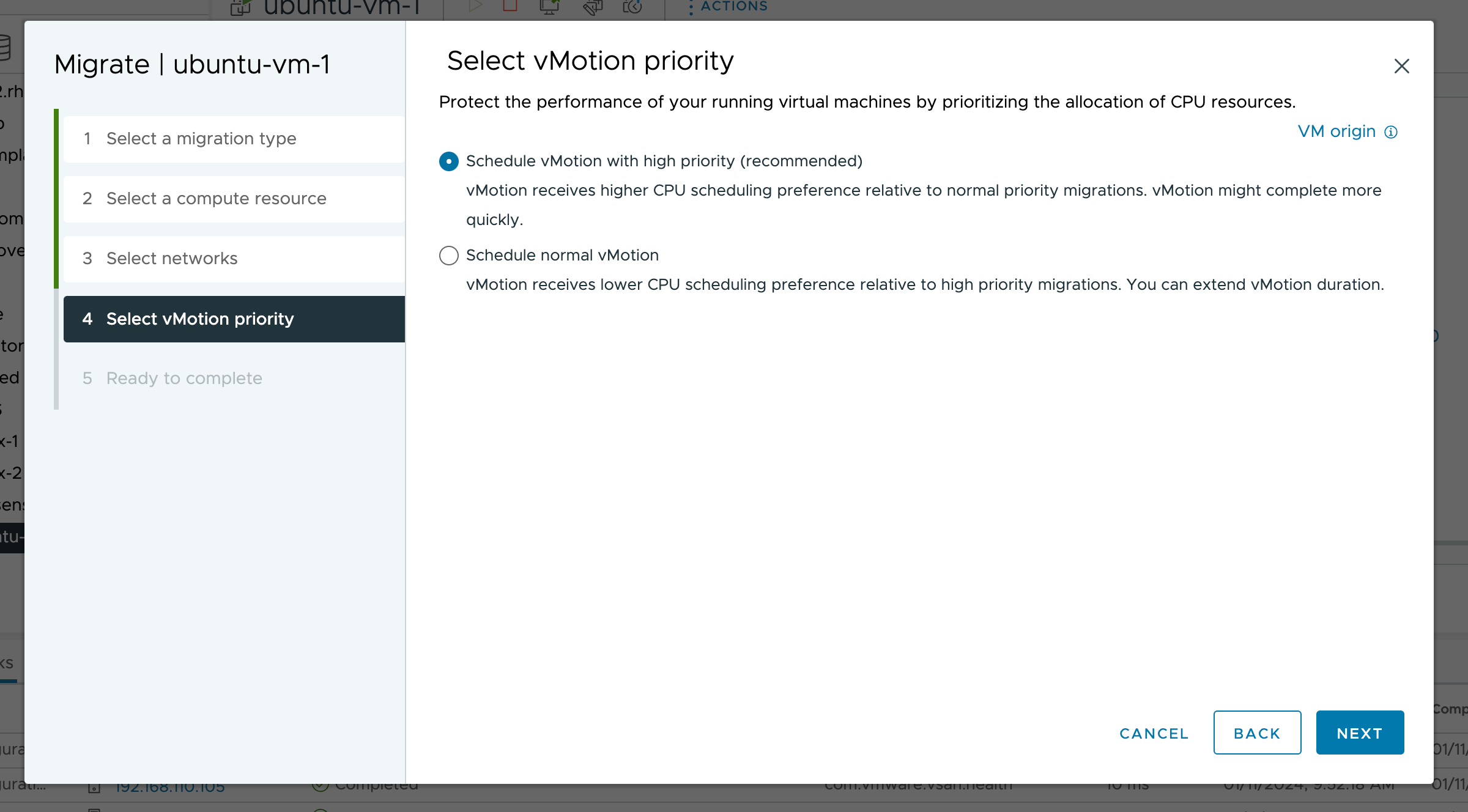

Set vMotion priority

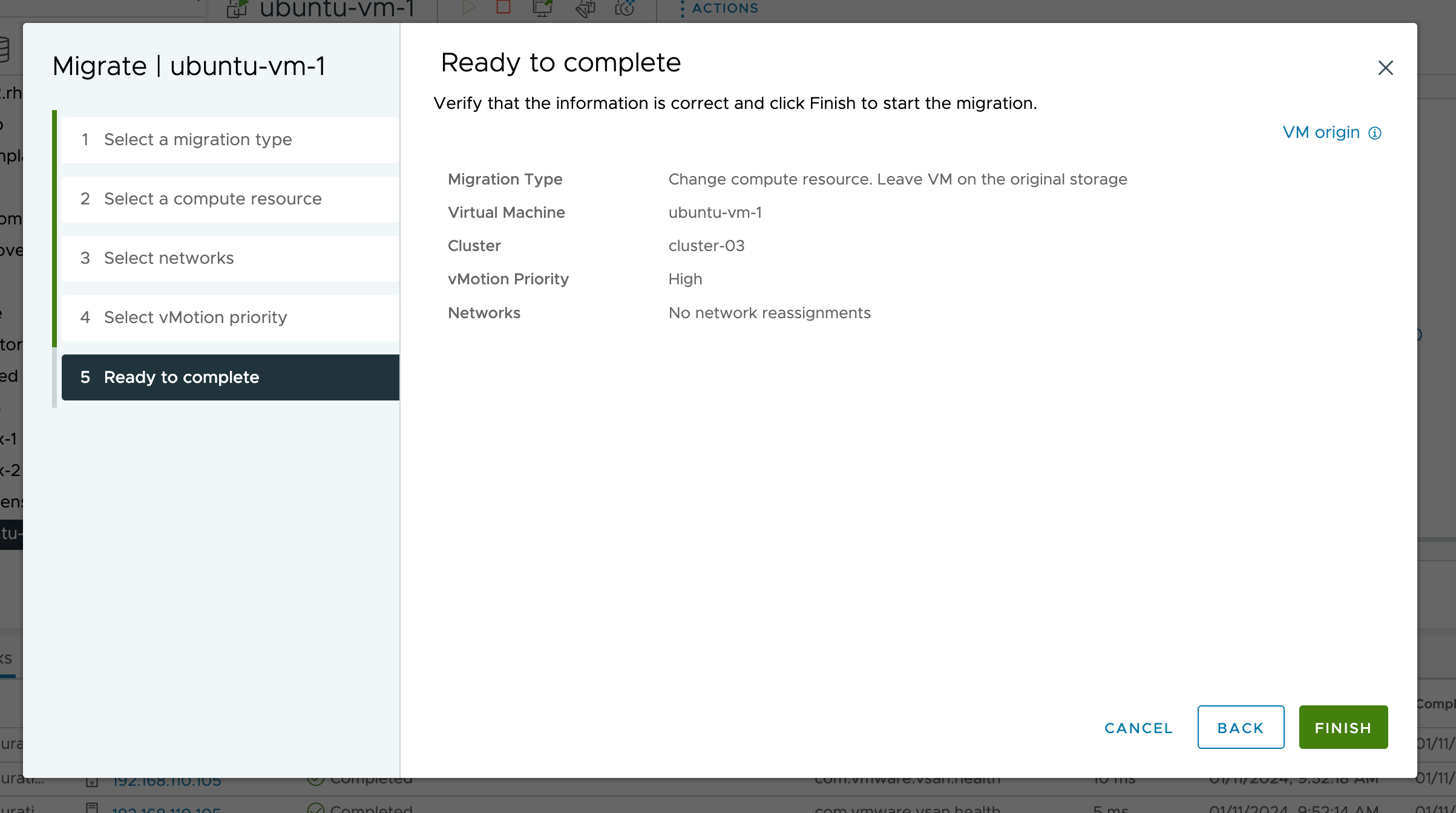

Review details

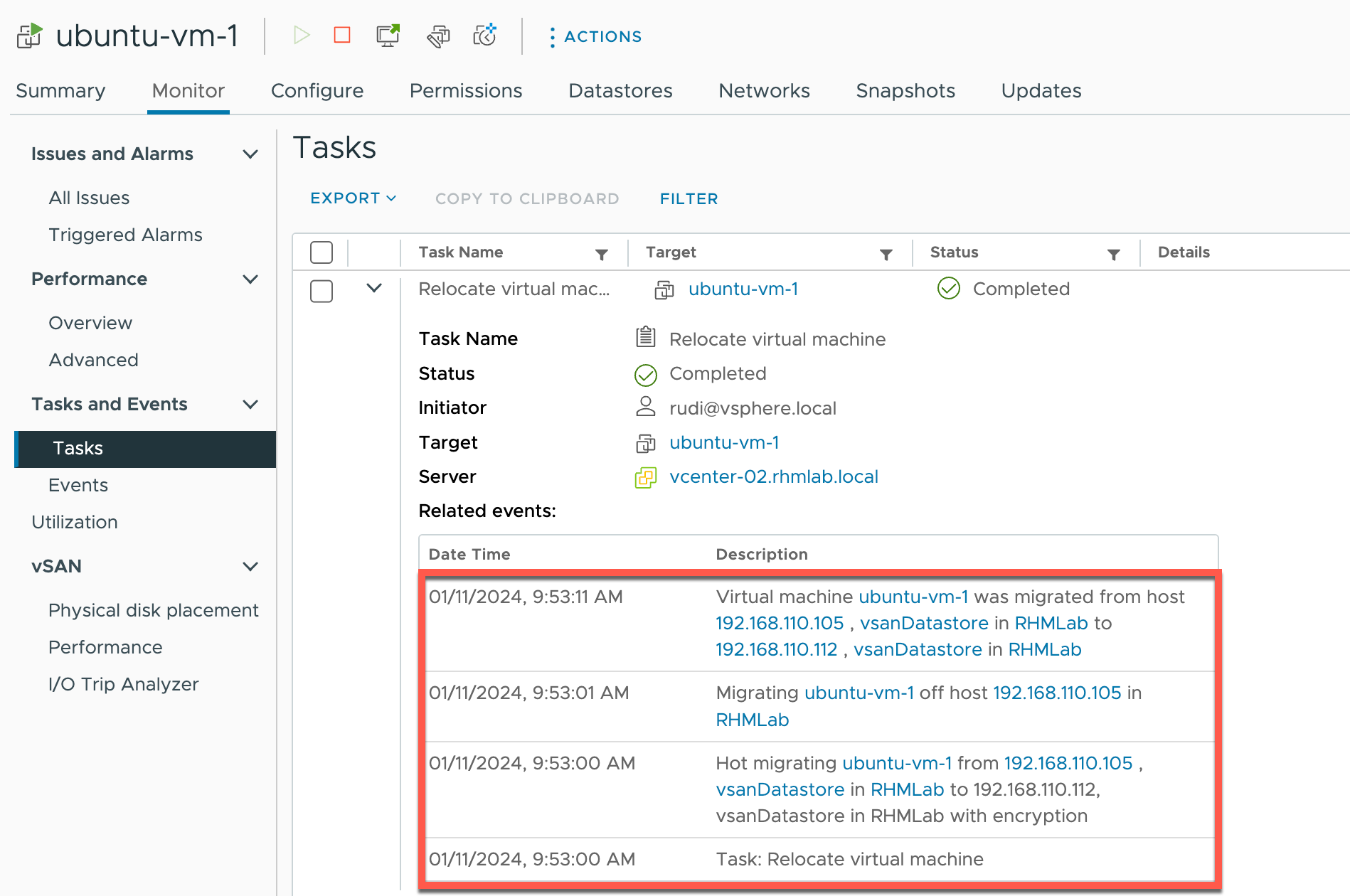

VM migrated in just a few seconds

Eleven seconds for a compute-only migration might seem slow, but note that this was done on a home lab built on Intel NUCs, with vMotion running over 1 Gb/s network adapters.
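Why the link speed matters becomes clearer with a back-of-the-envelope calculation. Even a compute-only vMotion still copies the VM's memory across the vMotion network, so the link rate sets a lower bound on the migration time. The sketch below assumes a hypothetical VM with 1 GiB of memory (the VM's actual size is not stated here) and ignores protocol overhead, repeated pre-copy passes and switchover time, so real migrations take longer than these figures.

```python
def min_transfer_seconds(memory_bytes, link_bits_per_second):
    """Lower bound for copying memory_bytes once over a link at full line rate.

    Ignores protocol overhead, iterative pre-copy passes and switchover time,
    so real vMotion durations will be longer.
    """
    return memory_bytes * 8 / link_bits_per_second


if __name__ == "__main__":
    one_gib = 2**30  # hypothetical VM memory size
    print(round(min_transfer_seconds(one_gib, 1e9), 1))   # 1 Gb/s  → 8.6
    print(round(min_transfer_seconds(one_gib, 10e9), 2))  # 10 Gb/s → 0.86
```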

Unmounting remote datastore

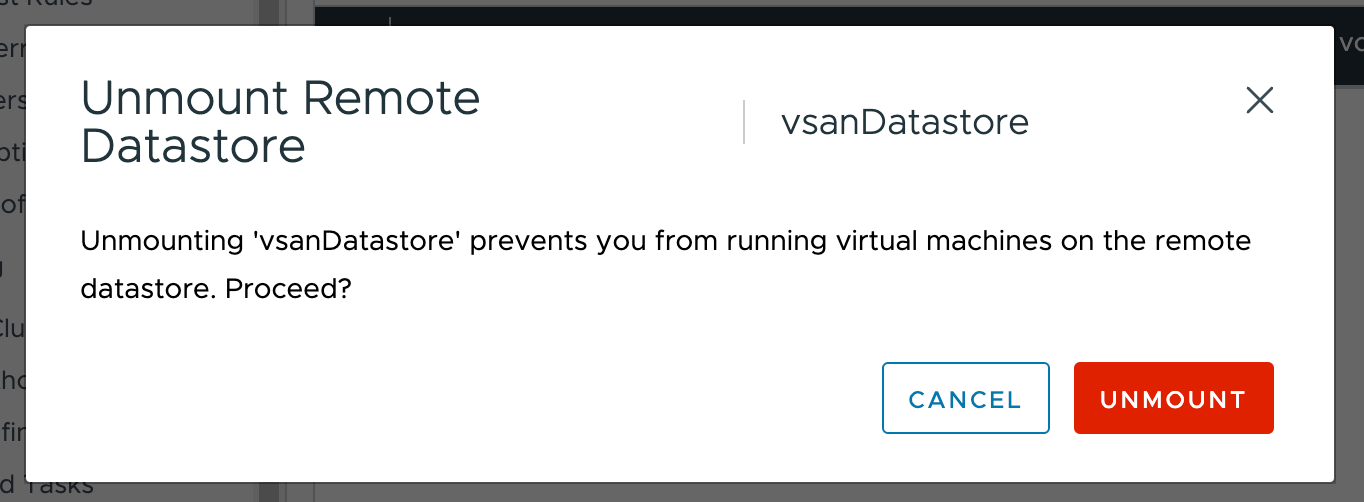

Unmounting a remote datastore is as easy as going to Configure->vSAN->Remote Datastores, selecting the mounted datastore, and clicking Unmount. After we accept the unmount warning, the datastore is unmounted.

Note that there can be no running VMs on that datastore on the cluster you are unmounting from.
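The unmount rule can be expressed as a small pre-check that lists the VMs standing in the way. This is a minimal sketch over a hypothetical inventory: `Vm`, `vms_blocking_unmount` and all VM and datastore names are made up for illustration. It only flags VMs that are running, on the cluster being unmounted, with files on that datastore; VMs on the server cluster are not affected.

```python
from dataclasses import dataclass


@dataclass
class Vm:
    # Hypothetical, simplified VM record; not a vSphere API object.
    name: str
    cluster: str
    datastores: frozenset
    powered_on: bool


def vms_blocking_unmount(vms, datastore, cluster):
    """Names of running VMs in `cluster` with files on `datastore`."""
    return [
        vm.name
        for vm in vms
        if vm.cluster == cluster and datastore in vm.datastores and vm.powered_on
    ]


if __name__ == "__main__":
    # Hypothetical inventory for illustration only.
    inventory = [
        Vm("ubuntu-01", "compute-cluster", frozenset({"vsanDatastore"}), powered_on=True),
        Vm("ubuntu-02", "cluster-03", frozenset({"vsanDatastore"}), powered_on=True),
    ]
    print(vms_blocking_unmount(inventory, "vsanDatastore", "compute-cluster"))  # → ['ubuntu-01']
```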

Summary

In this post we've seen how easy it is to enable HCI Mesh and use vSAN storage on remote clusters. With HCI Mesh we can quickly make use of existing vSAN storage on remote clusters and manage it with the same Storage Policy Based Management (SPBM).

Since HCI Mesh uses the vSAN network, we do not have to deal with iSCSI, FC, or NFS mounts. It also enables compute-only vMotion between the clusters.

Thanks for reading, and reach out if you have any questions or comments.