Change IP addresses on a vRealize Log Insight cluster

This post goes through the steps I took to change the IP addresses on a vRealize Log Insight (vRLI) cluster.

Environment setup

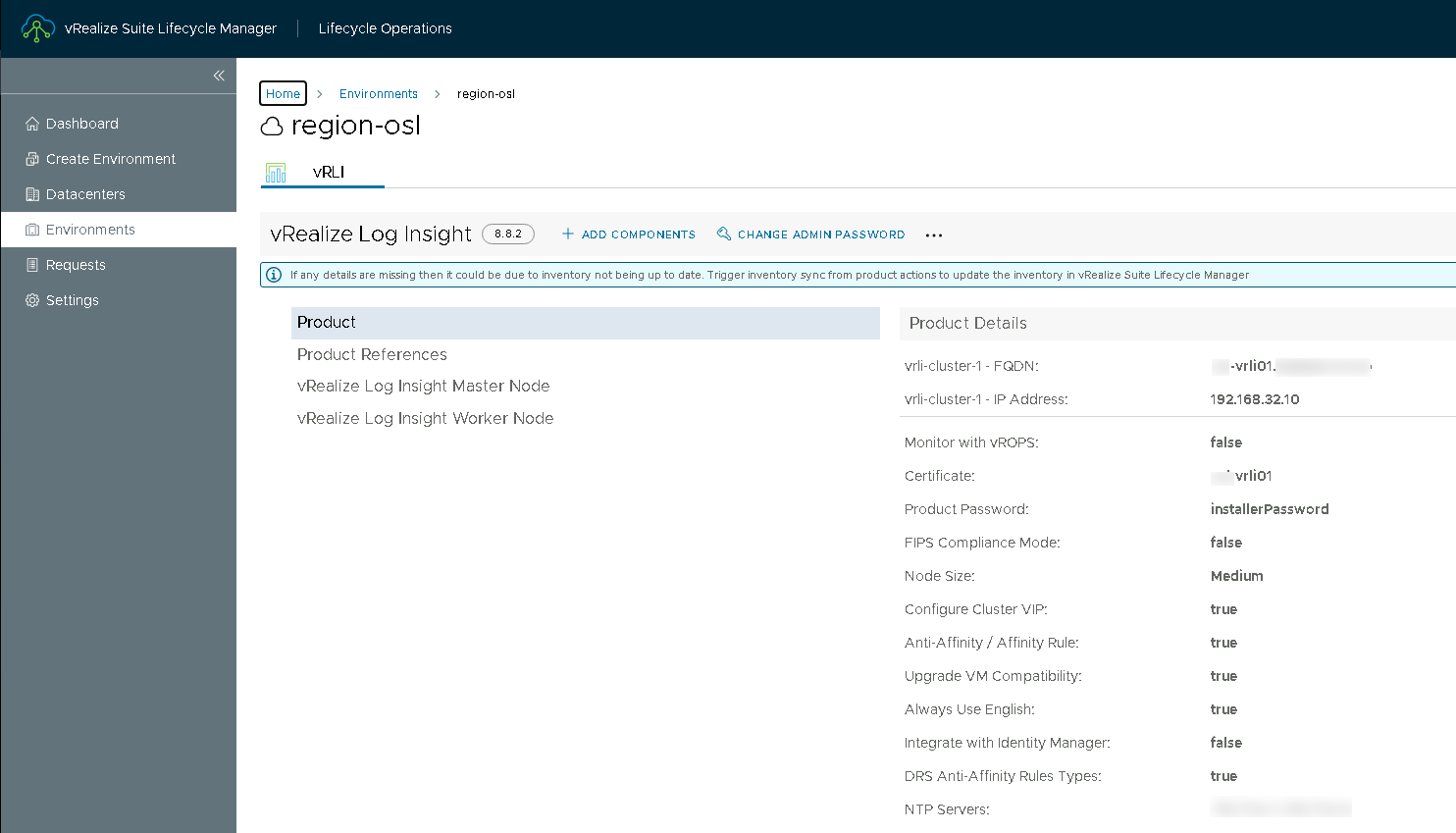

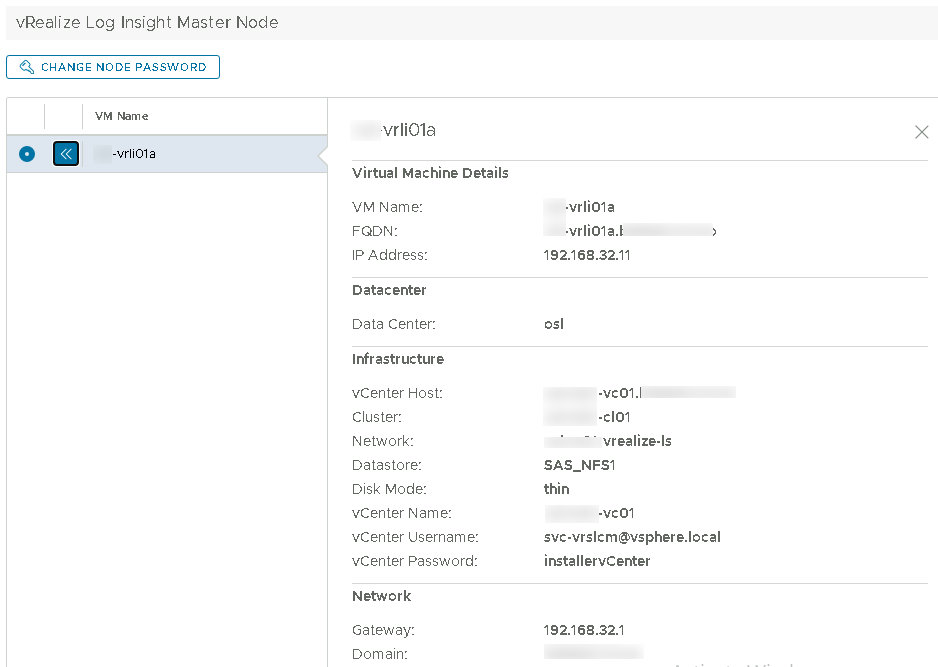

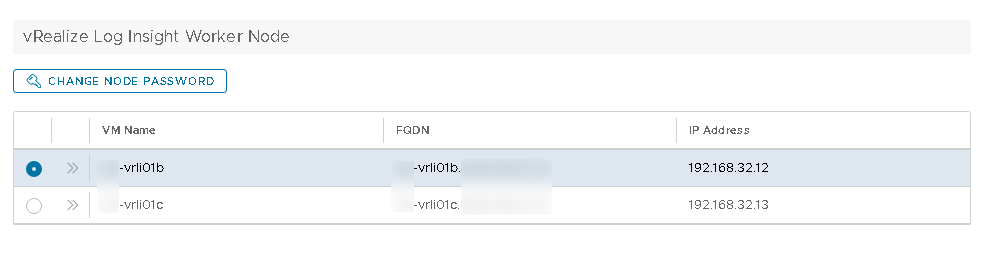

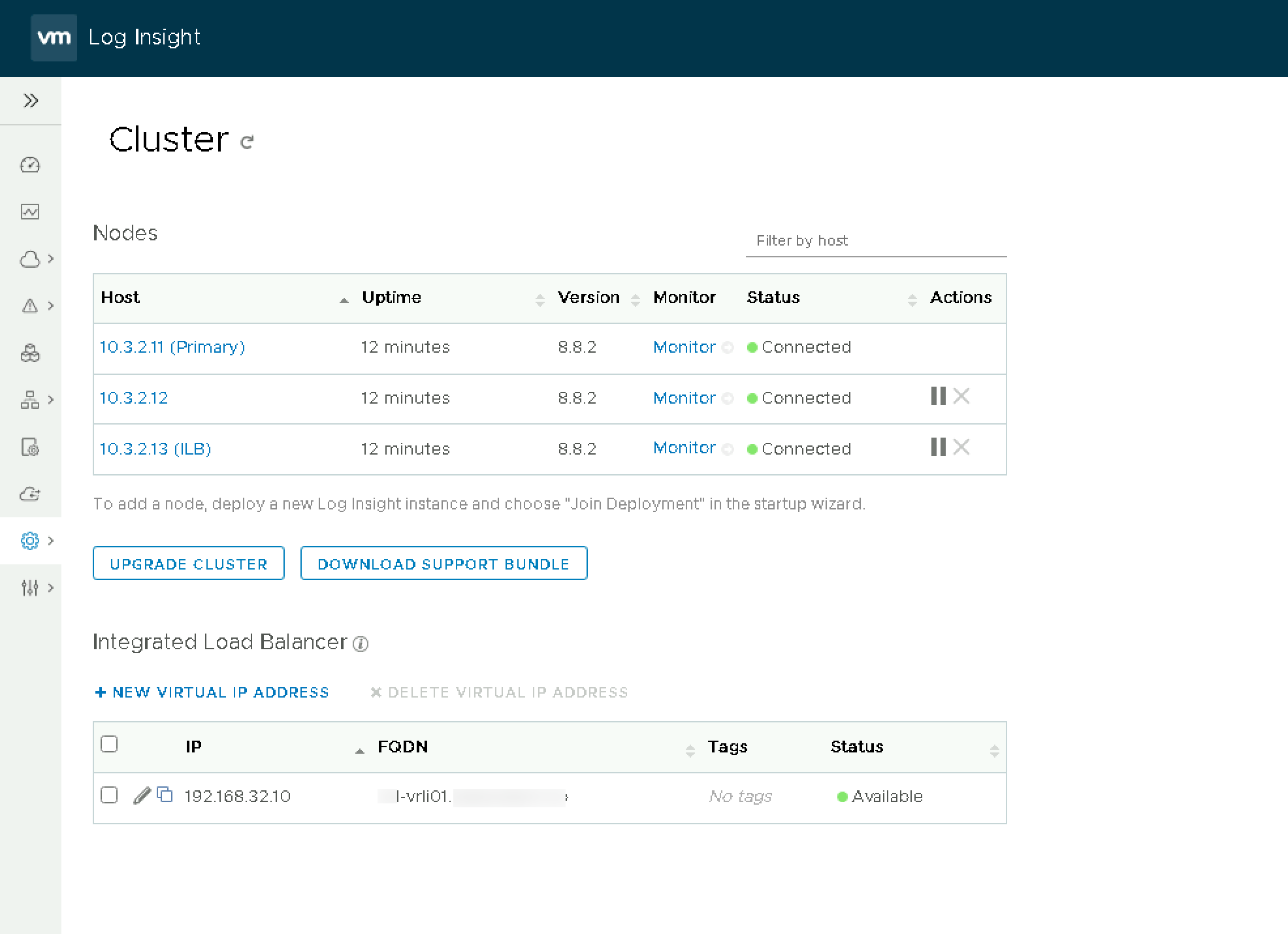

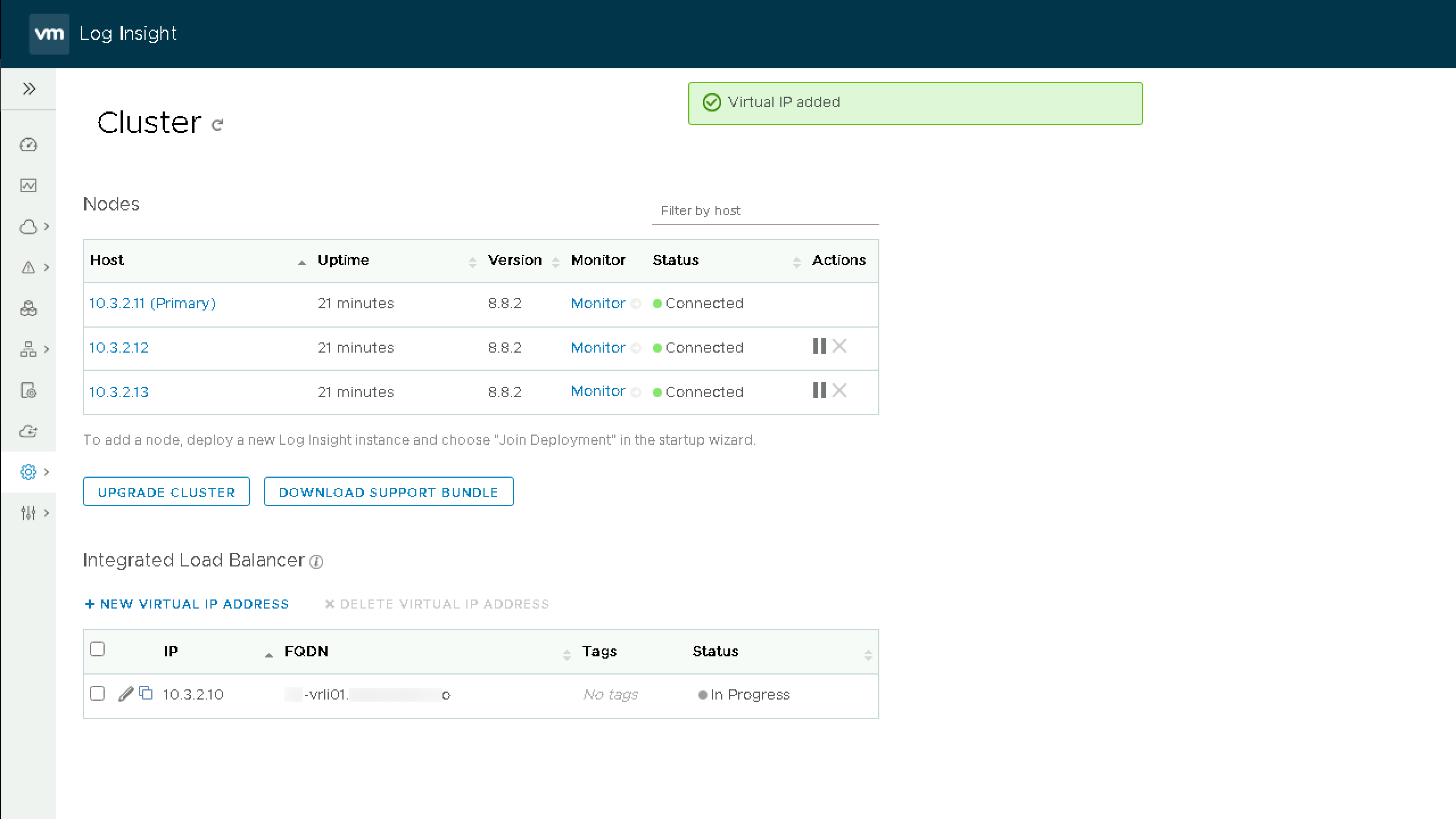

The vRLI cluster runs version 8.8.2 and was deployed through vRealize Suite Lifecycle Manager (vRSLCM). It consists of three nodes, and a VIP is configured for the Integrated Load Balancer.

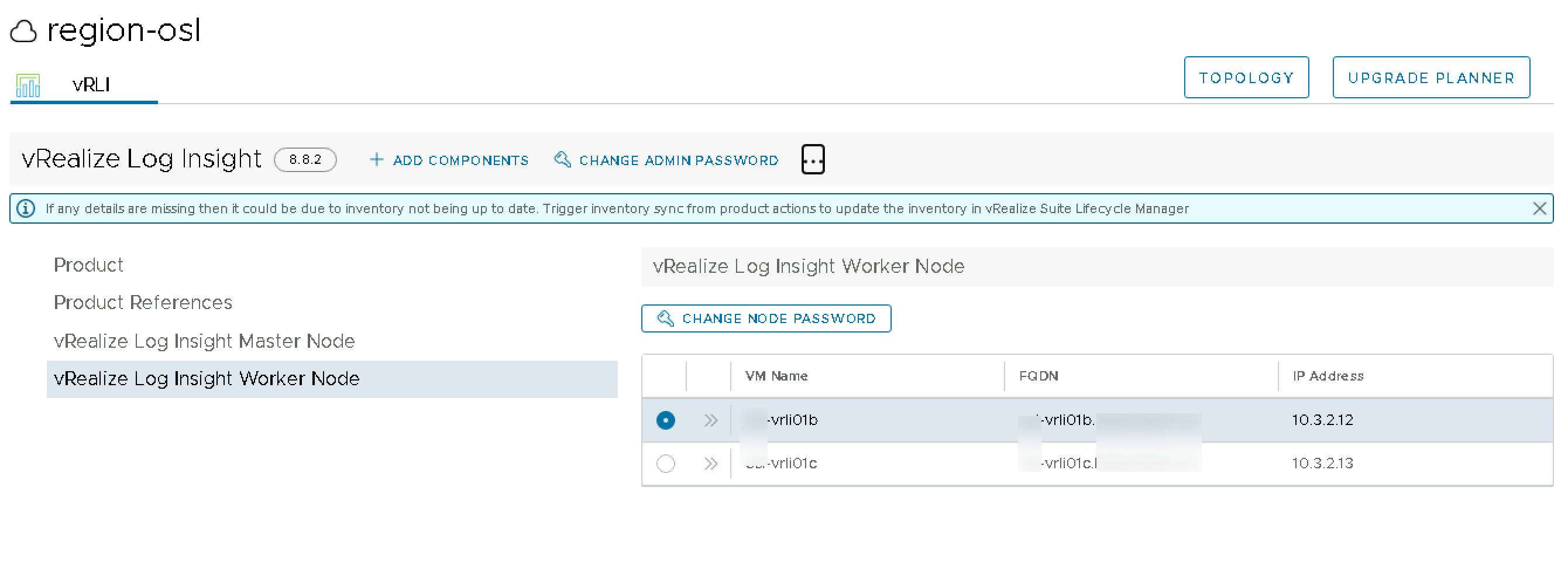

A couple of screenshots from vRSLCM:

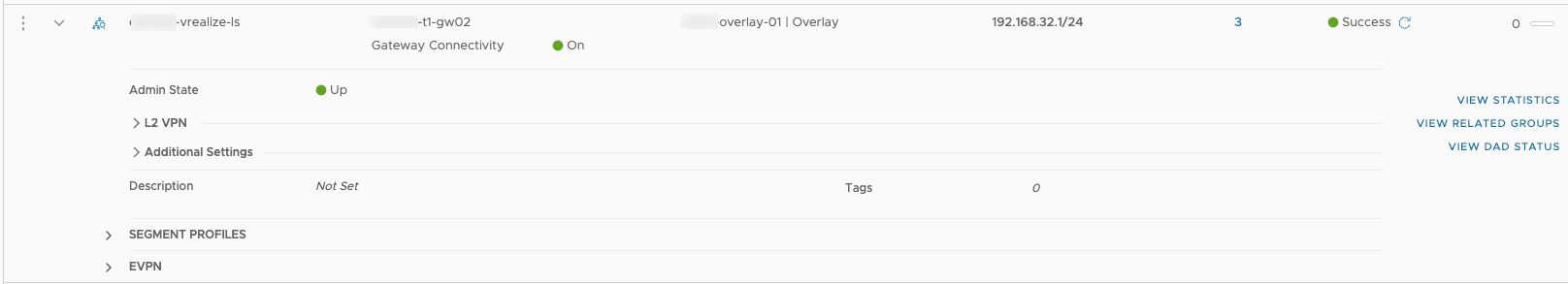

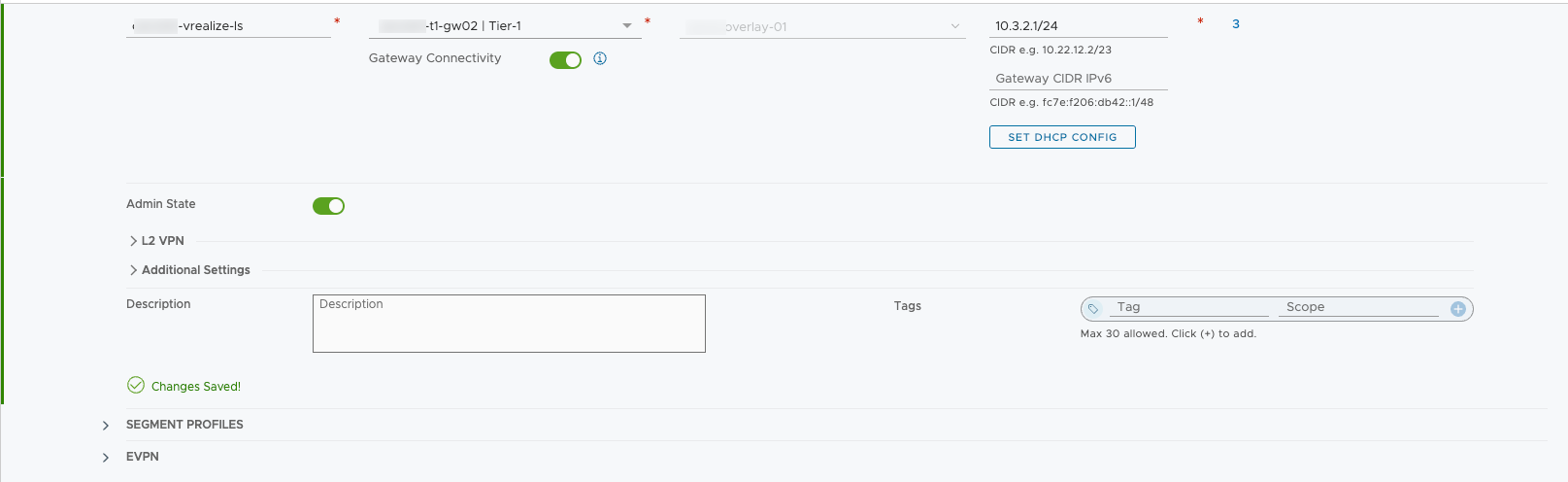

The nodes are connected to an NSX overlay segment attached to a Tier-1 Gateway, which in turn is connected to a Tier-0 Gateway backed by an Edge Cluster.

Step-by-step process for changing IP details

VMware has a KB article describing the necessary steps, and I followed it during this process.

Snapshot

Before making any changes, it is recommended to take a snapshot of the cluster nodes. Note that best practice is to take the snapshot with the cluster shut down, which implies downtime for the cluster.

I took the snapshots manually in vCenter rather than through vRSLCM, since we also have to change the vApp properties in vCenter, which requires the nodes to be powered off.

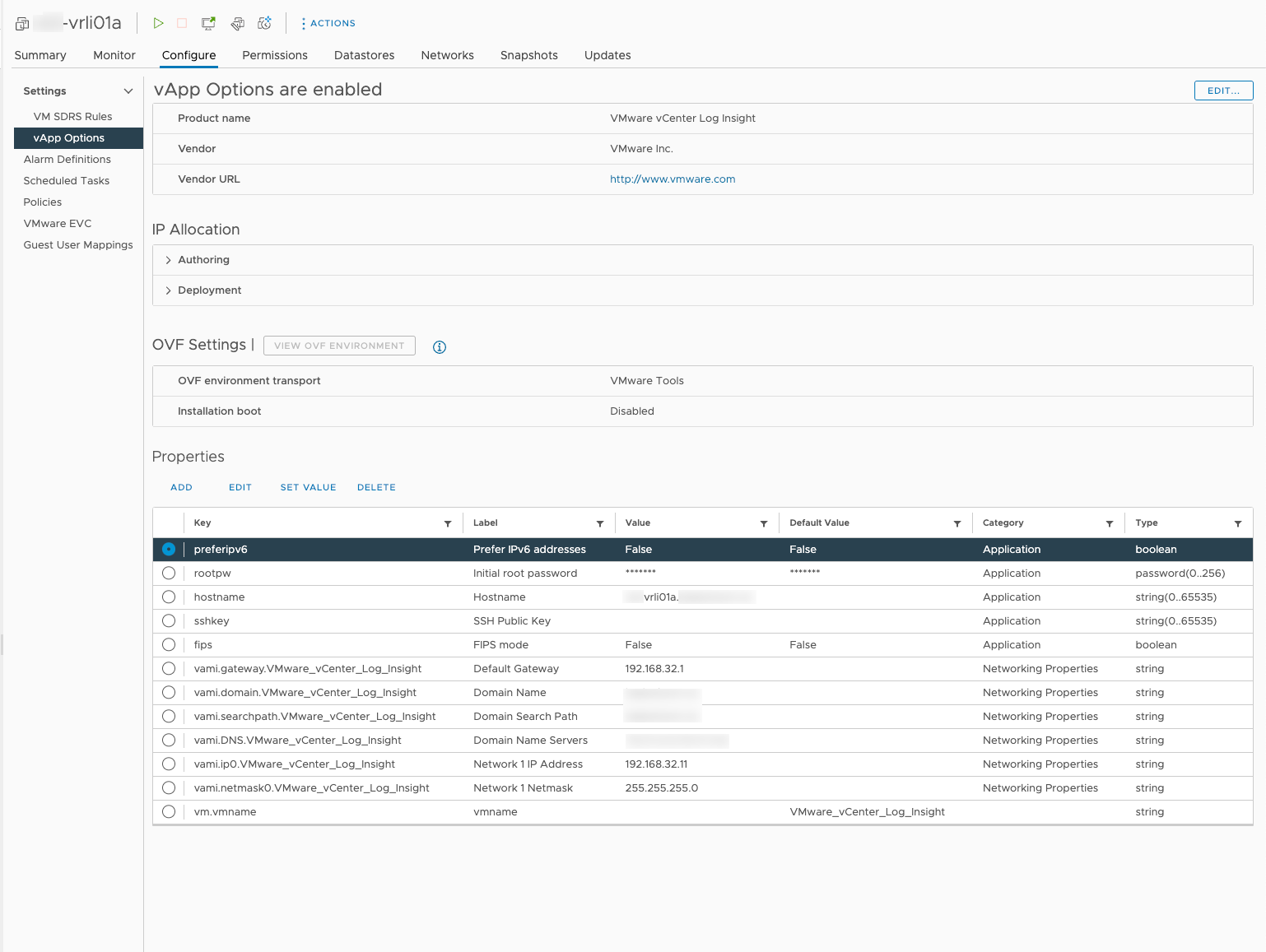

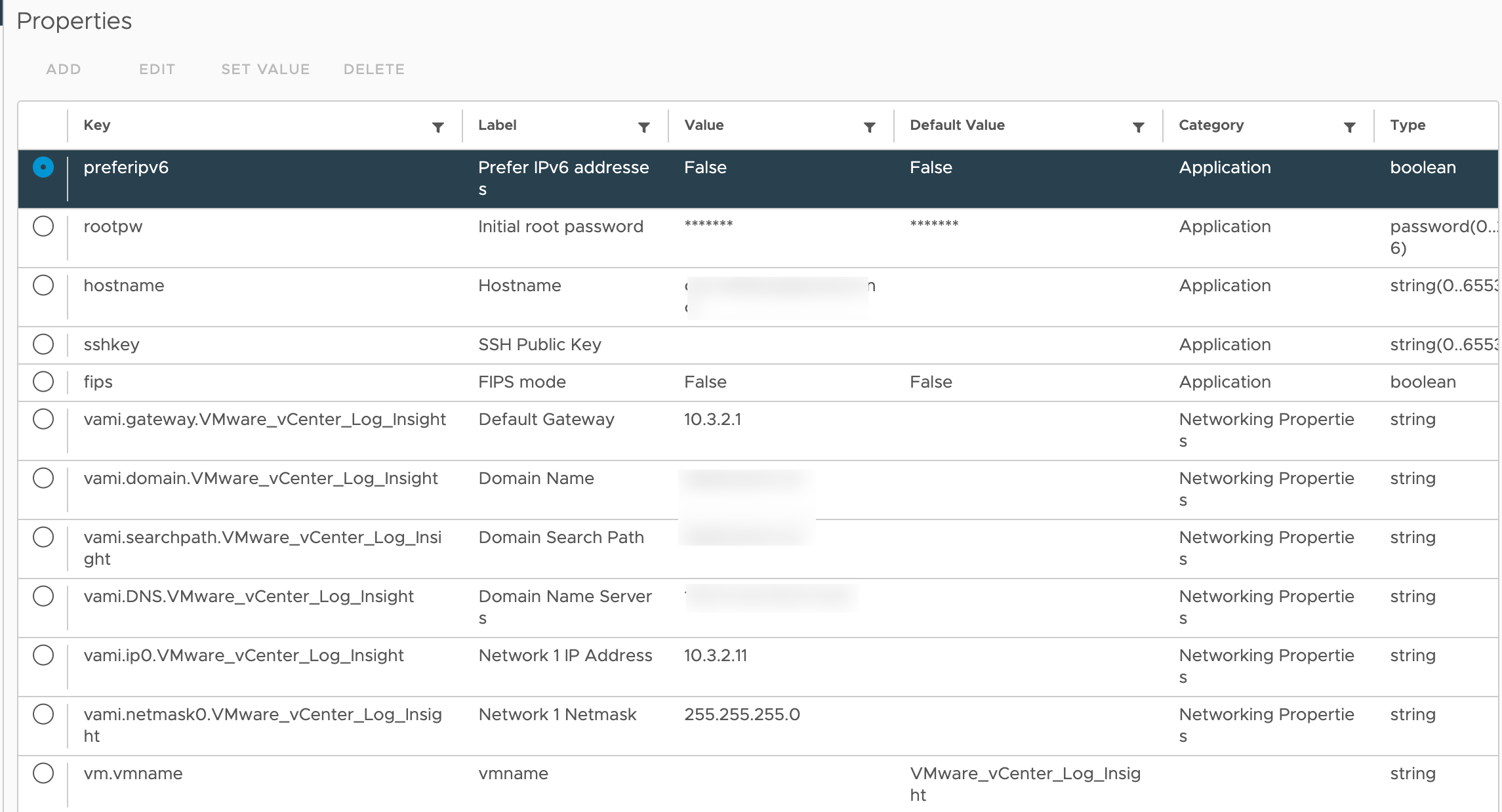

vApp properties

The VMs have their IP details configured in the vApp properties. We need to update these before changing the settings in the OS, otherwise the network configuration will revert to the old values.

Changing the vApp properties must be done while the nodes are powered off.
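The same addresses end up in the vApp properties, the network file, the vRLI config and DNS, so it can help to write the old-to-new mapping down once and sanity-check it before typing it into vCenter. Below is a minimal sketch using Python's standard `ipaddress` module. The node names, addresses, VIP, gateway and /24 subnet are all hypothetical placeholders, not the values from this environment.

```python
import ipaddress

# Hypothetical change plan: node name -> (old IP, new IP). Replace with your own values.
PLAN = {
    "vrli-node1": ("10.10.10.11", "10.20.20.11"),
    "vrli-node2": ("10.10.10.12", "10.20.20.12"),
    "vrli-node3": ("10.10.10.13", "10.20.20.13"),
}
NEW_VIP = "10.20.20.10"        # hypothetical new VIP for the Integrated Load Balancer
NEW_GATEWAY = "10.20.20.1"     # hypothetical new default gateway
NEW_NETWORK = "10.20.20.0/24"  # hypothetical new subnet


def validate_plan(plan, vip, gateway, network):
    """Return a list of problems in the plan; an empty list means it looks consistent."""
    net = ipaddress.ip_network(network)
    gw = ipaddress.ip_address(gateway)
    problems = []
    if gw not in net:
        problems.append(f"gateway {gw} is outside {net}")
    # Every new node IP plus the VIP must be a usable, unique host address in the subnet.
    new_ips = [ipaddress.ip_address(new) for _, new in plan.values()]
    new_ips.append(ipaddress.ip_address(vip))
    for ip in new_ips:
        if ip not in net:
            problems.append(f"{ip} is outside {net}")
        if ip == gw:
            problems.append(f"{ip} collides with the gateway")
        if ip in (net.network_address, net.broadcast_address):
            problems.append(f"{ip} is the network or broadcast address")
    if len(set(new_ips)) != len(new_ips):
        problems.append("duplicate addresses among the new node IPs and VIP")
    return problems


if __name__ == "__main__":
    issues = validate_plan(PLAN, NEW_VIP, NEW_GATEWAY, NEW_NETWORK)
    print("\n".join(issues) if issues else "plan looks consistent")
```

The check is deliberately offline: it only catches typos and overlaps in the plan itself, not conflicts with other machines already using those addresses.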

After changing the properties:

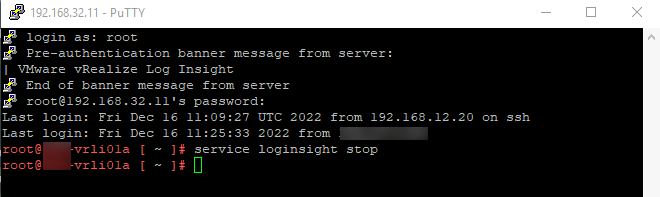

Stop the loginsight service

With the snapshots taken and the vApp properties updated, we can power on the vRLI nodes.

Once the nodes are up and running, we first stop the loginsight service on all nodes so that the cluster is down before we make the actual changes.

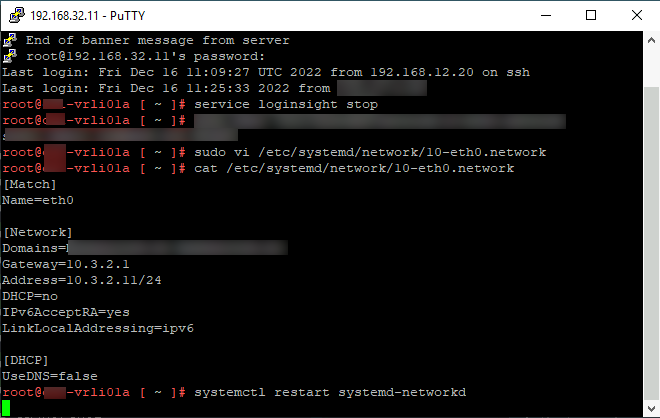
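If you'd rather not open an SSH session per node, the stop can be scripted. The Python sketch below builds one SSH command per node and only runs them when `dry_run=False`. It assumes key-based SSH access as `root`, the hypothetical node FQDNs shown, and that `service loginsight stop` is the stop command on your vRLI release; verify that against the KB article before running it for real.

```python
import subprocess

# Hypothetical node FQDNs; replace with your own vRLI nodes.
NODES = ["vrli-node1.example.com", "vrli-node2.example.com", "vrli-node3.example.com"]


def ssh_command(host, remote_cmd, user="root"):
    """Build the argv for running remote_cmd on host over SSH."""
    return ["ssh", f"{user}@{host}", remote_cmd]


def stop_loginsight(nodes, dry_run=True):
    """Stop the loginsight service on every node; with dry_run, only return the commands."""
    commands = [ssh_command(node, "service loginsight stop") for node in nodes]
    if not dry_run:
        for argv in commands:
            # check=True raises CalledProcessError if SSH or the remote command fails,
            # so we stop instead of silently continuing with a half-stopped cluster.
            subprocess.run(argv, check=True)
    return commands


if __name__ == "__main__":
    for argv in stop_loginsight(NODES):
        print(" ".join(argv))
```

Defaulting to a dry run means the script prints what it would do until you explicitly pass `dry_run=False`.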

Change IP settings and restart network

Using a text editor, we update the /etc/systemd/network/10-eth0.network file with the new IP details and restart the network service.

Note that the SSH session, and access to the node, will be lost when the network service restarts, since the node comes back up on its new IP.
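In a typical systemd-networkd file the `[Network]` section carries an `Address=` key (in CIDR notation) and a `Gateway=` key, and the network service is `systemd-networkd`. The Python sketch below rewrites just those two keys in the file's text and leaves every other line untouched. It assumes your 10-eth0.network follows that layout with a single `Address=` line, which you should confirm on your own nodes; the sample file content and addresses are hypothetical.

```python
import re

# Hypothetical sample of a 10-eth0.network file; compare with the real one on your node.
SAMPLE = """[Match]
Name=eth0

[Network]
Address=10.10.10.11/24
Gateway=10.10.10.1
DNS=10.10.10.2
"""


def update_network_file(text, new_address, new_gateway):
    """Return text with Address= and Gateway= in the [Network] section replaced.

    new_address must be in CIDR form, e.g. "10.20.20.11/24".
    """
    out = []
    section = None
    for line in text.splitlines(keepends=True):
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            section = stripped  # track which [Section] we are in
        elif section == "[Network]":
            if re.match(r"Address\s*=", stripped):
                line = f"Address={new_address}\n"
            elif re.match(r"Gateway\s*=", stripped):
                line = f"Gateway={new_gateway}\n"
        out.append(line)
    return "".join(out)


if __name__ == "__main__":
    print(update_network_file(SAMPLE, "10.20.20.11/24", "10.20.20.1"))
```

On a node you would read the real file, keep a copy of the original, write the result back and then run `systemctl restart systemd-networkd`.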

Change network segment

Now we can update the NSX segment with the new gateway IP address and subnet. Note that we could have created a new NSX segment with the new details and moved the nodes over individually, but I wanted to keep the name and ID of the segment, so I chose to keep them on the existing segment.

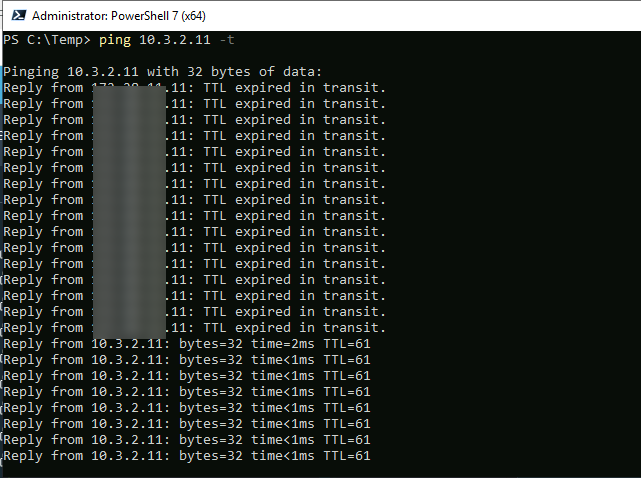

After a couple of seconds, the nodes should start responding to pings on their new IPs.

Update firewall rules

Depending on your environment, you might need to update your firewall rules to allow traffic to and from the new IPs. In my environment we use the NSX Distributed Firewall with rules targeting the VMs by tags, so no changes were needed.

Change config file

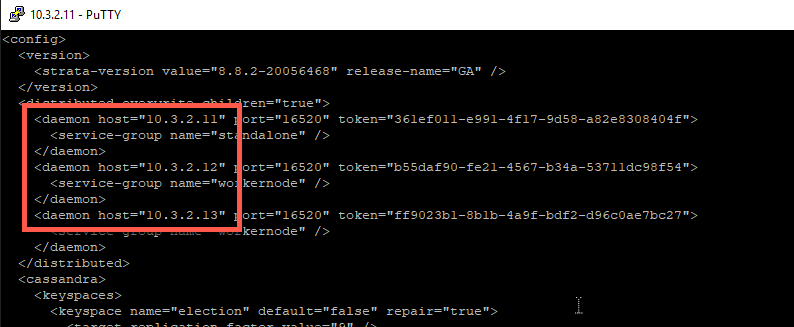

After changing the IP and reconnecting to the nodes via SSH, we need to change a configuration file.

Find the latest loginsight-config.xml#<number> file in the /storage/core/loginsight/config directory, then copy it to a new file with the number incremented by one.

In my setup the latest file was /storage/core/loginsight/config/loginsight-config.xml#18, so I copied it to /storage/core/loginsight/config/loginsight-config.xml#19.
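Note that "latest" has to be decided numerically: sorted as text, #9 comes after #18. The Python sketch below finds the highest-numbered file and copies it to the next number. It assumes the directory from the post and the `loginsight-config.xml#<number>` naming; `shutil.copy2` preserves timestamps and permission bits but not ownership, so check the copy's owner matches the original.

```python
import re
import shutil
from pathlib import Path

CONFIG_DIR = Path("/storage/core/loginsight/config")
PATTERN = re.compile(r"^loginsight-config\.xml#(\d+)$")


def latest_config(config_dir):
    """Return (path, number) for the highest-numbered loginsight-config.xml#N file."""
    best = None
    for entry in Path(config_dir).iterdir():
        m = PATTERN.match(entry.name)
        # Compare as integers so that #18 beats #9.
        if m and (best is None or int(m.group(1)) > best[1]):
            best = (entry, int(m.group(1)))
    if best is None:
        raise FileNotFoundError(f"no loginsight-config.xml#N files in {config_dir}")
    return best


def copy_to_next(config_dir):
    """Copy the latest config file to number N+1 and return the new path."""
    src, n = latest_config(config_dir)
    dst = Path(config_dir) / f"loginsight-config.xml#{n + 1}"
    shutil.copy2(src, dst)
    return dst
```

On a node, `copy_to_next(CONFIG_DIR)` would produce loginsight-config.xml#19 in the setup described above.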

Now we edit the new file in a text editor and update the IP details.

Although the KB article doesn't specify it, I updated the details for all three nodes in the new config file on each node. I did not change the VIP address in this file; we'll do that later through the vRLI UI.
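With three node addresses changing at once, a careless search-and-replace can hit a prefix, such as 10.10.10.1 inside 10.10.10.11. The Python sketch below replaces whole IPv4 addresses only, in a single pass, so an old address that happens to equal another node's new address is not rewritten twice. It assumes the node IPs appear as literal strings in the XML; the mapping is hypothetical and leaves out the VIP, matching the approach above. Review the diff before saving.

```python
import re

# Hypothetical old -> new node addresses. The VIP is deliberately not included,
# since it is changed later through the vRLI UI.
IP_MAP = {
    "10.10.10.11": "10.20.20.11",
    "10.10.10.12": "10.20.20.12",
    "10.10.10.13": "10.20.20.13",
}


def replace_ips(text, ip_map):
    """Replace whole IPv4 addresses from ip_map in one pass over text."""
    alternatives = "|".join(re.escape(ip) for ip in ip_map)
    # Lookarounds reject matches preceded by a digit/dot or followed by a digit or ".digit",
    # so 10.10.10.1 never matches inside 10.10.10.11 or 10.10.10.110.
    pattern = re.compile(r"(?<![\d.])(" + alternatives + r")(?!\.?\d)")
    return pattern.sub(lambda m: ip_map[m.group(1)], text)
```

Applied to the text of loginsight-config.xml#19, this swaps each node's address while leaving unrelated addresses alone.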

Restart nodes

After this change, we restart all nodes and verify that they come online with their new IPs.
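Rather than refreshing the browser while the nodes reboot, a small poll can tell you when each new address accepts connections. The Python sketch below retries a TCP connection until it succeeds or a timeout expires. It assumes the vRLI UI listens on HTTPS port 443; the timeout and interval values are arbitrary. A successful connection only shows that the port is open, not that the cluster is healthy, so still check the UI afterwards.

```python
import socket
import time


def wait_for_port(host, port=443, timeout=600.0, interval=5.0):
    """Poll until a TCP connection to host:port succeeds; return True, or False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Refused, unreachable or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, looping over the hypothetical new node IPs with `wait_for_port(ip)` reports each node as it comes back.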

Change DNS and certificates

Now is a good time to update the DNS entries for the vRLI nodes and the load-balanced virtual IP.

Note that if your certificates include the IP addresses, you will also have to create new certificates.
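To confirm that the updated DNS records resolve as expected before moving on, the Python sketch below compares each FQDN against its expected new IP. The names and addresses are hypothetical. The resolver is a parameter (defaulting to `socket.gethostbyname`, which returns a single IPv4 address) so it can be swapped out. Because it goes through the local resolver, a cached old answer can show up as a mismatch until the cache expires.

```python
import socket

# Hypothetical FQDN -> expected new IP mapping, including the VIP's FQDN.
EXPECTED = {
    "vrli.example.com": "10.20.20.10",
    "vrli-node1.example.com": "10.20.20.11",
    "vrli-node2.example.com": "10.20.20.12",
    "vrli-node3.example.com": "10.20.20.13",
}


def check_dns(expected, resolve=socket.gethostbyname):
    """Return {fqdn: (expected, actual)} for every name that does not resolve as expected."""
    mismatches = {}
    for fqdn, want in expected.items():
        try:
            got = resolve(fqdn)
        except OSError as exc:  # socket.gaierror is a subclass of OSError
            got = f"lookup failed: {exc}"
        if got != want:
            mismatches[fqdn] = (want, got)
    return mismatches
```

`check_dns(EXPECTED)` returns an empty dict once every record points at the new addresses.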

Log in and update the VIP

After restarting the nodes and waiting until they are accessible, we have to update the VIP assigned to the cluster.

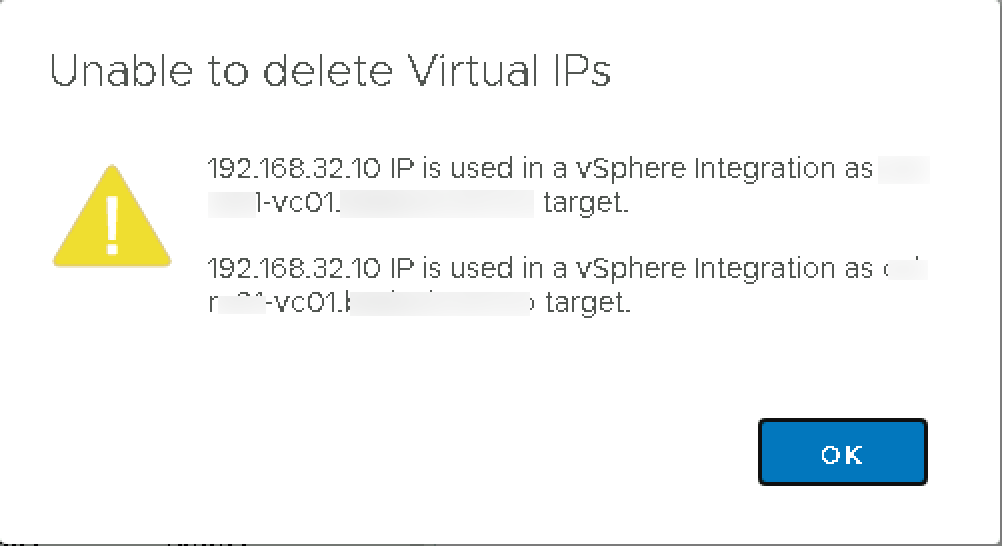

Since we'll keep the same FQDN, we need to delete the existing VIP and create a new one. Note that we won't be able to delete the VIP while it is in use.

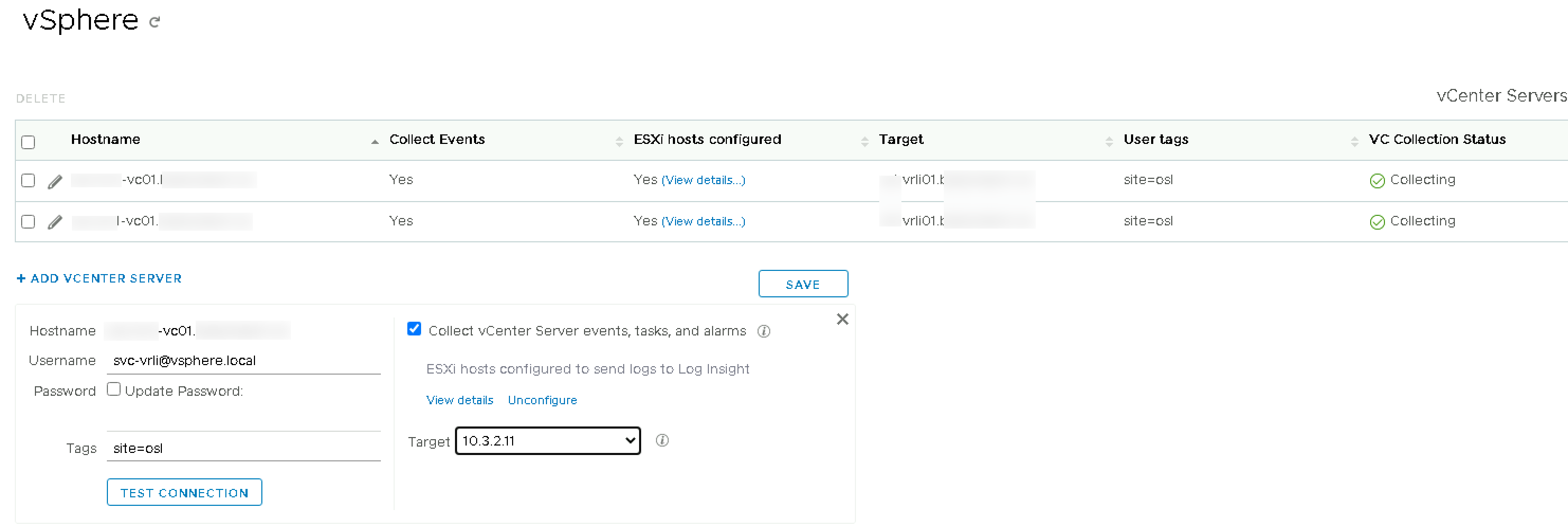

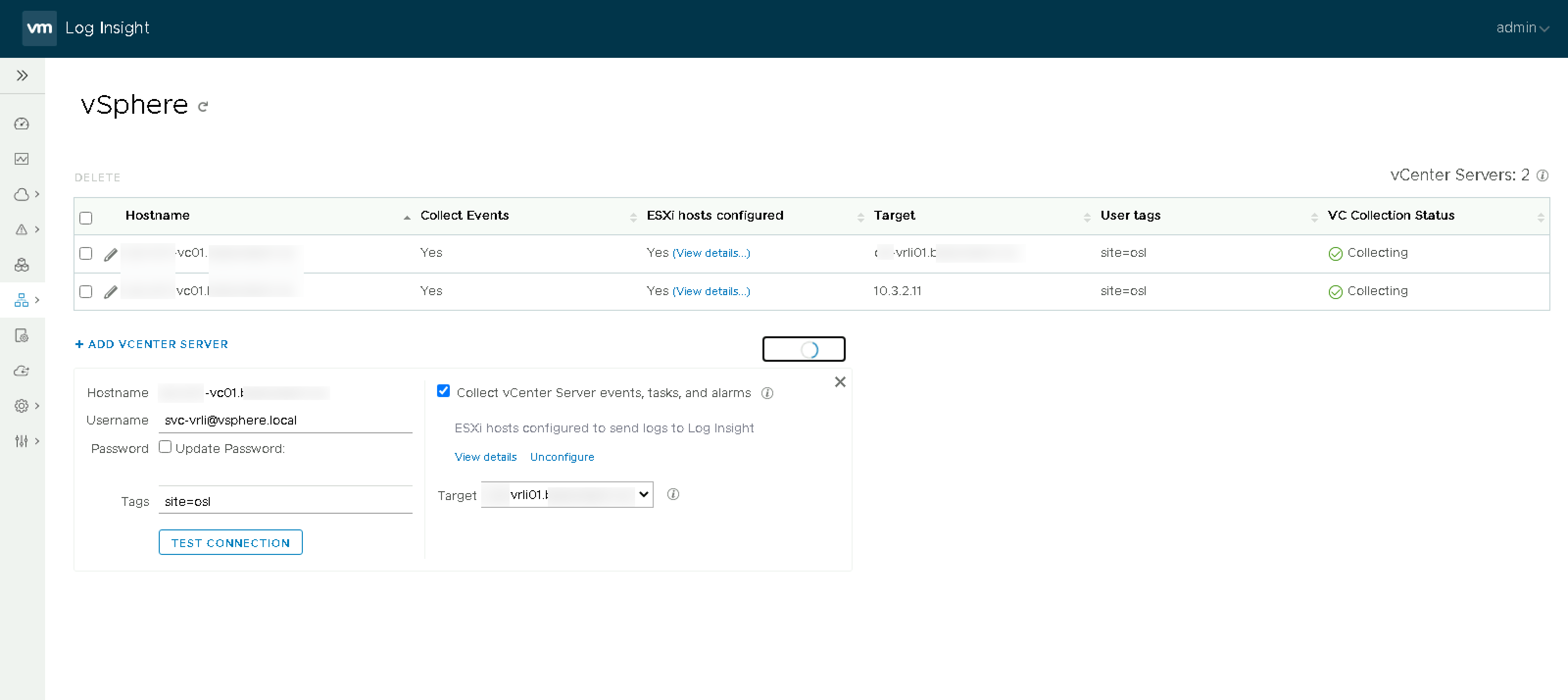

In this case the alert mentions the vSphere integrations, so we update those to use a specific node as their target.

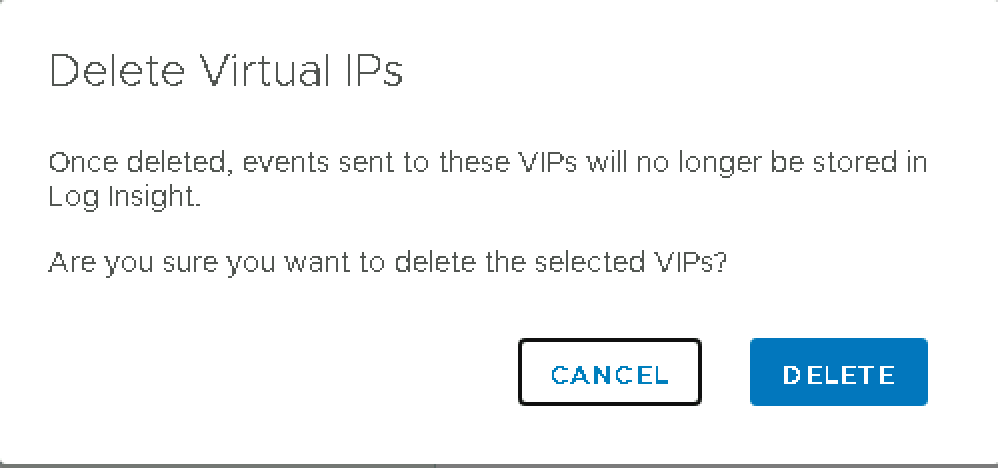

After this we can go ahead and delete the VIP.

Then add a new VIP with the correct IP address.

Finally, we go back to the vSphere integration and switch its target back to the VIP.

Verify hosts

With this, the IP address change should be complete on the vRLI side. The next part is to verify connected hosts and integrations, and to update them with the new details if they use the IP directly rather than the FQDN. Note that even hosts using the FQDN might take some time to pick up the change, until their DNS cache is refreshed.

Sync vRSLCM

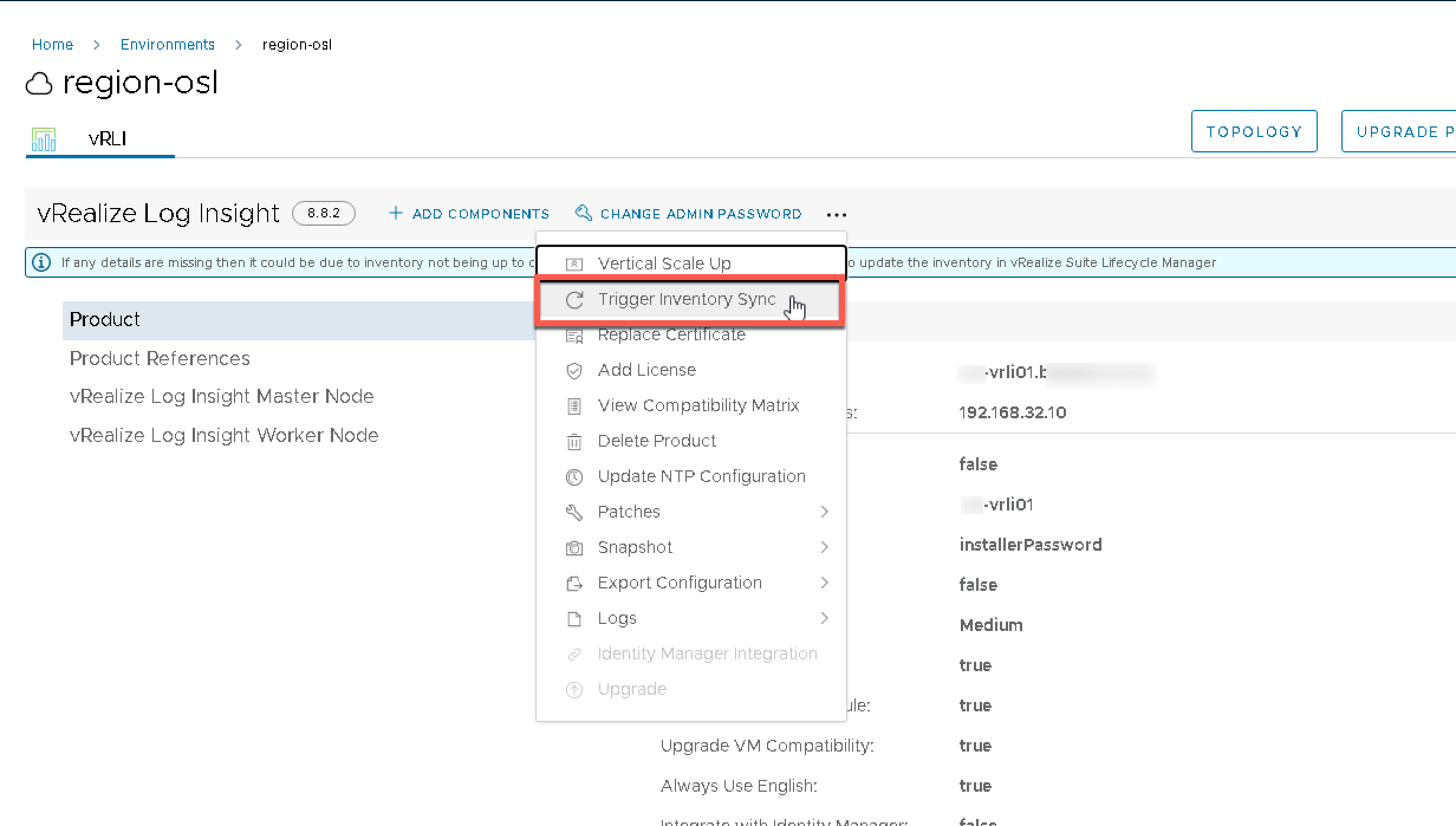

If you're using vRSLCM, make sure to update the vRLI installation there to reflect the IP change.

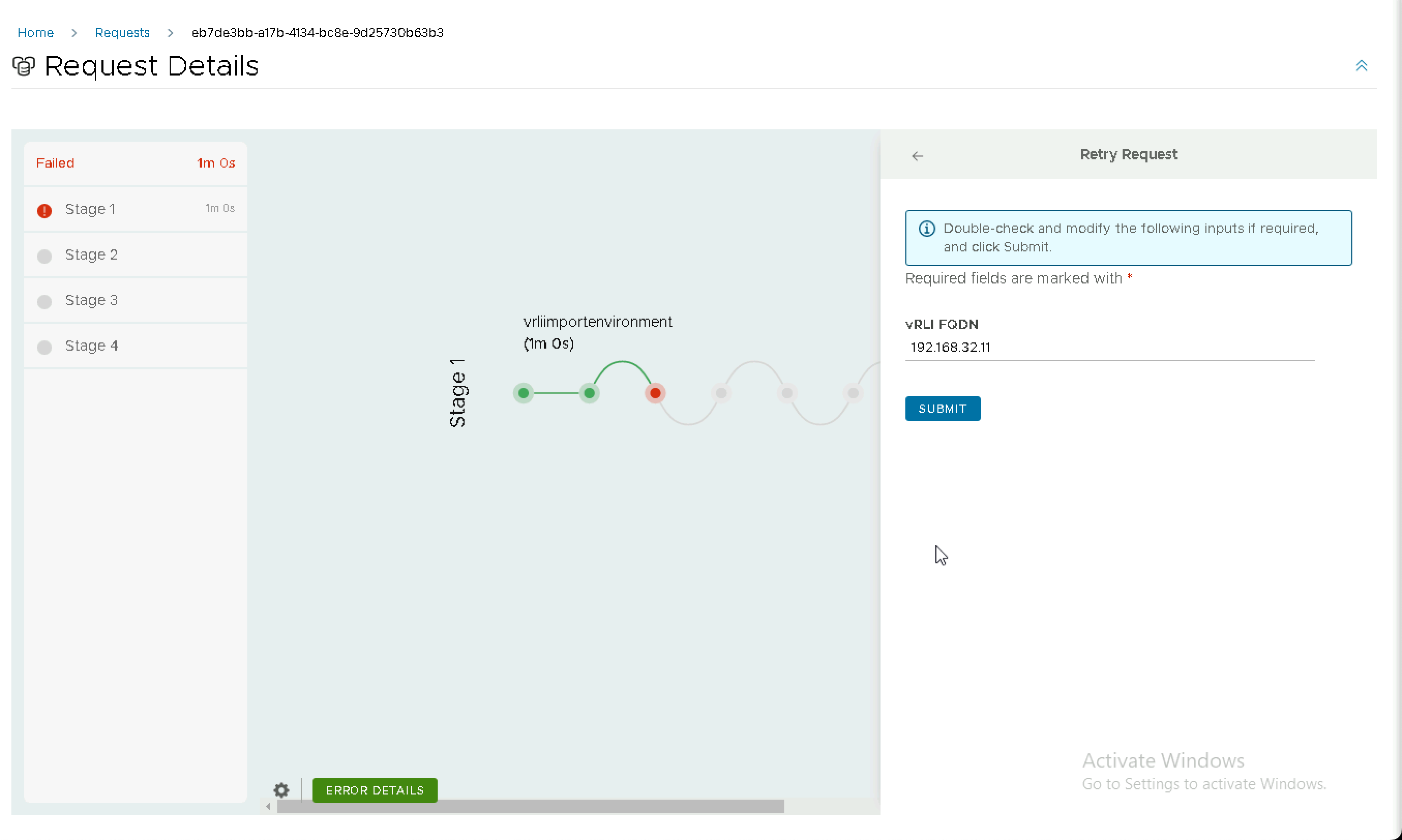

Note that the inventory sync will probably fail, in which case we have to enter the new IP for the master node.

After the sync has completed, verify that the environment has picked up the new IP addresses.

Summary

This article has shown how to change the IP addresses on a vRLI cluster. There are quite a few steps involved, but the process is fairly straightforward as long as you follow the documentation.

Thanks for reading