NSX-T 3.1 Home lab - Setup and Fabric configuration

I'm doing a mini-series on my NSX-T home lab setup. It's not meant for anything production, and there are other resources out there that explain NSX in more detail than I'll be doing here.

This post will be a write-up of the NSX-T Fabric configuration in my home lab. The next post will test the configuration, and the third post will be a test of some of the services available (e.g. DHCP, Load Balancer etc).

Latest NSX-T enhancements

With newer versions of NSX-T and vSphere, a couple of enhancements have made the setup a lot easier, like the move to a single N-VDS in 2.4.2/2.5 and the ability to run NSX on a vSphere Distributed Switch (VDS) in vCenter with VDS version 7.0.

In NSX-T 3.1 we got the ability to have the Edge TEP on the same subnet as the hypervisor TEP. A nice write-up of this feature can be found here: https://www.virten.net/2020/11/nsx-t-3-1-enhancement-shared-esxi-and-edge-transport-vlan-with-a-single-uplink/

Lab environment

First let's have a quick look at the lab environment:

Compute

I have 3 Intel NUCs running vSAN in a cluster in vCenter. The NUCs are running ESXi 7.0.1, the vCenter is running 7.0.2

Since the NUCs ship with only one NIC, I've added an extra USB NIC which is used for vSAN traffic. The on-board NIC is used for management, vMotion and VM traffic, and is the NIC which will be used for NSX later on.

Network

My home network consists of a few VLANs which are all routed and have .1 configured as the network gateway. The VLANs used in the setup are listed in the table below.

| VLAN | Subnet | Role | Virtual Switch |
|---|---|---|---|
| 100 | 192.168.100.0/24 | Management | DSwitch-01 |
| 102 | 192.168.102.0/24 | vSAN | DSwitch-vSAN |
| 103 | 192.168.103.0/24 | TEPs | DSwitch-01 |
| 99 | 192.168.99.0/24 | Edge Uplinks | DSwitch-01 |

As you can see I've already prepared a network for the Tunnel Endpoints (TEP) that will be used in the NSX-T environment.

These networks are also defined as portgroups on two different distributed switches in vCenter that the hosts are connected to.

The Distributed Virtual Switches are running version 7.0.0, which lets us deploy NSX-T on the VDS directly.

My physical network equipment supports Jumbo frames, but the current USB NICs used in the NUCs have a maximum MTU of 4000 so I've set the VDS and vmkernel ports to that MTU size.
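As a quick sanity check on the MTU choice, here is a minimal sketch. It assumes the commonly documented NSX-T requirement of at least 1600 bytes for overlay (Geneve) traffic, and the 9000-byte figure for the physical switches is only a placeholder for "jumbo frames", not a value from my setup.

```python
# Minimum MTU that the NSX-T documentation asks for on the overlay transport
# network. Treated here as an assumption; check the docs for your version.
OVERLAY_MIN_MTU = 1600


def effective_mtu(*link_mtus):
    """The usable MTU on a path is limited by its smallest link."""
    return min(link_mtus)


def supports_overlay(*link_mtus, minimum=OVERLAY_MIN_MTU):
    """True if every link on the path can carry overlay-sized frames."""
    return effective_mtu(*link_mtus) >= minimum


if __name__ == "__main__":
    # 9000 = placeholder for the physical switches, 4000 = the VDS/vmkernel MTU.
    print(effective_mtu(9000, 4000), supports_overlay(9000, 4000))
```

With the VDS set to 4000 there is plenty of headroom over the overlay minimum.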

Preparations

Check out the NSX-T Data Center Workflow for vSphere for details and documentation on the process

IP Addresses and DNS records

Before deploying NSX-T in the environment I've prepared a few IP addresses and DNS records

| Role | IP |
|---|---|
| NSX Manager | 192.168.100.50 |
| NSX-T Edge node 1 | 192.168.100.54 |
| NSX-T Edge node 2 (currently not in use) | 192.168.100.55 |
| NSX-T T0 GW Interface 1 | 192.168.99.2 |

Note that I've reserved addresses for a second Edge which I'm not going to use at the moment.
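A plan like this is easy to get wrong by a digit, so a small check can help. The sketch below uses Python's `ipaddress` module to confirm that each reserved address falls inside the VLAN subnet I expect it to live in. The pairing of each role with a VLAN is my own reading of the two tables, so treat it as an assumption.

```python
import ipaddress

# VLAN subnets taken from the network table.
SUBNETS = {
    "Management": ipaddress.ip_network("192.168.100.0/24"),
    "Edge Uplinks": ipaddress.ip_network("192.168.99.0/24"),
}

# Reserved addresses, paired with the VLAN role each one is expected to be in
# (the pairing is an assumption based on the address ranges).
PLANNED = {
    "NSX Manager": ("192.168.100.50", "Management"),
    "NSX-T Edge node 1": ("192.168.100.54", "Management"),
    "NSX-T Edge node 2": ("192.168.100.55", "Management"),
    "NSX-T T0 GW Interface 1": ("192.168.99.2", "Edge Uplinks"),
}


def misplaced_addresses(planned, subnets):
    """Return the names whose IP is outside the expected subnet."""
    return [
        name
        for name, (ip, role) in planned.items()
        if ipaddress.ip_address(ip) not in subnets[role]
    ]


if __name__ == "__main__":
    # An empty list means every address sits in its expected subnet.
    print(misplaced_addresses(PLANNED, SUBNETS))
```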

Certificate

I've also created a certificate for the NSX-T Manager signed by the Root CA in the lab environment.

Note that the CSR generator included with the NSX-T Manager currently doesn't support Subject Alternative Name (SAN) information. I've generated the CSR with OpenSSL and used that for requesting a certificate.
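For reference, here is a rough sketch of how an OpenSSL request config with SAN entries could be generated. This is not the exact file I used; the host name `nsx.lab.example` is hypothetical, and the only value taken from this post is the NSX Manager IP.

```python
def build_csr_config(common_name, dns_names, ip_addresses):
    """Render an OpenSSL request config with a subjectAltName section."""
    lines = [
        "[req]",
        "prompt = no",
        "distinguished_name = dn",
        "req_extensions = v3_req",
        "",
        "[dn]",
        f"CN = {common_name}",
        "",
        "[v3_req]",
        "subjectAltName = @alt_names",
        "",
        "[alt_names]",
    ]
    # OpenSSL expects numbered entries: DNS.1, DNS.2, ... and IP.1, IP.2, ...
    lines += [f"DNS.{i} = {name}" for i, name in enumerate(dns_names, start=1)]
    lines += [f"IP.{i} = {ip}" for i, ip in enumerate(ip_addresses, start=1)]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical FQDN and short name; the IP is the NSX Manager address.
    print(build_csr_config(
        "nsx.lab.example",
        ["nsx.lab.example", "nsx"],
        ["192.168.100.50"],
    ))
```

Saved to a file such as `nsx-csr.cnf` (name is arbitrary), the config can be fed to `openssl req -new -newkey rsa:2048 -nodes -keyout nsx.key -out nsx.csr -config nsx-csr.cnf` to produce the key and CSR.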

Deploy NSX manager appliance

VMware documentation reference

I've downloaded the NSX Manager appliance and imported the OVF to the cluster. I won't go into details about this; I just followed the deployment wizard.

In my lab I've selected to deploy a small appliance which requires 4 vCPUs, 16 GB RAM and 300 GB disk space. For more details about the NSX Manager requirements look at the official documentation

Note that I won't be deploying an NSX Manager cluster in my setup. In a production environment you should naturally follow best practices and configure a cluster of NSX Managers.

NSX-T deployment

Now let's get rocking with our NSX-T setup!

We'll start the NSX manager and prepare it for configuring NSX in the environment

Initial Manager config

After first login I'll accept the EULA and optionally enable the CEIP

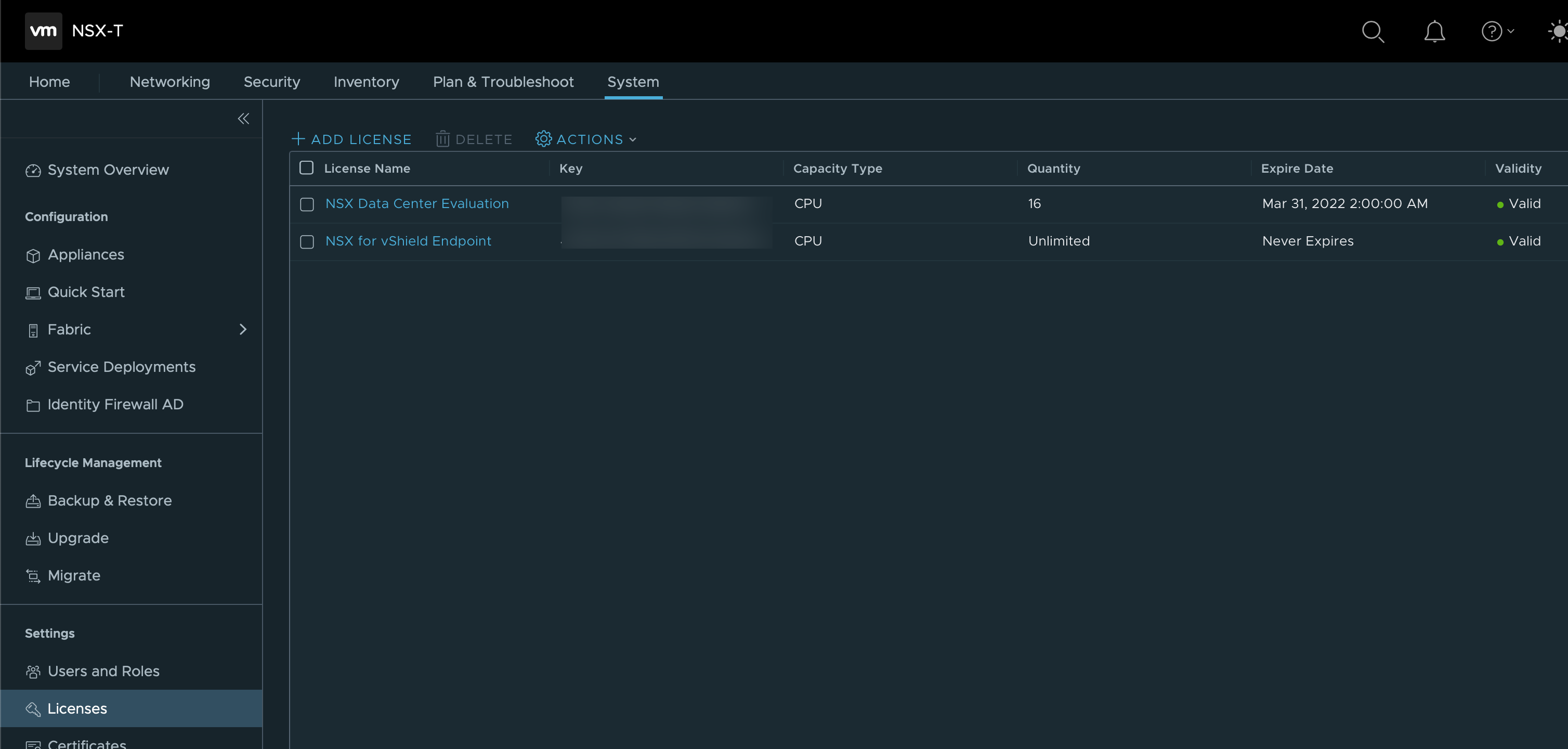

License

Next I'll add the license.

Certificate

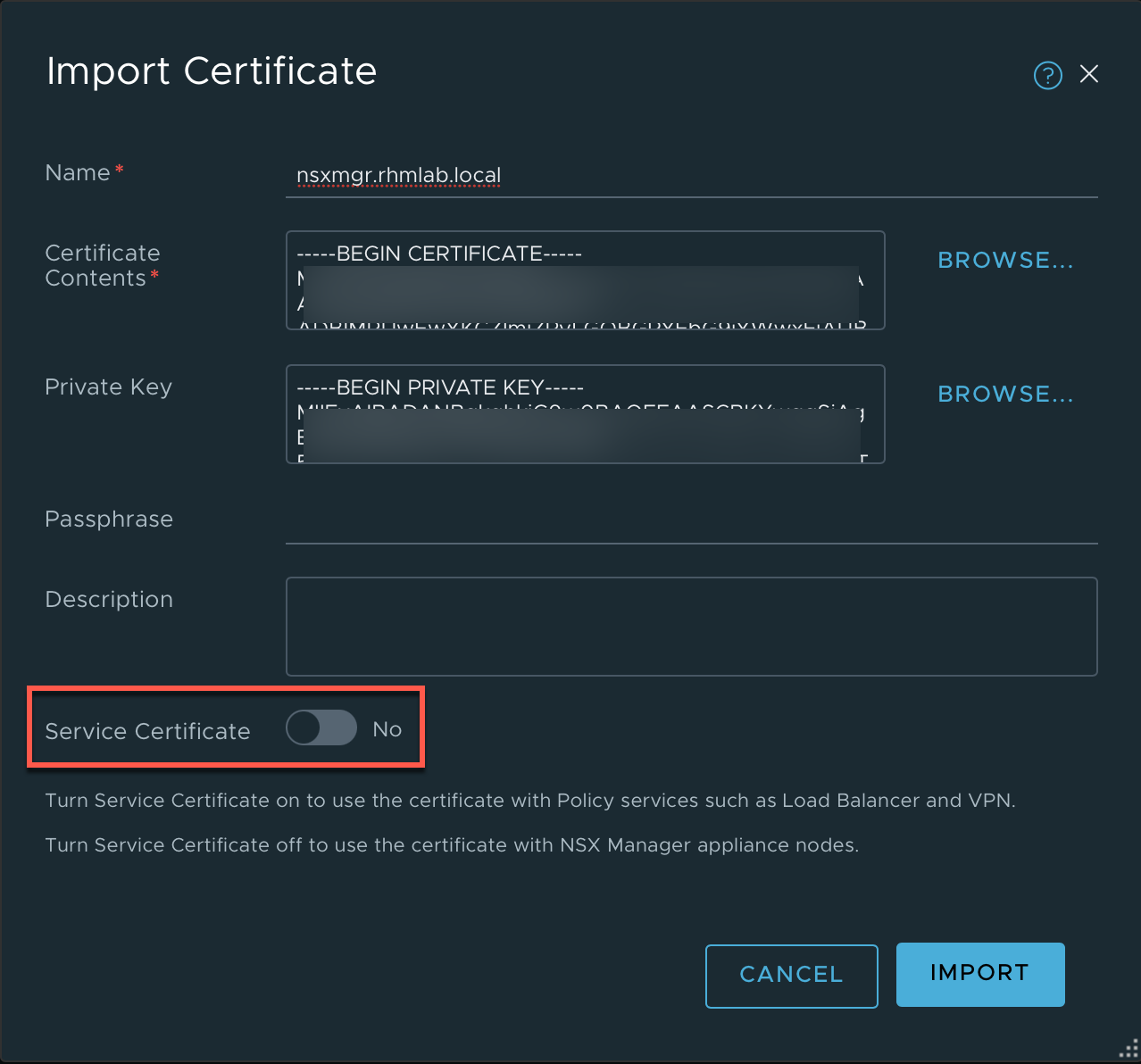

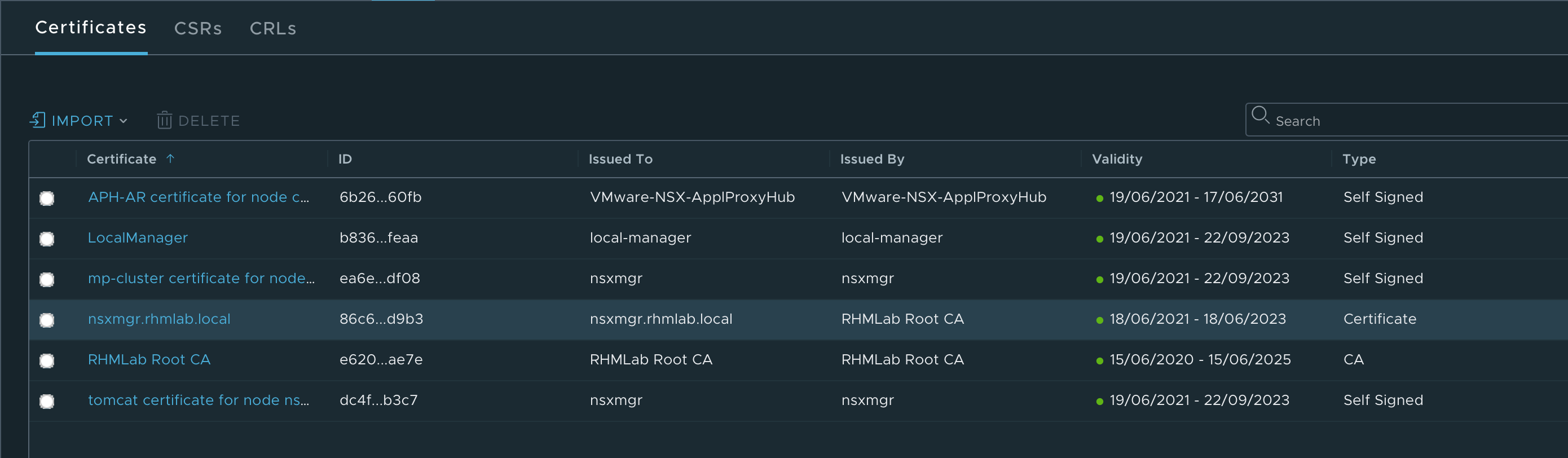

Before continuing I'll replace the self-signed certificate with my own CA signed certificate.

This is done in two steps and the process is detailed in the official documentation

First we'll upload the certificate and the Root CA certificate in the Manager. Note that the certificate should NOT be added as a Service certificate.

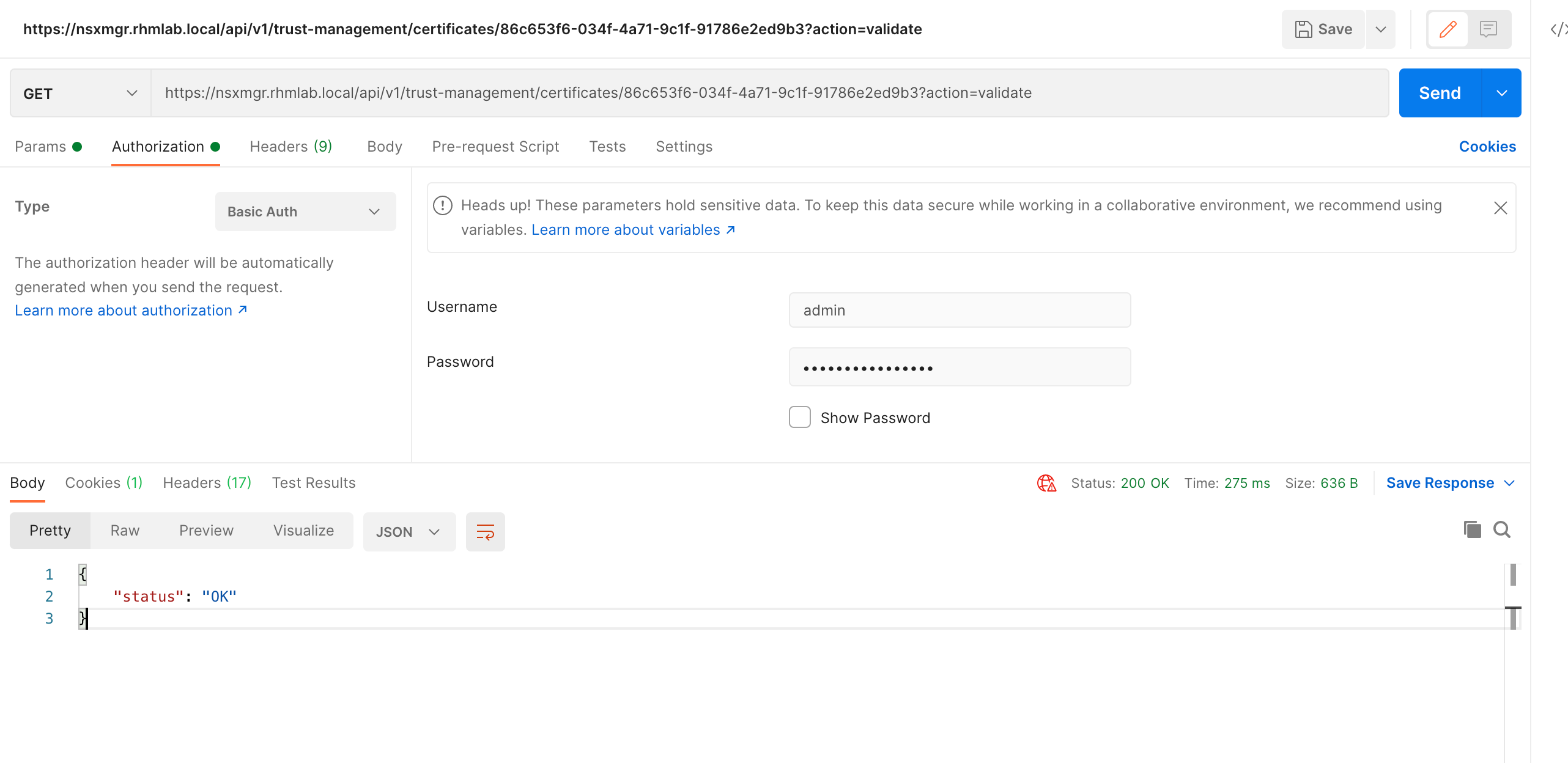

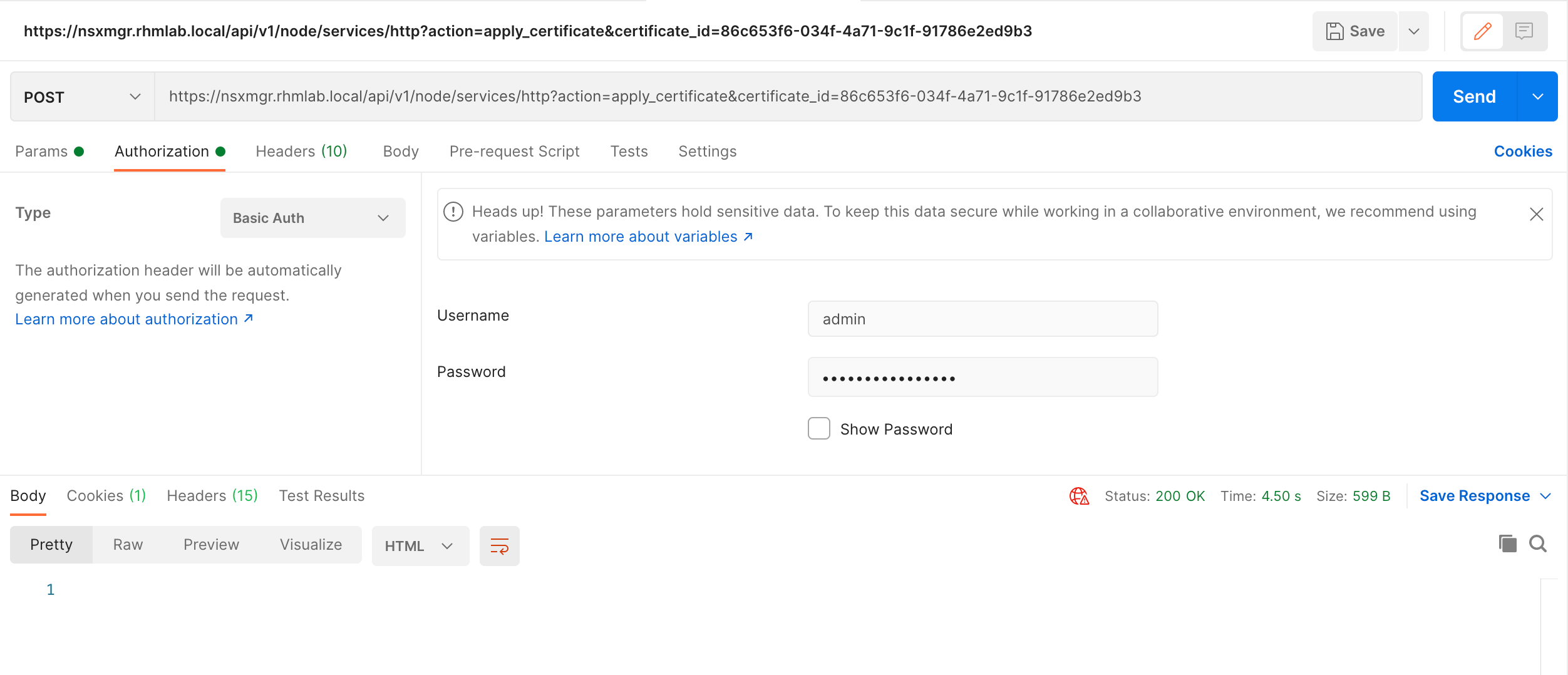

And then we'll have to use the REST API to replace it. I'll use Postman to run the API calls.

Information needed, besides authentication, is the ID of the certificate we want to set the NSX manager to use.

First we'll run an API call for validating the certificate

GET /api/v1/trust-management/certificates/<certificate-id>?action=validate

And if the certificate is valid we can go ahead and replace it

POST /api/v1/node/services/http?action=apply_certificate&certificate_id=<certificate-id>

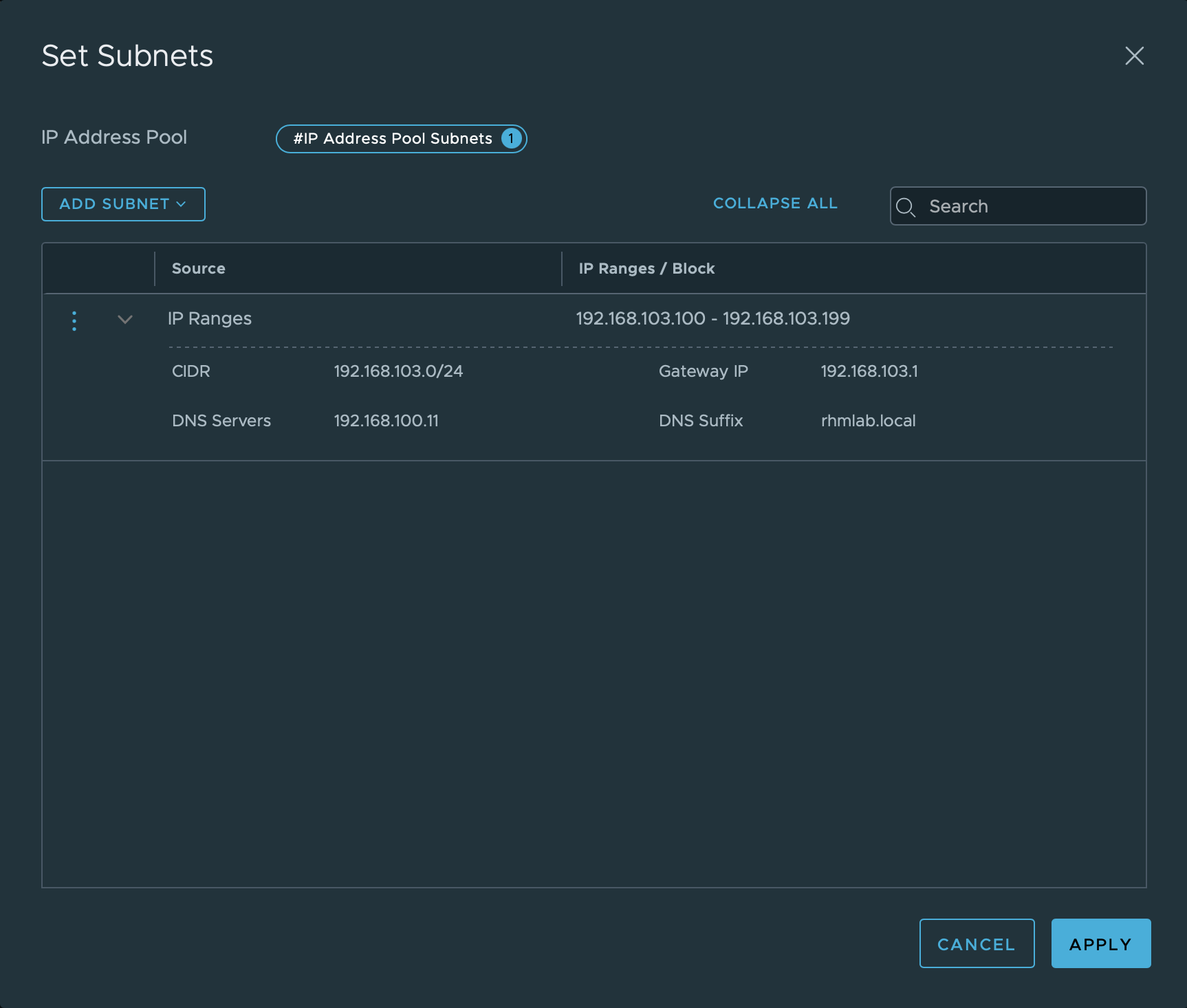
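If you'd rather script the two calls than click through Postman, they could be sketched in Python roughly like this. The block only builds the requests and doesn't send anything. The helper names are my own, basic authentication is an assumption about how you log in, and the certificate ID shown is a placeholder.

```python
import base64
import urllib.request


def nsx_request(manager, path, method, user, password):
    """Build (but do not send) a basic-auth request to the NSX Manager API."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{manager}{path}",
        method=method,
        headers={"Authorization": f"Basic {token}"},
    )


def validate_request(manager, cert_id, user, password):
    """Request that asks the Manager to validate an uploaded certificate."""
    path = f"/api/v1/trust-management/certificates/{cert_id}?action=validate"
    return nsx_request(manager, path, "GET", user, password)


def apply_request(manager, cert_id, user, password):
    """Request that tells the Manager's HTTP service to use the certificate."""
    path = (
        "/api/v1/node/services/http"
        f"?action=apply_certificate&certificate_id={cert_id}"
    )
    return nsx_request(manager, path, "POST", user, password)


if __name__ == "__main__":
    # "cert-id-placeholder" stands in for the real ID shown in the Manager UI.
    req = validate_request("192.168.100.50", "cert-id-placeholder", "admin", "secret")
    print(req.get_method(), req.full_url)
```

To actually send a request you would pass it to `urllib.request.urlopen`, together with an SSL context that trusts whatever certificate the Manager is presenting at that point.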

IP Pools

Our Tunnel Endpoints will need IP addresses and I've set aside a subnet for this as mentioned. In NSX Manager we'll add an IP pool with addresses from this subnet. (The IP pool I'm using is probably way larger than needed in a lab setup like this)

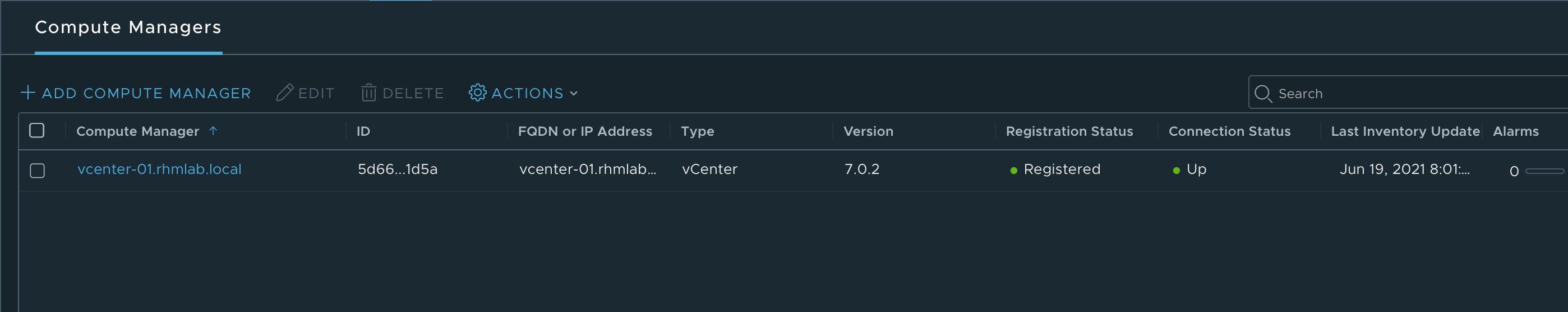
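To get a feel for how much of the pool is actually needed, here is a small back-of-the-envelope sketch. The range below is a made-up example inside the TEP subnet, not the one from my screenshot, and the count assumes one TEP per host and per Edge, which matches a single-uplink setup.

```python
import ipaddress


def pool_size(start, end):
    """Number of addresses in an inclusive start-end IP range."""
    first = ipaddress.ip_address(start)
    last = ipaddress.ip_address(end)
    if last < first:
        raise ValueError("range end is before range start")
    return int(last) - int(first) + 1


def teps_needed(hosts, teps_per_host, edges, teps_per_edge):
    """Total TEP addresses consumed by hosts and Edge nodes."""
    return hosts * teps_per_host + edges * teps_per_edge


if __name__ == "__main__":
    # Hypothetical range inside 192.168.103.0/24.
    size = pool_size("192.168.103.10", "192.168.103.59")
    # Three NUCs plus the two Edge nodes planned for, one TEP each (assumption).
    needed = teps_needed(hosts=3, teps_per_host=1, edges=2, teps_per_edge=1)
    print(f"pool has {size} addresses, lab needs {needed}")
```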

Compute Manager

With all that sorted we'll connect the NSX manager to our vCenter server so we can configure our ESXi hosts and deploy our edge nodes.

I've created a specific service account for the connection

Fabric configuration

Now we're ready for building out our network fabric which will consist of the following:

- Transport Zones

- Overlay

- VLAN

- Transport Nodes

- ESXi Hosts

- Edge VMs

- Edge clusters

Take a look at this summary of the Key concepts in NSX-T to learn more about them.

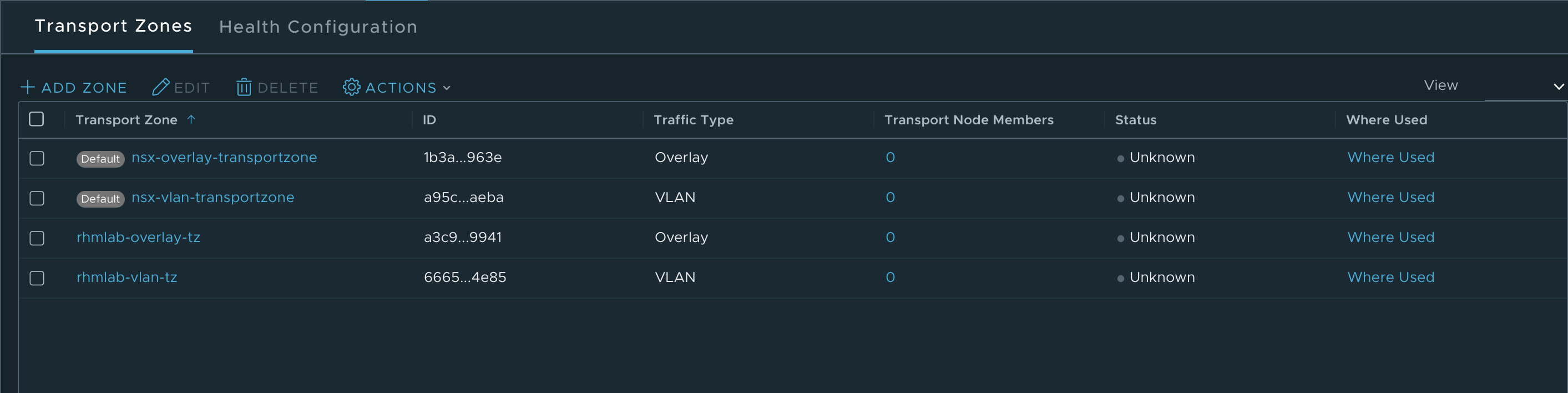

Transport Zone

The first thing we'll create are the Transport Zones, which will be used on multiple occasions later on. A Transport Zone is a collection of hypervisor hosts that make up the span of logical switches.

The defaults could be used, but I like to create my own.
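I created mine in the UI, but for the curious, the same two zones could probably be created through the Manager API by POSTing a small JSON body to `/api/v1/transport-zones`. The sketch below only builds those bodies. The zone names are hypothetical, and the field names reflect my understanding of the API, so verify them against the API reference for your version.

```python
import json

VALID_TRANSPORT_TYPES = ("OVERLAY", "VLAN")


def transport_zone_body(name, transport_type):
    """Build the JSON body for creating one Transport Zone."""
    if transport_type not in VALID_TRANSPORT_TYPES:
        raise ValueError(f"transport_type must be one of {VALID_TRANSPORT_TYPES}")
    return {"display_name": name, "transport_type": transport_type}


if __name__ == "__main__":
    # Hypothetical names for the two zones used in the lab.
    for body in (
        transport_zone_body("tz-lab-overlay", "OVERLAY"),
        transport_zone_body("tz-lab-vlan", "VLAN"),
    ):
        print(json.dumps(body))
```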

Uplink Profiles

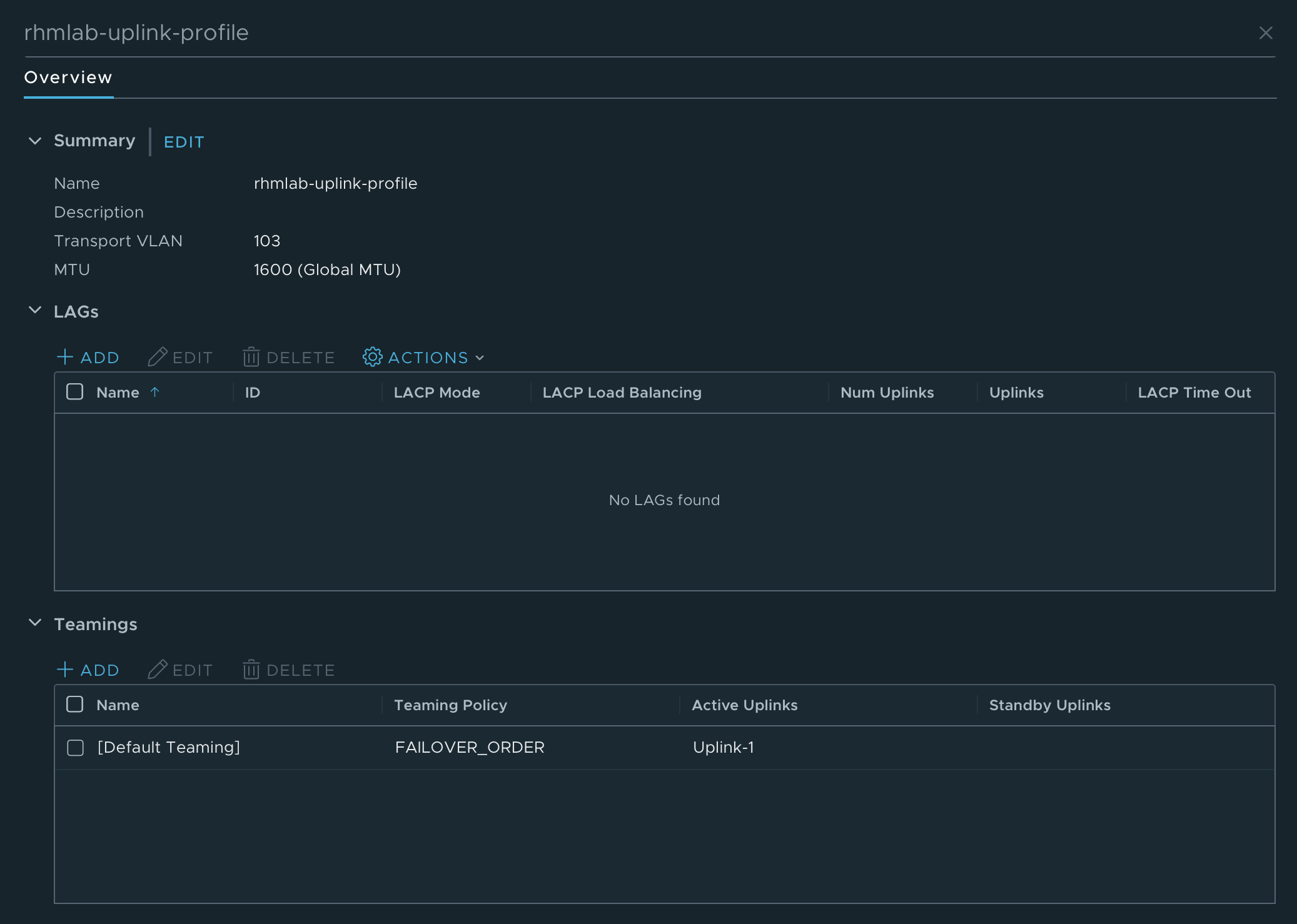

Uplink profiles will be used when we configure our Transport Nodes, both Hosts and Edge VMs. The profile defines how a Host Transport node (hypervisor) or an Edge Transport node (VM) will connect to the physical network.

Again I'm creating my own profile and leaving the default profiles as they are.

In my environment I have only one Uplink to use. Note that I've set the Transport VLAN to 103 which also corresponds with the TEP VLAN mentioned previously.
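Expressed as an API payload, a single-uplink profile like this might look roughly as follows. This is a sketch of what I believe the body for `POST /api/v1/host-switch-profiles` looks like, not an export from my Manager. The profile and uplink names are hypothetical; only the transport VLAN of 103 comes from my setup.

```python
import json


def uplink_profile_body(name, transport_vlan, active_uplinks):
    """Build a failover-order uplink profile body with the given uplinks."""
    if not 0 <= transport_vlan <= 4094:
        raise ValueError("transport_vlan must be between 0 and 4094")
    if not active_uplinks:
        raise ValueError("at least one active uplink is required")
    return {
        "resource_type": "UplinkHostSwitchProfile",
        "display_name": name,
        "transport_vlan": transport_vlan,
        "teaming": {
            "policy": "FAILOVER_ORDER",
            "active_list": [
                {"uplink_name": uplink, "uplink_type": "PNIC"}
                for uplink in active_uplinks
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical names; VLAN 103 is the TEP VLAN from the network table.
    print(json.dumps(uplink_profile_body("up-lab-single", 103, ["uplink-1"]), indent=2))
```

With only one uplink in the active list, each transport node ends up with a single TEP, which is why the TEP VLAN and the IP pool have to line up.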

Transport Node Profile

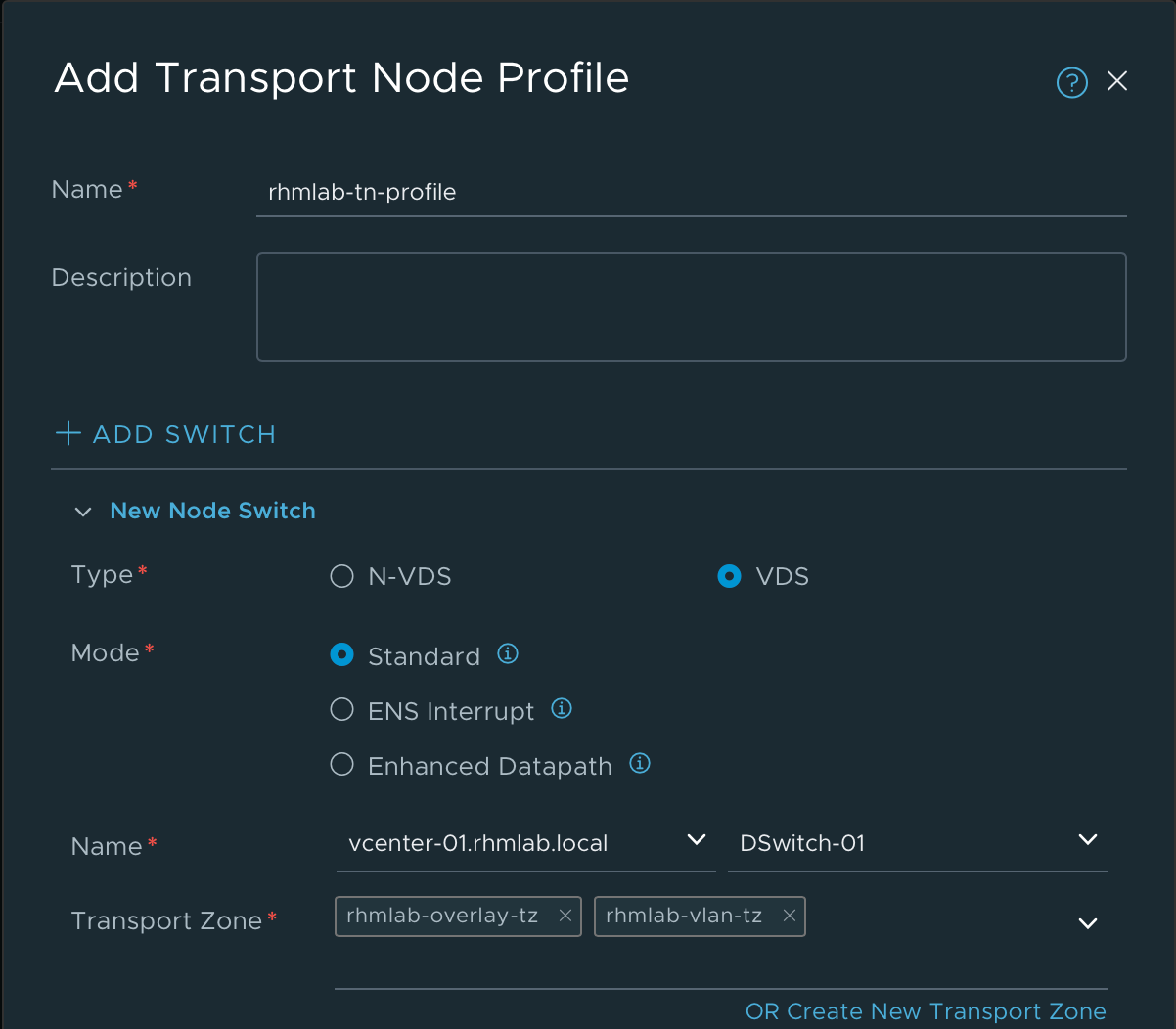

Although not strictly needed, I'm creating a Transport Node profile which will let me configure an entire cluster of hosts with the same settings instead of having to configure each and every host

In the Transport Node profile we first select the type of Host switch. In my case I'm selecting the VDS option, which will let me select a specific switch in vCenter.

We'll also add in our newly created Transport Zones

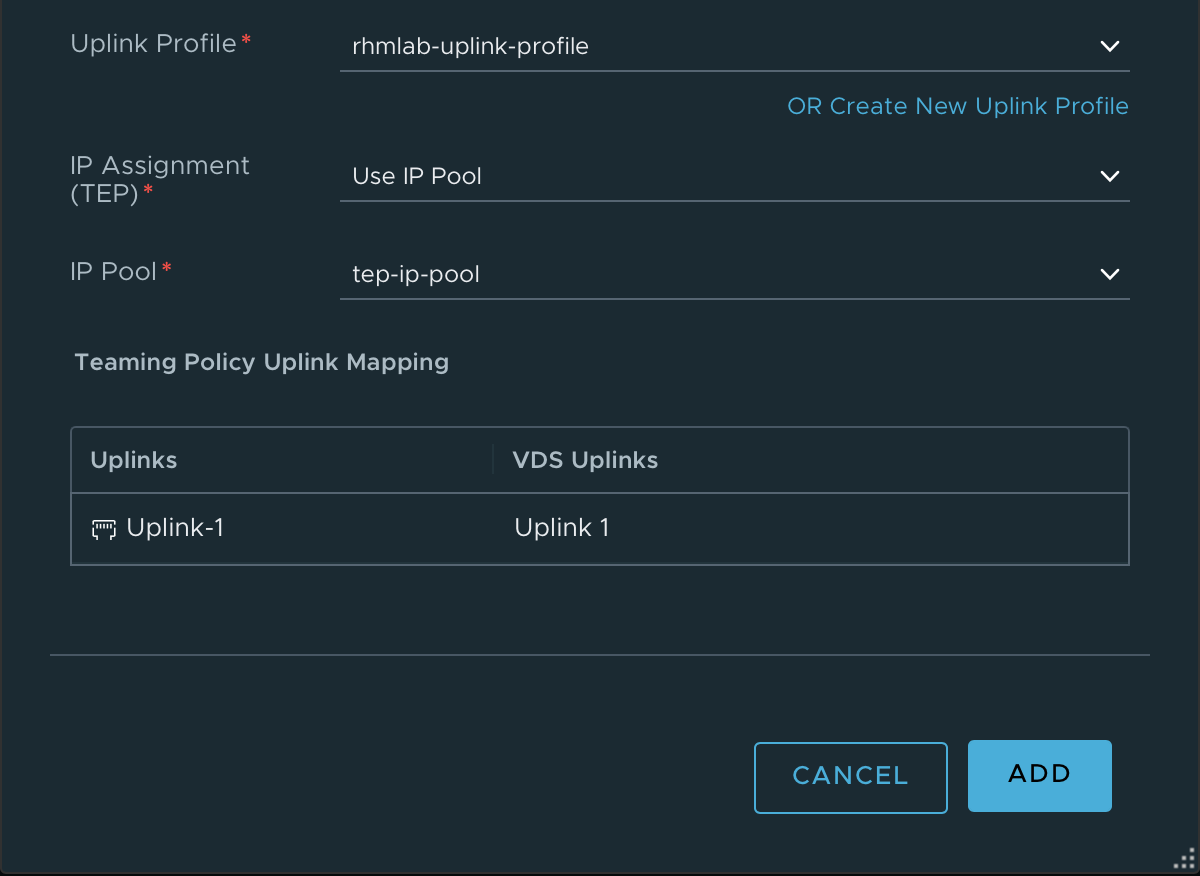

We'll select the Uplink profile and the IP Pool we created earlier. Finally, we can set the mapping between the uplinks.

Configure NSX on hosts

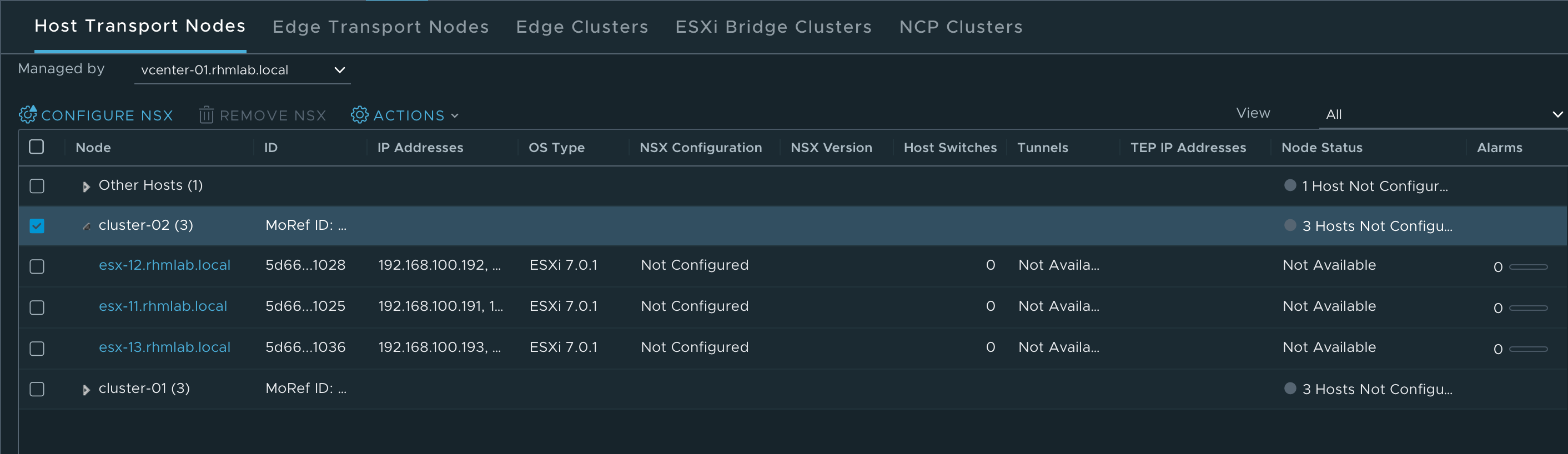

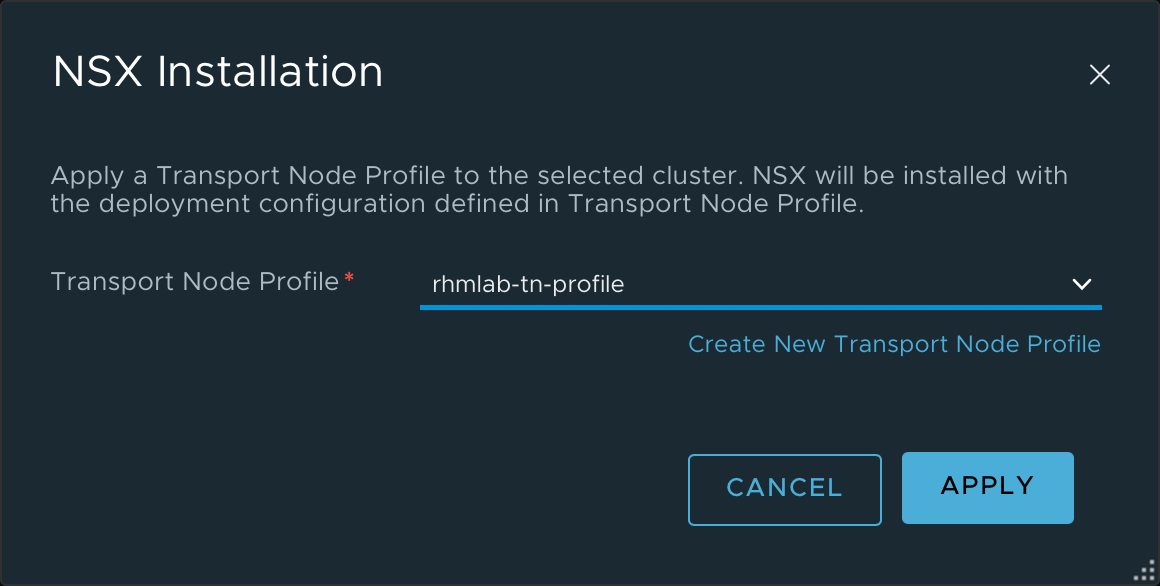

With our Transport Node profile we can go ahead and configure our ESXi hosts for NSX

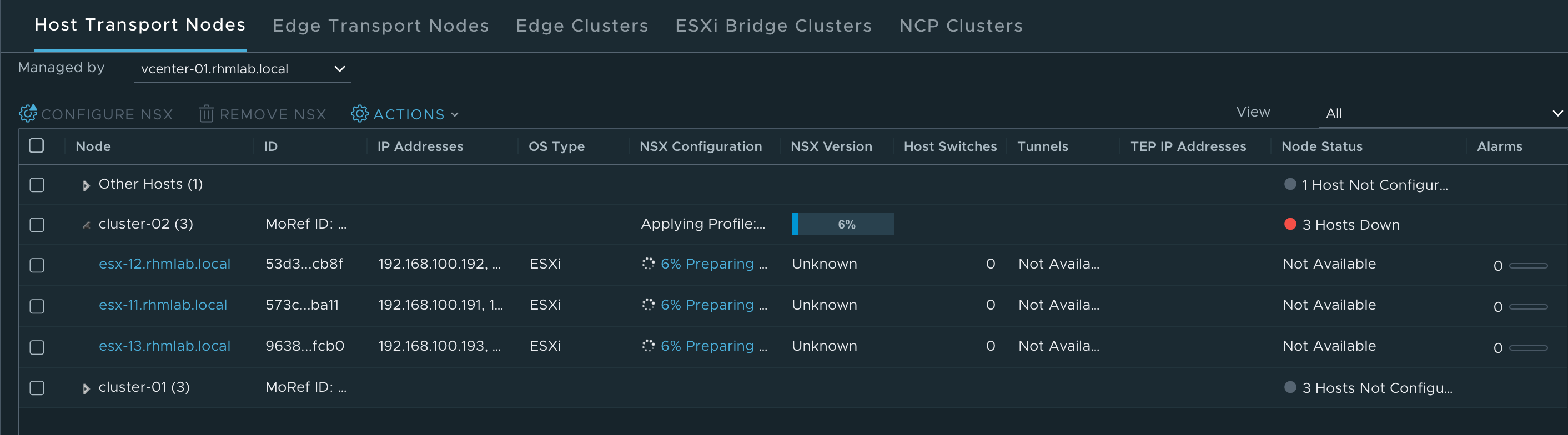

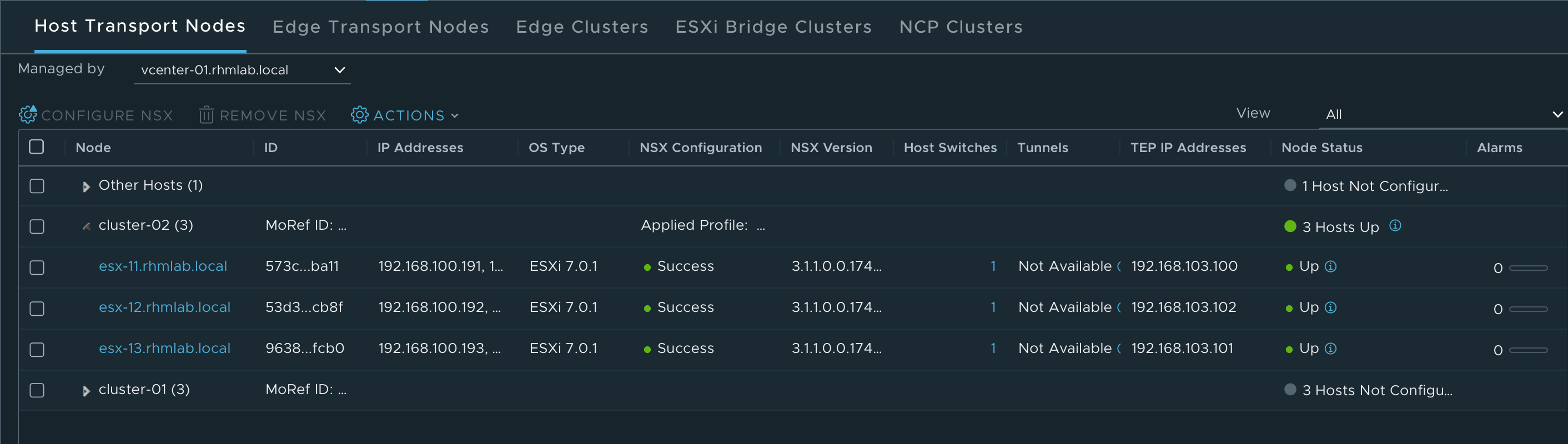

After selecting the profile NSX Manager will go ahead and configure our ESXi hosts.

After a few minutes our hosts should be configured and ready for NSX

Trunk segment

Next up is to create our Edge VMs which we will need for our Gateways and Services (NAT, DHCP, Load Balancer).

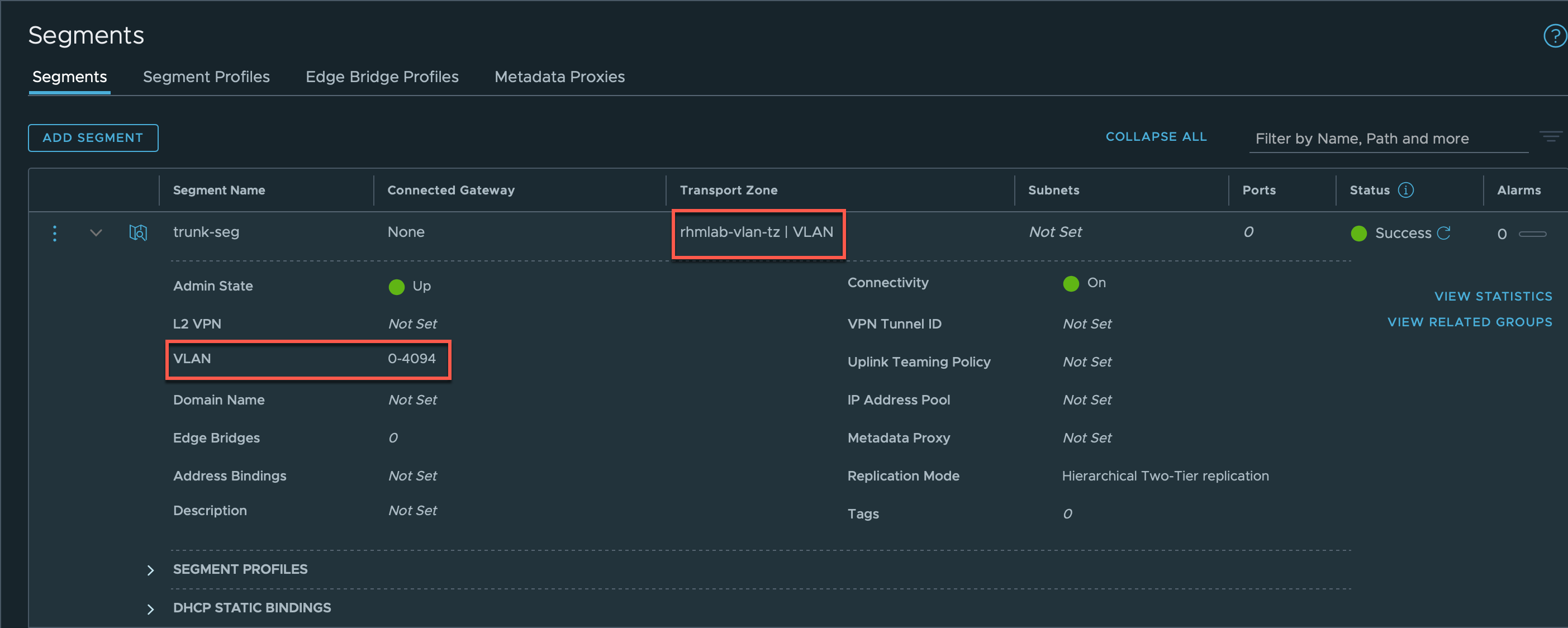

But before we deploy those we'll have to create a segment for the uplink of the Edge VMs. This will be a Trunk segment which we create in NSX. Initially I created a Trunk portgroup on the VDS in vSphere, but that doesn't work. The Trunk needs to be configured as a logical segment in NSX-T when using the same VLAN for both the Hypervisor TEPs and the Edge VM TEPs

Edge VM

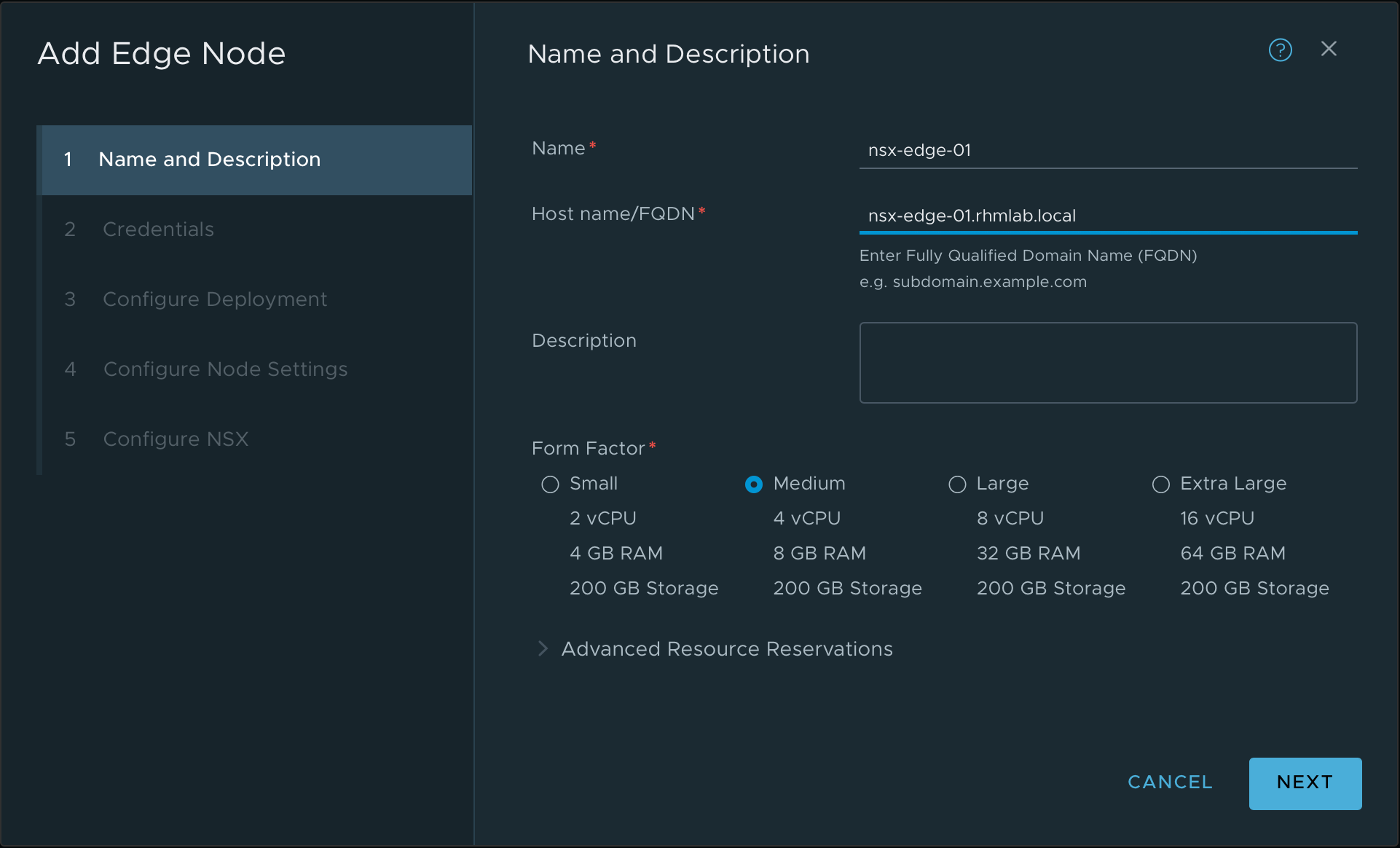

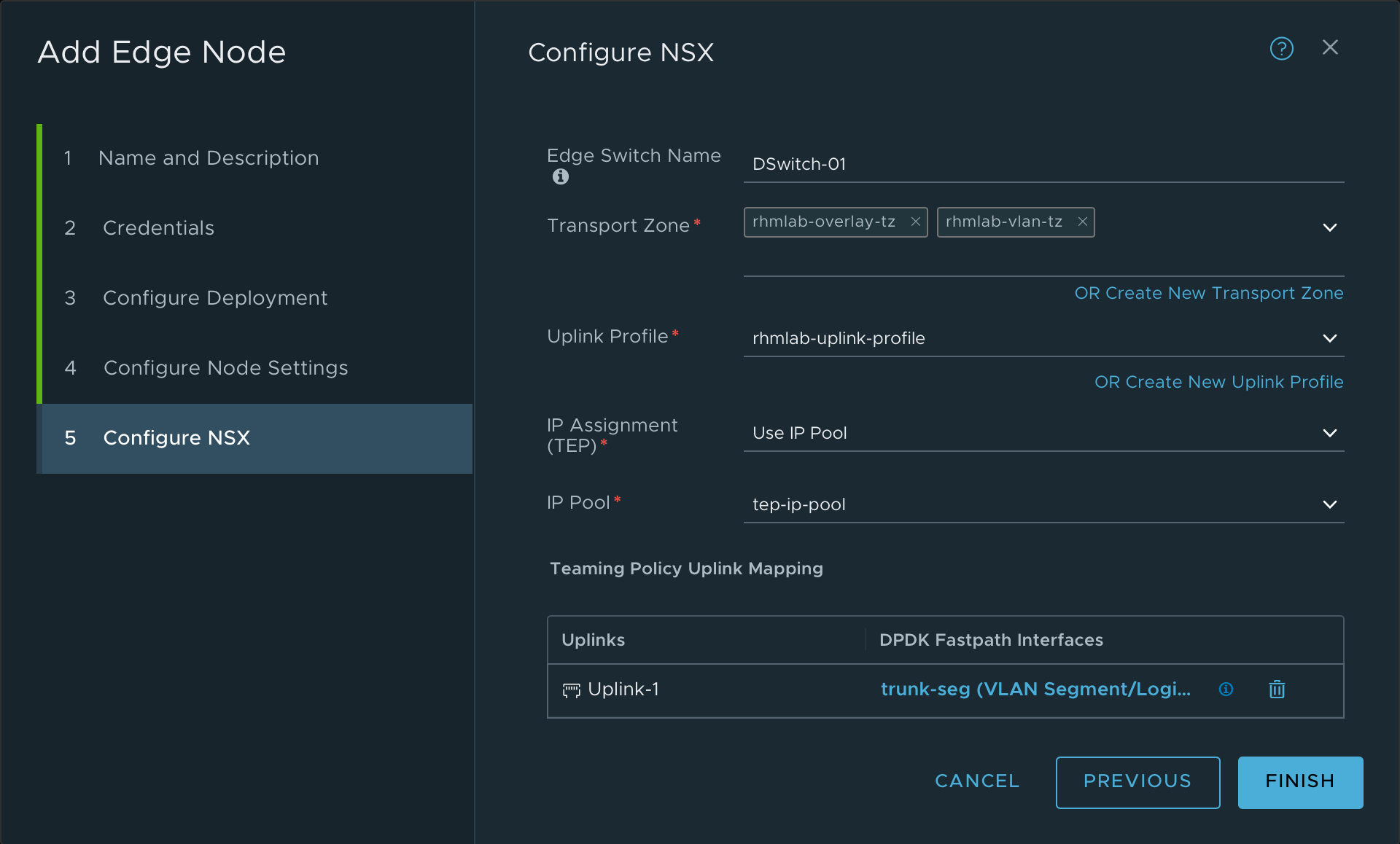

Now we can deploy our Edge VM(s). I'm using Medium sized VMs in my environment. Note that the Edge VMs are not strictly necessary for the test we'll perform later on with connecting two VMs, but if we want to use some services later on, like DHCP, Load Balancing and so on, we'll need them.

Note the NSX config, where we set the switch name, the Transport Zones we created, the Uplink profile, the IP pool and finally we use the newly created Trunk segment for the Edge uplink

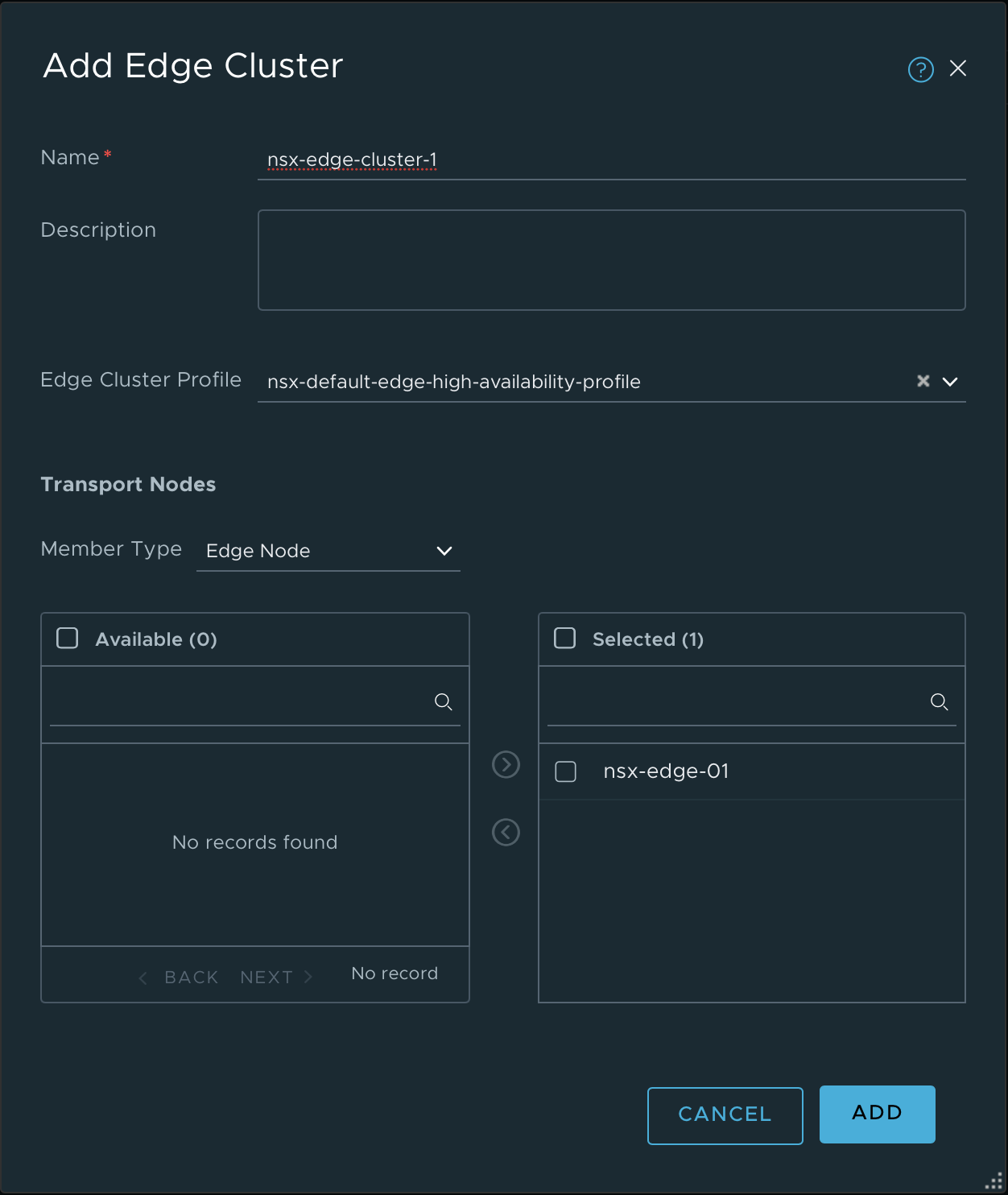

Edge cluster

We'll also create an Edge cluster and add the Edge VM to it

Summary

Wow, this was a lot of configuring, but that was also the whole point of doing this blog post. Stuff like this is learnt best by getting your hands dirty and doing some actual work. And I learn even better when I'm writing and documenting it as well.

In the next blog post we'll test the fabric to see if what we've done is working. We'll also try to get some external connectivity to our environment.

Hopefully this post can help someone, if not it has at least helped me.

Thanks for reading!