Tanzu Mission Control - Policies

Overview

In a few previous posts I've been playing around with Tanzu Mission Control, TMC for short. TMC is VMware's SaaS offering for managing Kubernetes clusters across different clouds and providers.

In this post we'll take a look at Policies in Tanzu Mission Control and how we can use them with our Kubernetes clusters.

What are Tanzu Mission Control Policies?

TMC lets you create various policies for controlling different aspects of your clusters. These policies help us manage the operations and the security of our resources. The types of policies (currently) available are:

- Access policy: use predefined roles to control access to resources

- Image registry policy: specify the source registries from which an image can be pulled

- Network policy: define how pods communicate, either with each other or with other network resources

- Quota policy: constrain the resources used in a cluster

- Security policy: set constraints on a cluster to define what pods can do and access

- Custom policy: implement policies not covered by the built-in types

Note that which policies are available, and what you can do with them, depends on your license. Check out the full comparison chart for details.

Where can we specify our policies?

The policies we define can be applied at different levels of the hierarchy:

- Organization

- Object groups (cluster groups and workspaces)

- Kubernetes objects (clusters and namespaces)

A policy defined at a higher level is inherited by the lower levels of the corresponding hierarchy.

Note that not all policy types can be used at every level/resource.
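To make the inheritance idea concrete, here is a minimal Python sketch that models the hierarchy as a simple parent chain, where a namespace picks up every policy defined above it. The `Node` class, the `effective_policies` function and all names in it are hypothetical illustrations, not TMC's data model or API, and the sketch ignores which policy types are allowed at which level.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical node in a TMC-style hierarchy (org, workspace or namespace)."""

    name: str
    parent: Optional["Node"] = None
    policies: List[str] = field(default_factory=list)


def effective_policies(node: Node) -> List[str]:
    """Collect the policies defined on a node and all of its ancestors."""
    chain = []
    current: Optional[Node] = node
    while current is not None:
        chain.append(current)
        current = current.parent
    # Walk top-down so organization-level policies are listed first.
    return [policy for level in reversed(chain) for policy in level.policies]


org = Node("my-org", policies=["org-access-policy"])
production = Node("Production", parent=org, policies=["allow-internal-registry"])
app_ns = Node("production-app", parent=production)

print(effective_policies(app_ns))  # ['org-access-policy', 'allow-internal-registry']
```

The namespace defines no policies of its own, yet it ends up with both the organization-level and the workspace-level policy.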

How can we use this?

In this blog post we'll create an image registry policy that controls which registries we are allowed to use. Our use case is that our production namespaces are only allowed to pull from an internal image registry. Another use case might be to block images that use the latest tag.
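As an aside on that second use case: checking for the latest tag is subtler than searching for the string ":latest", because an image reference without a tag (like `nginx`) implicitly means `latest`. The sketch below, with hypothetical helper names, shows the parsing involved. It only illustrates the idea and is not how TMC or Gatekeeper implements such a rule.

```python
from typing import Optional


def image_tag(image: str) -> Optional[str]:
    """Return the tag of an image reference, or None if it is pinned by digest only."""
    # Drop any digest suffix such as "@sha256:..."; remember whether one was present.
    name, at, _digest = image.partition("@")
    # Only look for ':' in the last path component, so a registry port
    # (e.g. "harbor.corp.local:8443/app") is not mistaken for a tag.
    last_component = name.rsplit("/", 1)[-1]
    if ":" in last_component:
        return last_component.rsplit(":", 1)[1]
    # No tag and no digest: Kubernetes assumes the "latest" tag.
    return None if at else "latest"


def uses_latest(image: str) -> bool:
    return image_tag(image) == "latest"


for image in ["nginx", "nginx:latest", "nginx:1.25", "nginx@sha256:abc123"]:
    print(image, uses_latest(image))
```

Both `nginx` and `nginx:latest` are flagged, while a pinned version or a digest-only reference is not.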

Scenario

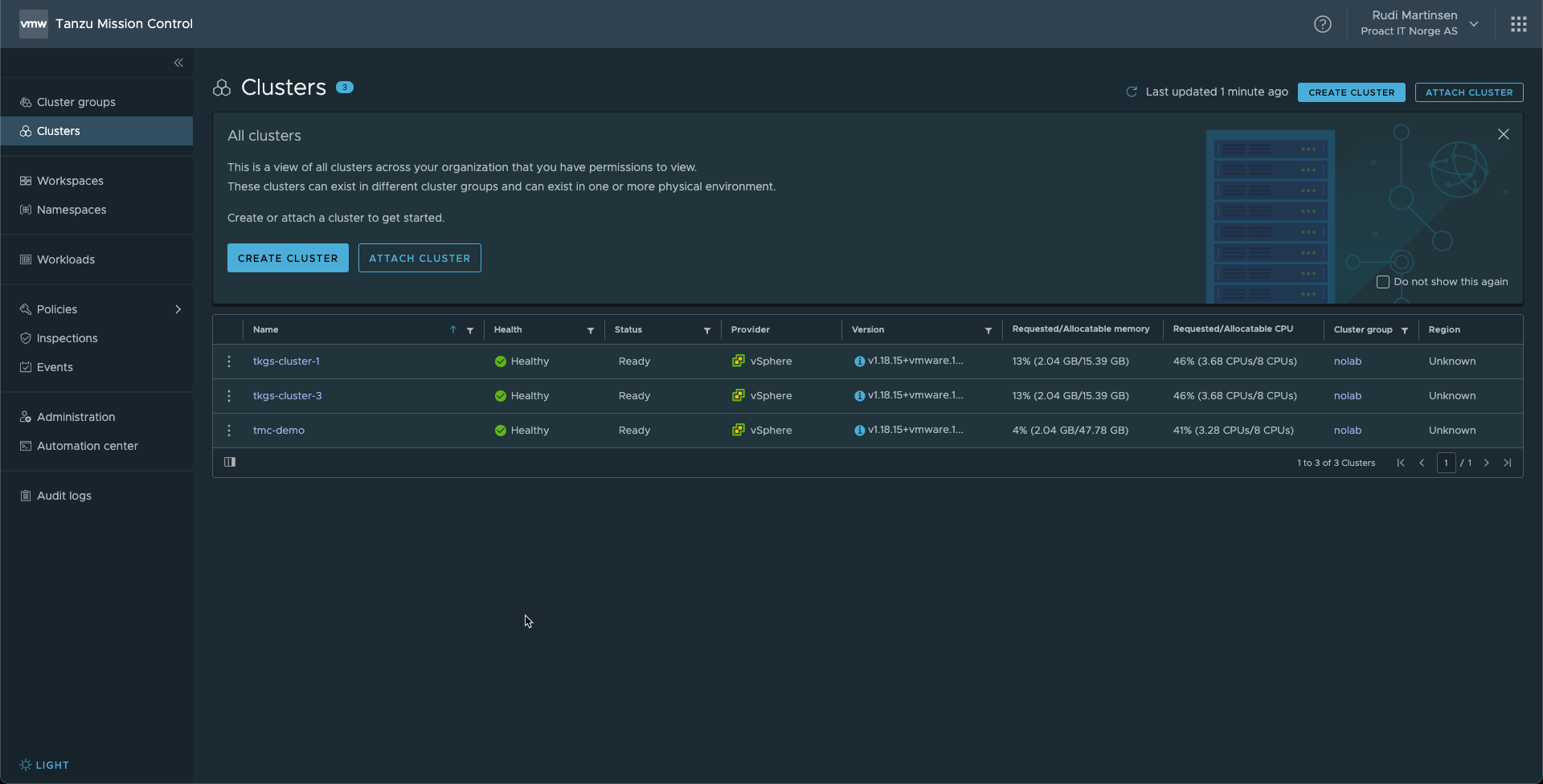

In this scenario we have some clusters attached to a TMC organization.

We also have an internal Harbor registry running in the environment, where our internal images are stored together with public images that we have tested and verified. (Note that this is not the embedded Harbor registry you get with Tanzu but a standalone installation; for this scenario it makes no difference.)

Now let's say that for some specific production-level namespaces in our clusters we need to ensure that only our internal registry is used. This is exactly what an image registry policy lets us do.

Workspaces

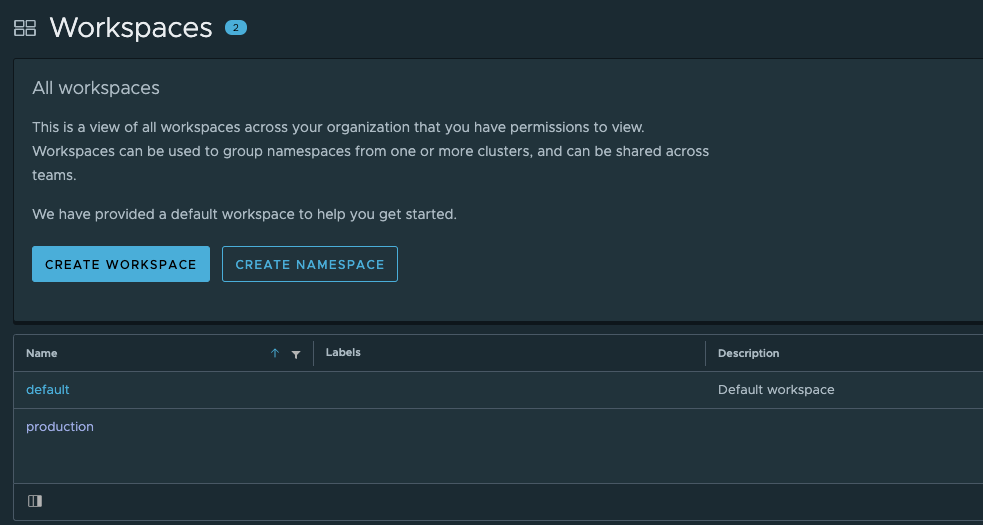

An image registry policy cannot be assigned to a cluster; it needs to be assigned to a workspace. So before we go ahead and create the policy, I'll create a Production workspace.

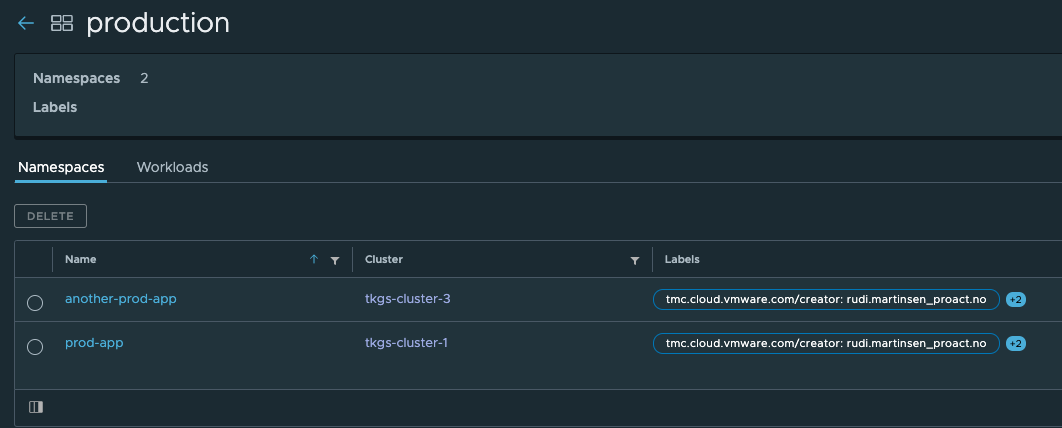

Next, we'll add the production (Kubernetes) namespaces in our clusters to the Production workspace in TMC.

Attach namespace to Workspace

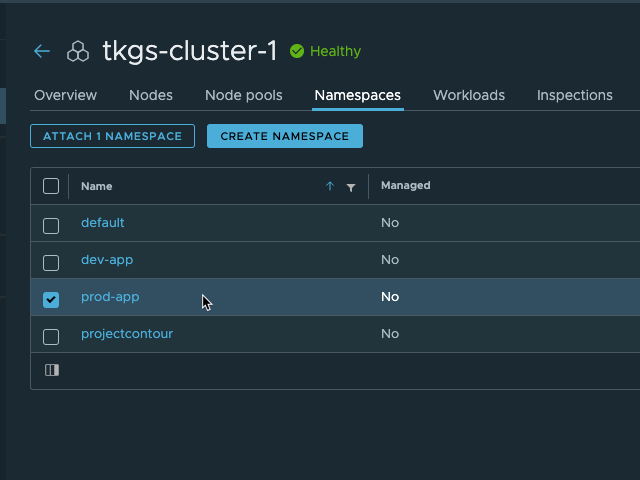

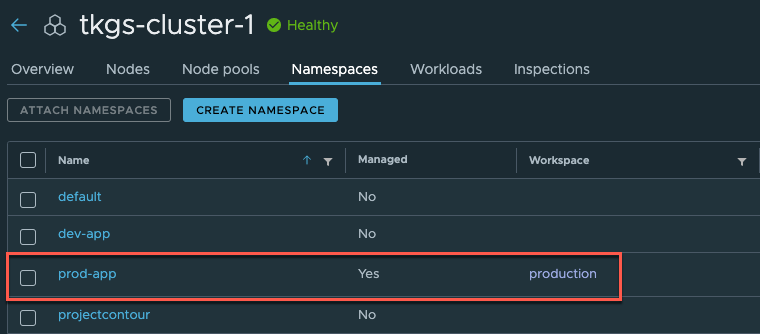

In the first cluster we already have a namespace for my "production app", which we need to attach to our workspace.

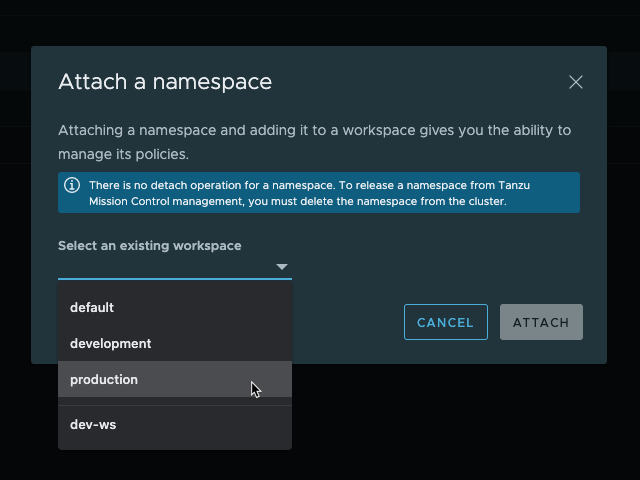

We'll hit Attach Namespaces and select the Production workspace.

The namespace is now attached to the Production workspace.

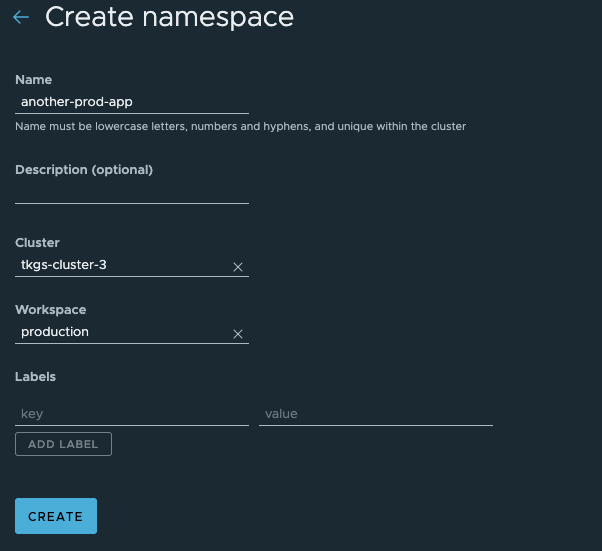

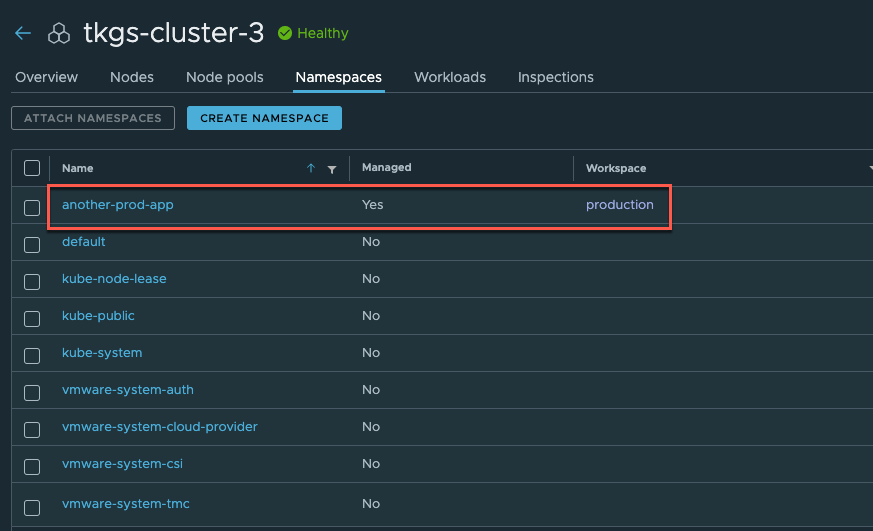

The second namespace (in a different cluster) doesn't exist yet, so we'll create it directly from TMC.

With that, the namespace is created and attached to the Production workspace.

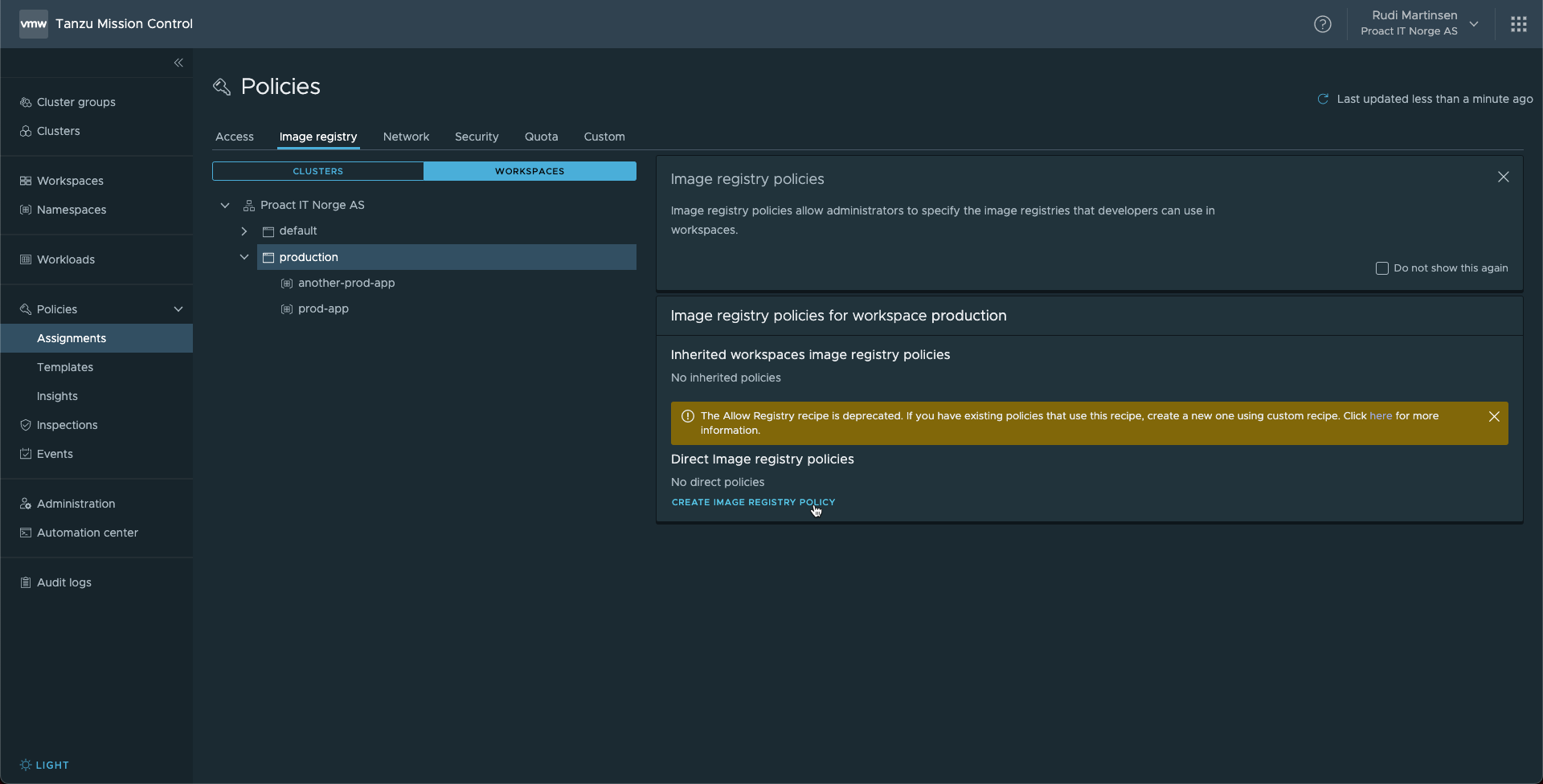

Create policy

With our namespaces attached to the Production workspace, we can go ahead and create the policy.

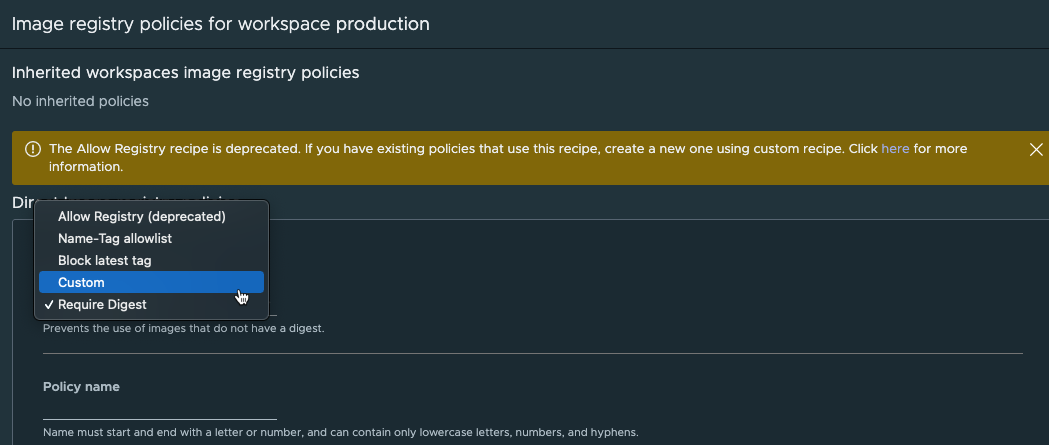

TMC comes with predefined templates for each policy type. Previously there was an "Allow Registry" template that fit this use case, but it has been deprecated. Instead, we'll select Custom as our template and define the rule ourselves.

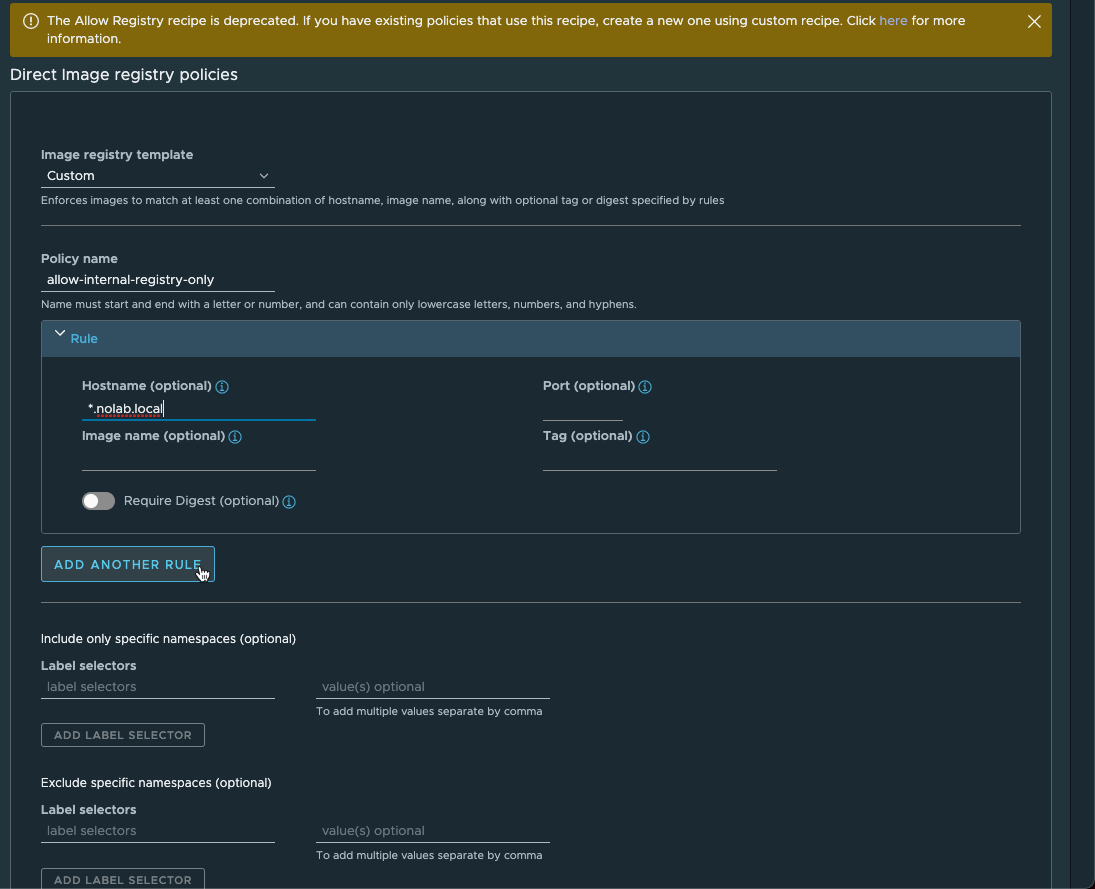

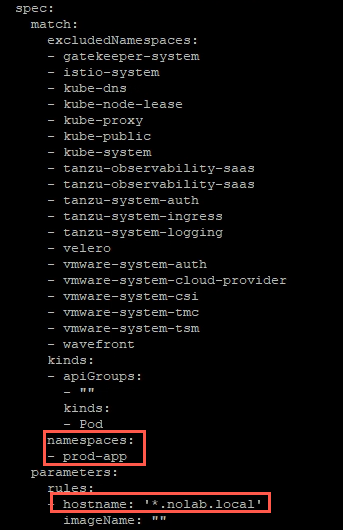

Next, we fill in the details of the policy. Note that we can use a wildcard in the Hostname field to match several different registries in the environment.
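To illustrate what a wildcard hostname buys us, here is a minimal Python sketch of matching a registry hostname against allowed patterns. It assumes shell-style wildcards via the standard `fnmatch` module; TMC's exact wildcard semantics may differ, and `*.corp.local` is a hypothetical internal domain.

```python
from fnmatch import fnmatchcase
from typing import List


def hostname_allowed(hostname: str, allowed_patterns: List[str]) -> bool:
    """Return True if a registry hostname matches any of the allowed patterns.

    Assumes shell-style wildcards (e.g. "*.corp.local"). DNS names are
    case-insensitive, so both sides are lowercased before comparing.
    """
    host = hostname.lower()
    return any(fnmatchcase(host, pattern.lower()) for pattern in allowed_patterns)


allowed = ["*.corp.local"]  # hypothetical internal registry domain
print(hostname_allowed("harbor.corp.local", allowed))  # True
print(hostname_allowed("docker.io", allowed))  # False
```

With `fnmatch`, `*` also matches dots, so `registry.eu.corp.local` would be allowed too, while the bare `corp.local` or a host with a port such as `harbor.corp.local:8443` would not.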

The wizard also offers label selectors, so we could just as well have set this policy at the Organization level and targeted namespaces by label instead of going through the workspace.
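Assuming the selectors in the wizard behave like standard Kubernetes label selectors, here is a minimal Python sketch of how such a selector decides which namespaces a policy targets. It follows the Kubernetes `LabelSelector` rules (all `matchLabels` pairs and all `matchExpressions` must hold), and the `env: production` label is a hypothetical example.

```python
from typing import Any, Dict


def matches_selector(labels: Dict[str, str], selector: Dict[str, Any]) -> bool:
    """Evaluate a Kubernetes-style label selector against an object's labels.

    All matchLabels pairs and all matchExpressions must hold (logical AND),
    so an empty selector matches every object.
    """
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    for expr in selector.get("matchExpressions", []):
        key, op = expr["key"], expr["operator"]
        values = expr.get("values", [])
        if op == "In":
            ok = labels.get(key) in values
        elif op == "NotIn":
            ok = labels.get(key) not in values  # a missing label also satisfies NotIn
        elif op == "Exists":
            ok = key in labels
        elif op == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unsupported operator: {op}")
        if not ok:
            return False
    return True


prod = {"matchLabels": {"env": "production"}}  # hypothetical namespace label
print(matches_selector({"env": "production", "team": "web"}, prod))  # True
print(matches_selector({"env": "dev"}, prod))  # False
```

The trade-off versus workspaces is that label-based targeting depends on every production namespace being labelled consistently.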

Note that even though we only need one rule for this policy, we must hit Add another rule to save the rule we specified. I'm not sure whether this is by design or a bug.

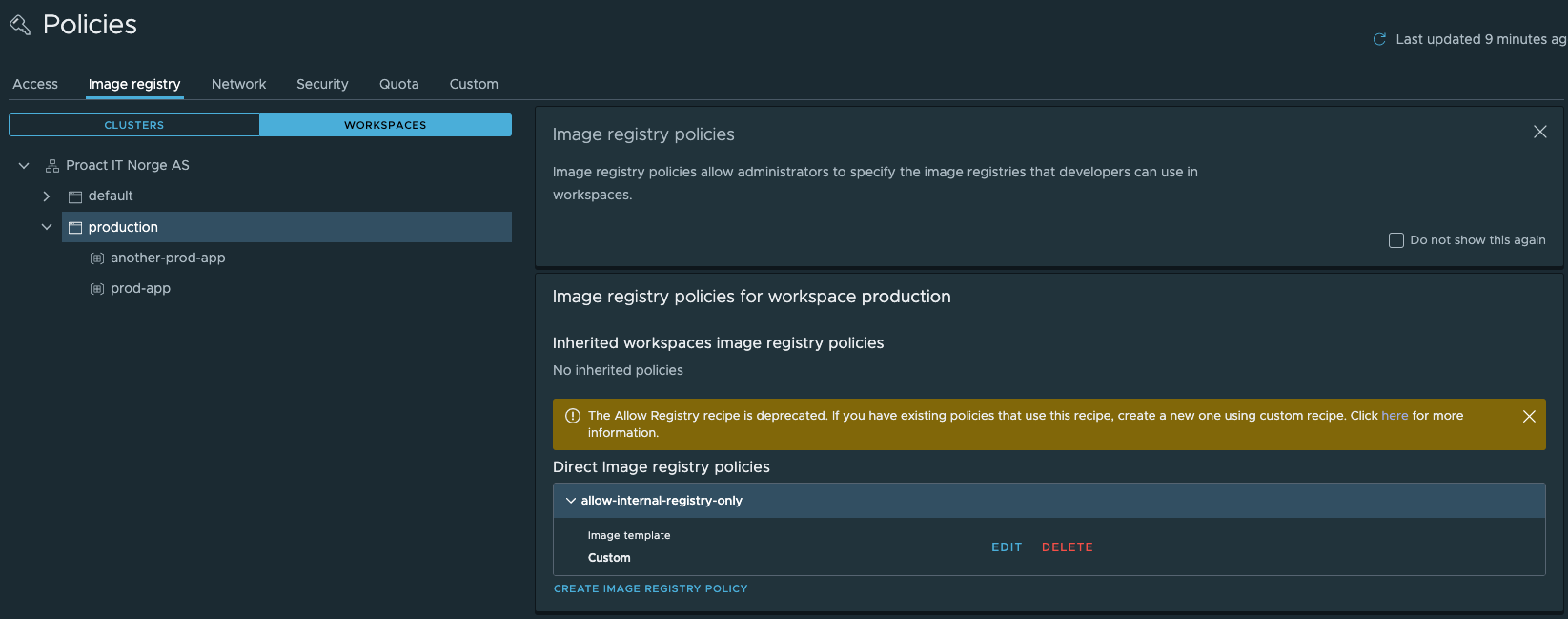

With that, we can hit the Create button and our policy is created.

What happens in my clusters?

So, with this in place, what happens on the Kubernetes side?

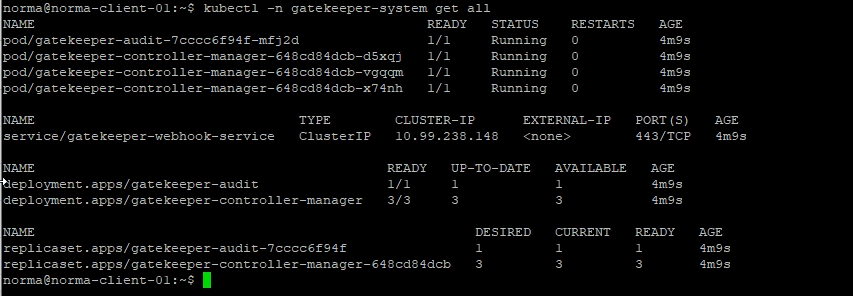

To enforce the policies, TMC uses the OPA Gatekeeper project. Gatekeeper is installed automatically in our clusters, and constraints are created based on the policies we define.

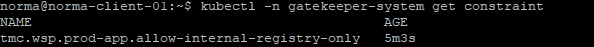

We can see that our single constraint has been created.

By checking the details of the constraint, we can find our rule and the namespace it matches against.
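The namespace scoping of a Gatekeeper constraint lives in its `spec.match` section. As a rough illustration, here is a minimal Python sketch of how the `namespaces` and `excludedNamespaces` match fields decide whether a constraint applies to a namespace. The constraint is hand-written: its kind, name and namespace are hypothetical placeholders rather than what TMC actually generates, and real Gatekeeper matching also considers kinds, label selectors, namespace selectors and wildcards, which this sketch skips.

```python
from typing import Any, Dict


def constraint_applies_to(constraint: Dict[str, Any], namespace: str) -> bool:
    """Decide whether a Gatekeeper-style constraint targets a namespace by name.

    Only looks at spec.match.namespaces and spec.match.excludedNamespaces,
    using exact names; an absent or empty namespaces list is treated as
    "no restriction by name" in this sketch.
    """
    match = constraint.get("spec", {}).get("match", {})
    if namespace in match.get("excludedNamespaces", []):
        return False
    included = match.get("namespaces")
    return not included or namespace in included


constraint = {
    "kind": "ExampleAllowedRegistries",  # hypothetical kind name
    "metadata": {"name": "allow-internal-registry"},  # hypothetical
    "spec": {
        "match": {
            "kinds": [{"apiGroups": [""], "kinds": ["Pod"]}],
            "namespaces": ["production-app"],  # hypothetical namespace name
        },
    },
}
print(constraint_applies_to(constraint, "production-app"))  # True
print(constraint_applies_to(constraint, "default"))  # False
```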

Does it work?

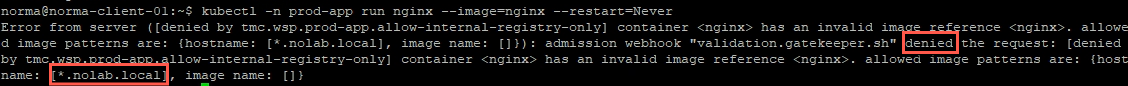

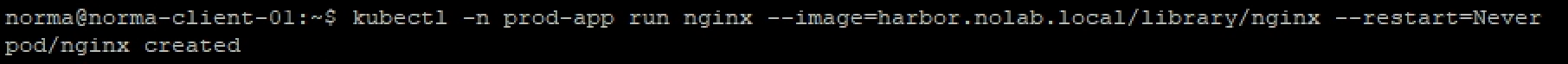

Let's try to run a simple Nginx pod in one of these namespaces and see what happens.

If we instead use an image residing in our internal Harbor registry, the Pod should be created.
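Conceptually, the check being enforced comes down to two steps: work out which registry an image reference points to (an unqualified name like `nginx` is pulled from Docker Hub), then compare that registry against the allowed hostnames. The Python sketch below is an illustration of that logic, not the Rego that TMC generates. The registry detection roughly follows Docker's convention that the first path component is a registry only if it contains a `.` or `:` or is `localhost`, and `*.corp.local` is a hypothetical internal domain.

```python
from fnmatch import fnmatchcase
from typing import List

DEFAULT_REGISTRY = "docker.io"  # where unqualified names such as "nginx" come from


def registry_of(image: str) -> str:
    """Return the registry hostname (including any port) of an image reference."""
    first, sep, _rest = image.partition("/")
    # The first component counts as a registry only if it looks like a host.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first.lower()
    return DEFAULT_REGISTRY


def admit(image: str, allowed_patterns: List[str]) -> bool:
    """Allow the image only if its registry matches one of the allowed patterns."""
    registry = registry_of(image)
    return any(fnmatchcase(registry, p.lower()) for p in allowed_patterns)


allowed = ["*.corp.local"]  # hypothetical internal Harbor domain
for image in ["nginx:1.25", "harbor.corp.local/library/nginx:1.25"]:
    # Prints "denied" for the Docker Hub image and "allowed" for the Harbor one.
    print(image, "->", "allowed" if admit(image, allowed) else "denied")
```

Note that a registry exposed on a non-default port (e.g. `harbor.corp.local:8443`) would need a pattern that includes the port.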

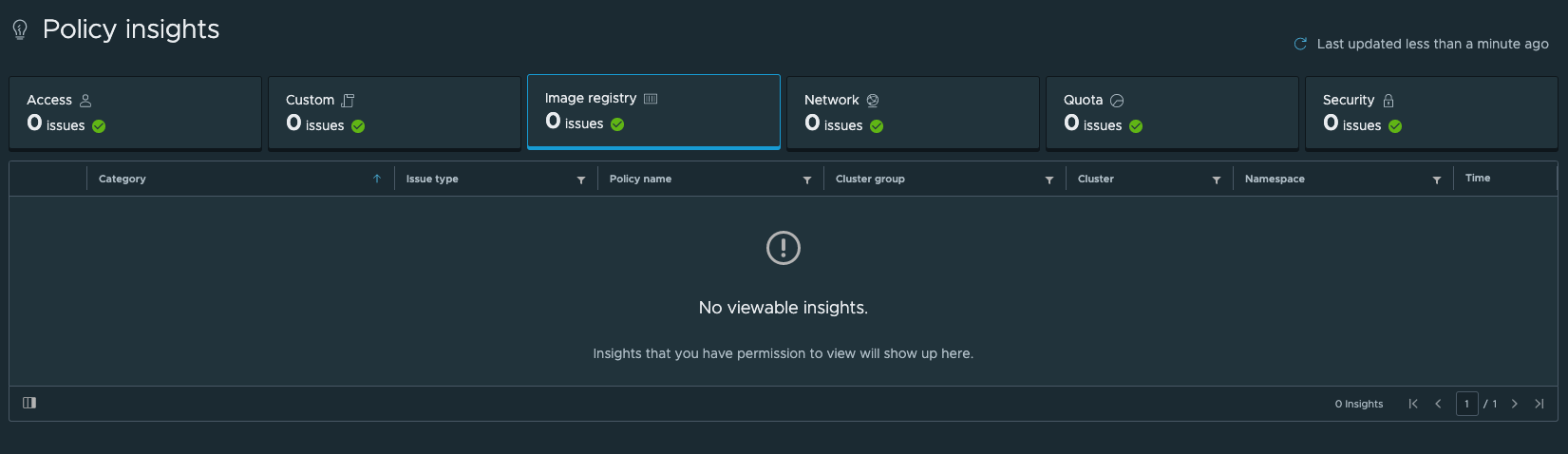

Insights

Back in TMC, the Insights view shows whether our policies have any issues. In this scenario everything is fine and no issues are reported.

Summary

This post has shown a simple use case for Tanzu Mission Control policies: restricting some namespaces from using public image registries. Even though we've used two Tanzu Kubernetes clusters in this post, we could have done the same with clusters running on AKS, EKS, vanilla Kubernetes or what have you.

There's a lot more to do with policies beyond controlling image registries, such as access policies, network policies and more.

Policies are one of the most interesting features of Tanzu Mission Control. Again, we're "only" using open-source projects under the hood (OPA Gatekeeper here, just as Velero is used for data protection), but TMC puts them in the context of multi-cluster operations and makes them simpler to use.