CKA Study notes - Services

Overview

This post is part of my Certified Kubernetes Administrator exam preparations. Time has come for the Networking concepts in Kubernetes.

Networking in Kubernetes is in a way simple, but it gets more complex once we add how to communicate in and out of the cluster, so I'll split the topic into three posts, covering Cluster & Pod networking, Services (this post), and Ingress.

In the CKA exam, the Services & Networking objective weighs 20%, so it is an important section.

Again, as mentioned in my other CKA Study notes posts, there's more to these concepts than I cover, so be sure to check the documentation.

Note #1: I'm using the documentation for version 1.19 in my references below, as this is the version used in the current (January 2021) CKA exam. Please check the version applicable to your use case and/or environment.

Note #2: This is a post covering my study notes preparing for the CKA exam and reflects my understanding of the topic, and what I have focused on during my preparations.

Services

Kubernetes Documentation reference

In Kubernetes, one way to expose applications (e.g. Deployments) is through Services.

Services are found through the cluster's built-in DNS service, while Kubernetes keeps track of the Pods that make up each Service and their IP addresses. Services are not only for exposing things outside of the cluster; they are just as much there for connecting services inside it, for instance connecting a frontend webserver to its backend application.

Since container environments can be very dynamic, it makes sense that Kubernetes handles this itself instead of relying on external DNS and IP management services.

Services also provide load balancing between the Pods that make up the Service.

DNS

Kubernetes Documentation reference

As mentioned, Kubernetes preferably handles service discovery through DNS, which is cluster-aware. The DNS service watches the Services in the cluster and updates its records accordingly. Normally DNS records have a relatively long Time-To-Live (TTL), which doesn't fit the rapid changes in a container environment.

Service names (and a few other names, like namespaces) need to be valid DNS labels.
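To make the naming rule concrete, here is a small shell sketch of the check as I understand it from the documentation: a Service name has to be an RFC 1035 label, meaning lowercase letters, digits and "-", starting with a letter, ending with a letter or digit, and at most 63 characters. The function name is made up for illustration; the API server does the real validation when you create the Service.

```sh
# Hypothetical helper: is the argument a valid RFC 1035 label, the rule
# Kubernetes applies to Service names?
is_valid_service_name() {
  NAME="$1" LC_ALL=C awk 'BEGIN {
    s = ENVIRON["NAME"]
    # lowercase letters, digits and "-", starting with a letter,
    # ending with a letter or digit, and at most 63 characters long
    ok = (length(s) <= 63) && (s ~ /^[a-z]([-a-z0-9]*[a-z0-9])?$/)
    exit !ok
  }'
}

for n in my-super-service nginx 1st-service MyService backend-; do
  if is_valid_service_name "$n"; then
    echo "$n: valid"
  else
    echo "$n: invalid"
  fi
done
```

Only my-super-service and nginx pass here; the others start with a digit, contain uppercase letters or end with a dash.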

A Service named my-super-service in the default namespace gets the DNS name my-super-service.default (the fully qualified name adds .svc and the cluster domain, by default cluster.local, giving my-super-service.default.svc.cluster.local).
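The naming pattern can be sketched as a tiny shell function. It assumes the default cluster domain cluster.local unless another one is passed in; service_fqdn is a hypothetical helper, not part of kubectl.

```sh
# Hypothetical helper: fully qualified DNS name of a Service,
# <service>.<namespace>.svc.<cluster-domain>
# Namespace defaults to "default" and the cluster domain to "cluster.local".
service_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "${2:-default}" "${3:-cluster.local}"
}

service_fqdn my-super-service default   # my-super-service.default.svc.cluster.local
```

Inside the cluster you rarely type the full name: Pods in the same namespace can usually resolve just my-super-service, and Pods in other namespaces my-super-service.default, because the Pod's DNS search list includes the namespace and cluster domain suffixes.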

The default DNS service in Kubernetes is CoreDNS.

Selectors

A Service normally finds its Pods through label selectors, and in its spec it defines how the Service maps requests (ports) to the app behind it.

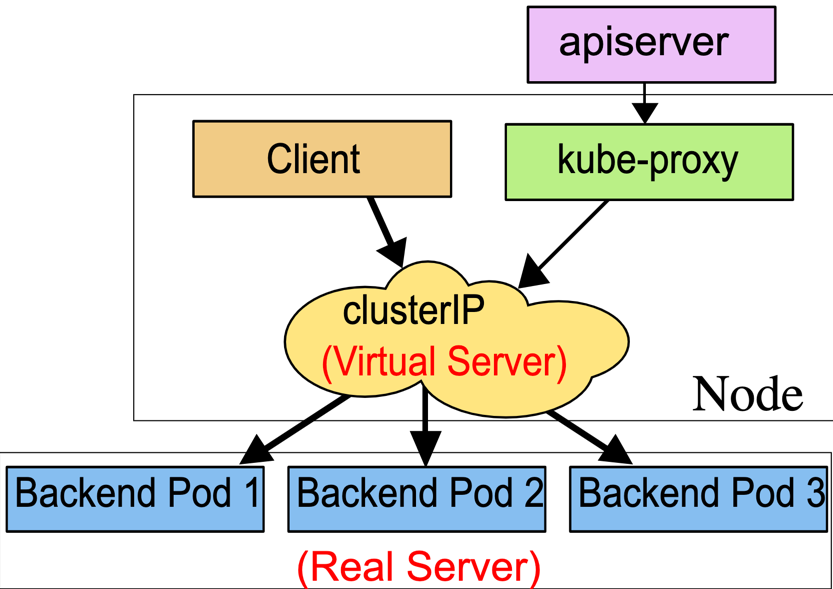

The Service is assigned an IP address, called the cluster IP. It is then the job of the kube-proxy component, which runs on every node, to proxy incoming requests to one of the Pods matching the Service's selector. kube-proxy constantly watches Services and their Endpoints for changes (e.g. when Pods are created or deleted) to keep the rules for each Service updated. kube-proxy can run in a couple of modes for this, e.g. iptables and ipvs mode, with the latter being the most recent one.

A figure of the process is below (fetched from the Kubernetes documentation).

Note: this describes the ipvs proxy mode; there are others available.
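In ipvs mode, kube-proxy hands the choice of backend to IPVS, and as far as I know the default IPVS scheduler is round-robin (rr). The shell sketch below is a hypothetical model of that behaviour only: it prints which endpoint each request would go to by rotating the list of endpoints, and the addresses are made up.

```sh
# Hypothetical model of round-robin backend selection (the rr scheduler).
#   round_robin REQUESTS ENDPOINT...
round_robin() {
  local requests="$1" i=1
  shift                             # remaining arguments: endpoint addresses
  [ "$#" -gt 0 ] || { echo "no endpoints" >&2; return 1; }
  while [ "$i" -le "$requests" ]; do
    echo "request $i -> $1"         # current head of the list serves this request
    set -- "$@" "$1"                # move it to the back of the list...
    shift                           # ...and drop it from the front
    i=$((i + 1))
  done
}

round_robin 5 10.44.0.1:80 10.36.0.2:80 10.44.0.2:80
```

Requests 1 to 3 go to the three endpoints in order, and requests 4 and 5 start over at 10.44.0.1:80 and 10.36.0.2:80.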

The controller for Services also updates an Endpoints object (with the same name) that corresponds to the Service.
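Conceptually, the selector-to-Endpoints step works like the shell sketch below: keep every Pod whose labels include all key=value pairs from the Service selector, and list its IP with the target port. As far as I know, Services only support equality-based selectors, so plain key=value matching is enough here. The Pod names, IPs and helper names are hypothetical; the real controller reads everything from the API server and also takes Pod readiness into account.

```sh
# Returns 0 if every key=value pair in the selector is present in the labels.
#   selector_matches "app=nginx" "app=nginx,tier=web"
selector_matches() {
  local selector="$1" labels="$2" pair
  local IFS=,                  # split the selector on commas
  for pair in $selector; do
    case ",$labels," in
      *",$pair,"*) ;;          # required label present, check the next one
      *) return 1 ;;           # a required label is missing
    esac
  done
  return 0
}

# Reads "name ip labels" lines on stdin and prints ip:port for matching Pods.
build_endpoints() {
  local selector="$1" port="$2" name ip labels
  while read -r name ip labels; do
    if selector_matches "$selector" "$labels"; then
      printf '%s:%s\n' "$ip" "$port"
    fi
  done
}

# Made-up Pods: two nginx replicas and one unrelated httpd Pod.
printf '%s\n' \
  'nginx-a 10.44.0.1 app=nginx,pod-template-hash=abc123' \
  'nginx-b 10.36.0.2 app=nginx,pod-template-hash=abc123' \
  'httpd-a 10.44.0.3 app=httpd' |
  build_endpoints app=nginx 80   # prints 10.44.0.1:80 and 10.36.0.2:80
```

Scaling the Deployment just adds another matching line to the input, and its address shows up in the output without the Service itself changing.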

Let's see some examples:

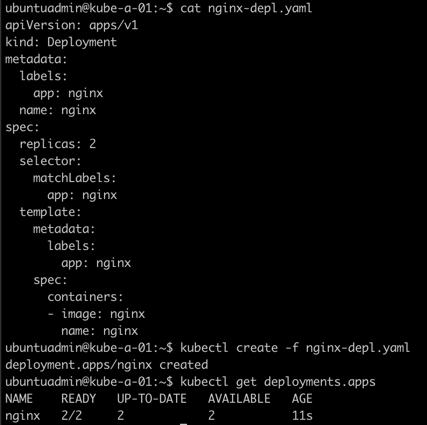

First we'll create a Deployment with an nginx image:

cat <file-name>
kubectl apply -f <file-name>
kubectl get deployments

Now let's expose this to the outside world through a Service with the kubectl expose deployment command. We'll then check the Services and the Endpoints created:

kubectl expose deployment nginx --port=80 --type=NodePort

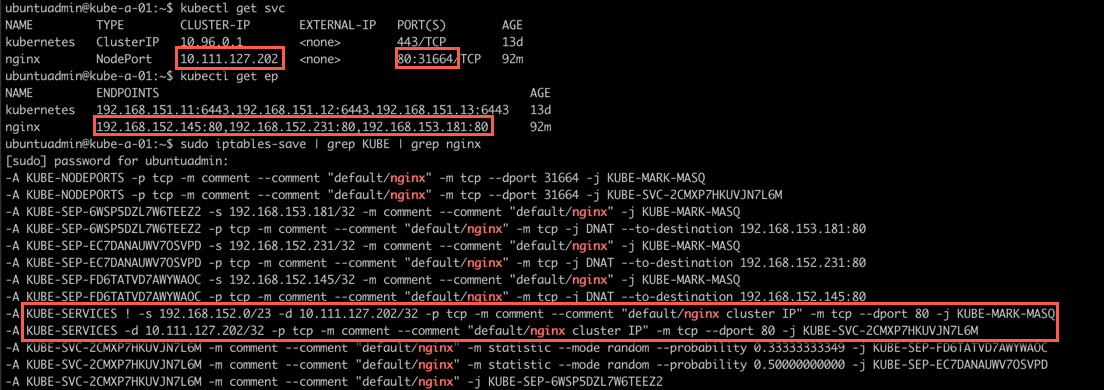

kubectl get svc
kubectl get ep
kubectl get pod -l app=nginx -o wide

As we can see, we have created a Service of type NodePort, which maps a port on our nodes (31664 in my example) to port 80 on the Pods. In addition, an Endpoints object with the same name (nginx) was created, which points to port 80 on two IP addresses.

If we check the Pods matching the label app=nginx, we can see that the two Pods correspond to those two IPs. This label selector is essentially how the Service keeps track of which Pods belong to it.

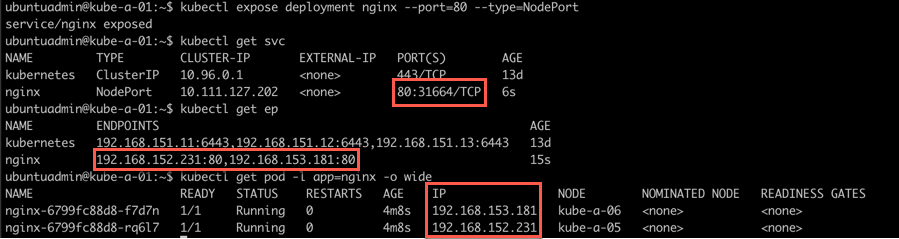

That means that if we now scale the Deployment to 3 replicas, the new replica should automatically be added to the Endpoints.

kubectl scale deployment nginx --replicas=3
kubectl get svc
kubectl get ep
kubectl get pod -l app=nginx -o wide

Notice that the Service is the same as before (same cluster IP and NodePort), which is exactly what we want. A frontend shouldn't care much (or at all) about how many backend servers exist, or which ones, so the Service should stay the same whether the app consists of 1 or 1000 Pods.

Let's take a look at the iptables rules created on a node to see what's happening:

kubectl get svc
kubectl get ep
sudo iptables-save | grep KUBE | grep nginx

Again, note that kube-proxy has more proxy modes available. Check the documentation for more info.
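In the iptables-save output, the KUBE-SVC chain for the Service typically has one rule per endpoint using the statistic module in random mode. As far as I understand kube-proxy's iptables mode, rule i of n matches with probability 1/(n-i+1) of the traffic that reaches it, and the last rule takes whatever is left, which gives every endpoint an equal share. The awk-based sketch below only computes those numbers; it doesn't touch iptables, and iptables-save shows the probabilities with more decimals.

```sh
# Sketch: per-rule probabilities and resulting traffic share for n endpoints.
#   rule_probabilities N
rule_probabilities() {
  awk -v n="$1" 'BEGIN {
    remaining = 1                      # fraction of traffic not matched yet
    for (i = 1; i <= n; i++) {
      if (i < n) {
        p = 1 / (n - i + 1)            # probability set on this rule
        share = remaining * p          # traffic this endpoint actually gets
        printf "rule %d: --probability %.5f (share of traffic %.5f)\n", i, p, share
      } else {
        share = remaining              # last rule has no statistic match
        printf "rule %d: no probability match (share of traffic %.5f)\n", i, share
      }
      remaining -= share
    }
  }'
}

rule_probabilities 3
```

With the 3 replicas from the scaling example, the rules get 0.33333 and 0.50000 followed by a catch-all rule, and each endpoint ends up with a 0.33333 share.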

Headless services

Kubernetes Documentation reference

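In Kubernetes we also have the ability to create headless Services, where the Service does not get a cluster IP of its own (spec.clusterIP is set to None). These are not handled by kube-proxy, so there's no load balancing or proxying; instead, when the Service has a selector, the cluster DNS returns the addresses of the Pods behind it directly.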

One of the use cases for Services without a cluster IP is pointing to an external service, for instance to a CNAME DNS record handled outside the cluster (the ExternalName type below).
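The difference in what a client sees can be sketched like this. It is a hypothetical model rather than a real DNS lookup, and the IP addresses are made up: a regular Service resolves to its single cluster IP, while a headless Service with a selector resolves straight to the IPs of the ready Pods behind it.

```sh
# Hypothetical model, not a real DNS lookup: the A records a client gets
# when resolving a Service name.
#   dns_answer CLUSTER_IP [POD_IP...]
dns_answer() {
  local cluster_ip="$1"
  shift
  if [ "$cluster_ip" = None ]; then
    # Headless: no virtual IP, DNS returns the Pod IPs directly and
    # the client chooses one (no kube-proxy load balancing).
    if [ "$#" -gt 0 ]; then
      printf '%s\n' "$@"
    fi
  else
    # Regular Service: one cluster IP, kube-proxy spreads the traffic.
    printf '%s\n' "$cluster_ip"
  fi
}

dns_answer 10.96.12.34 10.44.0.1 10.36.0.2   # regular Service: 10.96.12.34
dns_answer None 10.44.0.1 10.36.0.2          # headless: 10.44.0.1 and 10.36.0.2
```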

Service types

There are a few different Service types available. We've already used the NodePort type:

- ClusterIP
  - The default type. The Service is only exposed internally in the cluster, which is great for backend services etc.
- NodePort
  - The Service is exposed on a specific port on each Node in the cluster. A ClusterIP, which the NodePort routes to, is created automatically.
- LoadBalancer
  - The Service is exposed through an external load balancer, normally provided by a cloud provider. This type automatically creates a NodePort and a ClusterIP, which the load balancer routes to.
- ExternalName
  - Maps the Service to the contents of the externalName field (e.g. an external hostname) by returning a CNAME record.

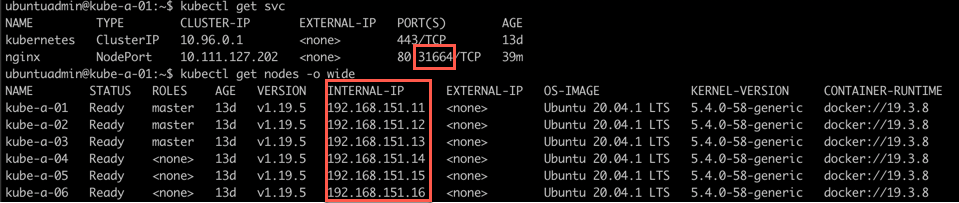

NodePort

Kubernetes Documentation reference

When creating a NodePort Service, a port is automatically assigned to it from a port range, by default 30000-32767. Every node proxies that port (the same port number on each node) to the Service. The NodePort can also be specified explicitly, but then you must make sure it is available and within the range.
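The two allocation cases (automatic or explicitly requested) can be sketched in shell as below. This is a hypothetical model of what the API server does, assuming the default 30000-32767 range; the real allocator tracks ports cluster-wide and its exact selection strategy may differ. Here it simply starts at a random point in the range and takes the first free port.

```sh
# Hypothetical model of NodePort allocation, assuming the default range.
NODEPORT_MIN=30000
NODEPORT_MAX=32767

# allocate_node_port REQUESTED [PORT_IN_USE...]
# Pass "" as REQUESTED to let the function pick a free port.
allocate_node_port() {
  local requested="$1"
  [ "$#" -gt 0 ] && shift
  local used=" $* "                     # ports already taken, space separated
  if [ -n "$requested" ]; then
    case $requested in
      *[!0-9]*) echo "port $requested is not a number" >&2; return 1 ;;
    esac
    if [ "$requested" -lt "$NODEPORT_MIN" ] || [ "$requested" -gt "$NODEPORT_MAX" ]; then
      echo "port $requested is outside $NODEPORT_MIN-$NODEPORT_MAX" >&2
      return 1
    fi
    case $used in
      *" $requested "*) echo "port $requested is already allocated" >&2; return 1 ;;
    esac
    echo "$requested"
    return 0
  fi
  # Nothing requested: start at a random offset and take the first free port.
  local size=$((NODEPORT_MAX - NODEPORT_MIN + 1)) offset i=0 candidate
  offset=$(awk -v size="$size" 'BEGIN { srand(); print int(rand() * size) }')
  while [ "$i" -lt "$size" ]; do
    candidate=$((NODEPORT_MIN + (offset + i) % size))
    case $used in
      *" $candidate "*) ;;              # taken, try the next port
      *) echo "$candidate"; return 0 ;;
    esac
    i=$((i + 1))
  done
  echo "no free port left in $NODEPORT_MIN-$NODEPORT_MAX" >&2
  return 1
}

allocate_node_port "" 31664                         # some free port in the range
allocate_node_port 30080 31664                      # explicitly requested free port
allocate_node_port 31664 31664 || echo "rejected"   # already in use
```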

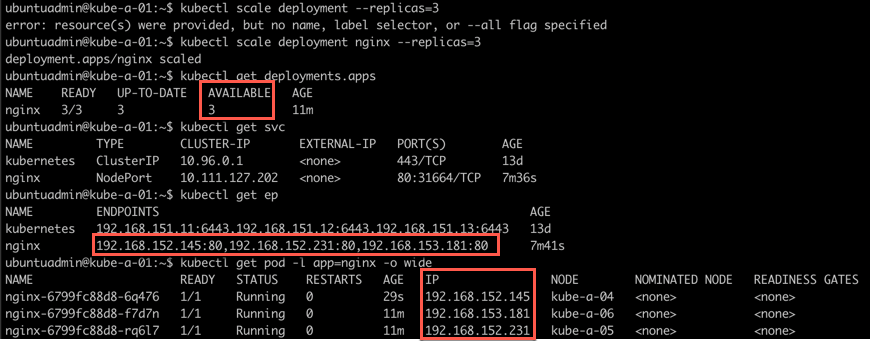

Using NodePort makes it easy to test the Service externally, as long as the nodes are accessible from where you want to test.

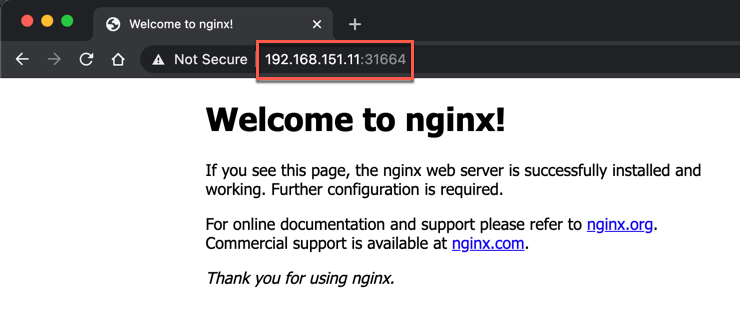

For instance, if I want to test the Service we created earlier, I can point my browser to the IP of a node and the assigned NodePort.

Now let's try to access the service with a browser from my laptop

As we can see, I've pointed my browser to the IP and port of one of the control plane nodes, and the web page loads. This also shows that the NodePort is opened on all nodes, even those not running a Pod serving the web page.

NodePort also gives us the ability to integrate with our own load balancer if we're not running on a cloud provider that supports the LoadBalancer type, or to combine the two approaches.

LoadBalancer

Kubernetes Documentation reference

On a cloud provider that supports the LoadBalancer type, a load balancer is automatically created for the Service. The provisioning process differs between providers, and it might take some time before it is ready.

The cloud provider is responsible for how the Service is load balanced and which configuration options are available.

Note that if you have a lot of LoadBalancer Services, you might want to keep an eye on your billing statement, as these resources will probably be an extra cost on top of the Kubernetes cluster cost.

Summary

Services are a critical component of a Kubernetes cluster and an example of how we need to think about our applications as decoupled objects. Again, take the example of a frontend that needs to reach its backend servers. We shouldn't need to keep track of the names and IPs of the backends, which nodes they are running on, or even whether they're running in the cluster at all. With Services, Kubernetes keeps track of this for us.

There is much more to Services, though I'm not sure how much of it we need to know for the exam. As always, make sure you check the documentation.

Another way to expose services outside of the cluster is through Ingress, which we'll take a look at in the next post.

If you want to learn more and enjoy video resources, I can recommend the free KubeAcademy PRO series from VMware; the Networking course has really helped me understand the concepts.

Thanks for reading! If you have any questions or comments, feel free to reach out.