CKA Study notes - Storage volumes

Continuing with my Certified Kubernetes Administrator exam preparations, I'm now going to take a look at how to work with storage in Kubernetes.

Note that this post was created in 2021 and things might have changed since then. I have a newer post covering some of the same content, which also uses a separate NFS server running on an Ubuntu Linux server. You can find that post here.

Implementing storage in a Kubernetes cluster is a big topic, and my posts will touch on only a few parts of it. Remember that Storage has a weight of 10% in the CKA exam curriculum, so I'm guessing it's an understanding of the concepts that is tested, rather than specific knowledge.

This first post will cover volumes and a few examples of how to mount them to a Pod. The next post will look at Persistent Volumes and Claims.

Note #1: I'm using documentation for version 1.19 in my references below, as this is the version used in the current (Jan 2021) CKA exam. Please check the version applicable to your use case and/or environment.

Note #2: This post covers my study notes from preparing for the CKA exam, and reflects my understanding of the topic and what I have focused on during my preparations.

Volumes

A volume can refer to different concepts in a Docker or Kubernetes scenario. Files in a container are ephemeral and will be lost when the container stops running or is restarted, which is a problem in the many cases where the application state needs to outlive a container or a Pod. In other cases we might want to share files between containers, for synchronization etc.

The Kubernetes volume concept can solve this by abstracting the actual location of said volume(s) away from the containers.

Kubernetes supports many different types of volumes, but in essence a volume is just a directory residing somewhere, with or without data already in it. A volume is defined on a Pod and is accessible to the containers running in that Pod. For a container to access a volume it needs a volumeMount, which refers by name to a volume in the Pod's volumes spec.
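To make the link between volumes and volumeMounts concrete, here's a minimal sketch of two containers in one Pod sharing files through a volume. It uses an emptyDir volume, which is created empty when the Pod is assigned to a node and deleted when the Pod is removed, so it covers sharing between containers but not persistence. The Pod, container and volume names, the busybox image and the commands are all hypothetical, chosen just for illustration.

```yaml
# Hypothetical example: two containers sharing a directory via an emptyDir volume
apiVersion: v1
kind: Pod
metadata:
  name: shared-files-pod          # hypothetical name
spec:
  containers:
  - name: writer
    image: busybox
    # Appends a timestamp to a file on the shared volume every 10 seconds
    command: ["sh", "-c", "while true; do date >> /data/out.txt; sleep 10; done"]
    volumeMounts:
    - name: shared-data           # must match a name under spec.volumes
      mountPath: /data
  - name: reader
    image: busybox
    # Just keeps running so we can exec in and read /input/out.txt
    command: ["sh", "-c", "sleep 3600"]
    volumeMounts:
    - name: shared-data           # same volume, mounted at a different path
      mountPath: /input
  volumes:
  - name: shared-data             # the volume both volumeMounts refer to
    emptyDir: {}
```

The same volume can be mounted at a different path in each container; what ties the containers together is the shared-data name reference.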

The available volume types range from cloud-provider-specific objects like AWS EBS volumes and Azure Disks, to Fibre Channel volumes, hostPaths and, as we have seen in a previous post, Kubernetes ConfigMaps.
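As a quick reminder of what the ConfigMap case looks like, here's a sketch of mounting a ConfigMap as a volume. It assumes a ConfigMap named my-config (hypothetical) already exists in the same namespace; with no items listed, each of its keys shows up as a file in the mount path.

```yaml
# Hypothetical example: mounting an existing ConfigMap as files in a container
apiVersion: v1
kind: Pod
metadata:
  name: config-pod                # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
    volumeMounts:
    - name: config-volume
      mountPath: /etc/app-config  # each ConfigMap key appears as a file here
      readOnly: true
  volumes:
  - name: config-volume
    configMap:
      name: my-config             # assumed to already exist in the namespace
```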

HostPath

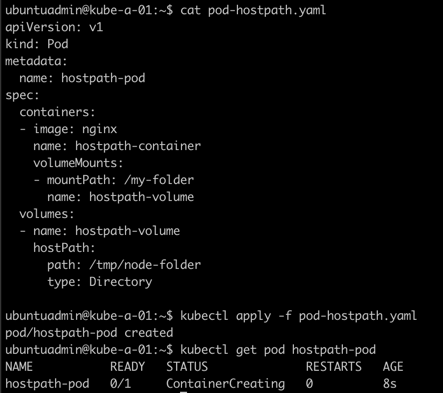

Let's take a look at an example where we mount a directory on the node (hostPath) to the Pod:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: <pod-name>
spec:
  containers:
  - image: <image>
    name: <container-name>
    volumeMounts:
    - mountPath: /<path-in-container>
      name: <name-of-volume>
  volumes:
  - name: <name-of-volume>
    hostPath:
      path: /<path-on-node>
      type: Directory
```

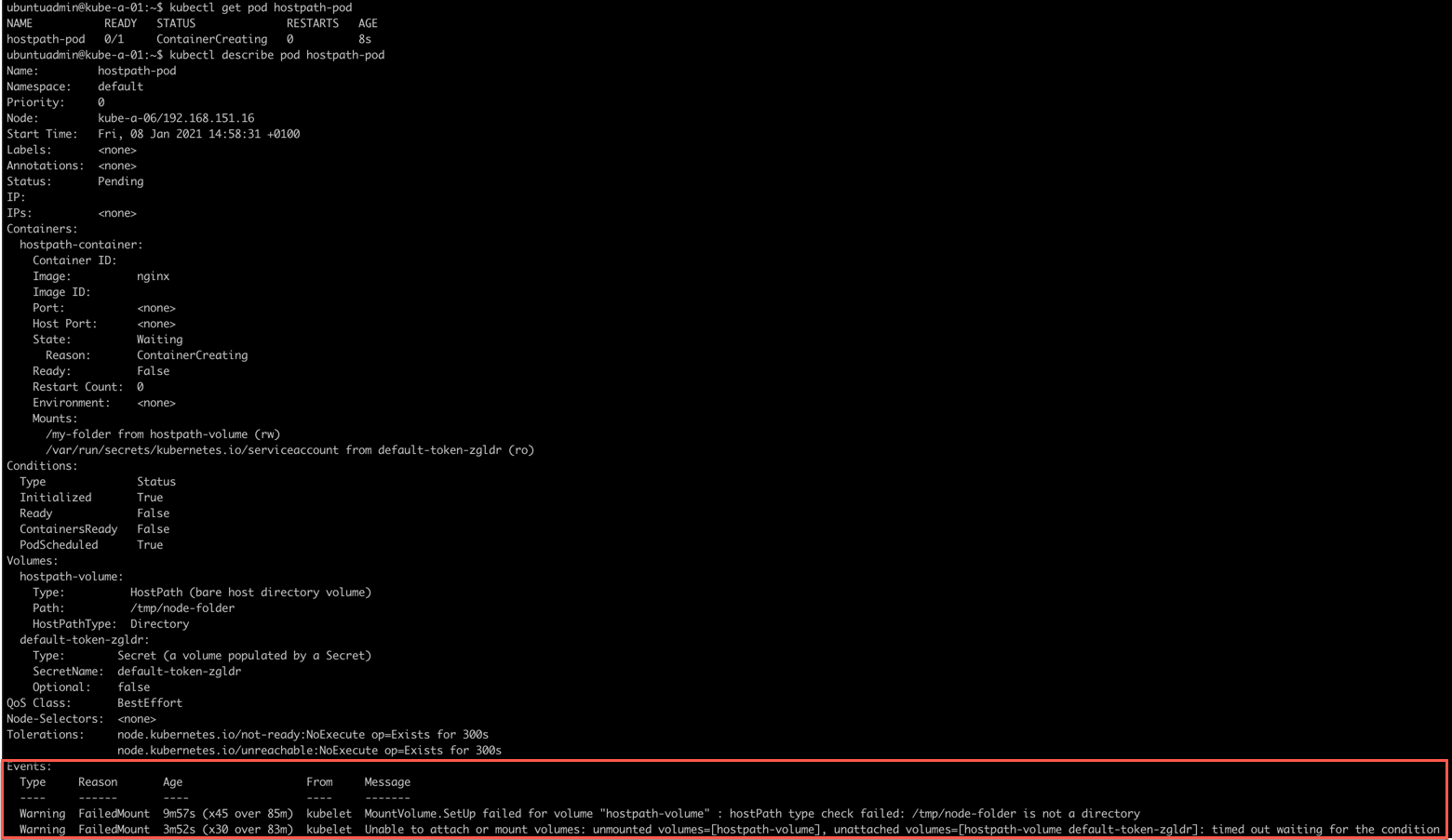

Notice that the container doesn't get created. Let's find out why.

We'll run kubectl describe pod <pod-name> to see some details about the pod.

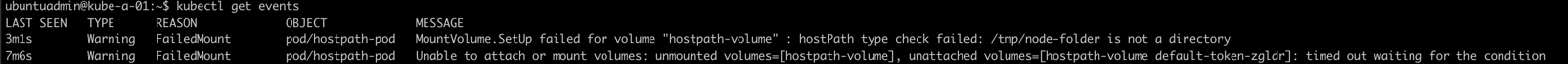

Notice the events at the bottom of this output. We can also pull the events directly. Note that this pulls events from the default (or specified) namespace, so if you have a lot going on in your environment you'd want to do some filtering.

```shell
kubectl get events
```

The error message indicates that it can't find the hostPath directory on the node the Pod is running on.

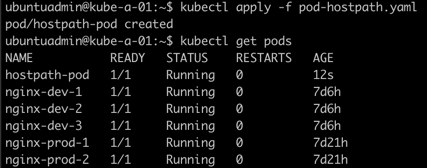
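The failure comes from type: Directory, which tells the kubelet that a directory must already exist at the given path on the node. In this post I create the directory by hand, but as an alternative the hostPath type can be set to DirectoryOrCreate, in which case an empty directory (permissions 0755) is created on the node if nothing exists at that path. A minimal sketch of that variant, keeping the placeholders from the hostPath manifest above:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: <pod-name>
spec:
  containers:
  - image: <image>
    name: <container-name>
    volumeMounts:
    - mountPath: /<path-in-container>
      name: <name-of-volume>
  volumes:
  - name: <name-of-volume>
    hostPath:
      path: /<path-on-node>
      # Create an empty directory on the node if nothing exists at the path
      type: DirectoryOrCreate
```

Keep in mind that this only creates the directory on the node where the Pod is scheduled; it doesn't make any content available on the other nodes.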

So, let's delete the pod, create the local directory on our worker nodes, and recreate the pod.

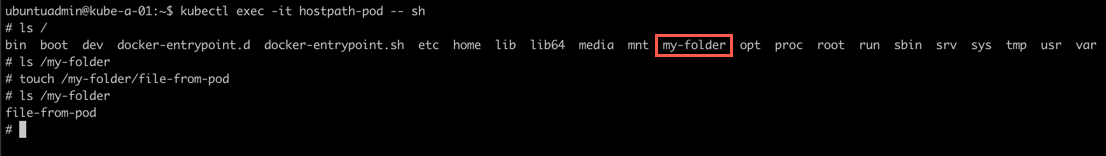

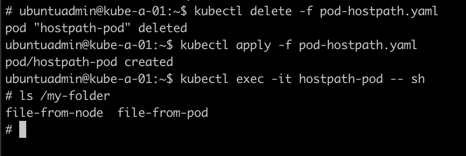

Now let's examine the Pod and check if the directory is available by jumping into a shell inside the container:

```shell
kubectl exec -it <pod-name> -- sh
```

Nice, we have the directory available and we created a file inside the container. Let's see if that file is available on our host.

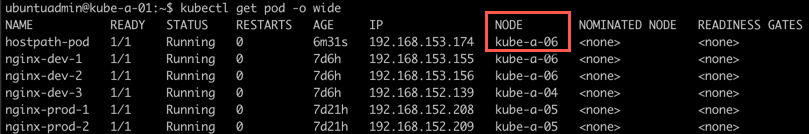

First, we'll find the node our pod is running on:

```shell
kubectl get pod -o wide
```

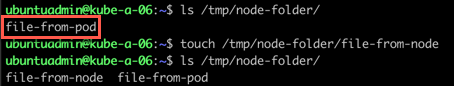

Now, let's examine the directory locally on the node.

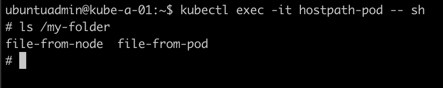

Great, our file was there. We've also created a file locally on the node, so let's verify that we can access that file from the container.

As a final test, let's delete the pod and recreate it to see if we still have access to our files.

Perfect. Everything seems fine. But with hostPath we're accessing files locally on a node. What if the pod was created on a different worker node?

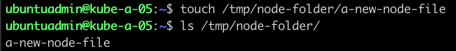

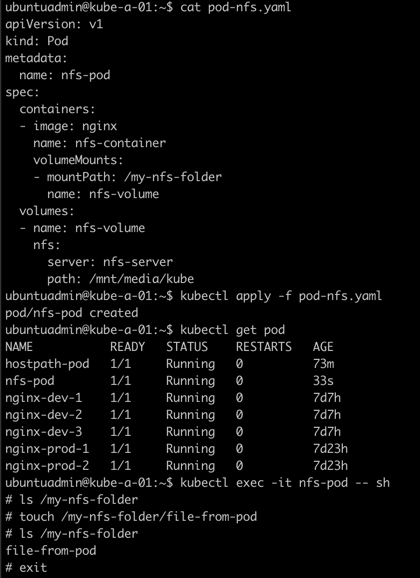

Let's create the directory on one of the other worker nodes, with a new file as the single item in that directory. Then we'll use the nodeName field to point the new Pod to this node.

So, we'll delete the existing pod, add the specific node in spec.nodeName, recreate the Pod, and then check the directory from the container.
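The edited manifest could look roughly like the sketch below: the same hostPath Pod as before with spec.nodeName added. Here <node-name> is a placeholder for one of the worker node names as listed by kubectl get nodes. When nodeName is set, the scheduler ignores the Pod and the kubelet on that node runs it directly.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: <pod-name>
spec:
  nodeName: <node-name>           # run on this specific node, bypassing the scheduler
  containers:
  - image: <image>
    name: <container-name>
    volumeMounts:
    - mountPath: /<path-in-container>
      name: <name-of-volume>
  volumes:
  - name: <name-of-volume>
    hostPath:
      path: /<path-on-node>       # resolved on the node named above
      type: Directory
```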

```shell
kubectl delete -f <file-name>

cat <file-name>

kubectl apply -f <file-name>

kubectl get pod

kubectl exec -it <pod-name> -- sh

# inside the pod
ls /<path-in-container>
```

Here we can see how hostPath might be a problem, as the actual directory is local to each node. HostPath is fine for creating files that could be referenced by other services, maybe for logging purposes, or for fetching files that you know are present on all nodes. But in many cases you need a volume that belongs to your application and can be accessed by its pods and containers regardless of which node they're running on.
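As an example of the "files present on all nodes" case, here's a sketch of a Pod that mounts the node's /var/log directory read-only, the way a log-reading agent might. The Pod name, container name, image and mount path are hypothetical, and /var/log is assumed to exist on every node.

```yaml
# Hypothetical example: read-only access to the node's logs through hostPath
apiVersion: v1
kind: Pod
metadata:
  name: log-reader                # hypothetical name
spec:
  containers:
  - name: log-reader
    image: busybox
    command: ["sh", "-c", "sleep 3600"]  # keep running so we can exec in and look around
    volumeMounts:
    - name: node-logs
      mountPath: /host/var/log
      readOnly: true              # the container can read, but not modify, the node's logs
  volumes:
  - name: node-logs
    hostPath:
      path: /var/log
      type: Directory             # expected to already exist on the node
```

This works because every node has its own /var/log; it doesn't help when an application needs the same data no matter which node its Pod lands on.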

For this we'll take a look at using shared storage.

Shared storage

With remote storage, Kubernetes can automatically mount and unmount storage for the Pods that need it. Obviously the remote storage needs to be accessible from the nodes.

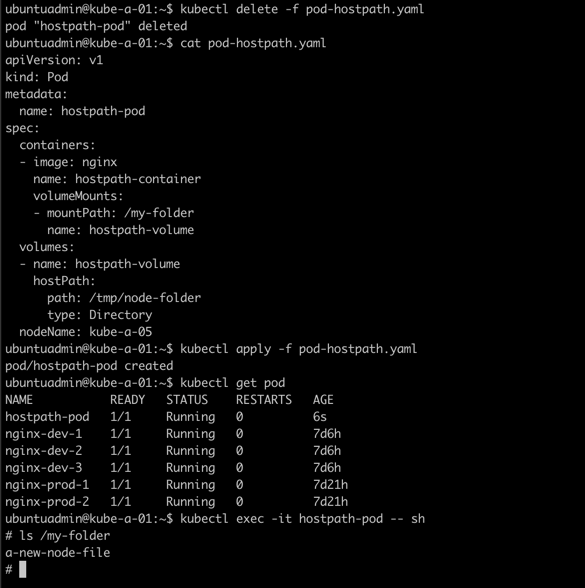

As mentioned above, Kubernetes supports a lot of different volume types. I'll use an NFS server in my example.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nfs-pod
spec:
  containers:
  - image: nginx
    name: nfs-container
    volumeMounts:
    - mountPath: /my-nfs-folder
      name: nfs-volume
  volumes:
  - name: nfs-volume
    nfs:
      server: nfs-server
      path: /mnt/media/kube
```

We'll create the pod, jump into the container shell, and access the folder on the underlying NFS server.

As long as our nodes have access to this NFS server, our Pod should be able to run on any of them.
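To see this in action, here's a sketch of a Deployment running two replicas, which may be scheduled on different nodes, all mounting the same NFS export as the Pod above. The Deployment name and labels are hypothetical, and nfs-server and /mnt/media/kube are the server and path from my example, so swap in your own. The nodes also need NFS client tooling installed (e.g. the nfs-common package on Ubuntu) for the kubelet to mount the share.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-deployment            # hypothetical name
spec:
  replicas: 2                     # the replicas may land on different worker nodes
  selector:
    matchLabels:
      app: nfs-demo
  template:
    metadata:
      labels:
        app: nfs-demo             # must match spec.selector.matchLabels
    spec:
      containers:
      - image: nginx
        name: nfs-container
        volumeMounts:
        - mountPath: /my-nfs-folder
          name: nfs-volume
      volumes:
      - name: nfs-volume
        nfs:
          server: nfs-server      # same NFS server as the nfs-pod example
          path: /mnt/media/kube
```

Running kubectl get pod -o wide should show which node each replica is running on, and a file created in /my-nfs-folder from one replica should be visible from the other.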

Note: If you don't have a shared storage solution to test with, take a look at this NFS server example, which deploys an NFS server running in a container!

Persistent volumes

In the next post we'll take a look at working with Persistent Volumes and claims.