Dumping an IPAM solution to GitHub with vRealize Orchestrator

In this post we'll take a look at the phpipam API, the GitHub API and using vRealize Orchestrator to connect the two.

We'll see how we can extract IP address assignments from an IPAM solution with vRealize Orchestrator and then push that data to a (private) GitHub repository.

The challenge

In my employer's lab environment we have an IPAM solution handling a small number of subnets. The IPAM solution we use is the open-source phpipam (phpipam.net).

As in most environments, the IPAM solution is one of the key components as it handles and records the IP address assignments. And since this is a lab environment, with several of us testing all kinds of stuff which may or may not live to see tomorrow, we need something to keep track of which addresses are in use.

Another "feature" of a lab environment is that it's obviously not as stable as a production environment and tends to have some downtime. And since a few of us share the lab, and the lab is far from our primary concern in our day-to-day roles as consultants, we need a way to know what IP addresses to use in our troubleshooting and startup procedures.

One of the first pieces of information we need, if not the first, when diving in to fix a problem in the lab is the IP addressing info. "What is the IP address of the out-of-band hardware management system?" is a question that comes up almost every time, especially if the guy who normally handles the physical hardware isn't available.

So we discussed how best to document this. It needed to be accessible to all of us even when the lab environment was down, and preferably even when we're not connected to the company network, while still being available only to a small number of people. It should of course be dynamically updated (for the HW mgmt use case alone a static document would have done), searchable and backed up, and preferably versioned so we could see what had changed.

We discussed where to put the data: a csv file on a company fileshare, OneNote, OneDrive/Dropbox, an S3 bucket, Slack(!) etc. Several of these meet many of the requirements, but suddenly it hit us (credit @h0bbel): Why not commit it to a git repo?

This makes the data accessible and available outside of the lab environment, we can use private repositories shared only with the people we want, and it gives us versioning. Again, since this is just a lab environment there's really not a lot of "private" info in there, so we're fine with storing the data on GitHub.

The setup

So let's bring out and connect the building blocks.

We have phpipam, which has an API, and we have a private GitHub repo which can be managed through an API. Now we needed to connect these, and what better way than a "real-life" example to test the products we normally deploy in our lab?

We're going to use vRealize Orchestrator as the connector. In the lab environment we're running vRO 8.1 embedded in a vRA 8.1 cluster. Since we're using the PowerShell runtime inside the scriptable tasks, we need the version embedded in vRA.

More specifically, we'll have a scheduled workflow that first pulls the IP addresses from IPAM and then pushes them to a csv file checked in to a private git repo on GitHub.

Preparing phpipam

phpipam has an API which can be activated through the Administration page. We'll need to set up an app id and an app code token that will be used when connecting to the API.

This requires phpipam to support https, and that the prettified URL structure is used. Check out the documentation for how to configure this. If you struggle with the SSL configuration, I've also used this post as a reference.

Preparing GitHub

GitHub has an API that can be used for committing stuff to repositories. It's enabled by default, but we need a way to authenticate.

At this point I'm using my personal GitHub account for authenticating and committing. I'll create an OAuth App that our code can use headlessly after the initial configuration.

For more information about creating an OAuth App, check the documentation.

After creating the app it needs to be authorized. For this I'm going with the device flow. Create a POST request with your client_id (retrieved when creating the OAuth App) and the scope (repo access is needed).

    POST https://github.com/login/device/code

    {
      "client_id": "<YOUR-CLIENT-ID>",
      "scope": "repo"
    }

The response will include a device_code and a user_code which we need later on.

    {
      "device_code": "3584d83530557fdd1f46af8289938c8ef79f9dc5",
      "user_code": "WDJB-MJHT",
      "verification_uri": "https://github.com/login/device",
      "expires_in": 900,
      "interval": 5
    }
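As a rough illustration of the first device flow call, here is a minimal Python sketch (the scripts in this post are PowerShell; Python is used here only for illustration). The function names are made up for this example. It sends the body as JSON as shown above and adds an Accept header so that GitHub replies with JSON rather than a form-encoded string.

```python
import json
import urllib.request

DEVICE_CODE_URL = "https://github.com/login/device/code"


def build_device_code_request(client_id: str, scope: str = "repo") -> urllib.request.Request:
    """Build (but do not send) the POST that starts the device flow.

    Hypothetical helper for illustration; not part of the original scripts.
    """
    body = json.dumps({"client_id": client_id, "scope": scope}).encode("utf-8")
    return urllib.request.Request(
        DEVICE_CODE_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Ask for a JSON reply; the default reply is form-encoded.
            "Accept": "application/json",
        },
    )


def request_device_code(client_id: str, scope: str = "repo") -> dict:
    """Send the request and return the parsed reply (device_code, user_code, ...)."""
    req = build_device_code_request(client_id, scope)
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Splitting "build" from "send" keeps the request easy to inspect without touching the network.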

Next, point a web browser to https://github.com/login/device and enter the user_code.

Now make another API call to retrieve the OAuth token for your client. You need to provide the client_id, device_code and the grant_type as input parameters.

    POST https://github.com/login/oauth/access_token

    {
      "client_id": "<YOUR-CLIENT-ID>",
      "device_code": "<DEVICE CODE RETRIEVED FROM PREVIOUS STEP>",
      "grant_type": "urn:ietf:params:oauth:grant-type:device_code"
    }

The response includes the access_token property which is your OAuth token that can be used when authenticating with the GitHub API.

    {
      "access_token": "e72e16c7e42f292c6912e7710c838347ae178b4a",
      "token_type": "bearer",
      "scope": "repo"
    }
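If you'd rather script this step than do it by hand, the token request can be repeated until the user has entered the code in the browser. The Python sketch below is one possible way to do that, not the code used in this post. It assumes the error values used by the device flow (authorization_pending while waiting, slow_down when polling too fast); the helper names and the injectable post and sleep parameters are hypothetical and exist only to keep the example testable.

```python
import json
import time
import urllib.request
from typing import Callable

TOKEN_URL = "https://github.com/login/oauth/access_token"
GRANT_TYPE = "urn:ietf:params:oauth:grant-type:device_code"


def post_json(url: str, payload: dict) -> dict:
    """POST a JSON body and return the parsed JSON reply."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json", "Accept": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


def poll_for_token(
    client_id: str,
    device_code: str,
    interval: int = 5,
    expires_in: int = 900,
    post: Callable[[str, dict], dict] = post_json,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll until the user has authorized the app, then return the access token."""
    payload = {
        "client_id": client_id,
        "device_code": device_code,
        "grant_type": GRANT_TYPE,
    }
    waited = 0
    while waited < expires_in:
        reply = post(TOKEN_URL, payload)
        if "access_token" in reply:
            return reply["access_token"]
        error = reply.get("error")
        if error == "slow_down":
            # The server asks us to back off; wait 5 seconds longer per attempt.
            interval += 5
        elif error != "authorization_pending":
            raise RuntimeError(f"device flow failed: {error}")
        sleep(interval)
        waited += interval
    raise TimeoutError("device code expired before the app was authorized")
```

The interval and expires_in defaults mirror the values in the device code response shown earlier.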

The solution

Now with the initial configuration in place we can start building out the solution, and as mentioned we'll use vRealize Orchestrator for running the code.

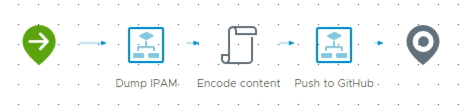

The code will be split in three parts: first a workflow for pulling data from phpipam, then a task for preparing the content for GitHub, and finally a workflow with the code for pushing the file to the git repo.

We could have done everything in one scriptable task, but this way we have some reusability for the different parts. The two workflows will be added to a parent workflow that also contains the task for encoding the content (this should probably be an action that also could be reused going forward.)

phpipam code

Let's check out the code for pulling stuff from phpipam. This is put in a scriptable task with a PowerShell runtime inside a vRO workflow.

First we need to pull all sections. We can think of these as folders (e.g. Customers, Lab, Internal etc). This is done with the sections URL.

    GET https://<phpipam url>/api/sections

Now, in my case, I'll fetch the ID of the specific section I want to pull networks from and use that in a new API call.

    GET https://<phpipam url>/api/sections/<sectionId>/subnets

Now I have all subnets for this section. I'll traverse all subnets and pull addresses for each of them. To pull the addresses I need the subnet id.

    GET https://<phpipam url>/api/subnets/<subnetId>/addresses

With that I can add details of each of the addresses together with the subnet to an output which will be an array of custom PS objects.

The workflow will output an array of Properties. That way we can reuse this workflow to push to other places later on if needed.
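The traversal described above (sections, then subnets, then addresses) could be sketched like this in Python. This is an illustration under assumptions, not the PowerShell from the workflow: the function names are hypothetical, the field names (id, name, subnet, mask, ip, hostname, description) and the "data" wrapper in the reply are what I'd expect from phpipam and should be checked against your version, and base_url is assumed to be the full API root for your app. The app code is assumed to be passed in a token header.

```python
import json
import urllib.request
from typing import Callable, Dict, List


def make_getter(base_url: str, app_code: str) -> Callable[[str], List[dict]]:
    """Return a function that GETs a path below the API root.

    Assumption: replies look like {"data": [...]} and the app code
    goes in a "token" header.
    """
    def get(path: str) -> List[dict]:
        req = urllib.request.Request(f"{base_url}/{path}", headers={"token": app_code})
        with urllib.request.urlopen(req, timeout=30) as resp:
            reply = json.loads(resp.read().decode("utf-8"))
        # An empty subnet may come back without a "data" key.
        return reply.get("data") or []
    return get


def collect_addresses(get: Callable[[str], List[dict]], section_name: str) -> List[Dict[str, str]]:
    """Walk section -> subnets -> addresses and flatten to one row per address."""
    sections = get("sections")
    section = next((s for s in sections if s.get("name") == section_name), None)
    if section is None:
        raise LookupError(f"section not found: {section_name}")

    rows = []
    for subnet in get(f"sections/{section['id']}/subnets"):
        for address in get(f"subnets/{subnet['id']}/addresses"):
            rows.append({
                "subnet": f"{subnet.get('subnet')}/{subnet.get('mask')}",
                "ip": address.get("ip"),
                "hostname": address.get("hostname") or "",
                "description": address.get("description") or "",
            })
    return rows
```

Passing the getter in as a parameter means the traversal logic can be exercised with canned data before pointing it at a real phpipam instance.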

Encode task

To be able to push a file to the GitHub API, the file contents need to be Base64 encoded.

So we have a task that iterates the array of objects pulled from phpipam, builds a new array of strings and finally Base64 encodes the content.

The task outputs a string that will be used to push to GitHub.
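A minimal Python sketch of such an encode step is shown here, assuming the rows are dictionaries and the target format is csv. The function names and column names are hypothetical; the actual task is written in PowerShell.

```python
import base64
import csv
import io
from typing import Dict, List


def to_csv(rows: List[Dict[str, str]], columns: List[str]) -> str:
    """Render the rows as csv text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=columns, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def encode_for_github(text: str) -> str:
    """Base64 encode the file contents as the contents API expects."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


if __name__ == "__main__":
    rows = [{"subnet": "10.0.0.0/24", "ip": "10.0.0.5", "hostname": "ilo01"}]
    print(encode_for_github(to_csv(rows, ["subnet", "ip", "hostname"])))
```

Using the csv module rather than joining strings by hand takes care of quoting when a description contains a comma.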

Push to GitHub

Finally we have a workflow that pushes the data to GitHub. This will also be one scriptable task with a PowerShell runtime.

The workflow has input parameters for the content to be pushed, the path to push to, the OAuth token and the commit details (committer username, committer email and commit message).

The API request for pushing a file needs to be a PUT request and is documented here. Notice that to update an existing file we also need to add the sha of the file to be replaced.

The script will therefore first try to fetch the path from the input parameter, and if something is found it will pull the sha of that file and add to the request body.

    GET https://api.github.com/repos/<org or user>/<repo>/contents/<file>
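The "look up the sha if the file already exists" step might look roughly like this in Python. It is a sketch with hypothetical function names, assuming that a 404 reply means the file does not exist yet and that the OAuth token is sent as a bearer token. The opener parameter is only there so the function can be tried without network access.

```python
import json
import urllib.error
import urllib.parse
import urllib.request
from typing import Optional


def contents_url(owner: str, repo: str, path: str) -> str:
    """Build the contents API URL for a file in a repo."""
    return f"https://api.github.com/repos/{owner}/{repo}/contents/{urllib.parse.quote(path)}"


def fetch_sha(owner: str, repo: str, path: str, token: str,
              opener=urllib.request.urlopen) -> Optional[str]:
    """Return the sha of an existing file, or None if the file is not there yet."""
    req = urllib.request.Request(
        contents_url(owner, repo, path),
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
    )
    try:
        with opener(req, timeout=30) as resp:
            return json.loads(resp.read().decode("utf-8")).get("sha")
    except urllib.error.HTTPError as err:
        if err.code == 404:
            # Nothing to replace; the PUT will create the file.
            return None
        raise
```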

Now the body is created with the commit message, the committer username, the committer email, optionally the sha of the file to be replaced and finally the Base64 encoded file contents. The request body is added to the PUT request.

    PUT https://api.github.com/repos/<org or user>/<repo>/contents/<file>
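For illustration, the PUT request could be assembled like this in Python. Again this is a sketch with hypothetical names rather than the PowerShell used in the workflow. The body fields (message, committer, content, sha) follow the description above, and sha is only included when an existing file is being replaced.

```python
import json
import urllib.request
from typing import Optional


def build_put_request(
    url: str,
    token: str,
    content_b64: str,
    message: str,
    committer_name: str,
    committer_email: str,
    sha: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) the PUT that creates or updates the file."""
    body = {
        "message": message,
        "committer": {"name": committer_name, "email": committer_email},
        "content": content_b64,
    }
    if sha:
        # Required when replacing an existing file, omitted when creating one.
        body["sha"] = sha
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )


def push_file(request: urllib.request.Request) -> dict:
    """Send the request and return the parsed reply."""
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))
```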

Schedule the workflow

With the different workflows and tasks configured we can schedule our workflow to run. I've set it up to run daily at midnight.

The result

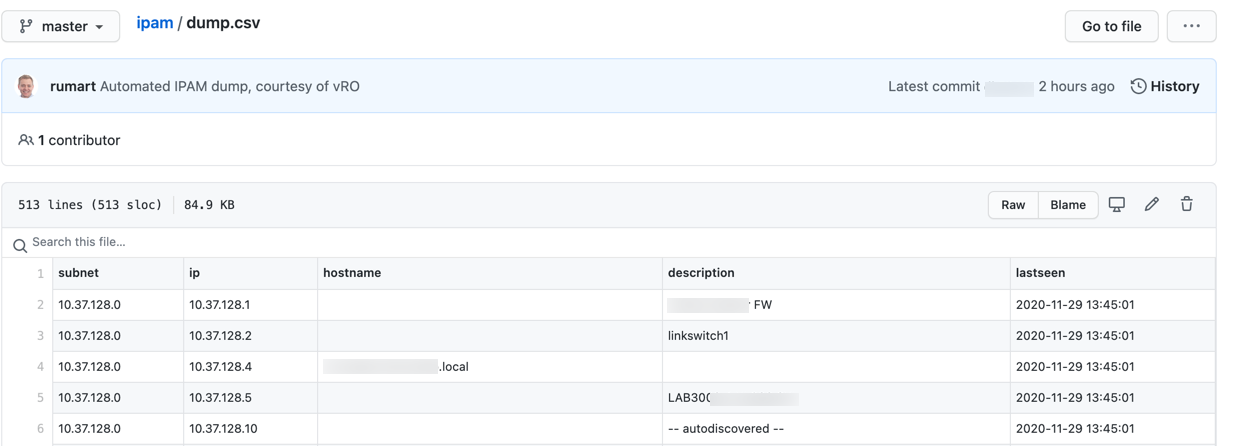

After a run of the workflow we get the data in GitHub, nicely formatted and searchable/filterable. All with the commit history available so we can go back and see the diff.

Summary

Pushing data from an IPAM solution to GitHub might not be a thing you'll use in a production environment, but this is a nice option for our lab environment. It has also allowed us to explore a few features in phpipam, GitHub and vRO that we can use in other projects.

In this GitHub repo I've uploaded the code used in the three different scriptable tasks as well as a complete PowerShell script that can be used outside of vRO.