Deploying a Nested Lab Environment with vRA - Part 3

Over the last few weeks I've been building a vRA blueprint, backed by a number of vRO workflows, that deploys a nested vSphere lab environment.

I'll go through the project in this blog series. The first part described the background for the project and covered the underlying environment and vRA configuration. In part 2 we looked at the vRA blueprints, and in this third part we will cover the vRO workflows that run the extensibility. Part 4 will summarize the status of the project, discuss a few bugs and quirks, and describe some of the future plans.

Extensibility

With the blueprint from part 2 ready to go, we can look at the extensibility, namely configuring the components and, of course, deploying our vCenter appliance.

As mentioned I'm doing the extensibility through vRO workflows running as Event subscriptions in vRA.

Some of this could probably be solved by using Software components in vRA, and it might be somewhat easier, but I work mostly with vRO so I chose that route.

In this environment there are a lot of Event subscriptions set up, some of them probably not relevant for other environments.

vRO Workflows

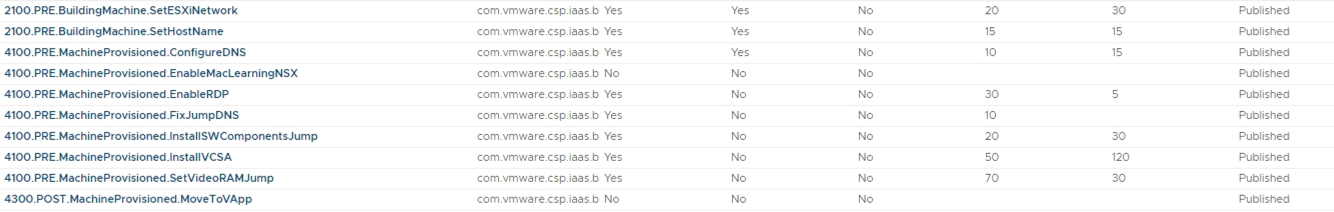

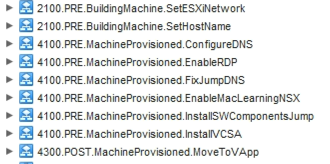

The workflows are prefixed with the lifecycle state they run in. As you can see, most of the work is done in the PRE MachineProvisioned phase. Some workflows in the same phase need to run before others; this is handled by making the subscriptions blocking and setting their priority in vRA.

I won't go into all of the details of the workflows, but I'll mention a few points to be aware of. Some of the workflows run other custom workflows, and some of them use the Guest Script Manager package for vRO to run scripts inside VMs. If you're not familiar with the package, please check it out.

All the workflows covered below are available on GitHub. Note that some of them depend on other workflows and actions; the repository also includes a vRO package which should take care of the dependencies.

Workflow SetESXiNetwork

This workflow sets the correct IP address on the ESXi hosts based on each host's index number. I use a fixed address prefix, 192.168.101.1XX, and append the host's index.

I'm using the property VirtualMachine.Cafe.Blueprint.Component.Cluster.Index from the vRA extensibility as well as my own custom property NOLab.NumESXi to calculate the correct IP. Finally, I add the updated properties to the virtualMachineAddOrUpdateProperties output parameter (a Properties object), which takes care of updating the resources.
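The workflow does this in vRO. As a rough sketch of the arithmetic only, here it is in PowerShell, assuming the cluster index is zero-based and using NOLab.NumESXi purely as a bounds check; how the actual workflow combines the two values may differ. The function name and the VirtualMachine.Network0.Address property are assumptions.

```powershell
# Sketch of the SetESXiNetwork calculation. Assumptions (not from the original
# workflow): the cluster index is zero-based, NOLab.NumESXi is only a bounds
# check, and the IP is written to VirtualMachine.Network0.Address.
function Get-NestedEsxiNetworkProperties {
    param(
        [Parameter(Mandatory)] [int] $ClusterIndex,  # VirtualMachine.Cafe.Blueprint.Component.Cluster.Index
        [Parameter(Mandatory)] [int] $NumEsxi,       # NOLab.NumESXi
        [string] $Prefix = '192.168.101.1'           # fixed prefix; a two-digit host number is appended
    )
    if ($ClusterIndex -lt 0 -or $ClusterIndex -ge $NumEsxi) {
        throw "Cluster index $ClusterIndex is outside 0..$($NumEsxi - 1)"
    }
    # Index 0 becomes host 01 (.101), index 1 becomes host 02 (.102), and so on.
    $hostNumber = $ClusterIndex + 1
    if ($hostNumber -gt 99) { throw 'The 1XX scheme only has room for 99 hosts' }
    $ip = '{0}{1:D2}' -f $Prefix, $hostNumber
    # Same idea as filling the virtualMachineAddOrUpdateProperties object in vRO.
    return @{ 'VirtualMachine.Network0.Address' = $ip }
}
```

Calling it with `-ClusterIndex 1 -NumEsxi 3` gives 192.168.101.102 for the second host.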

Workflow SetHostName

Not surprisingly, this workflow sets the correct name for the components, using the custom properties NOLab.EnvName and NOLab.NamePrefix. For ESXi hosts the index number is also used (see the previous workflow). Again, the output of the workflow is the virtualMachineAddOrUpdateProperties object.
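A minimal sketch of the naming logic, again in PowerShell. The `<EnvName>-<NamePrefix><NN>` pattern and the function name are hypothetical; the exact format the workflow produces isn't described here.

```powershell
# Hypothetical naming pattern: <NOLab.EnvName>-<NOLab.NamePrefix>[two-digit host number]
function Get-LabHostName {
    param(
        [Parameter(Mandatory)] [string] $EnvName,     # NOLab.EnvName
        [Parameter(Mandatory)] [string] $NamePrefix,  # NOLab.NamePrefix
        [Nullable[int]] $ClusterIndex = $null         # only passed for ESXi hosts (assumed zero-based)
    )
    $name = '{0}-{1}' -f $EnvName, $NamePrefix
    if ($null -ne $ClusterIndex) {
        # Same host numbering as the IP calculation: index 0 -> 01
        $name += '{0:D2}' -f ($ClusterIndex + 1)
    }
    return $name
}
```

Keeping the numbering identical to the IP calculation means host `lab1-esx01` always lines up with address .101.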

Workflow ConfigureDNS

The ConfigureDNS workflow runs a PowerShell script on the deployed DNS host to install the DNS server and then create resource records for the environment. The script is run with the help of the Guest Script Manager package mentioned previously.
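The guest script could look something like the sketch below. It assumes a Windows Server DNS host, a standalone file-backed primary zone, and one A record per lab host; the zone name, host list and function names are hypothetical, while `Install-WindowsFeature`, `Add-DnsServerPrimaryZone` and `Add-DnsServerResourceRecordA` are standard Windows Server cmdlets.

```powershell
# Turn a name -> IPv4 hashtable into a stable, sorted list of A records.
function Get-LabDnsRecords {
    param([Parameter(Mandatory)] [hashtable] $HostAddresses)
    foreach ($name in ($HostAddresses.Keys | Sort-Object)) {
        [pscustomobject]@{ Name = $name; IPv4Address = $HostAddresses[$name] }
    }
}

# Install the DNS role and create the lab zone and its records (run inside the DNS VM).
function Install-LabDns {
    param(
        [Parameter(Mandatory)] [string] $ZoneName,         # e.g. 'lab1.local' (hypothetical)
        [Parameter(Mandatory)] [hashtable] $HostAddresses
    )
    Install-WindowsFeature -Name DNS -IncludeManagementTools | Out-Null
    # Standalone (not AD-integrated) primary zone backed by a zone file.
    Add-DnsServerPrimaryZone -Name $ZoneName -ZoneFile "$ZoneName.dns"
    foreach ($record in (Get-LabDnsRecords -HostAddresses $HostAddresses)) {
        Add-DnsServerResourceRecordA -ZoneName $ZoneName -Name $record.Name -IPv4Address $record.IPv4Address
    }
}
```

Separating the record list from the cmdlet calls makes it easy to check what will be created before running anything inside the VM.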

Workflow EnableRDP

This workflow runs a separate workflow that enables RDP with PowerShell. The subscription workflow runs on both the Jump and DNS hosts.

The separate enable RDP workflow runs the following PowerShell commands:

```powershell
# Allow incoming Remote Desktop connections (0 means "do not deny")
Set-ItemProperty -Path 'HKLM:\System\CurrentControlSet\Control\Terminal Server' -Name "fDenyTSConnections" -Value 0
# Enable the built-in Windows Firewall rules for Remote Desktop
Enable-NetFirewallRule -DisplayGroup "Remote Desktop"
```

Workflow FixJumpDNS

This workflow is specific to the physical environment: it sets the DNS server address on the Jump host's primary network adapter to the correct DNS server. We have two sites in the environment with different IP subnets, but only one network profile in vRA.
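One way to express the fix, sketched in PowerShell: pick the DNS server based on the Jump host's subnet and apply it with `Set-DnsClientServerAddress`. The subnet-to-server table and its addresses are hypothetical, and treating the first connected adapter as the "primary" one is an assumption.

```powershell
# Hypothetical site table: IPv4 prefix of the Jump host -> DNS server for that site.
# The trailing dot keeps '10.10.' from matching addresses like 10.100.x.x.
$SiteDnsServers = [ordered]@{
    '10.10.' = '10.10.0.10'   # site A (hypothetical)
    '10.20.' = '10.20.0.10'   # site B (hypothetical)
}

function Get-SiteDnsServer {
    param(
        [Parameter(Mandatory)] [string] $IPAddress,
        [Parameter(Mandatory)] [System.Collections.IDictionary] $SiteTable
    )
    foreach ($prefix in $SiteTable.Keys) {
        if ($IPAddress.StartsWith($prefix)) { return $SiteTable[$prefix] }
    }
    throw "No DNS server mapped for $IPAddress"
}

function Repair-JumpDns {
    param([System.Collections.IDictionary] $SiteTable = $SiteDnsServers)
    # Assumption: the "primary" adapter is the first one that is up, by interface index.
    $adapter = Get-NetAdapter | Where-Object Status -eq 'Up' | Sort-Object ifIndex | Select-Object -First 1
    $ip = (Get-NetIPAddress -InterfaceIndex $adapter.ifIndex -AddressFamily IPv4 | Select-Object -First 1).IPAddress
    $dns = Get-SiteDnsServer -IPAddress $ip -SiteTable $SiteTable
    Set-DnsClientServerAddress -InterfaceIndex $adapter.ifIndex -ServerAddresses $dns
}
```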

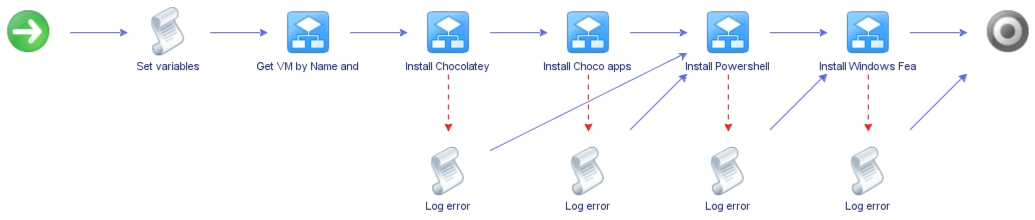

Workflow InstallSWComponentsJump

In this workflow I'm running several PowerShell scripts on the Jump host to download and install some components.

First we download Chocolatey and use it to install tools like VS Code and PuTTY. Then we install the PowerCLI PowerShell modules, and finally we enable the DNS RSAT feature so we can manage the DNS server from the Jump host.
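The three steps could be sketched as below. The Chocolatey bootstrap is Chocolatey's documented install command and `RSAT-DNS-Server` is the Windows Server feature name for the DNS management tools; the package list and function names are illustrative assumptions.

```powershell
# Illustrative package list (Chocolatey package IDs).
$ChocoPackages = @('vscode', 'putty')

function Get-ChocoInstallArguments {
    param([Parameter(Mandatory)] [string[]] $Packages)
    # 'choco install <pkg> <pkg> -y' installs several packages without prompting.
    return @('install') + $Packages + @('-y')
}

function Install-JumpHostComponents {
    param([string[]] $Packages = $ChocoPackages)
    # 1. Install Chocolatey with its documented bootstrap script (needs internet access).
    Set-ExecutionPolicy Bypass -Scope Process -Force
    [System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072
    Invoke-Expression ((New-Object System.Net.WebClient).DownloadString('https://community.chocolatey.org/install.ps1'))
    # 2. Install the desktop tools with Chocolatey.
    $chocoArgs = Get-ChocoInstallArguments -Packages $Packages
    & choco @chocoArgs
    # 3. Install PowerCLI from the PowerShell Gallery for all users.
    Install-Module -Name VMware.PowerCLI -Scope AllUsers -Force
    # 4. Add the DNS Server management tools so the lab DNS can be managed from here.
    Install-WindowsFeature -Name RSAT-DNS-Server
}
```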

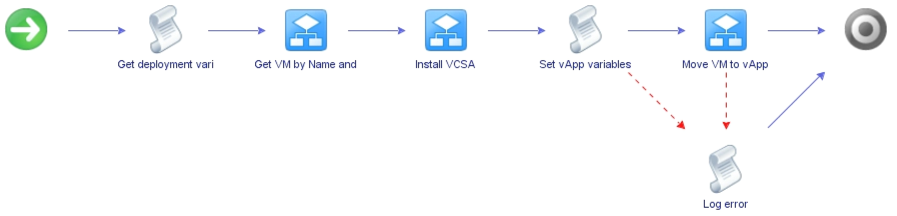

Workflow InstallVCSA

Now we're ready to deploy the vCenter server appliance. This workflow runs on the Jump host and needs to run after the previous ones.

The workflow runs a PowerShell script on the Jump host that deploys the VCSA using the CLI installer. It pulls the VCSA ISO from a Windows share on a VM running in the "physical environment".

The script is based on William Lam's work on automated lab deployments. I'm only using the parts of his code that create the JSON needed for the unattended deployment and then run the installer.
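In the same spirit, the sketch below takes the installer's own JSON template, fills in the deployment-specific values, and runs `vcsa-deploy`. The field names follow the `new_vcsa` layout of the 6.7-era `embedded_vCSA_on_VC.json` template, and the settings keys and function names are hypothetical; check both the template fields and the `vcsa-deploy` flags against your VCSA version.

```powershell
# Fill in the installer's template (an object from ConvertFrom-Json).
# Assumption: the template uses the 6.7-style 'new_vcsa' layout; older templates differ.
function Set-VcsaDeployConfig {
    param(
        [Parameter(Mandatory)] $Template,
        [Parameter(Mandatory)] [hashtable] $Settings   # hypothetical keys
    )
    $Template.new_vcsa.vc.hostname         = $Settings.TargetVCenter   # vCenter the VCSA is deployed onto
    $Template.new_vcsa.vc.username         = $Settings.TargetUser
    $Template.new_vcsa.vc.password         = $Settings.TargetPassword
    $Template.new_vcsa.appliance.name      = $Settings.ApplianceName
    $Template.new_vcsa.network.ip          = $Settings.IP
    $Template.new_vcsa.network.dns_servers = @($Settings.DnsServer)
    $Template.new_vcsa.network.system_name = $Settings.Fqdn
    $Template.new_vcsa.os.password         = $Settings.RootPassword
    $Template.new_vcsa.sso.password        = $Settings.SsoPassword
    return $Template
}

# Write the JSON and run the CLI installer from the mounted ISO.
function Invoke-VcsaDeploy {
    param(
        [Parameter(Mandatory)] [string] $InstallerRoot,  # root of the mounted VCSA ISO
        [Parameter(Mandatory)] $Config
    )
    $jsonPath = Join-Path $env:TEMP 'vcsa-deploy.json'
    # -Depth matters: the default depth (2) would turn the deeper sections into strings.
    $Config | ConvertTo-Json -Depth 10 | Set-Content -Path $jsonPath
    $deploy = Join-Path $InstallerRoot 'vcsa-cli-installer\win32\vcsa-deploy.exe'
    & $deploy install --accept-eula --acknowledge-ceip --no-ssl-certificate-verification $jsonPath
}
```

Starting from the shipped template rather than building the JSON from scratch keeps any fields you don't touch (such as the template version) valid for that installer.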

I'm using the "standard" deployment, which deploys the VCSA outside of the nested lab. I will look into deploying it inside the nested environment at some point.

Again, I'm using the Guest Script Manager workflows to run the script on the Jump host.

The script also does an initial configuration of the newly provisioned vCenter. I'm thinking of moving this out of the VCSA workflow, as I want to add more to it later on. For now it uses PowerCLI to connect to the vCenter and create a datacenter, and, if specified in the vRA request, create a cluster and add the provisioned ESXi hosts. I made the cluster step optional as I suspect there will be use cases for working with a completely clean vCenter.
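The initial configuration boils down to a handful of PowerCLI cmdlets. Here is a minimal sketch, assuming credentials arrive as PSCredential objects and that the cluster step is skipped when no cluster name is given; the function and parameter names are hypothetical.

```powershell
# Initial vCenter setup: datacenter always, cluster plus ESXi hosts only on request.
function Initialize-LabVCenter {
    param(
        [Parameter(Mandatory)] [string] $VCenter,
        [Parameter(Mandatory)] [pscredential] $Credential,
        [Parameter(Mandatory)] [string] $DatacenterName,
        [string] $ClusterName,              # leave empty for a clean vCenter without a cluster
        [string[]] $EsxiHosts = @(),
        [pscredential] $EsxiCredential
    )
    $vc = Connect-VIServer -Server $VCenter -Credential $Credential
    try {
        # The datacenter goes into the root folder of the new vCenter.
        $dc = New-Datacenter -Location (Get-Folder -NoRecursion -Server $vc) -Name $DatacenterName -Server $vc
        if ($ClusterName) {
            $cluster = New-Cluster -Location $dc -Name $ClusterName -Server $vc
            foreach ($esxi in $EsxiHosts) {
                # -Force adds the host even if its SSL certificate can't be verified (self-signed nested hosts).
                Add-VMHost -Name $esxi -Location $cluster -Credential $EsxiCredential -Force -Server $vc | Out-Null
            }
        }
    }
    finally {
        Disconnect-VIServer -Server $vc -Confirm:$false
    }
}
```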

Workflow EnableMacLearningNSX

This workflow changes the MAC learning profile on the NSX-T logical switch to allow VM traffic in the nested environment. This is done by running a script that uses the NSX-T PowerShell module (part of PowerCLI).

For more information about the script check this post

Workflow MoveToVApp

This is currently the final workflow. It moves all of the deployed resources in the main environment into the provisioned vApp. This is done purely for practical reasons, but there might be some use cases for it later on.
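The workflow does this in vRO, but for comparison the same move can be sketched with PowerCLI, assuming an existing `Connect-VIServer` session and known vApp and VM names (the function name is hypothetical).

```powershell
# Move the lab VMs from the main environment into the provisioned vApp.
function Move-LabVMsToVApp {
    param(
        [Parameter(Mandatory)] [string] $VAppName,
        [Parameter(Mandatory)] [string[]] $VMNames
    )
    $vapp = Get-VApp -Name $VAppName
    foreach ($name in $VMNames) {
        # A vApp is a valid Move-VM destination, like a folder, host or resource pool.
        Move-VM -VM (Get-VM -Name $name) -Destination $vapp | Out-Null
    }
}
```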

Summary

This post discussed how the actual magic is built. The networking parts are handled by the NSX-T integration, which we configured in the first part of this series. Most of the configuration and extensibility is done through vRO, but again, this could also be done with Software components in vRA.

As I've described, I use the Guest Script Manager package a lot. Although the package works great and simplifies running scripts considerably, this is something I'm really hoping will be provided out of the box in the 8.1 release of vRA/vRO.

In the next part of this series we will cover a few quirks that are good to be aware of, as well as the future plans for the project.

Thanks for reading!