CKA Study notes - Logging

Continuing with my Certified Kubernetes Administrator exam preparations, I'm now going to take a look at the Troubleshooting objective. I've split this into three posts: Application Monitoring, Logging (this post) and Troubleshooting.

The Troubleshooting objective counts for 30% of the exam, which by weight makes it the most important objective, so be sure to spend some time studying it. As always, the Kubernetes documentation is the place to go, since it is available during the exam.

Note #1: I'm using the documentation for version 1.19 in my references below, as this is the version used in the current (January 2021) CKA exam. Please check the version applicable to your use case and/or environment.

Note #2: This is a post covering my study notes preparing for the CKA exam and reflects my understanding of the topic, and what I have focused on during my preparations.

Logging

Kubernetes Documentation reference

Logging in Kubernetes can be divided into several areas: system logs, node logs and Pod logs. Individual containerized applications might also have their own logging mechanisms outside of the cluster. I'm not going into a lot of detail here; hopefully it'll be enough to prepare for the CKA exam and, more importantly, to understand the concepts.

Cluster logs

Kubernetes Documentation reference

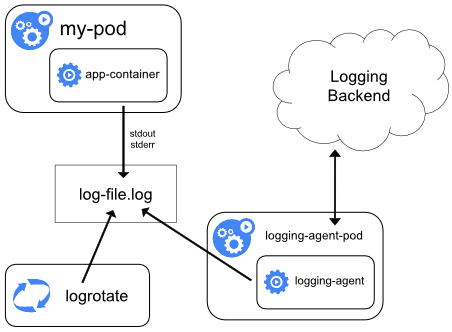

Kubernetes does not provide a native cluster-level logging solution. The recommended way of handling logs at the cluster level is a node-level logging agent that pushes the logs to a logging backend. Two optional logging agents are packaged with the Kubernetes release: Stackdriver Logging (for GCP) and Elasticsearch. Both use fluentd as the agent on the node.

The logging agent should be deployed as a DaemonSet, which ensures that a copy of the agent Pod runs on each node.
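A node-level agent deployed as a DaemonSet could look roughly like the manifest below. This is a minimal sketch, not a production setup: the name `log-agent`, the `kube-system` namespace and the fluentd image tag are my own assumptions, and a real agent also needs backend configuration (and usually RBAC to look up Pod metadata). The snippet only writes the manifest to a file so it can be reviewed before applying.

```shell
#!/usr/bin/env bash
# Write a minimal logging-agent DaemonSet manifest to a file.
# Assumptions: the name "log-agent", the namespace and the image tag
# are illustrative only; check your agent's documentation.
cat > log-agent-daemonset.yaml <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: log-agent
  namespace: kube-system
  labels:
    app: log-agent
spec:
  selector:
    matchLabels:
      app: log-agent
  template:
    metadata:
      labels:
        app: log-agent
    spec:
      tolerations:
      # Also run on control plane nodes (1.19-era taint key).
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: fluentd
        # Assumed image/tag for a fluentd agent shipping to Elasticsearch.
        image: fluent/fluentd-kubernetes-daemonset:v1-debian-elasticsearch
        volumeMounts:
        # Node log directory, including /var/log/containers and /var/log/pods.
        - name: varlog
          mountPath: /var/log
        # Only needed with the Docker runtime, where the log symlinks
        # resolve to files under this directory.
        - name: dockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: dockercontainers
        hostPath:
          path: /var/lib/docker/containers
EOF
echo "Wrote log-agent-daemonset.yaml"
```

After reviewing it, `kubectl apply -f log-agent-daemonset.yaml` creates the DaemonSet, and `kubectl get daemonset log-agent -n kube-system` should report one desired Pod per eligible node.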

Pod/application logs

Kubernetes Documentation reference

Logs from a Pod can be examined with the kubectl logs command, which shows the stdout and stderr output of a container in that Pod. The output of each container is written to a log file on the node, and these files can be found (as symlinks) in the /var/log/containers directory.

```shell
kubectl logs <pod-name>
```

We can also specify a container in the Pod with the -c <container-name> flag to retrieve logs from that container only. By default, if a container restarts, the kubelet keeps one terminated container with its logs, so by adding the --previous flag we can get the logs of a container that crashed.

Another possibility is to stream logs from a container in a pod with the kubectl logs -f -c <container-name> <pod-name> command.
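While practising, the kubectl logs variants above can be kept as a few shell functions. This is only a sketch: the function names are hypothetical helpers of my own and the Pod and container names are arguments you pass in; only the flags themselves (-c, --previous, -f, --tail, --all-containers) come from kubectl.

```shell
#!/usr/bin/env bash
# Hypothetical practice helpers around real `kubectl logs` flags.
# Usage: container_logs <pod> <container>

# Logs of a single named container in a (multi-container) Pod.
container_logs() {
  kubectl logs "$1" -c "$2"
}

# Logs of the previous, terminated instance of a container,
# e.g. after it crashed and was restarted.
previous_logs() {
  kubectl logs "$1" -c "$2" --previous
}

# Stream (follow) a container's logs, starting with the last 20 lines.
follow_logs() {
  kubectl logs -f --tail=20 -c "$2" "$1"
}

# Logs from every container in the Pod in one go.
all_container_logs() {
  kubectl logs "$1" --all-containers=true
}
```

For example, with a hypothetical Pod `web` and container `app`, `previous_logs web app` runs `kubectl logs web -c app --previous`.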

Pods can also mount the /var/log directory on a node and write to a file in that directory.
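A Pod writing to the node's /var/log would do so through a hostPath volume, roughly as below. This is a sketch: the Pod name `log-writer`, the busybox image and the file `/var/log/log-writer.log` are assumptions for illustration. Keep in mind that hostPath gives the Pod access to the node's filesystem, so use it with care.

```shell
#!/usr/bin/env bash
# Write a Pod manifest that mounts the node's /var/log via hostPath
# and appends a timestamp to a (hypothetical) log file every 10 seconds.
cat > log-writer-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: log-writer
spec:
  containers:
  - name: writer
    image: busybox
    command:
    - /bin/sh
    - -c
    - 'while true; do date >> /var/log/log-writer.log; sleep 10; done'
    volumeMounts:
    - name: node-varlog
      mountPath: /var/log
  volumes:
  - name: node-varlog
    hostPath:
      # The directory must already exist on the node.
      path: /var/log
      type: Directory
EOF
```

Once applied, the file shows up as /var/log/log-writer.log on the node the Pod is scheduled to. Note that kubectl logs will not show these lines, since they never reach stdout.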

Sidecar container

Kubernetes Documentation reference

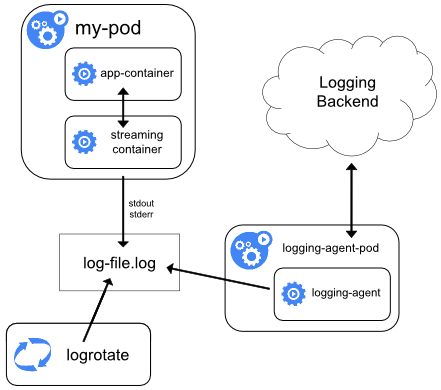

If an application in a container does not write to the stdout and stderr streams, we can add a sidecar container that reads the application's log files (typically through a shared volume) and streams them to its own stdout and stderr. The node-level logging agent can then pick those up and push them to the backend.
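The streaming sidecar can be sketched as a Pod where the application writes to a file on a shared emptyDir volume and a sidecar tails that file to its own stdout. This follows the pattern described in the documentation, but the names (`counter`, `count-log`), the busybox image and the file path are my own assumptions.

```shell
#!/usr/bin/env bash
# Write a Pod manifest with an app container logging to a file and a
# streaming sidecar that tails the file to its stdout.
cat > counter-sidecar-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: counter
spec:
  containers:
  # The "application": writes to a file instead of stdout.
  - name: count
    image: busybox
    command:
    - /bin/sh
    - -c
    - 'i=0; while true; do echo "$i: $(date)" >> /var/log/app.log; i=$((i+1)); sleep 1; done'
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  # The sidecar: streams the file to its own stdout
  # (-F keeps retrying until the file exists).
  - name: count-log
    image: busybox
    command:
    - /bin/sh
    - -c
    - 'tail -n+1 -F /var/log/app.log'
    volumeMounts:
    - name: varlog
      mountPath: /var/log
  volumes:
  # Shared between both containers for the lifetime of the Pod.
  - name: varlog
    emptyDir: {}
EOF
```

After applying it, `kubectl logs counter -c count-log` shows the application's lines, and a node-level agent collects them like any other container output.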

System and Node logs

Kubernetes Documentation reference

Kubernetes has its own logging library, klog, which generates the log messages for the Kubernetes system components.

klog is a fork of glog, the Go logging library.

The node logs to its /var/log directory. This also contains the containers directory where the container logs reside. Note that Kubernetes itself is not responsible for rotating logs, so log rotation needs to be set up (for example by the deployment tool) to keep the node from running out of disk space.
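When the kubelet uses a CRI container runtime such as containerd, one place to configure rotation of container logs is the kubelet configuration file, through its containerLogMaxSize and containerLogMaxFiles fields. The fragment below is a sketch: the values are illustrative, /var/lib/kubelet/config.yaml is where kubeadm typically writes the kubelet config (check your setup), and the kubelet must be restarted afterwards. With the Docker runtime, rotation is instead configured through Docker's logging driver options, and other files in /var/log still need something like logrotate.

```shell
#!/usr/bin/env bash
# Write a KubeletConfiguration fragment with container log rotation
# settings; merge these fields into the node's kubelet config file.
cat > kubelet-log-rotation.yaml <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
# Rotate a container's log file once it reaches this size...
containerLogMaxSize: 10Mi
# ...and keep at most this many log files per container.
containerLogMaxFiles: 5
EOF
```

After merging the fields, `sudo systemctl restart kubelet` applies them on a systemd-based node.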

The system Pods and containers, such as the scheduler and kube-proxy, also log here.
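System components running as Pods can also be read with kubectl, in the kube-system namespace. A sketch with hypothetical helper names, assuming a kubeadm-built cluster where the scheduler runs as the static Pod kube-scheduler-<node-name> and the kube-proxy Pods carry the label k8s-app=kube-proxy:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for reading system component logs on a
# kubeadm cluster (Pod naming and labels are kubeadm conventions).

# Scheduler logs; the static Pod name is suffixed with the node name.
scheduler_logs() {
  kubectl -n kube-system logs "kube-scheduler-$1"
}

# kube-proxy logs from all its Pods, selected by label.
# With a selector, kubectl shows the last 10 lines per Pod by default.
kube_proxy_logs() {
  kubectl -n kube-system logs -l k8s-app=kube-proxy
}

# The underlying log files on the node itself (run on the node).
list_node_pod_logs() {
  ls -l /var/log/pods /var/log/containers
}
```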

This is where the logging agent mentioned above comes in to collect cluster-wide logs: the agent Pod reads the log files on the node it runs on and pushes them to a logging backend.

The kubelet and the container runtime do not run in containers. On machines with systemd they write to journald; otherwise they write .log files in the /var/log directory, where the logging agent can pick them up as well.
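Reading the kubelet and container runtime logs on a node can be sketched with a hypothetical helper that uses journalctl where it is available and otherwise falls back to a file under /var/log. Both the unit names (kubelet, containerd) and the /var/log/<service>.log file name are assumptions that vary between setups.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show logs for a node service such as the
# kubelet or the container runtime (containerd, docker, ...).
node_service_logs() {
  local service="$1"
  if command -v journalctl >/dev/null 2>&1; then
    # systemd nodes: the service logs to journald.
    journalctl -u "$service" --no-pager
  else
    # Non-systemd nodes: assumed file name under /var/log.
    cat "/var/log/${service}.log"
  fi
}
```

For example, `node_service_logs kubelet` or `node_service_logs containerd`, run on the node itself.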